When this series began, we looked at how to process files in the Data Lake. We saw how to manually create folders and upload files using the Azure Portal. All well and good, but not ideal if you want to automate the process. Sounds like it’s time to see how we can use PowerShell to manage the content of our Data Lakes.

What can we do in PowerShell?

Well, pretty much everything we can do manually! Specifically, we can use PowerShell to:

- Create a new file or folder

- Upload a file or folder to the Data Lake

- Download a file or folder from the Data Lake

- Move a file or folder from one place to another in the Data Lake

- Remove a file or folder from the Data Lake

It is possible to replace existing versions of files or folders with new versions, and it’s also possible to upload a folder and all of that folder’s sub-folders too. You can also download entire folders or remove entire folders with one statement. It’s even possible to delete multiple items – no matter whether some are files or some are folders – in one statement.

What Cmdlets do I use?

The cmdlets we are interested in here are:

- Test-AdlStoreItem

- New-AzureRmDataLakeStoreItem

- Import-AzureRmDataLakeStoreItem

- Export-AzureRmDataLakeStoreItem

- Move-AzureRmDataLakeStoreItem

- Remove-AzureRmDataLakeStoreItem

We have met Test-AdlStoreItem before, it checks if a specified path exists in the target Data Lake Storage Account. The others are fairly self-explanatory from their names, let’s have a quick look at them.

New-AzureRmDataLakeStoreItem

The key parameters for this cmdlet, which allows you to create either a new file or folder, are Account, which you use to specify in which Data Lake Storage account the item should be created; Path, which is the name of the file or folder to create; Force, which will cause the item to be created even if it already exists (by firstly deleting the existing version); and Folder, which if specified creates a folder – a file is created if this parameter is omitted. Paths must start with a leading /, as this denotes the root directory.

Import-AzureRmDataLakeStoreItem

There are three key parameters here – Account, which we’ve just met; Path, which points at the local file or folder you want to upload; and Destination, which is the location in the Data Lake where you want the file or folder to be created.

It’s possible to rename a file or folder during the upload – just give it a different name in the Destination. The destination path should start with /, identifying the root directory.

There are a couple of other options that may come in handy. DiagnosticLogPath allows you to capture events during the upload process to a specified log file. Confirm displays a confirmation prompt before the upload commences, whilst Force will overwrite the target file/folder even if it already exists. Recurse is also of interest, this will copy all sub-items in a folder up to the Data Lake Store. If Recurse is not specified, only the items at the top level will be uploaded.

Export-AzureRmDataLakeStoreItem

This works in almost exactly the same way as Import-AzureRmDataLakeStoreItem, and all of the parameters specified above can be used with this cmdlet. The only difference lies with Path and Destination. Path this time points at the file or folder you wish to download from the Data Lake; Destination represents the local path you want to save the file or folder to. Again, you can change the name of the destination if you require, renaming the file or folder at the same time as downloading it.

Move-AzureRmDataLakeStoreItem

It’s common to want to move a file or folder from a “live” area to a “processed” area – a file comes in, you process it, and then you move it to the processed area. That’s exactly what this cmdlet is for. The difference with this cmdlet to the import and export cmdlets is it only affects the Data Lake – you aren’t moving items from local storage to Data Lake or vice versa, you’re moving items from one area of the Data Lake to another area of the Data Lake.

The usual Path and Destination parameters are present, as are Account, Confirm and Force. As with the other cmdlets, you can change the name of the file or folder when you move it.

Remove-AzureRmDataLakeStoreItem

This is a bit simpler than the other cmdlets. All you really need to think about here are the Account and Paths parameters, both of which are mandatory. This cmdlet can accept multiple paths, each separated by a comma. Some other options which may be useful include Force, Confirm and Recurse, all of which we’ve already met. However, there is one additional option called Clean. This is used if you are removing a folder – it cleans out the contents of the folder, but leaves the empty folder intact, ready to be repopulated whenever you are ready.

Now that we have met our cmdlets, let’s see how to use each one of them – by creating a custom PowerShell script.

The PowerShell Security Context

Open up PowerShell ISE (refer back to the last article if you need a quick primer on this – it’s the PowerShell development environment that comes with Windows). When we looked at how to create the database last time around, we discussed the PowerShell security context – we had to provide PowerShell with a context to log in with, otherwise the script would not have the appropriate permissions to execute tasks. We created a login function called Perform-AdlLogin to provide the relevant permissions. Refer back to the last article for an overview of what you need to do (if you missed it of course – and in that case, where have you been?!). Here’s the code for the function, which should be pasted at the beginning of a new PowerShell script.

# Login to the Data Lake using a context file

function Perform-AdlLogin ([string] $contextPath)

{

$contextFileExists = Test-Path $contextPath

Write-Output "Logging in"

Try

{

if ($contextFileExists)

{

# Login to Azure using saved context

Import-AzureRmContext -Path $contextPath

}

else

{

Write-Output "The context file does not exist: $contextPath"

break

}

}

Catch

{

Write-Output "Logging in from context file failed - check your context file."

break

}

}Creating Items in the Data Lake

Time to create a custom function, which will create either a new file or folder. The code for this one is pretty basic.

# Creates a new file or folder in the Data Lake

function Add-ItemToDataLake

([string] $accountName, [string] $contextPath, [string] $newItemName, [bool] $isFolder)

{

Perform-AdlLogin($contextPath)

if ($isFolder)

{

Write-Output "Creating folder..."

New-AzureRmDataLakeStoreItem -Account $accountName -Path $newItemName -Folder -Force

}

else

{

Write-Output "Creating file..."

New-AzureRmDataLakeStoreItem -Account $accountName -Path $newItemName -Force

}

}The first two parameters tell the function which Data Lake account to use, and where the security context file can be found. newItemName is obvious, and the last Boolean tells the function whether to create a file or folder. The code uses this parameter to determine whether the Folder parameter is specified or not in the call to New-AzureRmDataLakeStoreItem. Force has been specified, so if the specified file or folder already exists it will be overwritten.

The two examples below create a file and a folder, respectively. I’m pointing at my sqlservercentral Data Lake Store account.

Add-ItemToDataLake "sqlservercentral" "c:\temp\azurecontext.json" "/testfile.txt" $false Add-ItemToDataLake "sqlservercentral" "c:\temp\azurecontext.json" "/testfolder/testfolder2" $true

Interestingly, it’s possible to create a full path of folders. This command will create all three folders in the specified path if they don’t already exist.

Add-ItemToDataLake "sqlservercentral" "c:\temp\azurecontext.json" "/testfolder1/testfolder2/testfolder3" $true

Uploading Items to the Data Lake

Now on to our second custom function, which can upload either a file or a folder to the Data Lake. Here’s what the function will do:

- Login to Azure using Perform-AdlLogin

- Upload the file or folder

Here’s the listing for that method:

# Uploads the specified folder to the specified Data Lake account

function Upload-ItemToDataLake

([string] $accountName, [string] $contextPath, [string] $localSourceItem, [string] $remoteTargetItem)

{

if (([string]::IsNullOrEmpty($accountName)) -Or

([string]::IsNullOrEmpty($contextPath)) -Or

([string]::IsNullOrEmpty($localSourceItem)) -Or

([string]::IsNullOrEmpty($remoteTargetItem)))

{

Write-Output "All options are mandatory. Usage: Upload-ItemToDataLake ""accountname"" ""contextPath"" ""localSourceItem"" ""remoteTargetItem"""

}

else

{

Perform-AdlLogin($contextPath)

# Import data

Write-Output "Uploading data..."

Import-AdlStoreItem -Account $accountName -Path $localSourceItem -Destination $remoteTargetItem -Recurse -Force

Write-Output "Successfully uploaded $localSourceItem to $remoteTargetItem"

}

}

Although the code is longer, there isn’t really too much to this. It takes four parameters – accountName, contextPath, localSourceItem and remoteTargetItem. The first two tell the function which Data Lake account to use and the context file to use for security purposes. localSourceItem is the item we want to upload to the Data Lake, whilst remoteTargetItem is the path we want to upload it to.

The function checks if all values have been provided, displaying a warning message if a value is missing. If the values have been provided, a login occurs and the item is uploaded. There are no checks to see whether the item exists or not, or even whether the relevant Data Lake account exists – the Import-AdlStoreItem cmdlet does all of this kind of thing itself. Let’s assess the call to Import-AdlStoreItem a little more closely.

Import-AdlStoreItem -Account $accountName -Path $localSourceItem -Destination $remoteTargetItem -Recurse -Force

The first parameter is the Azure account. The second is Path, which identifies the item we want to upload. Destination tells the function where to upload to. Finally, the Recurse and Force parameters are provided, both of which we met earlier.

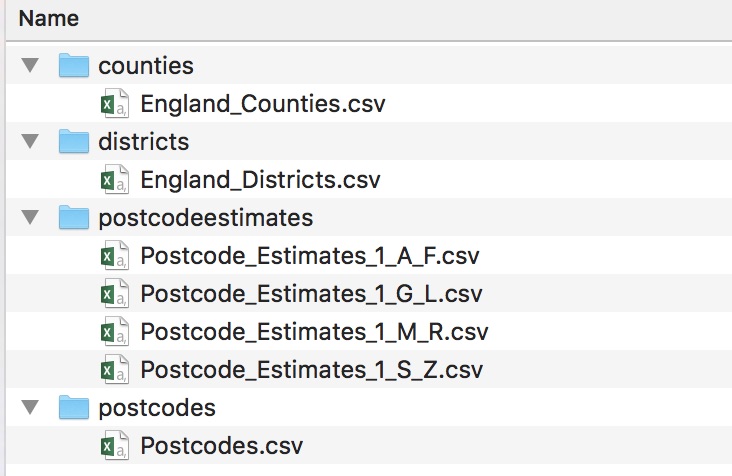

Let’s create some examples using this method. I have a folder called SampleData, located at c:\temp\SampleData. Here are the contents of that folder (which may look familiar to regular readers of this Stairway).

Using my sqlservercentral Data Lake account, I try to upload a single file:

Upload-ItemToDataLake "sqlservercentral" "c:\temp\azurecontext.json" "C:\temp\sampledata\postcodes\postcodes.csv" "/uploaded_postcodes.csv"

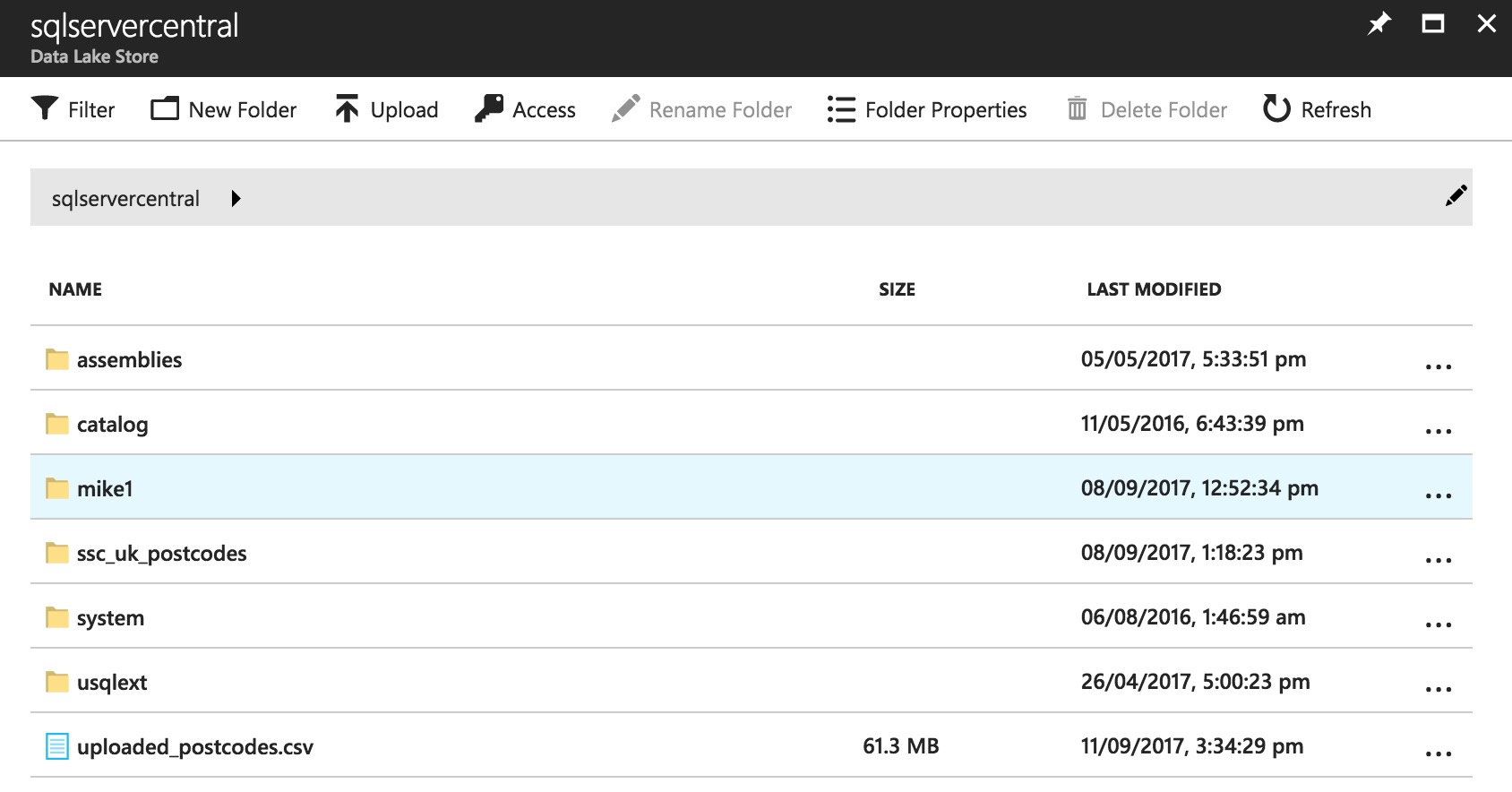

I have asked the function to upload the file to my Data Lake root directory, renaming the file to uploaded_postcodes.csv for good measure. Here are the contents of my Data Lake Store after uploading:

You can see uploaded_postcodes.csv at the bottom of the image. So far, so good! Let’s try that again, but with a local file that doesn’t exist:

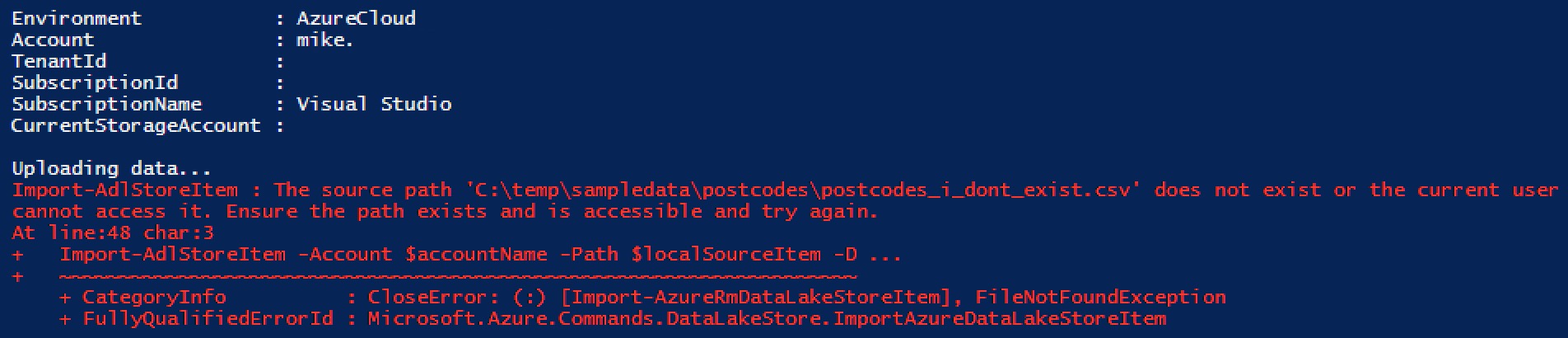

Upload-ItemToDataLake "sqlservercentral" "c:\temp\azurecontext.json" "C:\temp\sampledata\postcodes\postcodes_i_dont_exist.csv" "/uploaded_postcodes.csv"

This time we see an error – Import-AdlStoreItem is working as expected! Let’s finish up by uploading the entire SampleData folder to a folder called all_sample_data.

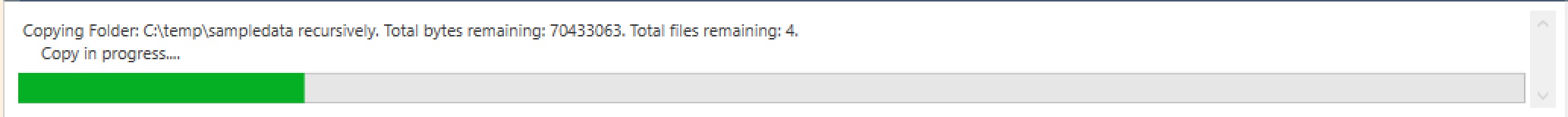

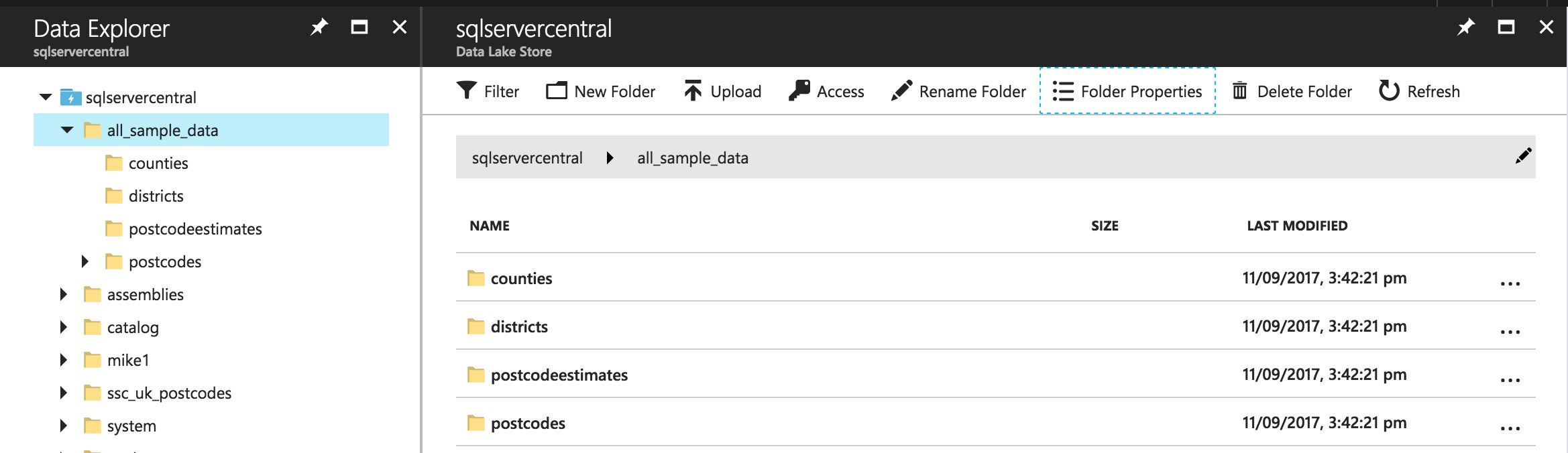

Upload-ItemToDataLake "sqlservercentral" "c:\temp\azurecontext.json" "C:\temp\sampledata" "/all_sample_data"

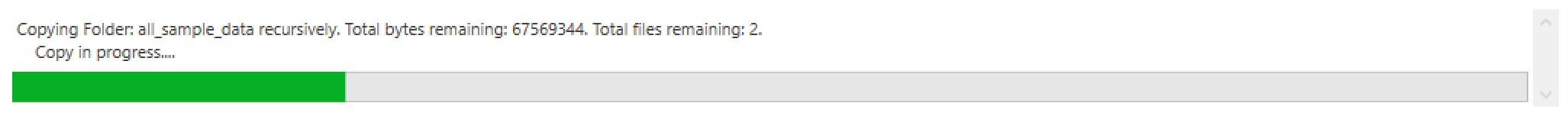

We see a nice progress bar…

And once that finishes, the folder is there in Azure. Be aware that empty folders cannot be uploaded, the folder has to contain at least one item. Use New-AzureRmDataLakeStoreItem if you want to create an empty folder.

Downloading Items from the Data Lake

Now that we’re pretty comfortable with uploading, let’s create a download function. I’ve left out the parameter validation checks for brevity’s sake.

function Download-ItemFromDataLake

([string] $accountName, [string] $contextPath, [string] $remoteSourcePath, [string] $localTargetPath)

{

Perform-AdlLogin($contextPath)

Export-AzureRmDataLakeStoreItem -Account $accountName -Path $remoteSourcePath -Destination $localTargetPath -Force -Recurse

}This is almost identical to the Upload method. Let’s give it a go by downloading the England_Districts file.

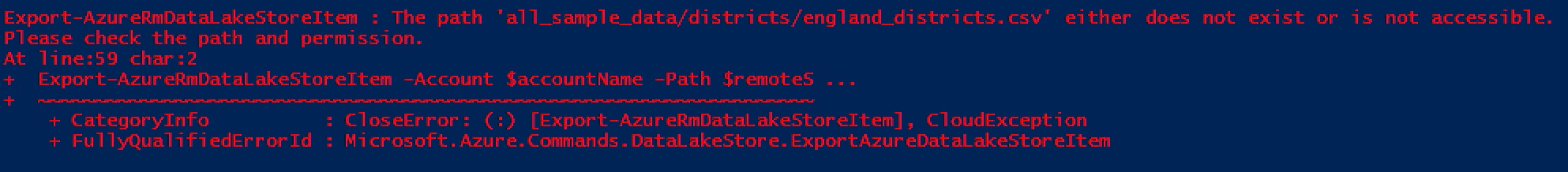

Download-ItemFromDataLake "sqlservercentral" "c:\temp\azurecontext.json" "/all_sample_data/districts/england_districts.csv" "c:\temp\downloaded_data\districts.csv"

You may be surprised to learn this fails!

The paths in Azure are case-sensitive. I’ve specified “england_districts.csv”, but the file is called “England_Districts.csv”. A quick correction:

Download-ItemFromDataLake "sqlservercentral" "c:\temp\azurecontext.json" "/all_sample_data/districts/England_Districts.csv" "c:\temp\downloaded_data\districts.csv"

And everything works as expected. Another interesting wrinkle here is the downloaded_data folder did not exist within c:\temp when I ran the above command. Download-ItemFromDataLake created the parts of the path that didn’t already exist. Very handy.

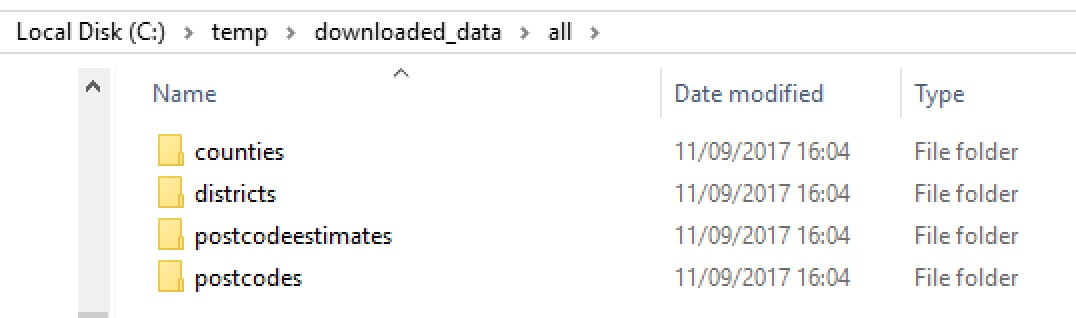

Now to download the entire all_sample_data folder, to a folder within downloaded_data, called simply all.

Download-ItemFromDataLake "sqlservercentral" "c:\temp\azurecontext.json" "/all_sample_data" "c:\temp\downloaded_data\all"

As if by magic, it pulls the entire folder down to my c: drive.

Now let’s look at how we can move data around the Data Lake!

Moving Items within the Data Lake

The code for this function is very similar to the download function we’ve just written.

function Move-DataLakeItem([string] $accountName, [string] $contextPath, [string] $sourceItemPath, [string] $targetItemPath)

{

Perform-AdlLogin($contextPath)

Move-AzureRmDataLakeStoreItem -Account $accountName -Path $sourceItemPath -Destination $targetItemPath -Force

}A simple login, followed by a call to Move-AzureRmDataLakeStoreItem. Here is a call that moves the ssc_uk_postcodes folder to a folder called postcodes. It recurses automatically, so all sub-folders are moved too.

Move-DataLakeItem "sqlservercentral" "c:\temp\azurecontext.json" "/ssc_uk_postcodes" "/postcodes"

This effectively renames the ssc_uk_postcodes folder to postcodes, the contents remain the same. Now, assume we tried to move the folder to a sub-folder of a path that doesn’t exist.

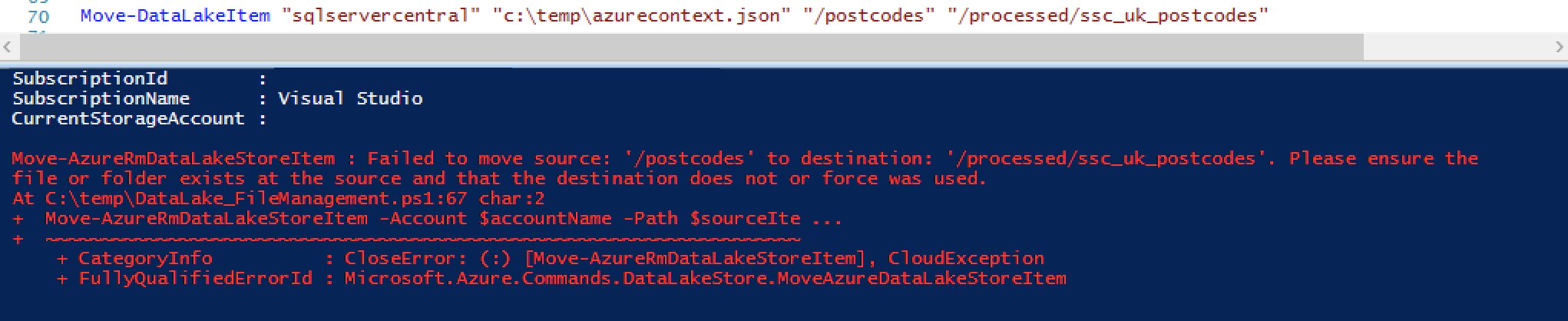

Move-DataLakeItem "sqlservercentral" "c:\temp\azurecontext.json" "/postcodes" "/processed/ssc_uk_postcodes"

In the above case, the processed folder doesn’t exist. The task fails, which is different to what we saw with the download function. The download function created the path as needed, but this function hits an error:

So, it’s important to remember that you need to create any top-level folders in advance! Once the processed folder exists, the command above will execute correctly.

Files can be processed in a similar manner to the other functions.

Removing Items from the Data Lake

The circle is almost complete. We can add, upload, download and move data. Quite often, you’ll find you want to execute the following set of commands:

- Upload some data

- Execute a script against that data

- Download the results of the script

- Delete the uploaded data files and the results file (or move the files, in which case substitute the delete call with the move function we’ve just seen)

Why would you do this? Purely to save money – Microsoft charges you for the amount of data stored in your Data Lake, so if your data is transient you may want to keep it in the lake for a limited amount of time. The costs may be fairly negligible, but still. Of course, there will be times when this model doesn’t work for you, but it’s a good pattern to use when all you need is to aggregate some data and grab the results.

So, we may have some situations where we need to delete data. I guess we’d better put together a function which allows us to do that then:

function Delete-DataLakeItem([string] $accountName, [string] $contextPath, [string] $pathToDelete)

{

Perform-AdlLogin($contextPath)

$pathExists = Test-AdlStoreItem -Account $accountName -Path $pathToDelete

if ($pathExists)

{

Remove-AzureRmDataLakeStoreItem -Account $accountName -Paths $pathToDelete -Force -Recurse

Write-Output "Deleted $pathToDelete"

}

else

{

Write-Output "The specified file or folder does not exist."

}

}A check has been added to this method, to determine whether the file or folder exists. If it does, great, we delete it. If it doesn’t a little warning pops up. This isn’t strictly necessary, as Remove-AzureRmDataLakeStoreItem would display an error anyway – but it shows the various ways we can handle things in PowerShell.

The first two parameters have been present in all of our methods, so the only additional parameter we have is pathToDelete. This command will delete the file we uploaded before, /uploaded_postcodes.csv.

Delete-DataLakeItem "sqlservercentral" "c:\temp\azurecontext.json" "/uploaded_postcodes.csv"

As we saw earlier, Azure paths are case-sensitive, so always double-check the casing of your paths. Now we can kill the all_sample_data folder, and all of its contents.

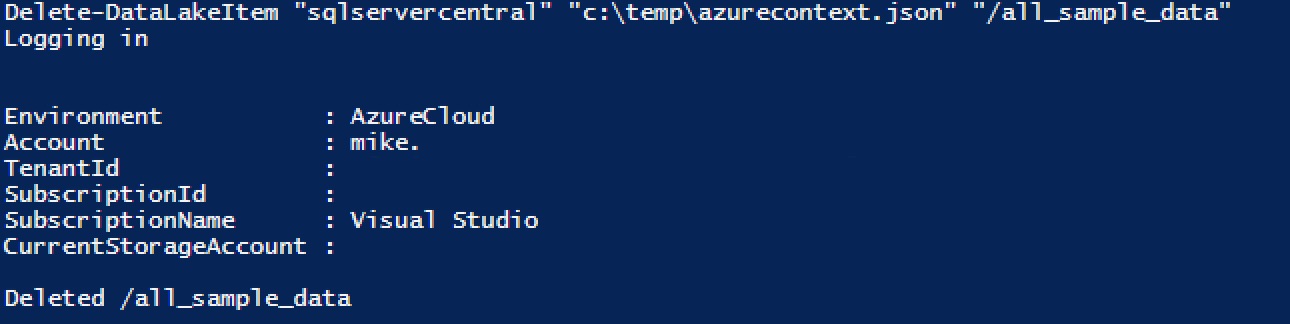

Delete-DataLakeItem "sqlservercentral" "c:\temp\azurecontext.json" "/all_sample_data"

The function does have one limitation – we have to call it for each individual item we want to delete. As I mentioned earlier, Remove-AzureRmDataLakeStoreItem can actually accept multiple paths. If we had wanted to delete both the file and folder in one foul swoop, we could have executed:

Remove-AzureRmDataLakeStoreItem -Account $accountName –Paths “/uploaded_postcodes.csv”, “/all_sample_data” -Force -Recurse

If you’re so inclined, feel free to change the function so it can accept an array of items to delete. Could be a fun little programming exercise!

Summary

Another journey through the Data Lake PowerShell landscape is complete. We’ve covered the basic tenets of what you need to manage your Data Lake via PowerShell. There are plenty of other cmdlets you can use. Add-AzureRmDataLakeStoreContent allows you to add content, such as strings, to a file in the Data Lake. There are a set of cmdlets that allow you to set up firewall rules for your Data Lake, and another set that can be used to configure permissions and access control list entries. Take some time to look at what’s available.

There are still a few more PowerShell things to cover in this series, so we’ll finish off our look at PowerShell for Data Lakes in the very next article. See you next time pardner!