Understanding and Improving Query Plans

-

April 7, 2020 at 9:05 pm

Hi everyone.

I have a process that is taking a long time to execute 12 hours down from 16 after addition of index and maintenance. There are about 180+ million records of financial info in this table (the last 4 years). Theses records cannot be archived just yet.

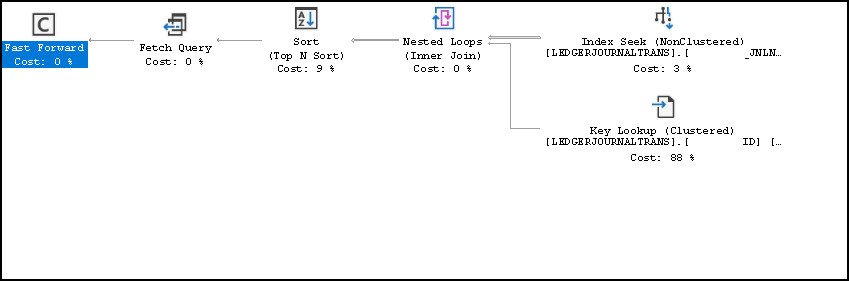

Running an invoice process is taking longer and longer. There are no blocks and less than 10% CPU utilization. Looking at the SQL execution plan it looks pretty clean, I am noticing high costs.

Can someone tell how to understand these plans and how to improve upon them?

Attached is one example.

- This topic was modified 5 years, 10 months ago by girac127. Reason: Image not attached

-

April 7, 2020 at 9:26 pm

This is almost certainly a coding problem - but to be sure we would need to see the code and the execution plan. The actual execution plan saved as a .sqlplan file.

180 million rows seems like a lot - but really isn't so large that it should take 12+ hours for a query.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

April 7, 2020 at 10:36 pm

The is a Microsoft Dynamics AX ERP system and this is custom code that runs this invoicing process. There are roughly 80-100K transactions that get processed in this run so its not just one query.

How can I save the .sqlPlan file?

-

April 8, 2020 at 7:31 pm

If I am looking at that execution plan correctly, the left-most operation is a cursor. Cursors will impact performance as they are row based operations. If you can remove that cursor, you will likely see a performance improvement.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

April 8, 2020 at 10:14 pm

I was not able to see the picture before - and since this is a cursor it is definitely a coding problem. If you can provide the code - then someone will be able to help optimize that code.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

April 14, 2020 at 1:34 pm

Without the code, it is just a guess. But reducing the 180M rows down to a smaller number before running through them will be key.

There might be an index on the Ledger that can be used. Date or Status come to mind. Might be the earliest date on the small side, or open status on the Ledger.

An Exists query is something I have used at times to tackle similar issues.

If you thought indexes got a big improvement, you might find a lot more yet to come. And when they say ‘row by agonizing row’ with a cursor, you really can feel the pain.

Viewing 6 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply