Transaction log and updates on large tables

-

April 16, 2021 at 7:15 pm

ScottPletcher wrote:The data still has to be read -- how else would SQL know that the proposed new value was the same as the value in the table itself?

It's the writes/dirty pages that get avoided.

Yes, I understand that. But I would expect the writes to increase the run time, and I didn't see any signs of that even at 200,000 rows being updated. Thanks again.

__________________________________________________

Against stupidity the gods themselves contend in vain. -- Friedrich Schiller

Stop, children, what's that sound? Everybody look what's going down. -- Stephen Stills -

April 16, 2021 at 7:34 pm

The Dixie Flatline wrote:ScottPletcher wrote:The data still has to be read -- how else would SQL know that the proposed new value was the same as the value in the table itself?

It's the writes/dirty pages that get avoided.

Yes, I understand that. But I would expect the writes to increase the run time, and I didn't see any signs of that even at 200,000 rows being updated. Thanks again.

Don't forget, the physical writes are deferred, it's just the buffer(s) that initially get modified. Since the buffers are in memory only, natch, I wouldn't expect that to take much time. It's the flush to disk that will consume the time. That's my best guess at least.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 16, 2021 at 9:27 pm

The Dixie Flatline wrote:Paul is certainly a worthwhile authority. I will study his article later. I did notice this quote, which was emphasized:

A clustered table will always produce full logging and dirty buffer pages if (any part of) the clustering key is updated.

But I also notice that his article didn't cover the use case I created above. He only tested setting a column to a constant, or setting a column to itself. It probably holds true in the case of setting a column to the value of a column from a source table, but I will use his methodology to check.

Thanks. 🙂

My guess, and this is purely a guess, is that SQL compares the value after it has converted it to its physical storage format. This would allow SQL to do a purely binary compare, which I presume would be the fastest -- and most accurate?! -- comparison method.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 16, 2021 at 9:27 pm

ScottPletcher wrote:The Dixie Flatline wrote:Paul is certainly a worthwhile authority. I will study his article later. I did notice this quote, which was emphasized:

A clustered table will always produce full logging and dirty buffer pages if (any part of) the clustering key is updated.

But I also notice that his article didn't cover the use case I created above. He only tested setting a column to a constant, or setting a column to itself. It probably holds true in the case of setting a column to the value of a column from a source table, but I will use his methodology to check.

Thanks. 🙂

My thinking, and this is purely a guess, is that SQL compares the value after it has converted it to its physical storage format. This would allow SQL to do a purely binary compare, which I presume would be the fastest -- and most accurate?! -- comparison method.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 18, 2021 at 1:27 pm

Jeff Moden wrote:ScottPletcher wrote:Jeff Moden wrote:ScottPletcher wrote:(3) SQL does that itself, so that's only a very minor consideration.

I might be misinterpreting what you're saying above but, at face value, I have to ask...

How so? Since when does T-SQL decide that a value in a row containing, for example, the letter "A" is supposed to be updated to an "A". What do you think there is in T-SQL that says "Oh... that has the same value as what I'm trying to update so I'm not going to update that particular value"?

Or do you mean something else?

No, I meant exactly that SQL generally does not log updates of a column to the same value. SQL does not log a modification to the data page, does not modify the page, and thus does not mark page(s) as dirty.

There are a some exceptions: if snapshot is enabled, then full logging and page updates are always done: yet another reason I urge you to active snapshot only if you really, really need it; updating a LOB column > 1 page, unless the update is exactly "lob_column = lob_column"; updating of a clustering key column(s) to the same value.

I believe SQL still does update the totals in sys.dm_db_index_operational_stats to reflect the "UPDATE", even though no data pages were actually modified / rewritten.

As to timing, since at least SQL 2005 (perhaps even before, although I think additional bypasses were added for 2005).

Why would SQL waste time and resources to "update" a column from 'A' to 'A' when a simple comparison could prevent that?

Thanks, Scott. I'll check it out.

Having read Paul's fine article, I have to disagree with the statement you made in Bold Red above.

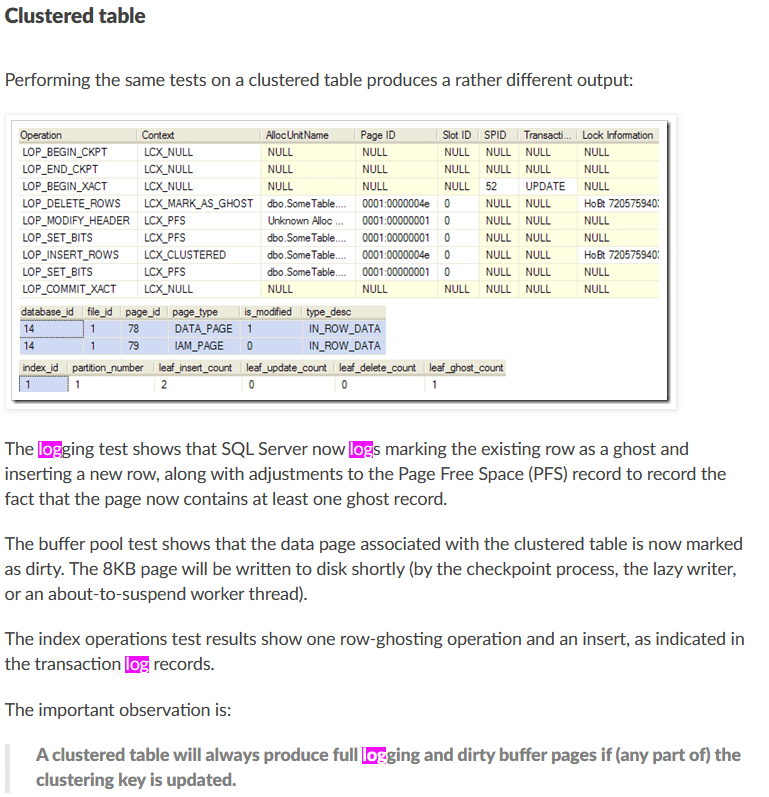

As others have posted, Paul both clearly states and clearly demonstrates with code that not only are the updates always recorded in the log when a Clustered Table is updated with identical data, it even forms ghost records as it would if the data had changed.

Here is the pertinent snippet from Paul's good article...

________________________________________________________________________________________

________________________________________________________________________________________

That also means that what you said in a previous post in response to Sergiy's post...

Sergiy wrote:3) Make sure you update only values which have actually changed.

ScottPletcher wrote:(3) SQL does that itself, so that's only a very minor consideration.

... Sergiy is still correct and that SQL does, indeed, NOT do that itself for Clustered Tables.

That will also impact performance (meaning that having identical data updates or not will be exactly the same) and, most certainly, the usage of multiple resources, both of which can usually be reduced simply by doing more surgical updates insofar as updating only those rows that suffer actual changes.

While HEAPs are a different story when it comes to logging, it still takes extra time to do the comparisons. Whether or not it saves time or not when doing huge updates of Heaps when compared to the surgical updates that Sergiy recommended needs to be tested but, the bottom line is that Clustered Tables are fully logged for UPDATEs whether the values of the data change or not.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 18, 2021 at 10:16 pm

Jeff Moden wrote:Jeff Moden wrote:ScottPletcher wrote:Jeff Moden wrote:ScottPletcher wrote:(3) SQL does that itself, so that's only a very minor consideration.

I might be misinterpreting what you're saying above but, at face value, I have to ask...

How so? Since when does T-SQL decide that a value in a row containing, for example, the letter "A" is supposed to be updated to an "A". What do you think there is in T-SQL that says "Oh... that has the same value as what I'm trying to update so I'm not going to update that particular value"?

Or do you mean something else?

No, I meant exactly that SQL generally does not log updates of a column to the same value. SQL does not log a modification to the data page, does not modify the page, and thus does not mark page(s) as dirty.

There are a some exceptions: if snapshot is enabled, then full logging and page updates are always done: yet another reason I urge you to active snapshot only if you really, really need it; updating a LOB column > 1 page, unless the update is exactly "lob_column = lob_column"; updating of a clustering key column(s) to the same value.

I believe SQL still does update the totals in sys.dm_db_index_operational_stats to reflect the "UPDATE", even though no data pages were actually modified / rewritten.

As to timing, since at least SQL 2005 (perhaps even before, although I think additional bypasses were added for 2005).

Why would SQL waste time and resources to "update" a column from 'A' to 'A' when a simple comparison could prevent that?

Thanks, Scott. I'll check it out.

Having read Paul's fine article, I have to disagree with the statement you made in Bold Red above.

As others have posted, Paul both clearly states and clearly demonstrates with code that not only are the updates always recorded in the log when a Clustered Table is updated with identical data, it even forms ghost records as it would if the data had changed.

Here is the pertinent snippet from Paul's good article...

________________________________________________________________________________________

________________________________________________________________________________________

That also means that what you said in a previous post in response to Sergiy's post...

Sergiy wrote:3) Make sure you update only values which have actually changed.

ScottPletcher wrote:(3) SQL does that itself, so that's only a very minor consideration.

... Sergiy is still correct and that SQL does, indeed, NOT do that itself for Clustered Tables.

That will also impact performance (meaning that having identical data updates or not will be exactly the same) and, most certainly, the usage of multiple resources, both of which can usually be reduced simply by doing more surgical updates insofar as updating only those rows that suffer actual changes.

While HEAPs are a different story when it comes to logging, it still takes extra time to do the comparisons. Whether or not it saves time or not when doing huge updates of Heaps when compared to the surgical updates that Sergiy recommended needs to be tested but, the bottom line is that Clustered Tables are fully logged for UPDATEs whether the values of the data change or not.

His article is phrased rather awkwardly in some places. You're missing the restriction about IF ANY PART OF THE CLUSTERING KEY IS UPDATED THEN, and only then, SQL ghosts the row, etc.. In fact, of course, there would be no need for a ghost row if you just updated non-key columns in the table, because those new values would simply replace existing values in the same, existing row, right? At least as far as I understand SQL's processing methods. I wouldn't think SQL would generate a completely new row if, for example, you just lengthened one varchar column by 1 byte.

I noted the exception about clus key column(s) still being fully logged in what I stated earlier and it's in your quote above.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 18, 2021 at 11:02 pm

Data pages make up a clustering key. No data page exists outside a clustering key.

if any data in a table with a clustering key is updated then some part of it’s clustering key is updated.

_____________

Code for TallyGenerator -

April 19, 2021 at 12:01 am

Sergiy wrote:Data pages make up a clustering key. No data page exists outside a clustering key. if any data in a table with a clustering key is updated then some part of it’s clustering key is updated.

Huh? None of that really makes any sense. The clus key is only specific column(s); all other columns are not part of the clus key, so changing them would not affect the clus key value. Thus, I'm confused about what you're trying to say here? That every UPDATE of a clus index row is fully rewritten any time any data is changed? Again, I see that as too wasteful for SQL to be doing it all the time.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 19, 2021 at 1:27 am

When you search by a value in non-indexed column what can you see in the query plan?

It's "Table scan" for HEAPs, and "Clustered Index Scan" for clustered tables.

does it make sense?

_____________

Code for TallyGenerator -

April 20, 2021 at 2:38 pm

Jeff Moden wrote:Jeff Moden wrote:ScottPletcher wrote:Jeff Moden wrote:ScottPletcher wrote:(3) SQL does that itself, so that's only a very minor consideration.

I might be misinterpreting what you're saying above but, at face value, I have to ask...

How so? Since when does T-SQL decide that a value in a row containing, for example, the letter "A" is supposed to be updated to an "A". What do you think there is in T-SQL that says "Oh... that has the same value as what I'm trying to update so I'm not going to update that particular value"?

Or do you mean something else?

No, I meant exactly that SQL generally does not log updates of a column to the same value. SQL does not log a modification to the data page, does not modify the page, and thus does not mark page(s) as dirty.

There are a some exceptions: if snapshot is enabled, then full logging and page updates are always done: yet another reason I urge you to active snapshot only if you really, really need it; updating a LOB column > 1 page, unless the update is exactly "lob_column = lob_column"; updating of a clustering key column(s) to the same value.

I believe SQL still does update the totals in sys.dm_db_index_operational_stats to reflect the "UPDATE", even though no data pages were actually modified / rewritten.

As to timing, since at least SQL 2005 (perhaps even before, although I think additional bypasses were added for 2005).

Why would SQL waste time and resources to "update" a column from 'A' to 'A' when a simple comparison could prevent that?

Thanks, Scott. I'll check it out.

Having read Paul's fine article, I have to disagree with the statement you made in Bold Red above.

As others have posted, Paul both clearly states and clearly demonstrates with code that not only are the updates always recorded in the log when a Clustered Table is updated with identical data, it even forms ghost records as it would if the data had changed.

Here is the pertinent snippet from Paul's good article...

________________________________________________________________________________________

________________________________________________________________________________________

That also means that what you said in a previous post in response to Sergiy's post...

Sergiy wrote:3) Make sure you update only values which have actually changed.

ScottPletcher wrote:(3) SQL does that itself, so that's only a very minor consideration.

... Sergiy is still correct and that SQL does, indeed, NOT do that itself for Clustered Tables.

That will also impact performance (meaning that having identical data updates or not will be exactly the same) and, most certainly, the usage of multiple resources, both of which can usually be reduced simply by doing more surgical updates insofar as updating only those rows that suffer actual changes.

While HEAPs are a different story when it comes to logging, it still takes extra time to do the comparisons. Whether or not it saves time or not when doing huge updates of Heaps when compared to the surgical updates that Sergiy recommended needs to be tested but, the bottom line is that Clustered Tables are fully logged for UPDATEs whether the values of the data change or not.

So, you're sticking with your bottom line conclusion? That SQL does no comparison of data before updating non-key columns for clus indexes? And thus that one would have to add your own WHERE conditions to prevent such unnecessary updating and logging?

There are times when adding such WHERE checks is helpful for SQL in limiting the rows to process, etc., but I don't think it ultimately is needed to prevent a row from being physically updated to identical column value(s).

Were you able to find cases where SQL updated and logged an identical value in, say, a non-key varchar or int column?

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 20, 2021 at 6:54 pm

Heh... it's not my story. It's in the link you provided. Paul proved it with code. Can you prove it to be otherwise with code?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 20, 2021 at 7:25 pm

what about fn_dblog?

I updated a table with the same value and after doing this there were two more rows returned by fn_dblog. each time I ran the (duplicate) update, two more rows.

clustered key, data being updated is not part of key.

I guess it could be writing log records without updating the actual data, I'm sure corporate systems programming has done sillier things, but I have to believe that SQL probably does not test before writing duplicate values.

Welcoming contrary viewpoints!

edit: executing the update statement following Sergei's suggested test for equality as a part of the update criteria did not produce extra rows from fn_dblog. Food for thought!

edit2: I did not really read Paul's blog in detail, I could just be duplicating things but its a fine tradition to spout off on things I haven't read about LOL

-

April 20, 2021 at 9:39 pm

x wrote:what about fn_dblog?

I updated a table with the same value and after doing this there were two more rows returned by fn_dblog. each time I ran the (duplicate) update, two more rows.

clustered key, data being updated is not part of key.

I guess it could be writing log records without updating the actual data, I'm sure corporate systems programming has done sillier things, but I have to believe that SQL probably does not test before writing duplicate values.

Welcoming contrary viewpoints!

edit: executing the update statement following Sergei's suggested test for equality as a part of the update criteria did not produce extra rows from fn_dblog. Food for thought!

edit2: I did not really read Paul's blog in detail, I could just be duplicating things but its a fine tradition to spout off on things I haven't read about LOL

Yes... duplicating things a bit. But that's totally awesome because it shows that it doesn't just happen on Paul's machine.

Heh... now... if we could just get Scott to try it. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 21, 2021 at 8:08 am

ajans_guernsey wrote:I’ve inherited a query that on first glance should be re-written. The main part of which is performing an update on a table (200m+ rows) in a while and using nested selects in a not exists. I realise this is quite vague so can provide more detail.

The query has a tendency to fill my transaction log. Db is in simple recovery mode, so stupid question time. Other than re-write the script to update in batches, is there anything I could do to minimise the logging to the transaction log, or a way of estimating the correct size of the log for this particular query.

thanks!

If you're having issues with your transaction log filling up, you could try doing the update in batches. The technique is described here (albeit for deletes, but can be used for updates too): https://sqlperformance.com/2013/03/io-subsystem/chunk-deletes

Alternatively just google "sql update in batches" to find lots of examples

I'm on LinkedIn -

April 28, 2021 at 5:55 am

This is achieved by writing a log record to the SQL transaction log file before It runs by default in a single long-running transaction, that prevents the the DML changes that are performed on the SQL Server database table.

Viewing 15 posts - 16 through 30 (of 30 total)

You must be logged in to reply to this topic. Login to reply