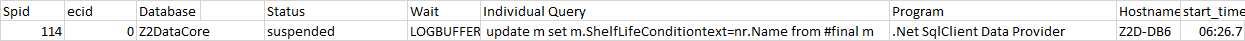

too much slow when check long wrong query i found status suspend with wait

-

March 2, 2022 at 6:14 pm

I work on sql server 2019 i run my stored procedure on sql server .

it take may be 5 hours so i try to trace why it take too much time or too long time .

so I make this query to trace issue on my procedure

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED

-- What SQL Statements Are Currently Running?

SELECT [Spid] = session_Id

, ecid

, [Database] = DB_NAME(sp.dbid)

, [User] = nt_username

, [Status] = er.status

, [Wait] = wait_type

, [Individual Query] = SUBSTRING (qt.text,

er.statement_start_offset/2,

(CASE WHEN er.statement_end_offset = -1

THEN LEN(CONVERT(NVARCHAR(MAX), qt.text)) * 2

ELSE er.statement_end_offset END -

er.statement_start_offset)/2)

,[Parent Query] = qt.text

, Program = program_name

, Hostname

, nt_domain

, start_time

FROM sys.dm_exec_requests er

INNER JOIN sys.sysprocesses sp ON er.session_id = sp.spid

CROSS APPLY sys.dm_exec_sql_text(er.sql_handle)as qt

WHERE session_Id > 50 -- Ignore system spids.

AND session_Id NOT IN (@@SPID) -- Ignore this current statement.

ORDER BY 1, 2individual query suspend as below

update m set m.ShelfLifeConditiontext=nr.Name from #final m

inner JOIN dbo.Nop_AcceptedValuesOption nr WITH(NOLOCK) ON nr.AcceptedValuesOptionID = m.ShelfLifeConditinI see query update m set as above with status suspend

so what i do to solve issue of long time process for process suspend with wait log buffer please ?

-

March 2, 2022 at 6:37 pm

Try running an "Estimated Execution plan on that". I'm thinking that the join condition is inadequate and has caused a huge and terrible CROSS JOIN.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 2, 2022 at 9:05 pm

can you may clear what you mean by

has caused a huge and terrible CROSS JOIN.

really this issue is very strange

-

March 3, 2022 at 12:02 am

Sure. Your join condition is as follows...

ON nr.AcceptedValuesOptionID = m.ShelfLifeConditin

Do you have indexes on both of those columns? Probably not especially since one of the tables is a Temp Table (although that's why we ask for ACTUAL Execution Plans when you post performance problems. See the article at the 2nd link in my signature line below for more info). So, what ends up happening is that every row in the "m" has to check every row in the "nr" table. If the "nr" table has only 10 rows and no index on the column, that means a good part of the work to be done has to be done "m" times.

There's also another issue. There's a pretty good chance that your #Final (m) table is a HEAP and there's an equally good chance that the m.ShelfLifeConditiontext column starts off as a NULL and that's why you're updating it. The problem is that adding anything to a variable width column constitutes an "ExpAnsive" update, cause each row to grow. Since your #Final table probably doesn't have a Clustered Index on it with the appropriate Fill Factor to handle the growth of the individual rows AND it's a HEAP, the rows have to move to the end of the HEAP, creating new pages to do so and having to calculate "forwarding addresses" from the current row to the new row. Of course, all of the data has to be copied to the new row at the end of the HEAP and the original has to be deleted and replaced by the forwarding address.

There are a few ways to fix this... of course this is all based on guesses, especially about the column datatypes and the size of the two tables. I'm sorry to say it but your posts have been making some serious sucking sounds because you never seem to provide enough data for anyone to help you and you don't answer clarification questions very well, if at all. If you haven't done so and with the number of questions you've been asking, YOU REALLY NEED TO REED AND HEED the articles at the first two links in my signature line below or your going to have people start ignoring your posts. Seriously... you need to provide more and the right kind of info in the future.

Anyway, here are a couple of possibilities based on that total lack of info is just got done ranting about...

- Add an appropriate Clustered Index to the #Final Table with a properly sized Fill Factor, all of which takes extra memory AND then you have to remember to do Minimal Logging during the inserts to that you don't blow out the log file.

- Instead of giving that column a variable width, give it fixed width of the largest piece of information in the dbo.Nop_AcceptedValuesOption (nr ) table so that inserting the join data into it doesn't cause the expansion. It IS, after all, just a temporary table. That will also cost extra memory and extra work because if you need to insert the data from the #Final table into another table, you have to remember to trim the trailing blanks before you do.

- A 3rd way, ant his might be the best way, in this case, is to leave the column as a variable width column but add a DEFAULT equal to the widest with that you expect to update the column with. For example, if the widest width is going to be 30 characters, then use SPACE(30) as the DEFAULT. Anything that goes into the column after that will make the row shrink a bit or stay the same size. As with the other two methods, it does take some extra memory initially BUT it won't take much more that what the final size would be to begin with, figuring out the size of the default is easy, it will solve ALL of the "ExpAnsive" Update issues including not having any rows needing to be moved or deleted, and there's nothing special you have to do if you insert that data into another table later. If you don't populate all the rows in that column and you want NULLs instead of spaces, then you just need to use a NULLIF(thecolumnnamehere,' ') when you do the final insert to another table.

And, seriously.... I know there's a bit of a language gap between some of us and you but the language of SQL is common between us. You really need to provide more information in the form of CREATE TABLE statements, sometimes test data as "Readily Consumable Data", and the occasional Actual Execution Plan. Like I said, what you've posted on this thread is virtually useless and all I'm doing is guess based on the small query you posted without even including rowcounts or row width.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 4, 2022 at 10:04 am

This was removed by the editor as SPAM

-

March 4, 2022 at 6:04 pm

"...I work on sql server 2019 ..."

Post in the SQL 2019 area next time. It's more visible, and others who are familiar with 2019 will see it.

-

March 4, 2022 at 7:17 pm

AS Jeff emphasied in his post, look at your indexes on both of your tables (even the temp table). It is worth re-emphasising.

I would also look into how the table #final is created. Again indexes, any that could be added ?

----------------------------------------------------

Viewing 7 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply