STUFF Function use

-

February 5, 2020 at 12:44 pm

Hello DB people

I've this table TableA that have these fields: [intIdEntidad],[intIdEjercicio],[idTipoGrupoCons]. The tableA look like for idTipoGrupoCons = 16

Result 1

idTipoGrupoCons intIdEntidad intIdEjercicio

16 50 7

16 45 4

16 45 2

I'm trying to use STUFF function to show the column intIdEjercicio separated by coma, this is query I'm using

SELECT DISTINCT o.idTipoGrupoCons, o.intIdEntidad, ejercicios= STUFF((

SELECT ', ' + CONVERT(VARCHAR,a.intIdEjercicio)

FROM dbo.[TableA ] AS a

WHERE a.idTipoGrupoCons = 16

FOR XML PATH, TYPE).value(N'.[1]', N'varchar(max)'), 1, 2, '')

FROM [TableA ] AS o

JOIN TableB p On O.intIdEntidad = p.intIdEntidad

WHERE o.idTipoGrupoCons = 16

The result that obtain is this:

idTipoGrupoCons intIdEntidad ejercicios

16 45 7, 4, 2

16 50 7, 4, 2

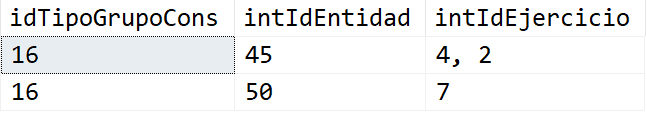

The above result isn't that I nedd, because the result It's must be like this, its' means like query in Result 1(above)

dTipoGrupoCons intIdEntidad ejercicios

16 45 4, 2

16 50 7

I supouse that the problem is that I need to add a subquery to or a function into STUFF or in the outer WHERE to add condition to intIdEntidad each time to call STUFF function.

I've read about use of CROSS APPLY and perhaps it can used to solved the problem

I expect to explain my problem correct Thanks in advanced

-

February 5, 2020 at 2:04 pm

Please help us help you... in the future, please post readily consumable data. Please Read'n'Heed the article at the first link for how to help us help you more quickly and easily and why it helps.

The rest of your post is great because it shows what you've tried and what the desired result should be.

Here's another way to post readily consumable data...

--===== Create the readily consumable test data

CREATE TABLE #MyHead

(

idTipoGrupoCons INT NOT NULL

,intIdEntidad INT NOT NULL

,intIdEjercicio INT NOT NULL

)

;

INSERT INTO #MyHead

(idTipoGrupoCons,intIdEntidad,intIdEjercicio)

VALUES (16,50,7)

,(16,45,4)

,(16,45,2)

;You were pretty close with your code but, instead of using DISTINCT, you actually do need to use a GROUP BY. Here's code that solves the problem. Note that STUFF only gets rid of the leading delimiter that's formed. The "FOR XML PATH concatenation" is what actually does the heavy lifting.

--===== Solve the problem with XML PATH to concatenate the data with commas and spaces.

-- The only thing STUFF does is it gets rid of the first comma and space.

SELECT idTipoGrupoCons

,intIdEntidad

,intIdEjercicio = STUFF(

(SELECT ', '+CONVERT(VARCHAR(10),intIdEjercicio)

FROM #MyHead t2

WHERE t2.idTipoGrupoCons = t1.idTipoGrupoCons

AND t2.intIdEntidad = t1.intIdEntidad

FOR XML PATH ('')

)

,1,2,'')

FROM #MyHead t1

GROUP BY idTipoGrupoCons,intIdEntidad

;That results in the following:

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 5, 2020 at 2:18 pm

with grupo_cte(idTipoGrupoCons, intIdEntidad, intIdEjercicio) as (

select 16, 50, 7

union all

select 16, 45, 4

union all

select 16, 45, 2)

SELECT

o.idTipoGrupoCons,

o.intIdEntidad,

stuff((select ', ' + convert(varchar,a.intidejercicio)

from grupo_cte a

where

a.idtipogrupocons = o.idtipogrupocons

and a.intidentidad=o.intidentidad

for xml path, type).value(N'.[1]', N'varchar(max)'), 1, 2, '') Ejercicio

FROM

grupo_cte o

group by

o.idTipoGrupoCons,

o.intIdEntidad;- This reply was modified 6 years ago by Steve Collins. Reason: type

Aus dem Paradies, das Cantor uns geschaffen, soll uns niemand vertreiben können

-

February 5, 2020 at 2:24 pm

Ha it's the exact same query.

Aus dem Paradies, das Cantor uns geschaffen, soll uns niemand vertreiben können

-

February 5, 2020 at 2:32 pm

Thaks you very much for both scdecade and Jeff Moden, I aprecciate very much your help.

This is exactly that a need . In a future I'll document better my posts

Greetings

Madrazo.

-

February 5, 2020 at 7:39 pm

Steve Collins wrote:Ha it's the exact same query.

I left out "TYPE" because it was guaranteed to be integer digits and "TYPE" makes things a bit slower over the long haul but, yep, the same other wise. Proof that we both had a good idea 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 6 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply