SSIS task that runs a PowerQuery

-

I already have a PowerQuery that reads and parses a table from a PDF file, cleans it up and then lands it in PowerBI, but I'd like to make that land in my database. (Because who wants to rerun a bunch of PowerQueries over and over again when it's totally unnecessary?)

I'm reading this article, but getting nowhere:

Power Query Source - SQL Server Integration Services (SSIS) | Microsoft Learn

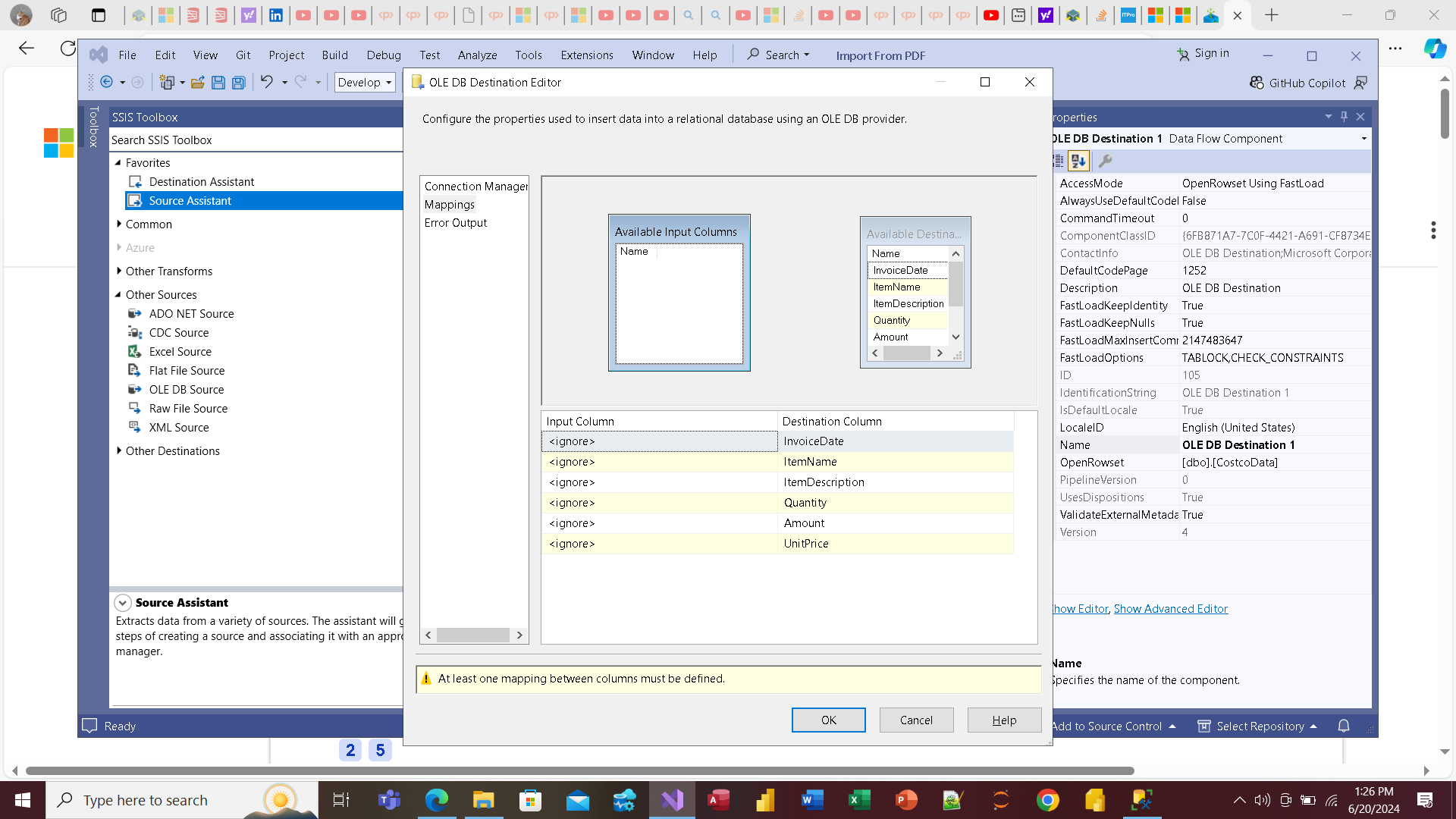

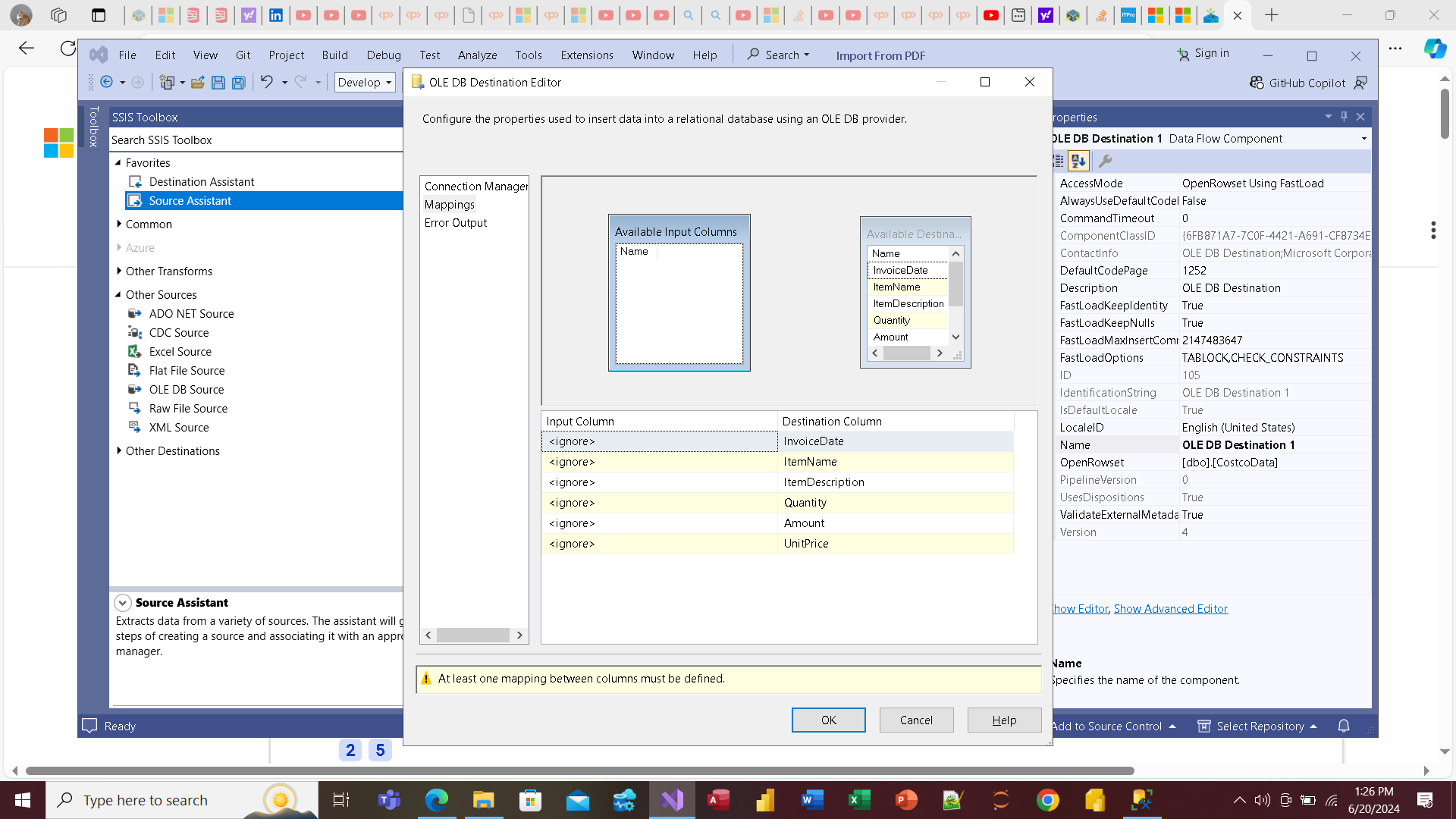

When I try to map the columns from the PowerQuery source to my table destination in SQL Server, none of the columns in the PowerQuery source show up. (see the screenshot below).

I decided I must be missing something, so for grins, I had SSIS read an Excel file and report on the number of rows. Just to make sure. And it worked.

Is there some magic trick to getting a PowerQuery to run in SSIS? here's my query, if that matters:

let

Source = Pdf.Tables(File.Contents("C:\Users\User\Downloads\CostcoInvoice.pdf"), [Implementation="1.3"]),

Table001 = Source{[Id="Table001"]}[Data],

#"Promoted Headers" = Table.PromoteHeaders(Table001, [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"CARD#", Int64.Type}, {"Column2", Int64.Type}, {"WHSDATE ITEM", type text}, {"Column4", Int64.Type}, {"ITEM", type text}, {"DEPT REG# TIME TRAN# QTY AMOUNT TAXABLE REFUND REFUND", Int64.Type}, {"Column7", Int64.Type}, {"Column8", Int64.Type}, {"Column9", Int64.Type}, {"Column10", Int64.Type}, {"Column11", type number}, {"Column12", type text}, {"Column13", type text}}),

#"Merged Columns" = Table.CombineColumns(Table.TransformColumnTypes(#"Changed Type", {{"Column4", type text}}, "en-US"),{"WHSDATE ITEM", "Column4"},Combiner.CombineTextByDelimiter(" ", QuoteStyle.None),"Merged"),

#"Split Column by Delimiter" = Table.SplitColumn(#"Merged Columns", "Merged", Splitter.SplitTextByDelimiter("- ", QuoteStyle.Csv), {"Merged.1", "Merged.2"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"Merged.1", type text}, {"Merged.2", Int64.Type}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type1", each ([ITEM] <> "DESCRIPTION")),

#"Duplicated Column" = Table.DuplicateColumn(#"Filtered Rows", "Merged.2", "ITEM_"),

#"Merged Columns1" = Table.CombineColumns(Table.TransformColumnTypes(#"Duplicated Column", {{"Merged.2", type text}, {"Column9", type text}}, "en-US"),{"Merged.2", "Column9"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"ItemTxn"),

#"Cleaned Text" = Table.TransformColumns(#"Merged Columns1",{{"ItemTxn", Text.Clean, type text}}),

#"Changed Type2" = Table.TransformColumnTypes(#"Cleaned Text",{{"ItemTxn", Int64.Type}}),

#"Filled Down" = Table.FillDown(#"Changed Type2",{"ItemTxn"}),

#"Added Conditional Column" = Table.AddColumn(#"Filled Down", "ItemTxn2", each if [ItemTxn] < 5000 then null else [ItemTxn]),

#"Filled Down1" = Table.FillUp(#"Added Conditional Column",{"ItemTxn2"}),

#"Filled Down2" = Table.FillDown(#"Filled Down1",{"Column7"}),

#"Merged Columns2" = Table.CombineColumns(Table.TransformColumnTypes(#"Filled Down2", {{"Column7", type text}, {"ItemTxn2", type text}}, "en-US"),{"ItemTxn2", "Column7"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"Merged"),

#"Renamed Columns" = Table.RenameColumns(#"Merged Columns2",{{"Merged", "ItemTxn2"}}),

#"Grouped Rows" = Table.Group(#"Renamed Columns", {"ItemTxn2"}, {{"ItemTxn3", each _, type table [#"CARD#"=nullable number, Column2=nullable number, Merged.1=nullable text, ITEM=nullable text, #"DEPT REG# TIME TRAN# QTY AMOUNT TAXABLE REFUND REFUND"=nullable number, Column7=nullable number, Column8=nullable number, ItemTxn=nullable number, Column10=nullable number, Column11=nullable number, Column12=nullable text, Column13=nullable text, ItemTxn2=number]}}),

#"Added Custom1" = Table.AddColumn(#"Grouped Rows", "Custom1", each Table.Column([ItemTxn3], "ITEM")),

#"Extracted Values" = Table.TransformColumns(#"Added Custom1", {"Custom1", each Text.Combine(List.Transform(_, Text.From), " "), type text}),

#"Added Custom2" = Table.AddColumn(#"Extracted Values", "CustomDate", each Table.Column([ItemTxn3], "Merged.1")),

#"Extracted Values1" = Table.TransformColumns(#"Added Custom2", {"CustomDate", each Text.Combine(List.Transform(_, Text.From), " "), type text}),

#"Expanded ItemTxn3" = Table.ExpandTableColumn(#"Extracted Values1", "ItemTxn3", {"Column10", "Column11", "ITEM_"}, {"Column10", "Column11", "ITEM_"}),

#"Filtered Rows1" = Table.SelectRows(#"Expanded ItemTxn3", each ([ITEM_] <> null)),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows1",{"ItemTxn2"}),

#"Reordered Columns" = Table.ReorderColumns(#"Removed Columns",{"ITEM_", "Custom1", "Column10", "Column11", "CustomDate"}),

#"Renamed Columns1" = Table.RenameColumns(#"Reordered Columns",{{"Custom1", "DESCRIPTION"}, {"Column10", "QTY"}, {"Column11", "AMOUNT"}}),

#"Added Custom Column" = Table.AddColumn(#"Renamed Columns1", "Custom", each let splitCustomDate = Splitter.SplitTextByDelimiter(" ", QuoteStyle.None)([CustomDate]), splitsplitCustomDate1 = Splitter.SplitTextByDelimiter("-", QuoteStyle.None)(splitCustomDate{1}?) in Text.Combine({Text.Combine(splitsplitCustomDate1, "/"), "/", Text.Start([CustomDate], 4)}), type text),

#"Changed Type3" = Table.TransformColumnTypes(#"Added Custom Column",{{"Custom", type date}}),

#"Removed Columns1" = Table.RemoveColumns(#"Changed Type3",{"CustomDate"}),

#"Renamed Columns2" = Table.RenameColumns(#"Removed Columns1",{{"Custom", "DATE"}}),

#"Reordered Columns1" = Table.ReorderColumns(#"Renamed Columns2",{"DATE", "ITEM_", "DESCRIPTION", "QTY", "AMOUNT"})

in

#"Reordered Columns1"Basically, it's grabbing a table from the PDF file, doing some transformations on the data, and then adding it to the data model in PowerBI. Do I have to point the SSIS task somewhere to get it to read the file? (I thought PowerQuery did that and just returned a dataset or whatever. In PowerBI, it works just fine.)

I can create a SQL Server destination just fine, but when I try to use the PowerQuery(PDF) source, no column names show up. (like it's not evaluating the result of the query). When I try to exit the PowerQuery Source editor, I get this error:

The import Pdf.Tables matches no exports. Did you miss a module reference?

So I can't map the columns (the PDF file columns don't show up in SSIS. If I run the same query in PowerBI or in Excel, they show up just fine). Any idea what I'm doing wrong? What more information do you need? Screen shots? Here's one of what I see when I try to map the columns for the import.

I guess it's time to see if I copy the same PowerQuery into Excel, can I import those results into SQL Server? Or is there something I'm just not seeing here?

Thanks!

UPDATE:

Okay, I got it to work sort of. Had to resort to using Excel to run the PowerQuery on a folder source, filter for PDFs I want, and then once that's all working, Use the 64-bit Import/Export Wizard to import the Excel file into the database.

that SSIS PowerQuery source against as PDF at least doesn't seem to work. (When I try to map the columns from the table in the PDF, the columns are apparently not read, so you can't map the columns)

Just wondering... has anybody gotten the PowerQuery source to work against a PDF with tables? If so, how did you do it?

( Just another part of the Bakery puzzle... )

- This topic was modified 1 years, 8 months ago by pietlinden. Reason: more information

- This topic was modified 1 years, 8 months ago by pietlinden.

- This topic was modified 1 years, 8 months ago by pietlinden. Reason: consolidation

-

June 20, 2024 at 9:10 pm

Thanks for posting your issue and hopefully someone will answer soon.

This is an automated bump to increase visibility of your question.

-

June 21, 2024 at 1:38 am

Okay, I got it to work sort of. Had to resort to using Excel to run the PowerQuery on a folder source, filter for PDFs I want, and then once that's all working, Use the 64-bit Import/Export Wizard to import the Excel file into the database.

that SSIS PowerQuery source against as PDF at least doesn't seem to work. (When I try to map the columns from the table in the PDF, the columns are apparently not read, so you can't map the columns)

Just wondering... has anybody gotten the PowerQuery source to work against a PDF with tables? If so, how did you do it?

( Just another part of the Bakery puzzle... )

- This reply was modified 1 years, 8 months ago by pietlinden.

- This reply was modified 1 years, 8 months ago by pietlinden.

-

June 24, 2024 at 1:08 am

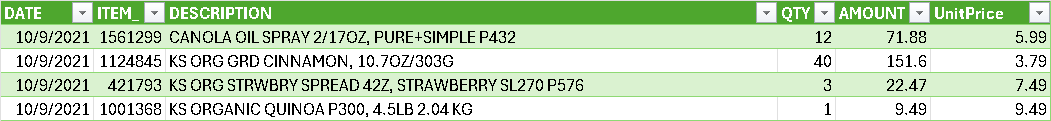

After fiddling with this for what seems like forever, I finally got most of the way there. The PowerQuery transform works, but the data type transformations do not. I inserted a RecordCount transform between the Source (PowerQuery) and destination (SQL Server), and I get a number, so something's working. but when I try to map the column types, it fails.

I saw this: Working with SSIS Data Types - Simple Talk (red-gate.com) but I must be doing something wrong, because I get type conversions all over the place. Not sure what to do... here's the definition of the destination table:

CREATE TABLE [dbo].[CostcoData](

[InvoiceDate] [date] NULL,

[ItemName] [nvarchar(10) NULL],

[ItemDescription] [nvarchar](100) NULL,

[Quantity] [tinyint] NULL,

[Amount] [decimal](5, 2) NULL,

[UnitPrice] [decimal](5, 2) NULL

) ON [PRIMARY]

GOHere's some sample data.

How do I set the column types in SSIS so that I can import the contents? I tried...

Date as Date,

Item_ as INT,

Description NVARCHAR(200),

Qty INT,

Amount DECIMAL(5,2),

UnitPrice DECIMAL(4,2)

but no joy. I get conversion errors all over the place. Is there an article that explains how the conversion types work? I guess I just don't get it yet.

Thanks!

-

June 24, 2024 at 8:00 am

Rather than setting the column types, I suggest you add a data conversion component after the source to convert from source to target datatypes. It is these converted columns which should then be mapped to your target.

-

June 29, 2024 at 3:01 pm

Just wondering... can I put the PowerQuery source inside a For Each File Loop, and then, if I make the PowerQuery a variable, basically do a REPLACE (Yes, I know, I'm mixing apples (TSQL) and oranges (PowerQuery)) to update the Source to a new PDF file on each loop?

Well, what happened when you TRIED it? You know, just have the inner loop do something simple like returning a rowcount?

Great idea!

-

July 4, 2024 at 3:33 am

I tweaked my code because I wanted to be able to call the PowerQuery function that reads the PDF once for each file... here's the code (Note: this works perfectly in both PowerBI and Excel):

// ParseCostcoReceipt

let

Source = (filePath as any) => let

Source = Pdf.Tables(File.Contents(filePath), [Implementation="1.3"]),

Table001 = Source{[Id="Table001"]}[Data],

#"Promoted Headers" = Table.PromoteHeaders(Table001, [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"CARD#", Int64.Type}, {"Column2", Int64.Type}, {"WHSDATE ITEM", type text}, {"Column4", Int64.Type}, {"ITEM", type text}, {"DEPT REG# TIME TRAN# QTY AMOUNT TAXABLE REFUND REFUND", Int64.Type}, {"Column7", Int64.Type}, {"Column8", Int64.Type}, {"Column9", Int64.Type}, {"Column10", Int64.Type}, {"Column11", type number}, {"Column12", type text}, {"Column13", type text}}),

#"Merged Columns" = Table.CombineColumns(Table.TransformColumnTypes(#"Changed Type", {{"Column4", type text}}, "en-US"),{"WHSDATE ITEM", "Column4"},Combiner.CombineTextByDelimiter(" ", QuoteStyle.None),"Merged"),

#"Split Column by Delimiter" = Table.SplitColumn(#"Merged Columns", "Merged", Splitter.SplitTextByDelimiter("- ", QuoteStyle.Csv), {"Merged.1", "Merged.2"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"Merged.1", type text}, {"Merged.2", Int64.Type}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type1", each ([ITEM] <> "DESCRIPTION")),

#"Duplicated Column" = Table.DuplicateColumn(#"Filtered Rows", "Merged.2", "ITEM_"),

#"Merged Columns1" = Table.CombineColumns(Table.TransformColumnTypes(#"Duplicated Column", {{"Merged.2", type text}, {"Column9", type text}}, "en-US"),{"Merged.2", "Column9"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"ItemTxn"),

#"Cleaned Text" = Table.TransformColumns(#"Merged Columns1",{{"ItemTxn", Text.Clean, type text}}),

#"Changed Type2" = Table.TransformColumnTypes(#"Cleaned Text",{{"ItemTxn", Int64.Type}}),

#"Filled Down" = Table.FillDown(#"Changed Type2",{"ItemTxn"}),

#"Added Conditional Column" = Table.AddColumn(#"Filled Down", "ItemTxn2", each if [ItemTxn] < 5000 then null else [ItemTxn]),

#"Filled Down1" = Table.FillUp(#"Added Conditional Column",{"ItemTxn2"}),

#"Filled Down2" = Table.FillDown(#"Filled Down1",{"Column7"}),

#"Merged Columns2" = Table.CombineColumns(Table.TransformColumnTypes(#"Filled Down2", {{"Column7", type text}, {"ItemTxn2", type text}}, "en-US"),{"ItemTxn2", "Column7"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"Merged"),

#"Renamed Columns" = Table.RenameColumns(#"Merged Columns2",{{"Merged", "ItemTxn2"}}),

#"Grouped Rows" = Table.Group(#"Renamed Columns", {"ItemTxn2"}, {{"ItemTxn3", each _, type table [#"CARD#"=nullable number, Column2=nullable number, Merged.1=nullable text, ITEM=nullable text, #"DEPT REG# TIME TRAN# QTY AMOUNT TAXABLE REFUND REFUND"=nullable number, Column7=nullable number, Column8=nullable number, ItemTxn=nullable number, Column10=nullable number, Column11=nullable number, Column12=nullable text, Column13=nullable text, ItemTxn2=number]}}),

#"Added Custom1" = Table.AddColumn(#"Grouped Rows", "Custom1", each Table.Column([ItemTxn3], "ITEM")),

#"Extracted Values" = Table.TransformColumns(#"Added Custom1", {"Custom1", each Text.Combine(List.Transform(_, Text.From), " "), type text}),

#"Added Custom2" = Table.AddColumn(#"Extracted Values", "CustomDate", each Table.Column([ItemTxn3], "Merged.1")),

#"Extracted Values1" = Table.TransformColumns(#"Added Custom2", {"CustomDate", each Text.Combine(List.Transform(_, Text.From), " "), type text}),

#"Expanded ItemTxn3" = Table.ExpandTableColumn(#"Extracted Values1", "ItemTxn3", {"Column10", "Column11", "ITEM_"}, {"Column10", "Column11", "ITEM_"}),

#"Filtered Rows1" = Table.SelectRows(#"Expanded ItemTxn3", each ([ITEM_] <> null)),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows1",{"ItemTxn2"}),

#"Reordered Columns" = Table.ReorderColumns(#"Removed Columns",{"ITEM_", "Custom1", "Column10", "Column11", "CustomDate"}),

#"Renamed Columns1" = Table.RenameColumns(#"Reordered Columns",{{"Custom1", "DESCRIPTION"}, {"Column10", "QTY"}, {"Column11", "AMOUNT"}}),

#"Added Custom Column" = Table.AddColumn(#"Renamed Columns1", "Custom", each let splitCustomDate = Splitter.SplitTextByDelimiter(" ", QuoteStyle.None)([CustomDate]), splitsplitCustomDate1 = Splitter.SplitTextByDelimiter("-", QuoteStyle.None)(splitCustomDate{1}?) in Text.Combine({Text.Combine(splitsplitCustomDate1, "/"), "/", Text.Start([CustomDate], 4)}), type text),

#"Changed Type3" = Table.TransformColumnTypes(#"Added Custom Column",{{"Custom", type date}}),

#"Removed Columns1" = Table.RemoveColumns(#"Changed Type3",{"CustomDate"}),

#"Renamed Columns2" = Table.RenameColumns(#"Removed Columns1",{{"Custom", "DATE"}}),

#"Reordered Columns1" = Table.ReorderColumns(#"Renamed Columns2",{"DATE", "ITEM_", "DESCRIPTION", "QTY", "AMOUNT"}),

#"Renamed Columns3" = Table.RenameColumns(#"Reordered Columns1",{{"DESCRIPTION", "Product Description"}, {"ITEM_", "ProductKey"}, {"DATE", "TransactionDate"}, {"QTY", "Quantity"}, {"AMOUNT", "LineTotal"}})

in

#"Renamed Columns3"

in

SourceI'm following the video here: Power Query in Integration Services - YouTube

But in my PowerQuery (as you can maybe see), I'm reading a PDF, which Powerquery can do just fine, but SSIS doesn't have a connector for it, so if I click the "Detect Data Source" button, it fails to detect anything. How do I get that part to work? Basically I'm just calling a function (albeit a PowerQuery function, and trying to pass the filename)... in something hideous like VBA it would be ReadPDFContents(byval strFilePath) and I'd call it by doing something like

strContents = ReadPDFContents("C:\PathToPDF\MyCostcoReceipt.pdf")

and maybe assigning the value to strFilePath inside a loop that reads files from a directory ... DIR(strPathToPDF & "\*.pdf")...

but when I go to the PowerQuery Source Editor, and select Columns, there are no column names listed (the box is empty).

Does this thing just not work (maybe what Koen was talking about in his article from maybe 6 years ago)? If I run this through Excel, how do I pass the filepath to the PDF back to the PowerQuery there? (Can't help but think that MSFT is all "You should do this in Fabric!!!", which seems to be code for "We don't support SSIS or SQL Server on the desktop anymore".

the whole point of making the PowerQuery a function that accepts a path to a valid PDF file was so I could imitate the Folder source in PowerQuery, and decide what to do with the file once I try to import it. (Sure, if it fails, it gets moved to the Fail folder etc).

has anyone gotten this to work? Right now I'd settle for just writing the results to SQL Server, but I can't map the columns. Since I can't do that, I don't know how creating a stored procedure or BULK INSERT will solve the problem. Or am I missing some key detail that allows this to happen? can I not pass a file type that SSIS cannot natively read to a PowerQuery source?

I guess I could in theory get Excel to do this, but then how do I pass the file path to the PDF through SSIS to Excel to do that?

- This reply was modified 1 years, 8 months ago by pietlinden.

-

July 4, 2024 at 9:15 am

The image you have posted is of an OLEDB Destination.

What does your source look like? Does it have output columns?

-

July 5, 2024 at 3:14 am

Okay.. I got things working so far... not quite what I want, but closer:

Okay... progress, of a sort.

Excel Source

to

Derived Column (unit price)

to

Data Conversion (Convert PQ and Excel Types to SQL Server Types)

to

OLEDB Destination (SQL Server).

Not perfect, but so far so good. No idea why I can't use a PowerQuery source, but it just plain borks when I try it. Bummer, because

data transforms in Powerquery are WAAAAY more flexible. What I would really like to do is to be able to pass a variable value into the PowerQuery (create an input parameter in the PowerQuery to accept a filename/path), and then use that in a ForEach File Loop, so I can import an entire folder full of PDFs that look like the one I have already processed, and all of the data will get written to my database. Well, I'd rather not have to use Excel as a crutch, but I'm not completely convinced that the PowerQuery source in SSIS really works 100%. (Is there better documentation on it somewhere?)

Got that at least kind of working. What it really should do is connect to a collection/series of PDF files, parse them one at a time, and append the contents to the database, but that's in a little while. When I tried using a PowerQuery source, I could no longer map columns the way I could with an Excel file. So I'm a bit stymied. I haven't found any documentation on the PowerQuery source "widget" that's worth a dime. (Well, 5p over there...) I'm starting to understand why this thing isn't GA or whatever.

Soooo close, and yet...

-

July 5, 2024 at 3:40 am

Not 100% sure the destination matters at this point. I can't get the PowerQuery Source to output anything at all. If I use an Excel source (that runs a PowerQuery), I can map the columns just fine (well, after a bunch of transformations)... but they do write to the database.

To test it, I made a copy of the working package and used a PowerQuery source instead of an Excel source, and all of the sudden, the columns didn't show up in the "mapper".

-

July 5, 2024 at 8:22 am

I wanted to test this, but after running the two 2022 installation files (from here), the PQ connector did not appear for me in VS2022. Can't see anything which says it isn't supported there, but I assume that's the issue.

Can you post screenshots of your PQ source, after configuring it in VS? Include the 'Columns' tab, please.

-

July 6, 2024 at 7:20 am

I think the PQ Source is a dead end. It's crazy buggy. Either that or I'm doing something incredibly stupid in SSIS, because if I use the PQ Source, SSIS routinely crashes. I'm starting to wonder if I could create a template in Excel where I can change the PDF file(s) that that the template connects to, and then call that mess from SSIS. The PowerQuery source in SSIS is a gimped version of the one in Excel, and that's being nice about it.

-

Calling Excel from SSIS is possible, though it requires Excel to be installed on the server next to where SSIS is running and I would not encourage that.

I cannot think of anything better than a two-stage solution, given the issues with the PQ source in SSIS:

- Use PQ in a loop to create a series of suitably delimited text files from your PDFs

- Import those files into SQL Server using your tool of choice.

-

July 6, 2024 at 4:15 pm

Okay, thanks for looking at this. Thought I was just missing something. Maybe I'm not. The PowerQuery source just isn't nearly as functional as I had hoped it would be. (Shame, because it would be nice to use SSIS to manage the processing of the individual source files!)

Oh well. I'll see if I can get it to work using Excel. (VBA, really Microsoft?)

- This reply was modified 1 years, 7 months ago by pietlinden.

Viewing 14 posts - 1 through 14 (of 14 total)

You must be logged in to reply to this topic. Login to reply