SSIS error

-

Hi everyone

My SSIS has an issue that I was attempting to fix. It re-processes calculations for old data which isn't really needed. Once the calculation is done then it should not be re-calc'd when new data is added. To achieve this, I added a field called "Processed_Flag". This field will track if the SP has processed the data for this day. If the field says "N" then that means that SP has not processed it yet so it should be processed. By default, all new records added will have "N". It is only once the SP has processed the data for the "N" records then the field is changed to "Y". This is the background. I am getting an error in SSIS which I am not sure how to fix. Is someone able to help?

Code:

CREATE TABLE #TEMP_TABLE

(

other fields

[TIME_STAMP] [datetime] NOT NULL,

[PROCESSED_FLAG] [nchar](1) NOT NULL,

[PROCESSED_DATE] [datetime] NULL

);

Exec ( 'BULK INSERT #TEMP_TABLE

FROM ''' +

@FILEPATH +

''' WITH (FIRSTROW = 2, FIELDTERMINATOR = '','', ROWTERMINATOR = ''\n'', MAXERRORS = 0);

UPDATE #TEMP_TABLE

SET UNDERLYING_SYMBOL = RIGHT(UNDERLYING_SYMBOL, LEN(UNDERLYING_SYMBOL) - CHARINDEX(''^'', UNDERLYING_SYMBOL))

WHERE CHARINDEX(''^'', UNDERLYING_SYMBOL) = 1

UPDATE #TEMP_TABLE

SET PROCESSED_FLAG = ''N''

INSERT INTO DBO.DataTable

SELECT *, ''' + @RECORD_ADD_DT + ''' AS TIME_STAMP

FROM #TEMP_TABLE'

)This is the new part I added to the SP:

...

[PROCESSED_FLAG] [nchar](1) NOT NULL,

[PROCESSED_DATE] [datetime] NULL

...

UPDATE #TEMP_TABLE

SETPROCESSED_FLAG = ''N''Error:

Information: 0x0 at Update Table DataTable: Message: Bulk load data conversion error (type mismatch or invalid character for the specified codepage) for row 2, column 35 (PROCESSED_FLAG).

The OLE DB provider "BULK" for linked server "(null)" reported an error. The provider did not give any information about the error.

Cannot fetch a row from OLE DB provider "BULK" for linked server "(null)".

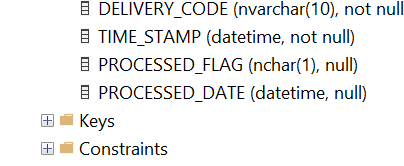

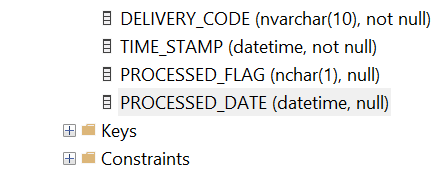

Information: 0x0 at Update Table DataTable: Rollback successfulThe destination table has the same datatype as SP:

CREATE TABLE [dbo].[DataTable](

other fields

[TIME_STAMP] [datetime] NOT NULL,

[PROCESSED_FLAG] [nchar](1) NOT NULL,

[PROCESSED_DATE] [datetime] NULL

) ON [PRIMARY]

GOThank you

-

March 11, 2024 at 7:10 am

Does you code actually look like what you posted? I ask because there is no space between the terms "SET" and "Processed_Flag" and there needs to be.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 11, 2024 at 8:20 am

error is very clear - your bulk insert is failing because of data conversion between input (your file) and the output (#TEMP_TABLE).

it has Nothing to do with that flag or its update.

-

March 11, 2024 at 2:39 pm

frederico_fonseca wrote:error is very clear - your bulk insert is failing because of data conversion between input (your file) and the output (#TEMP_TABLE).

it has Nothing to do with that flag or its update.

I revised the code a bit:

[TIME_STAMP] [datetime] NOT NULL,

[PROCESSED_FLAG] [nchar](1) NULL,

[PROCESSED_DATE] [datetime] NULL

);

Exec ( 'BULK INSERT #TEMP_TABLE

FROM ''' +

@FILEPATH +

''' WITH (FIRSTROW = 2, FIELDTERMINATOR = '','', ROWTERMINATOR = ''\n'', MAXERRORS = 0);

UPDATE #TEMP_TABLE

SET UNDERLYING_SYMBOL = RIGHT(UNDERLYING_SYMBOL, LEN(UNDERLYING_SYMBOL) - CHARINDEX(''^'', UNDERLYING_SYMBOL))

WHERE CHARINDEX(''^'', UNDERLYING_SYMBOL) = 1

UPDATE #TEMP_TABLE

SET PROCESSED_FLAG = ''N''

UPDATE #TEMP_TABLE

SET TIME_STAMP = ''' + @RECORD_ADD_DT + '''

INSERT INTO DBO.DataTable

SELECT *

FROM #TEMP_TABLE'

)this SP was working fine before I made this change. Does my SP revisions look ok?

the type is same for temp table and destination tables:

temp:

[TIME_STAMP] [datetime] NOT NULL,

[PROCESSED_FLAG] [nchar](1) NULL,

[PROCESSED_DATE] [datetime] NULLdestination:

I also looked at the source csv file. there are no changes to number of columns.

The error message is slightly different this time. Rather than saying 35 it says 36:

Information: 0x0 at Update Table DataTable: Message: Bulk load data conversion error (type mismatch or invalid character for the specified codepage) for row 2, column 36 (PROCESSED_FLAG).

The OLE DB provider "BULK" for linked server "(null)" reported an error. The provider did not give any information about the error.

Cannot fetch a row from OLE DB provider "BULK" for linked server "(null)".I am really stumped. I don't see what I did wrong.

-

I assume the source file does not contain a processed_flag or processed_date column. I just ran a quick bulk insert test and when I added an extra column to the table I got a conversion error for that column, even if I gave it a default value in the table definition.

If you are literally creating the table every time, you could alter the table and add the two columns after the insert.

-

March 11, 2024 at 4:19 pm

I'd check your variables. No sign of @FILEPATH being declared or populated anywhere in that.

What does the execute statement of the stored procedure that you are issuing look like?

-

March 11, 2024 at 4:31 pm

MarkP wrote:I'd check your variables. No sign of @FILEPATH being declared or populated anywhere in that.

What does the execute statement of the stored procedure that you are issuing look like?

it is declared earlier:

DECLARE @FILEPATH VARCHAR(200)

SET @FILEPATH = @FILENAME

DECLARE @RECORD_ADD_DT varchar(26)

SET @RECORD_ADD_DT = convert(varchar(26),@RECORD_ADD_DATE,121) -

March 11, 2024 at 4:35 pm

Next step on the debug root now we've sorted the "is it plugged in" equivalent.

Can you swap the exec for print so that you can see exactly what is executed?

That would then be easier for you to debug as a SQL statement.

-

March 11, 2024 at 4:35 pm

MarkP wrote:I'd check your variables. No sign of @FILEPATH being declared or populated anywhere in that.

What does the execute statement of the stored procedure that you are issuing look like?

Ed B wrote:I assume the source file does not contain a processed_flag or processed_date column. I just ran a quick bulk insert test and when I added an extra column to the table I got a conversion error for that column, even if I gave it a default value in the table definition.

If you are literally creating the table every time, you could alter the table and add the two columns after the insert.

Yes you are correct. those two fields do not exist in the source CSV. You brought up a good point. the field TIME_STAMP does not exist in the source CSV. it is added afterwards. The SP has been working fine with this approach.

I updated my SP to work with PROCESSED_FLAG the same way. Now, I am getting a different error message. Any suggestions on how to fix it?

Code:

Exec ( 'BULK INSERT #TEMP_TABLE

FROM ''' +

@FILEPATH +

''' WITH (FIRSTROW = 2, FIELDTERMINATOR = '','', ROWTERMINATOR = ''\n'', MAXERRORS = 0);

UPDATE#TEMP_TABLE

SETUNDERLYING_SYMBOL = RIGHT(UNDERLYING_SYMBOL, LEN(UNDERLYING_SYMBOL) - CHARINDEX(''^'', UNDERLYING_SYMBOL))

WHERECHARINDEX(''^'', UNDERLYING_SYMBOL) = 1

INSERT INTO DBO.DataTable

SELECT *, ''' + @RECORD_ADD_DT + ''' AS TIME_STAMP, ''N'' AS PROCESSED_FLAG

FROM #TEMP_TABLE'

)Error:

Column name or number of supplied values does not match table definition.

-

March 11, 2024 at 4:38 pm

That's straightforward.

The number of columns in your DataTable don't match what's in your final select statement. Using SELECT * will always leave you at risk.

-

March 11, 2024 at 4:38 pm

MarkP wrote:Next step on the debug root now we've sorted the "is it plugged in" equivalent.

Can you swap the exec for print so that you can see exactly what is executed?

That would then be easier for you to debug as a SQL statement.

I am fairly new to SS. What exactly do you mean by print?

-

March 11, 2024 at 4:53 pm

Ed B wrote:I assume the source file does not contain a processed_flag or processed_date column. I just ran a quick bulk insert test and when I added an extra column to the table I got a conversion error for that column, even if I gave it a default value in the table definition.

If you are literally creating the table every time, you could alter the table and add the two columns after the insert.

this solved the issue. thank you!

I had to re-create the columns after the bulk insert. I have another question. I had to enter a date for the processed_date otherwise the SP would fail. I would like to enter NULL as the date but I tried that and I got an error.

the destination table allows for null value:

How can I add NULL to the processed_date field?

-

March 11, 2024 at 5:27 pm

Does this work?

'INSERT INTO DBO.DataTable

SELECT *, NULL, ''N'' AS PROCESSED_FLAG

FROM #TEMP_TABLE'If you use a column list for the insert and select you can exclude processed_date and it will remain null

'INSERT INTO DBO.DataTable (col1, col2, col3 etc, processed_flag)

SELECT col1, col2, col3 etc, ''N''

FROM #TEMP_TABLE'The suggestion was made to use a @debug variable so you can view the code before executing it. If you make the entire query a variable, you can view the variable before trying to execute it. It makes debugging dynamic sql much easier. This is an example.

DECLARE @Debug INT = 1,

@SQLString NVARCHAR (MAX),

@FILEPATH VARCHAR(100) = 'F:\DATA\ThisFile.txt'

SET @SQLString =

'BULK INSERT #TEMP_TABLE

FROM ''' +

@FILEPATH +

''' WITH (FIRSTROW = 2, FIELDTERMINATOR = '','', ROWTERMINATOR = ''\n'', MAXERRORS = 0);

UPDATE#TEMP_TABLE

SETUNDERLYING_SYMBOL = RIGHT(UNDERLYING_SYMBOL, LEN(UNDERLYING_SYMBOL) - CHARINDEX(''^'', UNDERLYING_SYMBOL))

WHERECHARINDEX(''^'', UNDERLYING_SYMBOL) = 1

INSERT INTO DBO.DataTable

SELECT *, NULL, ''N'' AS PROCESSED_FLAG

FROM #TEMP_TABLE'

IF @Debug = 0

BEGIN

EXEC SP_EXECUTESQL @Query = @SQLString

END

ELSE

BEGIN

PRINT @SQLString

END -

March 11, 2024 at 5:35 pm

water490 wrote:MarkP wrote:Next step on the debug root now we've sorted the "is it plugged in" equivalent.

Can you swap the exec for print so that you can see exactly what is executed?

That would then be easier for you to debug as a SQL statement.

I am fairly new to SS. What exactly do you mean by print?

Instead of exec('your sql expression here') executing your SQL code, using print('your sql expression here') posts the output of what SQL would be run so you can then cut and paste and debug the exact code that would be issued. It's a useful way of capturing the SQL before you run it.

Viewing 15 posts - 1 through 15 (of 18 total)

You must be logged in to reply to this topic. Login to reply