Sampled Statistics Question

-

August 15, 2019 at 10:21 am

Hello all

I'm researching how the Query Optimizer uses statistics to come up with row count estimates, and have found that the histogram value for AVG_RANGE_ROWS, returned by DBCC SHOW_STATISTICS, is usually described as being calculated from RANGE_ROWS divided by DISTINCT_RANGE_ROWS.

While this appears to hold true for FULLSCAN statistics, there are slight discrepancies with sampled statistics whereby the AVG_RANGE_ROWS value differs by up to +/- 0.5% from the calculated value.

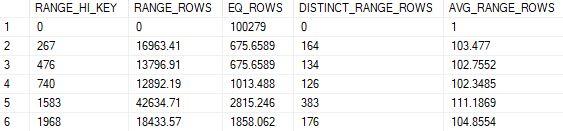

For example, in this histogram snippet...

... the calculated value for step 2 is (16963.41 / 164) = 103.4354, not the stored AVG_RANGE_ROWS value of 103.477.

I'm sure these admittedly tiny discrepancies have been noted before. Have they ever been explained?

-

August 15, 2019 at 3:01 pm

I don't know of any official reasons but my take on it is... we are talking about the company that can't display a match between the listed MB allocations in the files sizes in database properties and the SSRS space used reports. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 19, 2019 at 9:27 am

Thanks Jeff, you may be right!

Since those AVG_RANGE_ROWS values are stored to four decimal places of accuracy, you'd hope it might be possible to divine the computations that led to them.

But I guess STATMAN moves in mysterious ways.

Viewing 3 posts - 1 through 3 (of 3 total)

You must be logged in to reply to this topic. Login to reply