Result of executing SQLCMD from command prompt differs from batch file

-

November 14, 2023 at 1:18 pm

I have the following to find the failed logins from yesterday from the server logs.

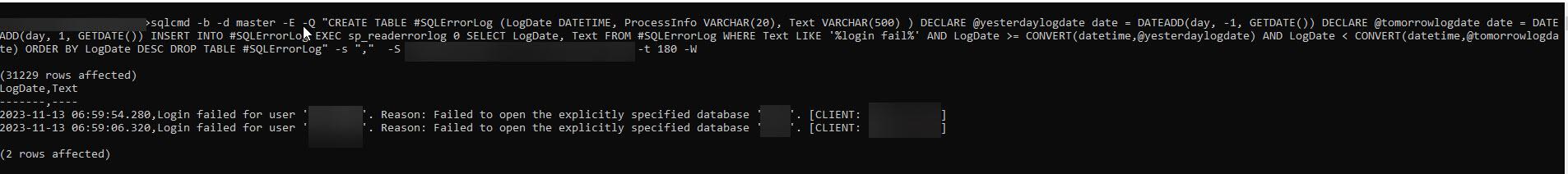

sqlcmd -b -d master -E -Q "CREATE TABLE #SQLErrorLog (LogDate DATETIME, ProcessInfo VARCHAR(20), Text VARCHAR(500) ) DECLARE @yesterdaylogdate date = DATEADD(day, -1, GETDATE()) DECLARE @tomorrowlogdate date = DATEADD(day, 1, GETDATE()) INSERT INTO #SQLErrorLog EXEC sp_readerrorlog 0 SELECT LogDate, Text FROM #SQLErrorLog WHERE Text LIKE '%login fail%' AND LogDate >= CONVERT(datetime,@yesterdaylogdate) AND LogDate < CONVERT(datetime,@tomorrowlogdate) ORDER BY LogDate DESC DROP TABLE #SQLErrorLog" -s "," -S serverA,1234 -t 180 -W

The result is OK. see attachment below.

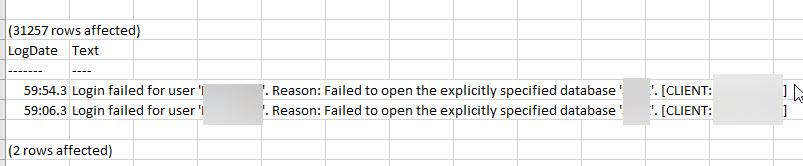

when i output to a .txt file (.csv file the date is not in date format). see attachment below, but i guess it's ok for now. i think this can be fixed. not the issue.

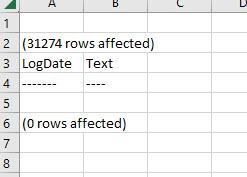

The issue is when i execute the sqlcmd from a .bat file or from powershell. i get null result. see below.

Question, what could cause discrepancy between executing from command prompt and from .bat?

- This topic was modified 1 year, 1 month ago by lan2022nguyen.

-

November 14, 2023 at 2:13 pm

I can only think of 2 reasons for this. First is permissions - are you using the same account to run the bat/ps1 file or are you changing accounts and thus different permissions on the system?

Second thought - I see the row count is different which makes me think the timing of the run is different. Could it be that the failed logins happened more than 24 hours ago? Your script is getting the current date AND time, so going back 1 day from now would be 8:10 AM yesterday (based on my timezone).

That being said, my next step when troubleshooting things like this would be to export the unfiltered log to CSV then open it up in Excel (note a CSV file is NOT an Excel file, it's just that Excel can parse a CSV file), then I'd use some Excel functions to find the text "login failed" to see if it is in the export by bat/ps1. I have a feeling that your failed logins are happening outside the timeframe and that it isn't a problem with the bat/ps1 file.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

November 14, 2023 at 2:59 pm

Mr. Brian Gale wrote:I can only think of 2 reasons for this. First is permissions - are you using the same account to run the bat/ps1 file or are you changing accounts and thus different permissions on the system?

same account/user

Second thought - I see the row count is different which makes me think the timing of the run is different. Could it be that the failed logins happened more than 24 hours ago? Your script is getting the current date AND time, so going back 1 day from now would be 8:10 AM yesterday (based on my timezone).

am not sure what the top rows affected mean. it could be all the logs w/o any filtering and the bottom 2 rows affected means after filtering.

That being said, my next step when troubleshooting things like this would be to export the unfiltered log to CSV then open it up in Excel (note a CSV file is NOT an Excel file, it's just that Excel can parse a CSV file), then I'd use some Excel functions to find the text "login failed" to see if it is in the export by bat/ps1. I have a feeling that your failed logins are happening outside the timeframe and that it isn't a problem with the bat/ps1 file.

even if i have no where clause for the date, the result output from bat/ps1 still result in 0 rows affected.

- This reply was modified 1 year, 1 month ago by lan2022nguyen.

-

November 14, 2023 at 4:01 pm

My only thought there is that your bat/ps1 file is not 100% identical to your command. To me it sounds like the bat file or ps1 file is not running the above mentioned command 100% identical.

My next step with troubleshooting would be to load up extended events or profiler and have a peek at what is ACTUALLY being run. Look at the exact code when you run it manually and when you run it through the script and see what is different. SOMETHING is different.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

November 14, 2023 at 4:14 pm

you better give us your .ps1 file - and if using powershell you really should be outputting a valid CSV file instead of dumping the output of the command into a file (as you are now)

-

November 14, 2023 at 5:16 pm

As you are using a trusted connection (-E for SQLCMD) it might just be easier to exec a signed stored procedure. I cannot test anything at the moment but something like:

USE [master];

GO

SET ANSI_NULLS, QUOTED_IDENTIFIER ON;

GO

CREATE PROCEDURE dbo.sp_FailedLoginsYesterday

AS

SET NOCOUNT, XACT_ABORT ON;

DECLARE @MinLog int, @MaxLog int, @i int;

DECLARE @logs TABLE

(

LogNumber int

,LogDate datetime

,LogSize INT

);

INSERT @logs

EXEC sys.sp_enumerrorlogs;

WITH LogRange

AS

(

SELECT LogNumber, LogDate AS DateFrom

,LEAD(LogDate , 1, '9999') OVER (ORDER BY LogDate) AS DateTo

FROM @logs

)

SELECT @MinLog = MIN(LogNumber)

,@MaxLog = MAX(LogNumber)

FROM LogRange

WHERE DateFrom < DATEADD(day, DATEDIFF(day, '1900', CURRENT_TIMESTAMP), '1900')

AND DateTo >= DATEADD(day, DATEDIFF(day, '1900', CURRENT_TIMESTAMP) - 1, '1900')

DECLARE @LogInfo TABLE

(

LogDate datetime NOT NULL

,ProcessInfo varchar(255) NOT NULL

,[Text] varchar(MAX) NULL

);

SET @i = @MinLog;

WHILE (@i <= @MaxLog)

BEGIN;

INSERT INTO @LogInfo

EXEC sys.sp_readerrorlog @i, 1, N'Login failed';

SET @i += 1;

END;

SELECT LogDate, ProcessInfo, [Text]

FROM @LogInfo

WHERE LogDate >= DATEADD(day, DATEDIFF(day, '1900', CURRENT_TIMESTAMP) -1, '1900')

AND LogDate < DATEADD(day, DATEDIFF(day, '1900', CURRENT_TIMESTAMP), '1900');

GO

CREATE CERTIFICATE spFailedLoginsYesterday$cert

ENCRYPTION BY PASSWORD = 'A load of Junk1$%'

WITH SUBJECT = '"Needs to be in SysAdmin"';

GO

ADD SIGNATURE TO sp_FailedLoginsYesterday BY CERTIFICATE spFailedLoginsYesterday$cert

WITH PASSWORD = 'A load of Junk1$%';

GO

ALTER CERTIFICATE spFailedLoginsYesterday$cert REMOVE PRIVATE KEY;

GO

CREATE LOGIN spFailedLoginsYesterday$certlogin FROM CERTIFICATE spFailedLoginsYesterday$cert;

GO

ALTER SERVER ROLE sysadmin ADD MEMBER spFailedLoginsYesterday$certlogin;

GO

/* *** Add your Windows user or Group here *** */CREATE USER FailedLoginsYesterday FROM LOGIN [Your Windows User or Group];

GO

GRANT EXEC ON sp_FailedLoginsYesterday TO FailedLoginsYesterday;

GOThis should allow you to call SQLCMD with -Q "EXEC sp_FailedLoginsYesterday".

- This reply was modified 1 year, 1 month ago by Ken McKelvey.

- This reply was modified 1 year, 1 month ago by Ken McKelvey.

-

November 14, 2023 at 8:27 pm

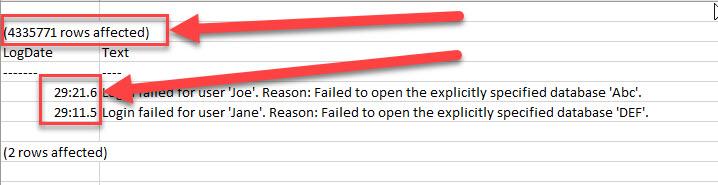

@ken, thanks for the suggestion in putting this as a stored proc. i didn't use yours, but i did create a sp with my t-sql. seems to be working better, but did have some follow-up questions. here's the result of the .csv file

not sure what the top rows affected is coming from. perhaps it's the total of all the logs for the time frame?

and then the logdate is not showing in a datetime format.

thanks for any suggestions

-

November 14, 2023 at 8:34 pm

Try returning LogDate as a datetime2 instead of datetime. Some programs, like Excel, do not format datetime very well.

The total rows suggests you have not SET NOCOUNT ON; at the top of your SP.

- This reply was modified 1 year, 1 month ago by Ken McKelvey.

-

November 14, 2023 at 8:37 pm

the rows affected can be removed by running the command "SET NOCOUNT ON" at the start of the SP.

As for the date/time issue, that is Excel taking the date and having no clue what to do with it, so it puts in something. Try opening the file in a text editor instead of Excel (notepad for example) and you will see the datetime is what you expect.

If you need to review the file in Excel, then you will need to change the number format on that column.

That weird value is 100% Excel trying to be "helpful" with the data and converting it to a stupid format.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system.

Viewing 10 posts - 1 through 9 (of 9 total)

You must be logged in to reply to this topic. Login to reply