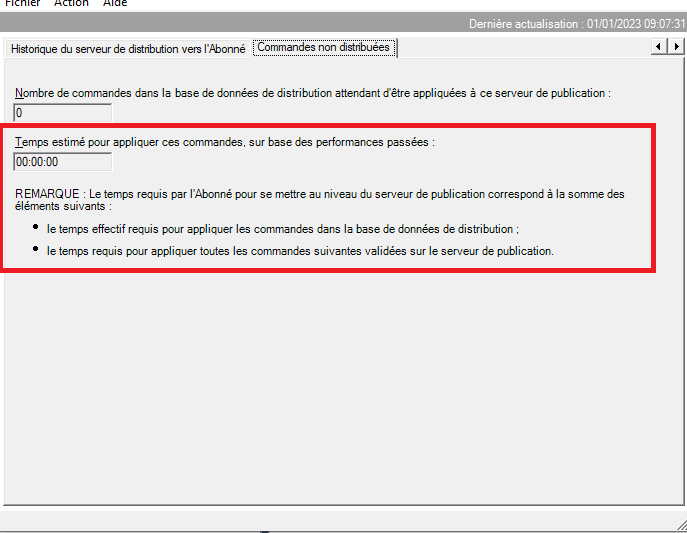

Request to Estimated time to apply these commands,

-

January 1, 2023 at 8:43 am

Good morning all

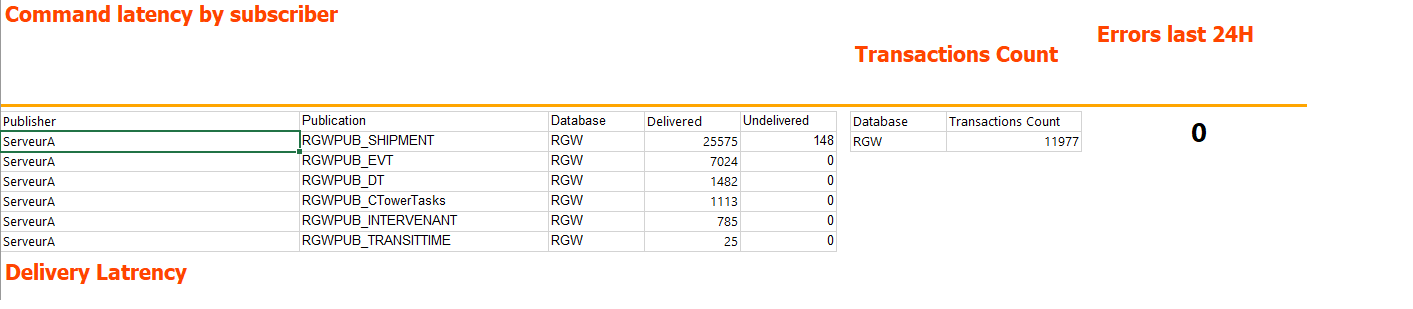

I am currently working on the development of an ssrs report to supervise a transactional replication in production

I used this request to retrieve the number of commands that are not delivered to the subscriber per publication

IF EXISTS(SELECT * FROM sys.objects WHERE name = 'MSdistribution_history')

BEGIN

SELECT top(200) CONVERT(CHAR(15), time, 121) + '0:00' dt

, ROUND(AVG(CAST(current_delivery_latency AS bigint)), 0) AS [Average Delivery Latency]

, ROUND(AVG(CAST(current_delivery_rate AS bigint)), 0) [Average Delivery Rate]

, db_name() as [Database]

FROM dbo.MSdistribution_history WITH (READUNCOMMITTED)

WHERE current_delivery_latency > 0

AND time BETWEEN dateadd(day,-1,getdate()) and getdate()

GROUP BY CONVERT(CHAR(15), time, 121) + '0:00'

ORDER BY dt DESC ENDI need to get the estimate of the time needed to apply these commands

thanks for your help

-

January 2, 2023 at 9:10 am

Thanks for posting your issue and hopefully someone will answer soon.

This is an automated bump to increase visibility of your question.

-

January 3, 2023 at 2:52 pm

There's no way to know without testing it on the system you're running it against. Query performance is not simply the T-SQL itself (although that alone is a huge factor). You will see different performance between two machines based on the processors, the amount of memory, the size of the disks, the speed of the disks, the type of memory, server settings, database settings, statistics maintenance, contention for any or all of these resources, locking, blocking, I'm sure I'm missing some too.

Best bet, run the query on two or three systems, 10-20 times. Get the average. Go up one or two standard deviations, use that number.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

January 5, 2023 at 6:51 am

This would be difficult to do how much time it will take for the subscriber database to receive the replicated commands and process them from the distributor. Network performance, disk I/O, daily processing tasks can all affect how long it takes.

You can use the Wireshark/sniffer tool to get the time it takes for the commands completion on the Subscriber server.

DBASupport

-

January 5, 2023 at 10:27 am

One nice trick that I learned from Brent Ozar is to create a table for each publication that has only one row and one column of datetime datatype. Have a job that each minute updates the record to the current time. Add this table to the publication that you want to monitor. Monitor the table on the target database. If it is few minutes behind the current time, then the replication is running slow or even has a problem and doesn't run. Helped me in the past few times

Viewing 5 posts - 1 through 4 (of 4 total)

You must be logged in to reply to this topic. Login to reply