remove double quotes from file ssis- azure

-

October 22, 2019 at 7:22 pm

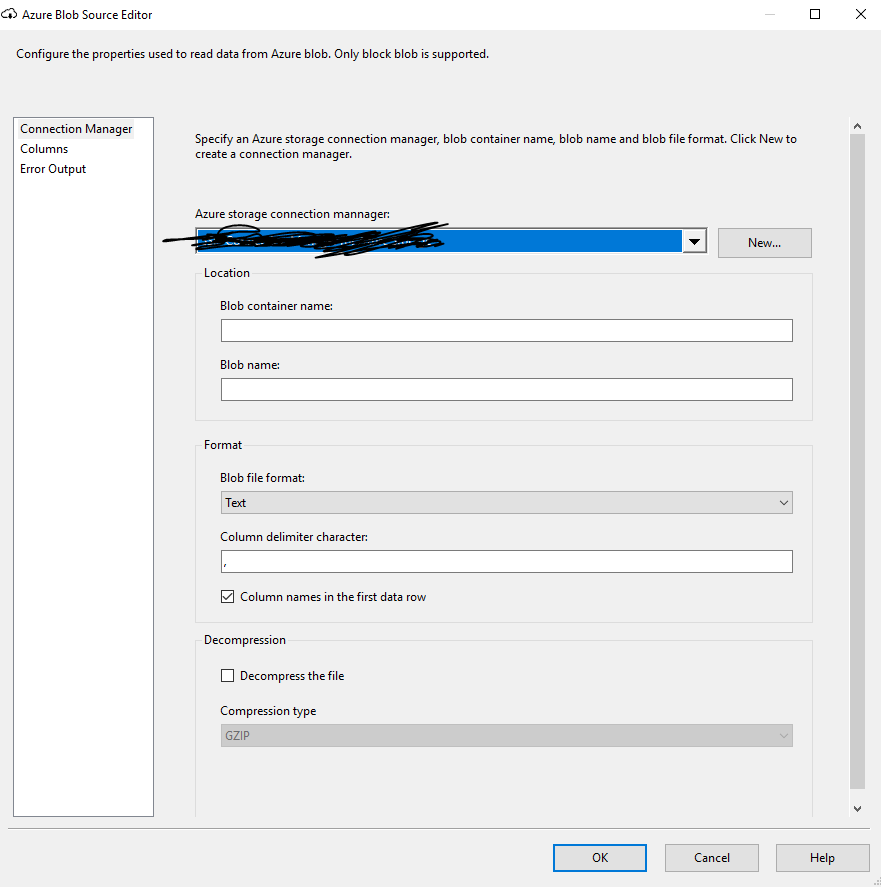

I have a csv file coming with column names and column values are in double quotes. The csv file resides inside Azure Blob and for that I am using Azure blob source task (downloaded from azure featurepack). I need to load this file using SSIS to sql and don't want those double quotes from COLUMN NAMES as well as for COLUMN VALUES. Here is how it looks.

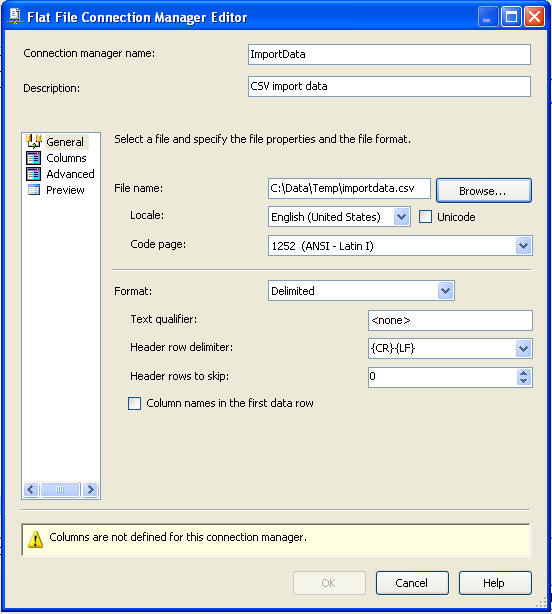

Unlike Flat file connection manager, I can not use " as text qualifier so that it will get rid of those double quotes. Because its not there in Azure Blob Source task.

"ID","Name","Phone"

"1", "sam","11-911-9111"

"2","ham","01-101-1011"

How do i get rid of it using ssis? Thanks

-

October 22, 2019 at 7:27 pm

in your flatfile connection manager, change the text qualifier to a double quote. it will remove the dbl quotes when it builds that path to the destination for clean data.

Lowell

--help us help you! If you post a question, make sure you include a CREATE TABLE... statement and INSERT INTO... statement into that table to give the volunteers here representative data. with your description of the problem, we can provide a tested, verifiable solution to your question! asking the question the right way gets you a tested answer the fastest way possible! -

October 22, 2019 at 7:38 pm

Thanks Sir but I am using Azure Blob Source and not a flat file connection manager. (I've mentioned that in my post)

Is there any other way to get rid of this?

-

October 22, 2019 at 7:59 pm

dang i missed that part.

that literally says bring it into someplace where you can use a flat file connection manager, since it is not supported yet.

sorry:

Azure Blob Source does not supports multiple-character delimiter.

A workaround that is to use standard Flat File Source, so you need to first download the csv file with Azure Blob Download Task then handle it.

If you are running the SSIS package in Azure, you can download it to Azure Virtual machine.

Lowell

--help us help you! If you post a question, make sure you include a CREATE TABLE... statement and INSERT INTO... statement into that table to give the volunteers here representative data. with your description of the problem, we can provide a tested, verifiable solution to your question! asking the question the right way gets you a tested answer the fastest way possible! -

October 22, 2019 at 9:07 pm

If you can live with the quotes on the column names, it would be easy enough to use derived columns to remove the quotes from the data itself.

The absence of evidence is not evidence of absence.

Martin ReesYou can lead a horse to water, but a pencil must be lead.

Stan Laurel -

October 22, 2019 at 10:07 pm

Thanks and I've tried that and it works for varchar datatype but not for datetime datatype. REPLACE(COLUMN_NAME, "\"", "")

-

October 22, 2019 at 10:10 pm

dallas13 wrote:Thanks and I've tried that and it works for varchar datatype but not for datetime datatype. REPLACE(COLUMN_NAME, "\"", "")

As it's coming out of a text file, it has no inherent datatype. The appropriate conversion is down to you. You'll have to treat the source column as a string and convert it within the package.

The absence of evidence is not evidence of absence.

Martin ReesYou can lead a horse to water, but a pencil must be lead.

Stan Laurel -

October 23, 2019 at 12:40 am

Lowell wrote:dang i missed that part.

that literally says bring it into someplace where you can use a flat file connection manager, since it is not supported yet.

sorry:

Azure Blob Source does not supports multiple-character delimiter.

A workaround that is to use standard Flat File Source, so you need to first download the csv file with Azure Blob Download Task then handle it.

If you are running the SSIS package in Azure, you can download it to Azure Virtual machine.

I'm somehow not even close to being surprised by such lack of functionality. Thanks for the info, Lowell.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 23, 2019 at 1:02 am

Azure Blob Source does not supports multiple-character delimiter.

Nit-picking a little, but the delimiter is only a single comma. The double quotes are text qualifiers.

The absence of evidence is not evidence of absence.

Martin ReesYou can lead a horse to water, but a pencil must be lead.

Stan Laurel -

October 23, 2019 at 1:47 am

Phil Parkin wrote:Azure Blob Source does not supports multiple-character delimiter.

Nit-picking a little, but the delimiter is only a single comma. The double quotes are text qualifiers.

Now I don't feel so bad about the nit-picking I decided not to do. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 23, 2019 at 1:51 am

@Phil...

As you know, I'm not a great study of SSIS or Azure and so I have to ask... for something like this (consistent use of delimiters and text qualifiers), it's wicked easy to solve using a BCP format file with the likes of BULK INSERT. Does SSIS allow for the use of something like a BCP format file (with Azure Blobs, to be sure)? If not, does Azure have the equivalent of BULK INSERT and BCP Format files?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 23, 2019 at 1:46 pm

Hi Jeff, I'm still fairly new to Azure (six months and counting ... slowly!) and have been spending a lot of time on the DevOps side rather than doing 'proper' development. It's nice when it works, but making it work takes five times as long as before.

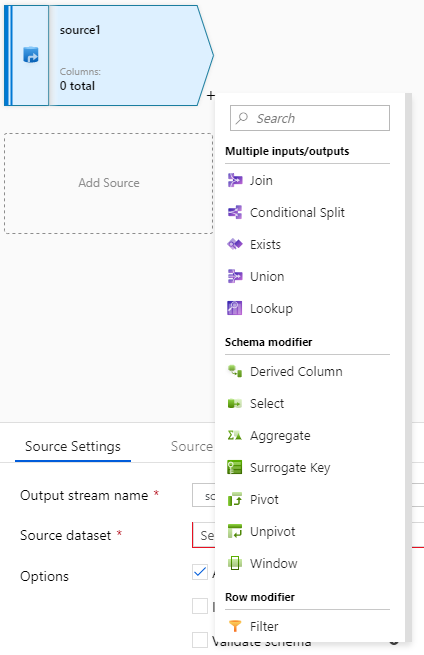

Not only that, but (as far as I can see) the Azure ETL strategy is to get rid of SSIS and instead to promote the Cloud version – this stuff:

As a consequence, I have been doing less SSIS dev than usual.

Nonetheless, I can confirm that it is possible to use BCP from within SSIS, but not without 'cheating'.

By cheating, I mean creating a PoSh script to run the necessary BCP commands and then using an Execute Process task from within SSIS to execute the PoSh script. Hardly what we we call Native Support.

I can also confirm that (to my knowledge) the native SSIS import tools (which are powerful in their own right, in my opinion) do not have any 'format file' capability.

The absence of evidence is not evidence of absence.

Martin ReesYou can lead a horse to water, but a pencil must be lead.

Stan Laurel -

October 23, 2019 at 3:53 pm

Thanks, Phil. I really appreciate your feedback on these types of things.

As a bit of a side bar, how do you like that new Azure ETL tool? Does it have any advantages/improvements over SSIS?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 23, 2019 at 4:14 pm

Jeff Moden wrote:Thanks, Phil. I really appreciate your feedback on these types of things.

As a bit of a side bar, how do you like that new Azure ETL tool? Does it have any advantages/improvements over SSIS?

Always a pleasure to give something back to you ... I've taken so much from the articles you've written, it's only fair!

In terms of capability, SSIS smokes the new Azure ETL tool. But the new tool is immature and the feature gap between the two will close with time. There is no equivalent to the SSIS script task, for example. Maybe that's deliberate, but sometimes you just have to crank out some code to get that perfect solution.

If your ETL does not require complex transformations, the Azure tool (more accurately, a Data Factory Data Flow) seems to do the job and the administrative overhead of managing packages, deployments and configurations goes away. The user experience is fairly clean and everything happens in a normal browser session.

On the other hand, building a DevOps solution which moves Data Factory components from QA to Production has been time-consuming and challenging. It's been the sort of challenge where Google searches often return "No Results", so you really have to work things out.

The absence of evidence is not evidence of absence.

Martin ReesYou can lead a horse to water, but a pencil must be lead.

Stan Laurel -

October 23, 2019 at 7:40 pm

Thanks for the great feedback and the very kind words, Phil. Good to know this stuff from someone using it. I've not had the opportunity to even dip my toe in Azure yet. Info like what you just provided will help take some of the edge off when I do.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 15 posts - 1 through 15 (of 15 total)

You must be logged in to reply to this topic. Login to reply