MDF size increasing without table size increase

-

September 6, 2022 at 12:58 pm

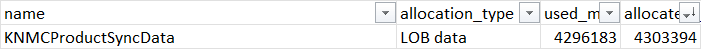

We have a database whose data file size was 1500 GB and used space within the file was around 900 GB on Friday. Today when I checked, the file has grown to 5200 GB and it is growing continuously. When I did the breakup of table sizes, its still 920 GB, which is expected. How do I find which object is using the remaining 4+ TB?

Regards

Pradeep Singh -

September 6, 2022 at 1:03 pm

Do you have a message broker running? Have the messages stopped being consumed?

The query below might shed some light on it for you:

SELECT sch.[name], obj.[name], ISNULL(obj.[type_desc], N'TOTAL:') AS [type_desc],

COUNT(*) AS [ReservedPages],

(COUNT(*) * 8) / 1024.0 / 1024.0 AS [ReservedGB]

FROM sys.dm_db_database_page_allocations(DB_ID(), NULL, NULL, NULL, DEFAULT) pa

INNER JOIN sys.all_objects obj

ON obj.[object_id] = pa.[object_id]

INNER JOIN sys.schemas sch

ON sch.[schema_id] = obj.[schema_id]

GROUP BY GROUPING SETS ((sch.[name], obj.[name], obj.[type_desc]), ())

ORDER BY [ReservedPages] DESC; -

September 6, 2022 at 1:08 pm

I ran your query sometimes back and its still running (1 hr 15 mins+), waiting for the output. Service broker is not enabled.

I ran another query (below) and I see 3 rows of information with row column=0 for each of the 3 rows.

select * from

sys.service_queues sq

join sys.schemas sch on sch.schema_id=sq.schema_id

join sys.internal_tables it on it.parent_object_id=sq.object_id

join sys.partitions p on p.object_id=it.object_id

where p.index_id=1

- This reply was modified 3 years, 5 months ago by ps..

Pradeep Singh -

September 6, 2022 at 2:48 pm

MarkP wrote:Do you have a message broker running? Have the messages stopped being consumed?

The query below might shed some light on it for you:

SELECT sch.[name], obj.[name], ISNULL(obj.[type_desc], N'TOTAL:') AS [type_desc],

COUNT(*) AS [ReservedPages],

(COUNT(*) * 8) / 1024.0 / 1024.0 AS [ReservedGB]

FROM sys.dm_db_database_page_allocations(DB_ID(), NULL, NULL, NULL, DEFAULT) pa

INNER JOIN sys.all_objects obj

ON obj.[object_id] = pa.[object_id]

INNER JOIN sys.schemas sch

ON sch.[schema_id] = obj.[schema_id]

GROUP BY GROUPING SETS ((sch.[name], obj.[name], obj.[type_desc]), ())

ORDER BY [ReservedPages] DESC;This is still running for almost 3 hrs now.

Pradeep Singh -

September 6, 2022 at 2:51 pm

That's strange, it should run in less than a second.

Do you have a DBA to refer this to or are you the DBA for this?

-

September 6, 2022 at 3:03 pm

MarkP wrote:That's strange, it should run in less than a second.

Do you have a DBA to refer this to or are you the DBA for this?

I'm the DBA. We have weekend reorganize job (cant do rebuilds as system is always online and I have seen rebuilds blocking processes).

The strage part is sp_SpaceUsed is giving different numbers - About 1600 GB for data and 3700 GB for indexes. This is weird. Even though actual table sizes (including indexes) is ~900 GB..

SP_SpaceUsed info:-

reserved data index_size unused

5456043464 KB 1698157760 KB 3745980032 KB 11905672 KB

Pradeep Singh -

September 6, 2022 at 3:16 pm

-

September 6, 2022 at 3:30 pm

ps. wrote:MarkP wrote:That's strange, it should run in less than a second.

Do you have a DBA to refer this to or are you the DBA for this?

I'm the DBA. We have weekend reorganize job (cant do rebuilds as system is always online and I have seen rebuilds blocking processes).

The strage part is sp_SpaceUsed is giving different numbers - About 1600 GB for data and 3700 GB for indexes. This is weird. Even though actual table sizes (including indexes) is ~900 GB..

SP_SpaceUsed info:-

reserved data index_size unused 5456043464 KB 1698157760 KB 3745980032 KB 11905672 KB

My recommendation would be to stop using REORGANIZE. It doesn't work the way most people think it works. It's quite possible that your indexes are massively bloated because of all the page splits (the primary cause of fragmentation) you perpetuated by using REORGANIZE.

Doing index maintenance wrong is much worse than doing none at all. If you're using REORGANIZE, you're doing it wrong for about 90% to 95% of all your indexes.

It's especially true for indexes that are fragmenting that have the default Fill Factor of "0".

REORGANIZE is so bad that it's the real reason behind the scenes for the myth of Random GUID fragmentation.

Do a sys.dm_db_index_physical_stats() into a table and then sort in descending order by page_count. Then look at the average_percent_of_page_fullness. I think you're in for a surprise. And, if you add a column that divides the page_count by 128, it'll tell you the actual size of the indexes/heaps.

Which edition and version of SQL Server are you using and do you have any "features" (like AG, log shipping, etc) that require you to stay in the FULL Recovery Model?

Also, what are your FILE GROWTH settings set to on all your databases? If they're based on "percent", that's a problem. If they're based on 4GB, that could also be a problem that I'm not sure that MS ever actually fixed.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 6, 2022 at 3:33 pm

p,s, Also, are you using sp_SpaceUsed with the "updateusage" option enabled? If not, you need to start.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 16, 2022 at 2:20 pm

You never came back with answers to my questions about the "autogrow" settings and version of SQL Server. Did you ever get this issue figured out?

Fast Inserts Issue?

If it's still an issue, there's one other thought on this... MS tried to help with a "Fast Insert" option. It's meant for "bulk" (a lot of rows meeting certain conditions... not necessarily just BULK INSERT) inserts. The way it does that is by bypassing the normal method of checking if space is available somewhere in the database and, instead, creates brand new extents even if there are already extents available.

Depending on how the Front End was setup, there's a bit of code that comes from the Front End There's a "command" that it produces called "Insert Bulk" (again, not to be confused with BULK INSERT) and it's meant for "a lot of rows" to be passed. The trouble is, a lot of 3rd party software companies think it's a "go faster button" and use it even for single-row inserts. That creates a huge issue of one row occupying and entire extent (8 pages, 64KB)... even if the new row contains just 1 user byte. You can see how that can very quickly get out of hand as "Allocated but Unused" space. It'll show up in the "Disk Usage" pie chart report that you can get to by right clicking on the database in the Object Explorer of SSMS and following your nose for "Reports".

You can disable this "Fast Insert" feature by enabling Trace Flag 692 with the understanding that it's a Global or Session scoped Trace Flag. That means that you can't apply it by database.

And, yeah... I know this from experience. I had a decent size 3rd party database that I didn't do any index maintenance on. Several of the tables where 75% empty where the empty part was "Allocated but Unused" according to SQL Server. Since it's 3rd party stuff and it was only Front End RBAR that was feeding it, I enabled the trace flag at the Server Level (I know our big bulk inserts are done correctly and don't need it for performance) and watched it for 2 weeks. The growth had stopped. I rebuilt the indexes on the affected tables to recover the "Allocated but Unused" space and the problem hasn't recurred.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 10 posts - 1 through 10 (of 10 total)

You must be logged in to reply to this topic. Login to reply