Limits, Not Goals

-

February 15, 2025 at 1:18 am

Comments posted to this topic are about the item Limits, Not Goals

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 15, 2025 at 5:25 pm

2100 parameters for a sproc? If you think that is a problem, you have far larger real problems.

Rick

Disaster Recovery = Backup ( Backup ( Your Backup ) ) -

February 15, 2025 at 9:02 pm

Agreed. When we reach these limits, we need to rethink the approach. I can only assume someone needing that many parameters is trying to process a lot of data. If that is the case, you can send a structured dataset as a Json object via a single varchar(max) parameter and the sproc can easily parse that into a temp table for further processing.

- This reply was modified 10 months, 2 weeks ago by SQLGrinder.

-

February 15, 2025 at 9:05 pm

Agreed. When we start reaching a limit, we should reconsider the approach. I can only assume someone needing that many parameters is trying to process a lot of data in an odd manner. If that is the case, you can send a structured Json object via a single varchar(max) parameter and the sproc can easily parse that into a temp table for further processing.

- This reply was modified 10 months, 2 weeks ago by SQLGrinder.

-

February 16, 2025 at 2:20 am

Heh... it's funny how timely this article is, Grant.

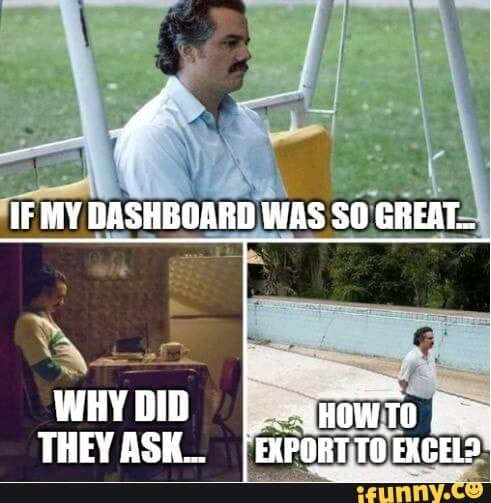

One of the companies that I do a little work for has customers that must be responsible for the following meme...

The end result is that the people serving the customers wrote some code to return some data (all of it, actually) had gotten into the habit of running the query to the grid output in SSMS and then doing a copy/paste of the data to a spreadsheet... all 200+ THOUSAND ROWS with 81 columns, at least 4 of which contained lengthy comments.

It ran that way for quite a while and then it all started to fail earlier this month. They all started to fail on the same day. Something had changed and no one knew exactly what and it's not like the data grew and not all the reports were the same size. I wrote a work around that turned out to be much faster than the original and manually ran that while troubleshooting continued. Fortunately, one of the infrastructure guys had a hunch based on the list of symptoms we had developed and it turned out to be an "improvement" that our firewall vendor had installed. We backed that out and everything went back to "normal"... and to Grant's point... "normal" was very bad to start with.

It turned out that there are many customers they do this for and the firewall was rejecting 9 reports for the 3 largest customers. Most people would say "problem solved" after the fire wall "fix" but I, and the other DBAs at that company are ticked off that no one sought their help in coming up with a proper, safe, and automatic method of extracting and transporting so much data on a daily basis.

So, to add to Grant's well stated suggestion that you shouldn't press the envelope on limits, also understand that just because something voluminous works now, either scale or a rule change in a product is going to put the screws to even your best "data analysis" jobs and you need to get a DBA or a Database Developer involved in the original design to begin with.

Remember that failures normally occur at the very worst time you can afford such a failure and that is when your customer is big and they need data NOW. People should have said "Stop... we need this report every day and we need it for multiple customers and we know our largest customers are going to be huge". We need some help from the 'data folks' (DBA/DB Developers)".

In other words, one of the most important limits to realize early is your own limits. There's no shame in recognizing those and asking for help. Like Red-Green was known for saying, "I'm pullin' for ya... We're all in this together".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 17, 2025 at 1:39 pm

I can only assume that anyone using that many parameters is simply doing it plain wrong. Either

1) the table structure is just wrong - I mean in a properly designed structure, what table actually requires 2000+ columns? OLTP or Reporting, that's a design smell like an open sewer on a hot day - or

2) you're trying to write (incredibly) complex business objects in the database, rather than app, code. Just. No.

3) probably both

I'm a DBA.

I'm not paid to solve problems. I'm paid to prevent them. -

February 17, 2025 at 2:11 pm

skeleton567 wrote:2100 parameters for a sproc? If you think that is a problem, you have far larger real problems.

Ha! Yeah, no kidding. However, someone, with a bunch of money, went to Microsoft and said, "you know how we can only have 512 parameters. Yeah, we need 2000 can you help us?" And they did. Jumped from 512 in 2005 and previous to 2100 in 2008. Someone needed that. Why? No idea. Sounds horrific.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 17, 2025 at 2:12 pm

SQLGrinder wrote:Agreed. When we start reaching a limit, we should reconsider the approach. I can only assume someone needing that many parameters is trying to process a lot of data in an odd manner. If that is the case, you can send a structured Json object via a single varchar(max) parameter and the sproc can easily parse that into a temp table for further processing.

When you find yourself in a hole, step one, stop digging.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 17, 2025 at 2:15 pm

Right there with you Jeff & Andrew. Stuff like this makes so many things more difficult.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

February 17, 2025 at 2:22 pm

If they are passing in a collection IDs like CustomerID or SKU, then they should consider using a table valued parameter.

"Do not seek to follow in the footsteps of the wise. Instead, seek what they sought." - Matsuo Basho

-

February 17, 2025 at 3:43 pm

I'm imagining an application that does a call with an inordinate amount of parameters, what a gong show on both sides (front and backend). I sure would like to hear from people who have seen inordinate amounts of params in production code!

I could see relative complexity in a dashboard that is designed in C# instead of PBI/SSRS for instance, but even then, that would be forcibly modularized to a certain extent I would hope...

I've been doing this for 18 years or so and never have I seen anything designed that badly. Though I'm not near retirement; so there is always "tomorrow"!

-

February 18, 2025 at 5:31 pm

I've seen excessive parameters being requested as a result of badly configured ORMS. The exception I can remember is a table that got too wide and stopped working. It had a name like tbl_NR Loans. The solution put forward by the muppets that managed to produce it was to add a 2nd table in a 1:1 relationship called tbl_NR_Loans2.

My experience is that someone who is builds a good object model will produce a reasonable table design. It might need a few tweaks here and there but nothing fundamentally wrong. It cuts the other way too. Someone who can produce a good relational model can produce a reasonable object model, maybe a bit rough around the edges but not something that gets you dumped in a skip at the end of the day.

People who inflict a DB abomination are no better in their alleged area of expertise. If I see something with a lot of parameters I have a suspicion that someone either doesn't know or is ignoring SOLID principles. Even though SOLID principles were not conceived with DBs in mind they have applicability in the DB world.

-

February 18, 2025 at 9:40 pm

I try to desparately avoid limits in many things, but definitely in code. I've seen those 100s of parameters in a proc and 100s of columns in tables, when a little refactoring and tech debt cleanup would make things run better.

Viewing 14 posts - 1 through 14 (of 14 total)

You must be logged in to reply to this topic. Login to reply