Implicit conversion on hash key column is causes very slow insert

-

September 13, 2021 at 7:42 am

I am trying to insert some data into some stage tables where the insert is taking far too long. For example a table containing up 600000 records is taking nearly an hour to complete. In the select part of the query we are are creating a hash of the columns which is later used for change detection. Due to the Data Warehouse methodology we are using, DataVault, we cannot remove the change hash. When I look at the execution plan I see warnings of type conversion in expression. and example is shown below.

<Warnings>

<PlanAffectingConvert ConvertIssue="Cardinality Estimate" Expression="CONVERT(varchar(max),[load].[load_acbs_loan_product_dimension].[product_month],0)" />I have also included a example of an insert statement that we are using:

INSERT INTO [stage_acbs_balance_category_dimension]

( hk_h_balance_category_dimension

, balance_category_key

, balance_category_code

, balance_category_description

, balance_class_code

, balance_class_description

, user_define_code_1

, user_define_code_2

, user_define_code_3

, user_define_code_4

, user_define_code_5

, bal_cat_short_name

, include_bal_in_tax_reporting

, include_in_billings_statements

, include_in_past_due_reporting

, dss_change_hash_acbs_balance_category_dimension_lroc

, dss_record_source

, dss_load_date

, dss_create_time)

SELECT CAST(HASHBYTES('sha2_256',

COALESCE(CAST(load_acbs_balance_category_dimension.balance_category_key AS VARCHAR(MAX)),'null')

) AS BINARY(32)) AS hk_h_balance_category_dimension

, load_acbs_balance_category_dimension.balance_category_key AS balance_category_key

, load_acbs_balance_category_dimension.balance_category_code AS balance_category_code

, load_acbs_balance_category_dimension.balance_category_description AS balance_category_description

, load_acbs_balance_category_dimension.balance_class_code AS balance_class_code

, load_acbs_balance_category_dimension.balance_class_description AS balance_class_description

, load_acbs_balance_category_dimension.user_define_code_1 AS user_define_code_1

, load_acbs_balance_category_dimension.user_define_code_2 AS user_define_code_2

, load_acbs_balance_category_dimension.user_define_code_3 AS user_define_code_3

, load_acbs_balance_category_dimension.user_define_code_4 AS user_define_code_4

, load_acbs_balance_category_dimension.user_define_code_5 AS user_define_code_5

, load_acbs_balance_category_dimension.bal_cat_short_name AS bal_cat_short_name

, load_acbs_balance_category_dimension.include_bal_in_tax_reporting AS include_bal_in_tax_reporting

, load_acbs_balance_category_dimension.include_in_billings_statements AS include_in_billings_statements

, load_acbs_balance_category_dimension.include_in_past_due_reporting AS include_in_past_due_reporting

, CAST(HASHBYTES('SHA2_256',

COALESCE(CAST(load_acbs_balance_category_dimension.balance_category_code AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.balance_category_description AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.balance_class_code AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.balance_class_description AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_1 AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_2 AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_3 AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_4 AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_5 AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.bal_cat_short_name AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.include_bal_in_tax_reporting AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.include_in_billings_statements AS VARCHAR(MAX)),'null') +'||'+

COALESCE(CAST(load_acbs_balance_category_dimension.include_in_past_due_reporting AS VARCHAR(MAX)),'null')

) AS BINARY(32)) AS dss_change_hash_acbs_balance_category_dimension_lroc

, load_acbs_balance_category_dimension.dss_record_source AS dss_record_source

, load_acbs_balance_category_dimension.dss_load_date AS dss_load_date

, getdate() AS dss_create_time

FROM [load_acbs_balance_category_dimension] load_acbs_balance_category_dimensiondoes anybody have any idea on how to get rid of the implicit conversions and get the insert to perform? I initially thought of making the column a computed persisted hash but the hash is non-deterministic. Any ideas would be much appreciated.

- This topic was modified 4 years, 3 months ago by eseosaoregie.

-

September 13, 2021 at 7:42 pm

As a thought - are you sure it is the conversion that is causing the slowness?

What I mean is what if you take out the INSERT INTO statement and just SELECT the data. How long does that take?

If you want to remove the implicit conversion, you have 2 options:

1 - do explicit conversion

2 - change the destination datatype to match the source

My guess, without seeing the full execution plan, is that it isn't the INSERT that is slow, but it could be. How large is the data set you are trying to write? You say it is 600,000 rows of data, but how may KB is each row? For example, 1 KB would be 600 MB (approximately) of data being written. It would be interesting to know too how fast your disk is and how fast your network connection is.

But I would start with doing the query as a SELECT with actual execution plan turned on (performance hit with that, but will help determine what the slow operation is) and review the execution plan to find the slowness. I would take the INSERT out as that should remove the implicit conversion you were talking about and you can compare the performance.

Something to note - the INSERT INTO statement you gave us doesn't use the column that is listed in the warning. The expression it shows is:

CONVERT(varchar(max),[load].[load_acbs_loan_product_dimension].[product_month],0)

and if you look through your SELECT statement and INSERT statement, the column "product_month" doesn't show up. To me, this makes me think that the problem is NOT with the statement you provided us, but with something else?

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

September 13, 2021 at 11:17 pm

I agree with Brian. The code you posted doesn't even contain the "product_month" column and so I also wonder if you have the correct code posted or if you've just mistaken the advisory...

... OR ...

Is there a trigger on the table? How about a (materialized) indexed view?

Also, check for these other issues.

- How many indexes are there on the table?

- Are there any FKs pointing at the table?

- How many rows are you inserting at one time?

- Does the sort order of the inserts match and come after the any existing rows in the table or are they being inserted into the logical middle of the table, which could be causing massive page splits and the resulting fragmentation?

Also, the method that you're using to convert the string is not only quite long because of the conversions and concatenations you're doing but could also be a part of what is slowing things down because you're using COALESCE to do the conversions and also because of the way they're you're trying to deal with NULL columns AND you're casting almost everything as a VARCHAR(MAX) but have a bunch of stuff that you're concatenating that will cause implicit conversions on top of everything else.

Since you posting on a 2019 forum, I strongly recommend that you look into the proper use of the CONCAT_WS function. Not only will it seriously reduce the code you have but it will take care of all the conversions AND it will all handle NULLs correctly AND it'll easily handle the double_pipe delimiter as a single first parameter.

Fix the hashing using CONCAT_WS, check for triggers and indexed views, and check the number list of some (theres more) of the things that could be causing a slow down.

And, yes, Brian is correct. Look at the execution plan because a lot of that stuff is going to show up there.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 14, 2021 at 7:44 am

@JeffModen @Brian. Thank you for the responses. Regarding the warning, it indeed comes from a different query. There are quite a few inserts that I am doing and I just wanted to give an example of the warning. The query with the product_month was just to long to post, 250 columns, so I just wanted to give an example that was shorter.

I think you guys essentially hit the nail on the head with the hashing. I removed the insert and ran the select and indeed it was still slow. I changed varchar(max) to varbinary(max) and got a significant boost in performance, 1 hour down to 40 seconds. I am yet to make the syntax change you mentioned regarding CONCAT_WS so I will do that. Regarding your questions

How many indexes are there on the table? 1 index which is dropped before insert

Are there any FKs pointing at the table? No

How many rows are you inserting at one time? rows are batched at 20,000

Does the sort order of the inserts match and come after the any existing rows in the table or are they being inserted into the logical middle of the table, which could be causing massive page splits and the resulting fragmentation? The inserts match

In the past I have used md5 and sha1 which have performed quite well. However a decision was made by the developing team to use sha2_256 because of clashes and the fact that all other algorithms are deprecated.

In any case I think you guys have given enough information for me to get the inserts performing optimally. Thanks for the assistance

-

September 14, 2021 at 8:36 am

regarding SHA1 - it is deprecated for crypt functions - for this type of uses and because it will be on SQL for a very long time I would not consider ignoring it vs the faster speed/smaller size.

collisions when using a separator are so negligible that could be ignored within DV processing.

where you may gain more performance is on specifying just about the size you need for each column (potentially allow for a bit more than current size if some columns are prone to changes) - MAX will tell SQL to allocate more memory - being it varbinary or varchar - less memory allocated is better.

also and in order to avoid possible server settings changes I strongly advise that any date/datetime column is cast to a specific format - 121 or 126 would cover all possible values for dates and are fixed 26 bytes - or if using a date only then 112 would take 8 bytes only.

This would ensure consistency of the hash regardless of the server settings.

changing to concat could look like this

CAST(HASHBYTES('SHA2_256',

concat_ws('||'

, COALESCE(CAST(load_acbs_balance_category_dimension.balance_category_code AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.balance_category_description AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.balance_class_code AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.balance_class_description AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_1 AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_2 AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_3 AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_4 AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.user_define_code_5 AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.bal_cat_short_name AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.include_bal_in_tax_reporting AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.include_in_billings_statements AS VARCHAR(MAX)),'null')

, COALESCE(CAST(load_acbs_balance_category_dimension.include_in_past_due_reporting AS VARCHAR(MAX)),'null')

)

) AS BINARY(32))

@jeff - while concat_ws deals well with nulls on this case it could also cause a collision on a case where one row has null vs one with '' (empty string) which likely should be considered different values - so replacing null with 'null' will avoid that as long as the underlying data never has 'null' as content of the field.

-

September 14, 2021 at 11:53 pm

frederico_fonseca wrote:@jeff - while concat_ws deals well with nulls on this case it could also cause a collision on a case where one row has null vs one with '' (empty string) which likely should be considered different values - so replacing null with 'null' will avoid that as long as the underlying data never has 'null' as content of the field.

The coffee is starting to wear off and so I was having a bit of difficulty in trying to imagine where it would be a problem but, now having tried it, here's some info in the form of code that proves that you're correct.

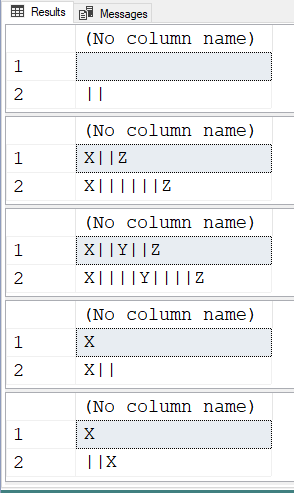

These examples don't create collisions:

SELECT CONCAT_WS('||',NULL,NULL) UNION ALL

SELECT CONCAT_WS('||','','')

;

SELECT CONCAT_WS('||','X',NULL,NULL,'Z') UNION ALL

SELECT CONCAT_WS('||','X','','','Z')

;

SELECT CONCAT_WS('||','X',NULL,'Y',NULL,'Z') UNION ALL

SELECT CONCAT_WS('||','X','','Y','','Z')

;

SELECT CONCAT_WS('||','X',NULL) UNION ALL

SELECT CONCAT_WS('||','X','')

;

SELECT CONCAT_WS('||',NULL,'X') UNION ALL

SELECT CONCAT_WS('||','','X')

;Results with No Collisions:

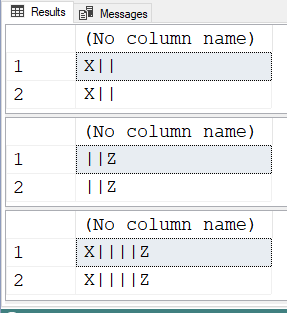

But these examples DO cause collisions:

SELECT CONCAT_WS('||','X',NULL,'') UNION ALL

SELECT CONCAT_WS('||','X','',NULL)

;

SELECT CONCAT_WS('||',NULL,'','Z') UNION ALL

SELECT CONCAT_WS('||','',NULL,'Z')

;

SELECT CONCAT_WS('||','X',NULL,'','Z') UNION ALL

SELECT CONCAT_WS('||','X','',NULL,'Z')

;Results with Collisions:

So, if you're comparing two tables that have NULLable columns, I agree that you're going to have to do an ISNULL or COALESCE to replace NULLs with something like the word 'NULL".

If, however, you're doing imports from CSV/TSV or FIXED FIELD files and you don't convert empty strings to NULLs during input to a staging (or other) table, then it should work fine because you won't have any NULLs. At least I think that would be the case but I'm not trusting me right now because the caffeine low level alarm has be sounding for a couple of hours now. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 17, 2021 at 2:18 am

You should write a short article on the NULL/Empty String collision problem you pointed out to me. It's a great catch.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 7 posts - 1 through 7 (of 7 total)

You must be logged in to reply to this topic. Login to reply