How to import csv file into a sql table and include filename

-

July 21, 2023 at 2:56 pm

Hi everyone,

I have a directory of many csv files such as

C:\Temp\NP-001.csv

C:\Temp\NP-002.csv

C:\Temp\NP-003.csv

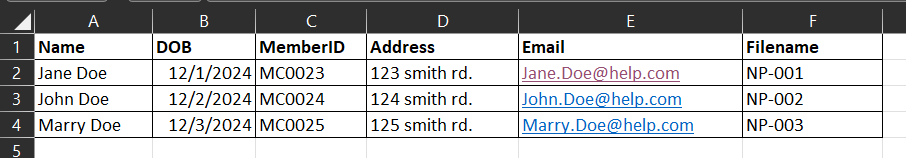

In each csv file, there are 5 columns: Name, DOB, MemberID, Address, Email

We need to somehow loop through each csv file and bulk insert or openrowset information to a sql table. The final result would be like a sql table below. And, codes need to written to get unicode text format to get the Name info correctly if possible.

Please help. Thank you so much in advance!

-

July 21, 2023 at 3:04 pm

This is easy in SSIS, if you are open to that.

-

July 21, 2023 at 3:08 pm

Thanks but I really want SQL script so it can loop through many directories. I should have said I have more than one directory.

-

July 21, 2023 at 3:31 pm

You can do that with SSIS as well. Actually I think this would be pretty painful to do without SSIS and would be difficult to support an maintain in xp_cmdshell or powershell or whatever you were thinking of using

-

July 21, 2023 at 7:24 pm

shogunSQL wrote:Hi everyone,

I have a directory of many csv files such as

C:\Temp\NP-001.csv

C:\Temp\NP-002.csv

C:\Temp\NP-003.csv

In each csv file, there are 5 columns: Name, DOB, MemberID, Address, Email

We need to somehow loop through each csv file and bulk insert or openrowset information to a sql table. The final result would be like a sql table below. And, codes need to written to get unicode text format to get the Name info correctly if possible.

My personal preference is to use xp_CmdShell (it's not the security issue that so many make it out to be when done properly) but xp_DirTree will work here if xp_CmdShell isn't an option.

Please help. Thank you so much in advance!

Provided that the user running the T-SQL (which can be forced to be the SQL Server if done in a proper proc), can actually "see" the UNC or local-to-the-server directory, you can use the mostly undocumented xp_DirTree extended stored procedure to capture the file names into a TempTable and easily cycle through them.

On which machine is the source /drive/directory for the files?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 21, 2023 at 9:03 pm

Actually, I forgot about a PowerShell module that would make this very easy: ImportExcel

It is in the PS Gallery now so you can install it from a computer with an internet connection running Install-Module ImportExcel

I would recommend using import excel to load the file as text as a step in the SQL agent, and then use a stored procedure to do type conversions

-

July 22, 2023 at 9:24 am

Thank you so much, Jeff! I was looking for something like xp_DirTree. Will try it out. Thanks.

-

July 22, 2023 at 10:48 pm

shogunSQL wrote:Thank you so much, Jeff! I was looking for something like xp_DirTree. Will try it out. Thanks.

Although it's more than a decade old, good things don't need to change over time. The following article may help your initial plunge a bit.

https://www.sqlservercentral.com/blogs/how-to-use-xp_dirtree-to-list-all-files-in-a-folder

When "isfile" = 1, the "subdirectory" is the actual file name, including the extension.

If you run into any issues or can't fine the answer to a question, post back. And don't forget that BULK INSERT has had a "CSV" file type since SQL Server 2017 came out. It'll even handle the wonky output that Excel creates if you export a spreadsheet where some of the cells contain a delimiter character.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 8 posts - 1 through 8 (of 8 total)

You must be logged in to reply to this topic. Login to reply