how to find duplicate rows, dynamically, with a loop

-

December 19, 2019 at 7:24 pm

Hi,

I might be asking too much here (in terms of code) but I need to find duplicates but dynamically. Meaning let's say I have a DB with 50 tables. I need to have SQL scan every table and do a having count>1, but without we me ever entering the table or column names. I know this involves the schema code like information.schema type stuff but beyond that I don't know. I know I need to do a loop through but have not a clue of how I would do this as I'm not advanced at SQL. We're talking about duplicate rows, not individual values in individual columns.

Thanks!

-

December 19, 2019 at 7:48 pm

SELECT CONCAT('

SELECT ',x.c, '

FROM ',QUOTENAME(t.TABLE_SCHEMA), '.', QUOTENAME(t.TABLE_NAME),'

GROUP BY ', x.c, '

HAVING COUNT(*) > 1;')

FROM INFORMATION_SCHEMA.TABLES t

CROSS APPLY (VALUES(STUFF((SELECT ',' + QUOTENAME(c.COLUMN_NAME)

FROM INFORMATION_SCHEMA.COLUMNS c

WHERE c.TABLE_SCHEMA = t.TABLE_SCHEMA

AND c.TABLE_NAME = t.TABLE_NAME

ORDER BY c.ORDINAL_POSITION ASC

FOR XML PATH (''), TYPE).value('.', 'nvarchar(max)'),1,1,''))) x(C)

GO -

December 19, 2019 at 7:50 pm

Why do you need to do this? Any table with a unique index on it, even a non-clustered one, will not have duplicates in it. Same goes for any table with a PK because the PK is enforced with a unique index. I agree that the PK or unique index would make even otherwise duplicate rows unique and those could be candidates for de-duping but why do you think you need to check for duplicates for every table in a database?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 19, 2019 at 8:20 pm

well the PK alone doesn't indicate real world duplicates as in you can create an index column of sorts but that doesn't solve potential duplicates and even where there is a truly unique PK that still doesn't prevent duplicates as I have a warehouse that ingests data from over 20 sources on a daily basis. Need to make sure the data coming in doesn't have duplicates.

-

December 19, 2019 at 8:23 pm

So it's for staging tables on your database, not your application tables?

-

December 19, 2019 at 8:23 pm

Hi..thanks so much. just one question, what does the x.c in the select statement mean to infer?

-

December 19, 2019 at 8:24 pm

you would think but and maybe it will be but for now b/c this hasn't been implemented yet (stupidly) it is on prod tables. there prob aren't many if any but the check makes it official and removes any speculation

-

December 19, 2019 at 8:38 pm

so I ran that and it worked but I'm not sure how to interpret the results. First, is there a way for me to simply spit out the metadata such as when it finds a duplicate it gives me the table name, the count, and the record numbers.

something like this

Table X |14-22| 8

this is a sample record of the query based on your code

SELECT [assetTag],[brandName],[contractDEnd],[contractRef],[dtDepreciation],[dtInvent],[dtLastScan],[enrichedTcpIpHostname],[itServiceArea],[locBarCode],[locFullName],[locFacility],[modelFullName],[modelName],[natureName],[operatingSystem],[portfolioCode],[regionCode],[room],[seAssignment],[serverType],[tcpIpDomain],[tcpIpHostname],[translatedAssignment],[viClusterName],[REF_DATE],[ETL_DATE],[RunSourceID] FROM [dbo].[HPAM_CB_SERVERS] GROUP BY [assetTag],[brandName],[contractDEnd],[contractRef],[dtDepreciation],[dtInvent],[dtLastScan],[enrichedTcpIpHostname],HAVING COUNT(*) > 1;

-

December 19, 2019 at 11:13 pm

SELECT CONCAT('

SELECT ''', QUOTENAME(t.TABLE_SCHEMA), '.', QUOTENAME(t.TABLE_NAME), ''' [TABLE_NAME], COUNT(*) COUNT

FROM (SELECT ',x.c, '

FROM ',QUOTENAME(t.TABLE_SCHEMA), '.', QUOTENAME(t.TABLE_NAME),'

GROUP BY ', x.c, '

HAVING COUNT(*) > 1) y;') SQL

FROM INFORMATION_SCHEMA.TABLES t

CROSS APPLY (VALUES (STUFF((SELECT ',' + QUOTENAME(c.COLUMN_NAME)

FROM INFORMATION_SCHEMA.COLUMNS c

WHERE c.TABLE_SCHEMA = t.TABLE_SCHEMA

AND c.TABLE_NAME = t.TABLE_NAME

ORDER BY c.ORDINAL_POSITION ASC

FOR XML PATH (''), TYPE).value('.', 'nvarchar(max)'),1,1,''))) x(C)

GOI don't know what "14-22" is in your request?

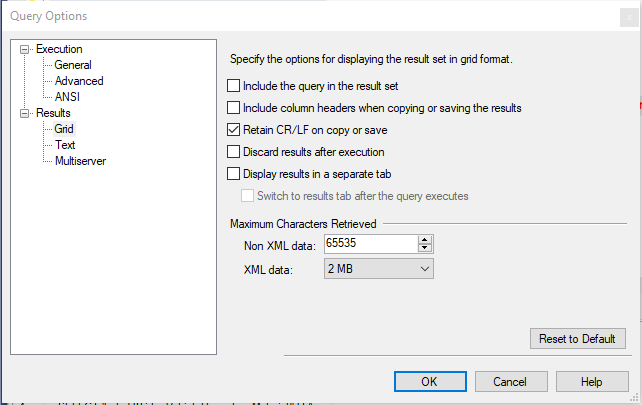

You will get it better formatted if you go into the menu option Query/Options and select "Retain CR/LF on copy or save"

-

December 19, 2019 at 11:16 pm

cuseman03 wrote:well the PK alone doesn't indicate real world duplicates as in you can create an index column of sorts but that doesn't solve potential duplicates and even where there is a truly unique PK that still doesn't prevent duplicates as I have a warehouse that ingests data from over 20 sources on a daily basis. Need to make sure the data coming in doesn't have duplicates.

Yep... I pointed out that the PK alone, especially if it's a surrogate key, doesn't do squat in the real world of duplicates and that's why I asked the question of why you want to do this. It sounds like you have a great reason to do what you've asked about but because you have a valid reason, you've indicated a very real problem that ultimately needs to be repaired. That's what I was really getting at. You shouldn't have to do what you're asking to do.

Of course, based on your comment above, you already know that and that's what I wanted to be sure of.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 19, 2019 at 11:22 pm

Jonathan AC Roberts wrote:

SELECT CONCAT('

SELECT ''', QUOTENAME(t.TABLE_SCHEMA), '.', QUOTENAME(t.TABLE_NAME), ''' [TABLE_NAME], COUNT(*) COUNT

FROM (SELECT ',x.c, '

FROM ',QUOTENAME(t.TABLE_SCHEMA), '.', QUOTENAME(t.TABLE_NAME),'

GROUP BY ', x.c, '

HAVING COUNT(*) > 1) y;') SQL

FROM INFORMATION_SCHEMA.TABLES t

CROSS APPLY (VALUES (STUFF((SELECT ',' + QUOTENAME(c.COLUMN_NAME)

FROM INFORMATION_SCHEMA.COLUMNS c

WHERE c.TABLE_SCHEMA = t.TABLE_SCHEMA

AND c.TABLE_NAME = t.TABLE_NAME

ORDER BY c.ORDINAL_POSITION ASC

FOR XML PATH (''), TYPE).value('.', 'varchar(max)'),1,1,''))) x(C)

GOI don't know what "14-22" is in your request?

You will get it better formatted if you go into the menu option Query/Options and select "Retain CR/LF on copy or save"

That looks pretty good. We went 1 step further (you're more than half way there) and it turned out to be nasty fast.

Instead of making a monster wide column through concatenation, consider using the same technique to make a hashbytes column and then compare the hashbytes.

Of course, that recommendation also comes with the warning that if the hashbytes are different, then the rows are guaranteed to be different. However (and you know this but I have to say it out loud for others that may read this), if the hashbytes are the same, there is no guarantee that the rows are identical because of the collisions that can occur within any hashing algorithm. After you examined the true differences with hashbytes, then you have to do the kind of check your doing to prove they're identical.

The extra bit of complexity may not be worth it if all you have are short and narrow tables but it's a god-send of performance for the big stuff.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 20, 2019 at 12:29 am

Jeff Moden wrote:Of course, that recommendation also comes with the warning that if the hashbytes are different, then the rows are guaranteed to be different. However (and you know this but I have to say it out loud for others that may read this), if the hashbytes are the same, there is no guarantee that the rows are identical because of the collisions that can occur within any hashing algorithm. After you examined the true differences with hashbytes, then you have to do the kind of check your doing to prove they're identical.

If you use SHA2_256 or SHA2_512 the probability of a hash collision for any two different rows in a table is as good as zero. For example, if you are using SHA2_256 and have 4.8×10^(29) rows in your table then there is a probability of 10^(-18) that there will be at least one clash. These numbers are way outside the realm of the possible. If you are using SHA2_512 then it's even more unlikely, you need to have 1.6×10^(68) rows in your table for there to be a 10^(-18) probability of there being at least one clash.

https://en.wikipedia.org/wiki/Birthday_problem#Probability_table

-

December 20, 2019 at 1:42 pm

Jonathan AC Roberts wrote:Jeff Moden wrote:Of course, that recommendation also comes with the warning that if the hashbytes are different, then the rows are guaranteed to be different. However (and you know this but I have to say it out loud for others that may read this), if the hashbytes are the same, there is no guarantee that the rows are identical because of the collisions that can occur within any hashing algorithm. After you examined the true differences with hashbytes, then you have to do the kind of check your doing to prove they're identical.

If you use SHA2_256 or SHA2_512 the probability of a hash collision for any two different rows in a table is as good as zero. For example, if you are using SHA2_256 and have 4.8×10^(29) rows in your table then there is a probability of 10^(-18) that there will be at least one clash. These numbers are way outside the realm of the possible. If you are using SHA2_512 then it's even more unlikely, you need to have 1.6×10^(68) rows in your table for there to be a 10^(-18) probability of there being at least one clash.

https://en.wikipedia.org/wiki/Birthday_problem#Probability_table

Yes. Totally agreed about the probability. If someone wants to trust that ultra-low probability, it'll probably never be wrong but... it's not a guarantee and I just want people to know that.

To wit, I also use unique constraints on Random GUID columns that are supposed to contain unique values in SQL Server even though the probability of a dupe is also incredibly low because they're actually Type 4 UIDs, which are not guaranteed to be globally unique.

- This reply was modified 4 years, 11 months ago by Jeff Moden.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 20, 2019 at 3:49 pm

Jeff Moden wrote:Jonathan AC Roberts wrote:Jeff Moden wrote:Of course, that recommendation also comes with the warning that if the hashbytes are different, then the rows are guaranteed to be different. However (and you know this but I have to say it out loud for others that may read this), if the hashbytes are the same, there is no guarantee that the rows are identical because of the collisions that can occur within any hashing algorithm. After you examined the true differences with hashbytes, then you have to do the kind of check your doing to prove they're identical.

If you use SHA2_256 or SHA2_512 the probability of a hash collision for any two different rows in a table is as good as zero. For example, if you are using SHA2_256 and have 4.8×10^(29) rows in your table then there is a probability of 10^(-18) that there will be at least one clash. These numbers are way outside the realm of the possible. If you are using SHA2_512 then it's even more unlikely, you need to have 1.6×10^(68) rows in your table for there to be a 10^(-18) probability of there being at least one clash.

https://en.wikipedia.org/wiki/Birthday_problem#Probability_table

Yes. Totally agreed about the probability. If someone wants to trust that ultra-low probability, it'll probably never be wrong but... it's not a guarantee and I just want people to know that.

To wit, I also use unique constraints on Random GUID columns that are supposed to contain unique values in SQL Server even though the probability of a dupe is also incredibly low because they're actually Type 4 UIDs, which are not guaranteed to be globally unique.

How do you hash all the columns together in a row? What do you do about null values in columns?

Viewing 15 posts - 1 through 15 (of 16 total)

You must be logged in to reply to this topic. Login to reply