How fast can SQL Server Insert data into table - 40 Million plus

-

February 3, 2024 at 6:09 am

Working on different approaches to make this work faster - have a source data which has 40 million plus records . Would like to move to a target table - currently it takes around 30 to 40 minutes . Solutions applied

- Did a direct select and Insert with no filters - took more time

- When you create a new table like Select * into #Tbl1 from Table2 -- this step is faster . Problem is that we need to add the indexes back to the target table which takes a lot of time and spikes up the resources . Same with disabling all the indexes and then do the insert to the target table - then rebuilding the indexes ( which becomes time consuming as well spiking up the resources in the server

- Doing a loop where it pushes the data in chunk from source to destination - it takes 30 plus minutes

What are the other ways which we can do in the stored procedure - not planning to use the SSIS package . Please share your experience .

-

February 3, 2024 at 4:09 pm

Just to encourage you, I have a table of the following "specs"...

52.1 GB

100 Million Rows

529 Bytes Average Row Size

With the Clustered Index in place, it only took 1 minute and 44 seconds to do the insert of all 100 million rows into a new table.

We can probably do that with your table... I just need the info below, please (just to be sure if something goes haywire).

- Please provide the CREATE TABLE code for both tables including any constraints/keys. This is important because datatypes (especially LOBs) can make some fairly substantial differences.

- Please provide the CREATE INDEX statements for all indexes in both tables.

- Please let us know the following...

- Will the target table be completely empty before a transfer starts or will it already contain any rows?

- Which EDITION of SQL Server 2022 you're using (Standard, Enterprise)? Also, you posted in a 2022 forum... please confirm that you're actually using 2022 and the correct version if you are not.

- What is the Recovery Model for the database where the target table will live in and, if in the FULL Recovery Model, if a temporary excursion to the BULK LOGGED Recovery Model is allowed.

- Are the source and target tables in the same database? Confirm if yes and, if not, please fully describe.

- Number of core SQL Server thinks it has access to (according to the SQL Server instance properties).

- Physical memory available to the instance.

- Max memory setup for SQL Server (max memory setting from the SQL Server instance properties).

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 6, 2024 at 4:08 am

Thanks for your response - for sure it's encouraging .

following are the details

Table schema as follows :

CREATE TABLE [dbo].[Target_Table](

[D_Src] [int] NULL, [D_Src_Descr] [varchar](5) NULL,

[Data_Key] [bigint] NOT NULL, [Col_ID] [int] NULL,

[YrMo] [int] NULL, [Dt] [date] NULL, [YrMo2] [int] NULL,

[SourceSys] [varchar](20) NULL, [Src_Nbr] [varchar](20) NULL, [Src_Nbr2] [varchar](20) NULL, [ID] [varchar](20) NULL,

[ID_Key] [int] NULL, [ID_2] [int] NULL, [ID_3] [int] NULL, [txt_1] [varchar](255) NULL, [ID_4] [int] NULL,

[Val_1] [decimal](14, 4) NULL, [Val_2] [decimal](14, 4) NULL, [Val_3] [decimal](14, 4) NULL, [ID_5] [int] NULL,

[V1] [decimal](14, 4) NULL, [V2] [decimal](14, 4) NULL, [V3] [decimal](14, 4) NULL, [V4] [decimal](14, 4) NULL,

[V5] [decimal](14, 4) NULL, [D1] [date] NULL, [D2] [date] NULL, [D3] [date] NULL, [D4] [date] NULL, [D5] [date] NULL,

[item1_value] [varchar](255) NULL, [item2_value] [varchar](255) NULL, [item3_value] [varchar](255) NULL,

[item4_value] [varchar](255) NULL, [item5_value] [varchar](255) NULL, [Attri1] [varchar](255) NULL,

[Attri2] [varchar](255) NULL, [Attri3] [varchar](255) NULL, [Attri4] [varchar](255) NULL, [Attri5] [varchar](255) NULL,

[Attri6] [varchar](255) NULL, [Attri7] [varchar](255) NULL, [Attri8] [varchar](255) NULL,

[Attri9] [varchar](255) NULL, [Attri10] [varchar](255) NULL, [P_Key] [bigint] NULL,

[E_1] [nchar](1) NULL, [CTime] [datetime] NULL, [p_data_key] [bigint] NULL, [ID_6] [int] NOT NULL,

[ID_7] [int] NULL, [txt_2] [varchar](20) NULL, [txt_3] [varchar](5) NULL, [ID_8] [int] NULL, [ID_9] [int] NULL

)

Following are the indexes :

CREATE CLUSTERED INDEX [IX_1] ON [dbo].[Target_Table]

(

[D_Src] ASC,

[Data_Key] ASC,

[Col_ID] ASC

)GO

CREATE NONCLUSTERED INDEX [IDX_2] ON [dbo].[Target_Table]

(

[YrMo] ASC,

[ID_Key] ASC,

[Col_ID] ASC

)

GO

CREATE NONCLUSTERED INDEX [IDX_3] ON [dbo].[Target_Table]

(

[Col_ID] ASC

)

GO

CREATE NONCLUSTERED INDEX [IDX_4] ON [dbo].[Target_Table]

(

[ID_2] ASC,

[Col_ID] ASC

)

GO

CREATE NONCLUSTERED INDEX [IDX_5] ON [dbo].[Target_Table]

(

[ID_3] ASC,

[ID_Key] ASC,

[YrMo] ASC,

[Col_ID] ASC

)

GO

CREATE NONCLUSTERED INDEX [IDX_6] ON [dbo].[Target_Table]

(

[D_Src] ASC,

[Col_ID] ASC

)

GO

Note : its Partitioned on column - Col_ID ( have to modify the clustered index but just provided as is )

Following are the answers :

Will the target table be completely empty before a transfer starts or will it already contain any rows? - No Target table has currently 120 Million Records - truncation happens on a column col_id which removes off 40 M and then insert back 40 M records . Currently using a temp table which is the source to insert back to the target table ( its all happening in stored procedure )

Which EDITION of SQL Server 2022 you're using (Standard, Enterprise)? Also, you posted in a 2022 forum... please confirm that you're actually using 2022 and the correct version if you are not. SQL Server 2017 Enterprise- planning to upgrade to latest soon

What is the Recovery Model for the database where the target table will live in and, if in the FULL Recovery Model, if a temporary excursion to the BULK LOGGED Recovery Model is allowed. - FULL Recovery Model

Are the source and target tables in the same database? Confirm if yes and, if not, please fully describe. - both are in the same database - as i mentioned earlier - we use sp - get the calculations applied and have it in temp table . Using a while loop to insert data to the target table in a loop on the key column filter ( which also has a index on the source temp table ). If we have one select and insert without any filter to the temp table - it was consuming a lot of time . So , changed that in a loop . Tried to drop indexes and then add back at the later stage after the data is populated also takes a lot of time .

Number of core SQL Server thinks it has access to (according to the SQL Server instance properties). - 12

Physical memory available to the instance.

Max memory setup for SQL Server (max memory setting from the SQL Server instance properties). - 80000

Hope the above information helps . Thanks a lot

- This reply was modified 2 years ago by Balu R.

-

February 6, 2024 at 6:20 am

As a bit of a sidebar, I'd change the YrMo and YrMo2 columns to a DATE datatype. It's 1 byte less than an INT and a whole lot more useful for temporal calculations.

According to what you said, you're deleting about 1/3rd of the rows each month and then adding about 1/3rd new rows each month. The DELETEs are killing you. It would actually be faster to do a "Minimally Logged" transfer of the 80 million remaining "keeper" rows to a new table that it will do delete 40 million rows. If you try doing a UNION all with the NEW 40 million rows along with the old 80 million rows in a "Minimally logged" fashion, you'd likely make a performance killing in a single pass.

The loops are killing you. Even if you were in the SIMPLE Recovery Model, only the first INSERT (regardless of number of rows) into a truly empty table with just a Clustered Index on it will be minimally logged. All the other inserts will be fully logged, even if the SIMPLE Recovery Model were possible.

As for MAXDOP during the copy and index rebuilds, look at your NUMA nodes. If all 12 CPUs are in one NUMA node (as I suspect they will be), I'd likely limit MAXDOP to 4 (no more and no less) for the copy/index runs and leave two other sets of 4 for your 24/7 code to run on.

For the transfer, the target table needs to be empty and not from deletes. It needs to be empty from a truncate or a new creation. It needs to have only a clustered index on it. You need to be in the BULK LOGGED recovery model for both the population of the 120 million rows AND the builds of the Non-Clustered indexes so that, they too, will be minimally logged.

You can go back to the FULL Recovery Model after the copies are all done.

I don't see anything in the table, like LOBs or other superwide columns that would mess with you.

Shifting gears a bit, I see no reason why you shouldn't use a partitioned view for these tables. I probably wouldn't bother with a partitioned table. That would make your total downtime very near zero with little extra overhead. The method above would take more disk space if you needed for the target table to be preserved and online during the copy and mock deletes and index builds.

If you don't want to bother with partitioning of any type, a few well placed "flop over" synonyms with bit of temporary extra storage space can make this whole evolution nasty fast with virtually no down time and really cut back on the overall duration and the load you're putting on the log file.

You've gotta get away from using the loops and get into "Minimal Logging", no matter what you do.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 6, 2024 at 6:28 am

Duplicate post deleted.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 6, 2024 at 6:29 am

As yet another side bar, unless you have a REALLY good reason to move to 2022, I'm here to tell you that, compared to 2017, 2022 is about 30% slower across the board. So is 2019. A lot of shops don't really notice because they're mostly single row OLTP and they can tolerate slower reporting especially since they normally don't keep track of such things. We do HUGE file I/O and the related processing and, man, did we ever notice the difference.

We were required to make the upgrade to 2022 for security purposes.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 6, 2024 at 9:19 am

Balu R wrote:Thanks for your response - for sure it's encouraging .

following are the details

Table schema as follows :

Note : its Partitioned on column - Col_ID ( have to modify the clustered index but just provided as is )

Hope the above information helps . Thanks a lot

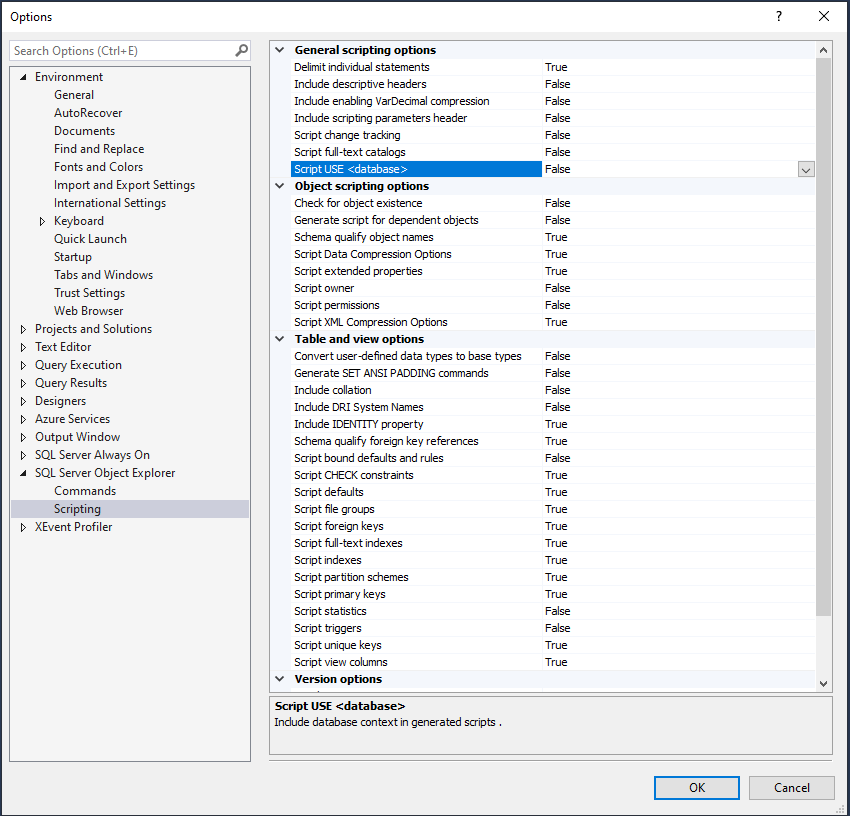

do you mean by the above that you are using Partitioniong? if so can you please post the FULL DDL for the table including the that the correct scripting options are enable (defaults do not generate required info).

better yet would be if you have this on a VS project and supply us with the table definition from its script.

if the table is indeed partitioned (and all indexes are partition aligned) it may be possible to use partition switching if the 40m rows you are removing/inserting all fall within the same partition (or even more).

desired SSMS settings

-

February 6, 2024 at 1:35 pm

Balu R wrote:Note : its Partitioned on column - Col_ID ( have to modify the clustered index but just provided as is )

Crud... I missed that detail because I was only looking at the code and the partitioning wasn't in it. My apologies.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 8 posts - 1 through 8 (of 8 total)

You must be logged in to reply to this topic. Login to reply