Bad data - updating date from new FK

-

Hi,

Our DB vendor is now implementing a new FK. This new FK is not going in due to duplicates -

The CREATE UNIQUE INDEX statement terminated because a duplicate key was found for the object name 'dbo.PractitionerInsurance' and the index name 'UX_PractitionerInsurance_PractitionerID_CarrierID_TypeID_Policy_EffectiveDate'. The duplicate key value is (43246, 96146, 13261, K1510, Aug 9 1988 12:00AM).

Thinking I need to update the effectivedate by changing the date by one hour Thus saving the record and allowing the new Unique Index to be created without the error.

So I run this to get the practitionerrecid:

select * from [PractitionerInsurance] where PractitionerID = 43246

Then I manually update the effectivedate using the newly found pratitionerinsurancerecid.

Update [PractitionerInsurance] set EffectiveDate = '2021-12-01 01:00:00.000' where PractitionerInsuranceRecID = '675283' which just updates the effective date of the duplicated record.

Then when I go to apply the new unique index it errors for the next 'bad' record.

Been trying for hours to think of a way to automate this process as there allot of these.

Is there a way for me to keep trying at add the index and then based on whats returned update the effective date hour (only)?

- This topic was modified 2 years, 8 months ago by krypto69.

-

April 4, 2023 at 7:11 pm

All right it sounds tedious. To differentiate the duplicates you're looking to add 1, 2, 3, ... n hours to the datetime column, EffectiveDate. Of course, this could create new duplicates (and so on... ) so you'll probably need a WHILE loop. Hmm... maybe it's possible a quirky UPDATE could work but that would require being clever. To just make it work you could JOIN on all of columns which define the duplicate rows and UPDATE the EffectiveDate=DATEADD(hour, fn.N, EffectiveDate) where fn.N is the 1, 2, 3, ... n sequence generated from a tally function, or numbers table. Then run it until there are no more dupes. Needs sample data

Aus dem Paradies, das Cantor uns geschaffen, soll uns niemand vertreiben können

-

something along these lines - it assumes that none of the duplicates already contain a row that is 1 hour ahead

if object_id('tempdb..#keys') is not null

drop table #keys

;

select src.PractitionerInsuranceRecID

, row_number() over(partition by src.PractitionerID

, src.CarrierID

, src.TypeID

, src.Policy

, src.EffectiveDate

order by src.PractitionerInsuranceRecID

) as rownum

into #keys

from dbo.PractitionerInsurance src

inner join (select PractitionerID

, CarrierID

, TypeID

, Policy

, EffectiveDate

from dbo.PractitionerInsurance

group by PractitionerID

, CarrierID

, TypeID

, Policy

, EffectiveDate

having count(*) > 1

) t

on t.PractitionerID = src.PractitionerID

and t.CarrierID = src.CarrierID

and t.TypeID = src.TypeID

and t.Policy = src.Policy

and t.EffectiveDate = src.EffectiveDate

;

update dst

set dst.Effectivedate = dateadd(hour, 1, dst.Effectivedate)

from dbo.PractitionerInsurance dst

inner join #keys k1

on k1.PractitionerInsuranceRecID = dst.PractitionerInsuranceRecID

and k1.rownum > 1 -- ignore the first row

; -

April 4, 2023 at 7:27 pm

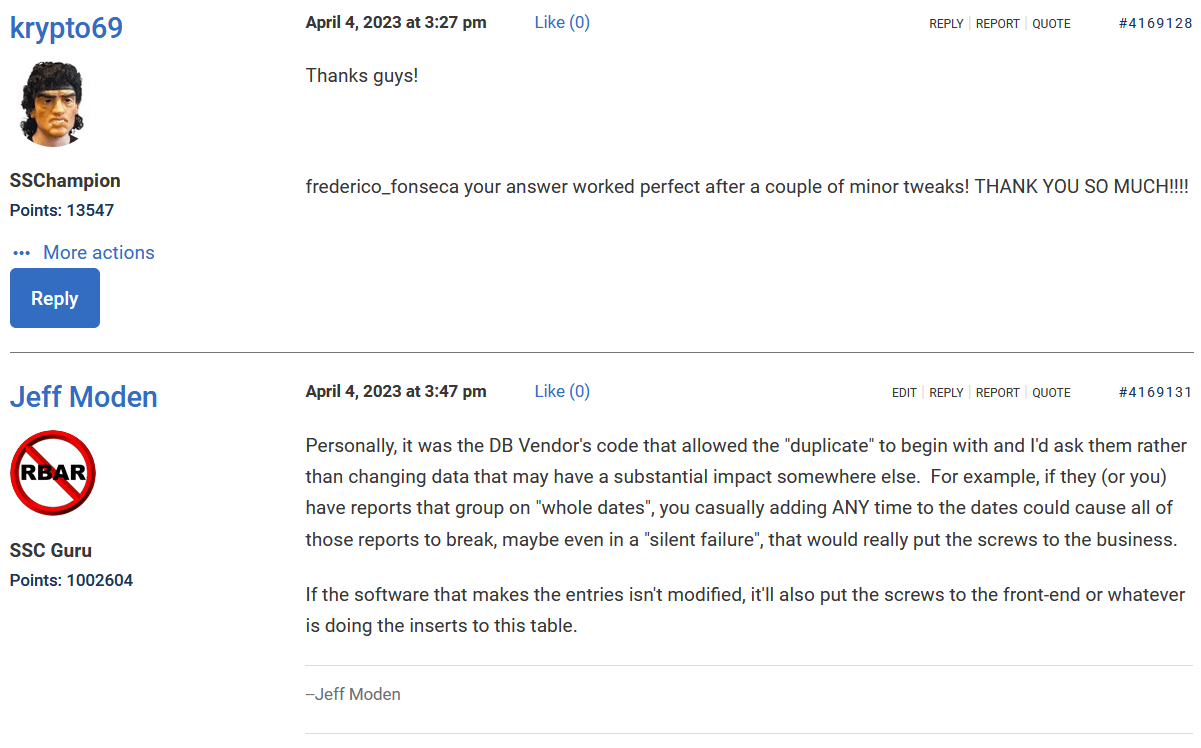

Thanks guys!

frederico_fonseca your answer worked perfect after a couple of minor tweaks! THANK YOU SO MUCH!!!!

-

April 4, 2023 at 7:47 pm

Personally, it was the DB Vendor's code that allowed the "duplicate" to begin with and I'd ask them rather than changing data that may have a substantial impact somewhere else. For example, if they (or you) have reports that group on "whole dates", you casually adding ANY time to the dates could cause all of those reports to break, maybe even in a "silent failure", that would really put the screws to the business.

If the software that makes the entries isn't modified, it'll also put the screws to the front-end or whatever is doing the inserts to this table.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 4, 2023 at 7:50 pm

frederico_fonseca wrote:something along these lines - it assumes that none of the duplicates already contain a row that is 1 hour ahead

if object_id('tempdb..#keys') is not null

drop table #keys

;

select src.PractitionerInsuranceRecID

, row_number() over(partition by src.PractitionerID

, src.CarrierID

, src.TypeID

, src.Policy

, src.EffectiveDate

order by src.PractitionerInsuranceRecID

) as rownum

into #keys

from dbo.PractitionerInsurance src

inner join (select PractitionerID

, CarrierID

, TypeID

, Policy

, EffectiveDate

from dbo.PractitionerInsurance

group by PractitionerID

, CarrierID

, TypeID

, Policy

, EffectiveDate

having count(*) > 1

) t

on t.PractitionerID = src.PractitionerID

and t.CarrierID = src.CarrierID

and t.TypeID = src.TypeID

and t.Policy = src.Policy

and t.EffectiveDate = src.EffectiveDate

;

update dst

set dst.Effectivedate = dateadd(hour, 1, dst.Effectivedate)

from dbo.PractitionerInsurance dst

inner join #keys k1

on k1.PractitionerInsuranceRecID = dst.PractitionerInsuranceRecID

and k1.rownum > 1 -- ignore the first row

;This reads the base table at least three times. The following should be much faster.

WITH Key_Updates AS

(

select src.PractitionerInsuranceRecID

, row_number() over(partition by src.PractitionerID

, src.CarrierID

, src.TypeID

, src.Policy

, src.EffectiveDate

order by src.PractitionerInsuranceRecID

) as rownum

, count(*) over(partition by src.PractitionerID

, src.CarrierID

, src.TypeID

, src.Policy

, src.EffectiveDate

) as cnt

from dbo.PractitionerInsurance src

)

update ku

set ku.Effectivedate = dateadd(hour, ku.rownum - 1, ku.Effectivedate)

from Key_Updates ku

WHERE ku.cnt > 1

;Drew

Edited to remove incorrect

ORDER BYclause in theCOUNT()windowed function.- This reply was modified 2 years, 8 months ago by drew.allen.

J. Drew Allen

Business Intelligence Analyst

Philadelphia, PA -

April 4, 2023 at 7:56 pm

I seriously wish you the best of luck. Get your QA/UAT started on checking for bad info/reporting/etc.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 4, 2023 at 11:16 pm

Hi Jeff

Yeah vendor is aware of this issue. But get where you are coming from for sure.It was caused by a crappy import tool they wrote.. Really the records should be deleted, but that goes against my best practices..

-

April 5, 2023 at 1:02 am

krypto69 wrote:Hi Jeff

Yeah vendor is aware of this issue. But get where you are coming from for sure.It was caused by a crappy import tool they wrote.. Really the records should be deleted, but that goes against my best practices..

If they are truly duplicates, copy the tupes to an archive table (along with the date and time you copied them in an additional column) and then delete just the dupes from the main table.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 9 posts - 1 through 9 (of 9 total)

You must be logged in to reply to this topic. Login to reply