backup practice/strategy/risks

-

September 14, 2022 at 1:24 pm

I have a current job that does FULL backup to all my USER DBs daily and system DBs weekly. I do not do DIFF at all. only Log for those full recovery model.

Are there any risks to this practice when it comes to restoring in case i need to.

Thanks

-

September 14, 2022 at 1:37 pm

- Why only systemdbs once a week ??

You will lose a week of systems administration actions, sqlagent job information, ...

2. What kind of SQL instance is this? DWH / OLTP ?

What data activity is on it and can that be reproduced?

3. what's the frequency of your log backups ?

4. are all backup files ported (or created to) another server ( best in another location )

5. are your backups checksummed ? ( detect errors ASAP )

6. how frequent do you perform restores ? YOU NEED TO TEST THIS to be sure it works !

7. how much data is your (interal?) client willing to lose ?

Johan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

September 14, 2022 at 6:28 pm

lan2022nguyen wrote:only Log for those full recovery model.

To echo one of Johan's questions, how often do you do those?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 14, 2022 at 10:59 pm

@johan - wow, alot to unpack here 🙂

i just join the team and relatively new to sql server/dba. i should have said our current team backup practice, not me specifically. i'd like to have an understanding why they do such practice (without really asking them directly why they do certain things). will try to answer:

1.Why only systemdbs once a week ?? good question. i don't know.

You will lose a week of systems administration actions, sqlagent job information, ...

2. What kind of SQL instance is this? DWH / OLTP ? internal app with transactions

What data activity is on it and can that be reproduced? user activities such as updating, deletion, inserting. it will be very difficult to reproduce. will have to ask people what they did xx days/hours ago...with potentially thousands of users.

3. what's the frequency of your log backups ? daily

4. are all backup files ported (or created to) another server ( best in another location ) i think another group does external site backup. not sure of their policy.

5. are your backups checksummed ? ( detect errors ASAP ). believe so. don't have SA rights to PROD box. will have to rely another team to get info. again, not ready to ask directly yet.

6. how frequent do you perform restores ? YOU NEED TO TEST THIS to be sure it works ! another good question. i don't know.

7. how much data is your (interal?) client willing to lose ? i'd imagine none.

i will have to eventually start asking (silly) questions directly but meanwhile i will stay observant and ask the community of experts instead for now.

thank you and appreciate all

- This reply was modified 3 years, 5 months ago by lan2022nguyen.

-

September 15, 2022 at 7:35 am

With the answers you provided, DRP = worst case, how much data do you think you will loose ? At least 24h !

and how long will it take to get things working again ?

How long will your company need to reproduce the lost data ?

1st idea: set up a decent log backup frequency !

btw: Restore header only will show if the backup file has checksums applied:

RESTORE HEADERONLY FROM DISK = N'D:\MSSQL15\MSSQL\Backup\dbFull.BAK'

HasBackupChecksums

Johan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

September 15, 2022 at 11:04 am

thanks johan!

-

September 15, 2022 at 6:36 pm

lan2022nguyen wrote:What prevents you from having conversations with your co-workers so that you can learn, make suggestions, and become better at what you do?

- This reply was modified 3 years, 5 months ago by Michael L John.

Michael L John

If you assassinate a DBA, would you pull a trigger?

To properly post on a forum:

http://www.sqlservercentral.com/articles/61537/ -

September 26, 2022 at 7:54 am

I may be wrong but it seems like the backup strategy was set up for the convenience of the people running it and not for the needs of the business.

The business will have its needs and expectations for database resilience and availability, and the backup strategy forms part of meeting this. Other tools needed to meet business requirements may include availability groups, multi-site operations, air-gapped backups and others. You should always plan two routes to make a recovery, so that if a piece of one route fails the DB is not lost. A good resilience plan will cope with all things that could make a DB not available, such as the traditional server failure, pandemic preventing access to the data centre, and hacking. Technology changes mean resilience plans should not stay the same year after year - new techniques can be cheaper than an old process and new threats require new mitigations.

However, everything starts with the basics of RTO and RPO:

Every database should have a Recovery Time Objective (RTO). This measures the amount of time the business can afford to be without the database.

RTO should be measured from the time management decide to begin recovery to the time the DB is available for use. If you measure RTO from the time of failure you will need to automate the recovery process. This can be expensive and will always (trust me) give some false positives that start recovery when nothing is wrong.

A small DB with an RTO of 6 hours can be delt with by backup and restore. A big DB with a RTO of 5 minutes will need an availability group. The RTO will also give you the priority order for restoring databases. The shortest RTO gets restored first, the longest last.

Every database should have a Recovery Point Objective (RPO). This measures the how much data the business can afford to lose if the database becomes unavailable.

RPO should always be measured from the point of failure. It is normally measured as a time interval, but streaming data might get measured in Mb.

A DB with an RPO of 30 minutes can be delt with by running log backups every half hour. A DB with an RPO of 1 minute will need an availability group.

When you have the RTO and RPO of every database they will naturally form groups. These can be given names (Gold, Silver, Bronze) and you can build Service Level Agreements with the business for each group. A DB in the Gold group will cost more to make available than one in the Bronze group. The business must give their agreement to the SLA definitions and to which group a given DB is put in to. If a manager says Bronze is good enough but demands Gold when a problem comes then they (not you!) have to explain to their boss why they said Bronze.

SQL Server can cope with a minimum RTO and RPO of about 3 to 5 seconds using availability groups (including multi-site resilience). Anything less than that is likely to need specialist software and hardware and get very expensive. Giving every DB a 5 second RTO and RPO will require a a network with a lot of fast bandwidth which will be expensive. If you want resilience to cover a data centre failure then the network between multiple data centres may be the biggest cost. All this applies both to in-house and cloud hosting.

Perhaps the hardest part of all this will be the company politics. Be careful how you ask the questions and how you present any problems you find and changes you propose. A manager who has made bad decisions may prefer to keep their job rather than yours, even if it might end up killing the business.

Original author: https://github.com/SQL-FineBuild/Common/wiki/ 1-click install and best practice configuration of SQL Server 2019, 2017 2016, 2014, 2012, 2008 R2, 2008 and 2005.

When I give food to the poor they call me a saint. When I ask why they are poor they call me a communist - Archbishop Hélder Câmara

-

September 27, 2022 at 7:34 pm

My "general" recommendation for backups is...

- Full Backup once per week.

- Dif Backup once per day.

- "Conditional" Log file backups every 15 to 30 minutes.

Of course, that's subject to change based on RPO/RTO, etc. "It Depends".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 27, 2022 at 8:30 pm

Jeff Moden wrote:My "general" recommendation for backups is...

- Full Backup once per week.

- Dif Backup once per day.

- "Conditional" Log file backups every 15 to 30 minutes.

Of course, that's subject to change based on RPO/RTO, etc. "It Depends".

Just for clarity - my general recommendation is daily full backups and frequent transaction log backups. I only recommend differentials for special cases and only after sufficient review of database activity has concluded that there will be some benefit.

I have seen way too many times now where a weekly full and daily diffs are just wasting disk space and time. As soon as there have been enough changes in the database to mark most pages as being modified - the differential then becomes essentially another full backup and you end up with 7 days of full sized backup files - and a recovery path that can require twice as much time to recover.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

September 28, 2022 at 12:38 am

Jeffrey Williams wrote:Jeff Moden wrote:My "general" recommendation for backups is...

- Full Backup once per week.

- Dif Backup once per day.

- "Conditional" Log file backups every 15 to 30 minutes.

Of course, that's subject to change based on RPO/RTO, etc. "It Depends".

Just for clarity - my general recommendation is daily full backups and frequent transaction log backups. I only recommend differentials for special cases and only after sufficient review of database activity has concluded that there will be some benefit.

I have seen way too many times now where a weekly full and daily diffs are just wasting disk space and time. As soon as there have been enough changes in the database to mark most pages as being modified - the differential then becomes essentially another full backup and you end up with 7 days of full sized backup files - and a recovery path that can require twice as much time to recover.

Ok... so what do you do instead? Just T-Log backups for a week?

I've not yet seen where a DIF becomes as large a Full, yet, especially when you have large tables with years of data in them that don't change (which is another issue).

Still, it's a good warning on what to watch for.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 28, 2022 at 5:39 am

We only create full backups (some daily, some every 3 days, some weekly) and log backups.

Most of our databases are small, but have a fair amount of I/U/D transactions.

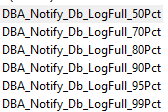

We do followup on log consumption and start log backup jobs when it reaches 50% full and send out alerts to the dba, as this should be exceptional.

We managed to get databases back online within RTO (and the number of log backups have been processed fast enough to support that).

#KnockOnWood

Johan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

September 29, 2022 at 11:19 am

I recommend the same what Jeff suggested.

More over during recovery if you have only logs, you need all logs after full backup and in sequence to be restored.

but if you have Diff backup, sequence is full, Last diff backup and log backup in sequence post diff backup. This saves some time.

Regards

Durai Nagarajan -

September 29, 2022 at 4:22 pm

Jeff Moden wrote:Ok... so what do you do instead? Just T-Log backups for a week?

I've not yet seen where a DIF becomes as large a Full, yet, especially when you have large tables with years of data in them that don't change (which is another issue).

Still, it's a good warning on what to watch for.

As I stated - I start with daily full backups and frequent transaction log backups. As long as we can perform the full backups within a reasonable maintenance window there is no reason to include differentials. In a weekly full, daily diff - transaction logs the restore time will always be more using that model than using a daily full and transaction logs because you have to restore the full, the latest diff - and all log backups up to point in time. Using daily full backups we restore the full - and all log backups to point in time.

Now - let's assume you have implemented Ola's utilities. You have setup and configured the index optimize utility according to the **recommendations** and schedule it to run every day. On the first day after your full - the largest table in the database is selected to be rebuilt. The second day the second largest table is selected - and so on...

By the fourth day after your full backup - the differential backup is nearly the same size as your full backup or it is a significant portion of the full backup. If your full backup is 100GB (compressed) then you will need at least 800GB of space available to host just the full and differentials for the week. You really don't know how large the differentials will be - but you must keep the full, all differentials and all log backups from that full backup forward available. If you miss just one file you run the risk of not being able to restore to a point in time past that one file.

Of course - you are also scraping those files to offline storage, but if you have to go to those files you are going to extend your downtime and recovery and probably will not be able to meet your RTO. Of course, that depends on a whole lot of other factors - but it needs to be considered.

Same scenario with daily full - and you could get away with having only 400GB of space. The current full - the previous full - log backups from the previous full to current point in time. Any prior backup files have already been scraped to offline storage so it is still available if needed.

Recovery is from the current full backup and all log backups to current point in time. If the current full is corrupted - you restore from the previous full and all log backups to current point in time.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

September 29, 2022 at 7:11 pm

@Jeffrey Williams,

How big is the largest server instance that you're doing the Daily full backups on?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 15 posts - 1 through 15 (of 23 total)

You must be logged in to reply to this topic. Login to reply