Are the posted questions getting worse?

-

July 17, 2022 at 5:51 pm

Grant Fritchey wrote:Question for the smart people, and the rest of you.

Would you endorse or get behind the statement: Some deadlocks are healthy. or Some level of deadlocks is healthy.

Or is more something like: Some level of deadlocks we can live with.

I'm just surprised to hear deadlocks described as "healthy" in any terms. I'd argue, in a perfect world, any deadlock is bad. I simply don't see a place where using the word "healthy" to describe deadlocks is accurate. Certainly, there's a level below which they can be tolerated, and there's a level above which they're a major issue. That level is very much up to interpretation.

What do you think?

I'm not one of those that condone them. I've just given up on me agonizing over them because no one else cares. When I bring them up, people tell me that the "system handles and fixes or retries them" with "system" being their word for the code I've identified as a pretty serious performance issue (man, did I clean up that statement!

). I've told them in the past that it will become an issue as the "systems" grow and the number of concurrent runs grows and slows. Their comment is usually, "It's not an issue now and we don't have time to work on it. We'll deal with it if and when it becomes an issue".

And, BTW, it's recently started to become an issue.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 25, 2022 at 4:42 pm

I know... I'm weird... I couldn't stop laughing as I read the following article about AI and on-prem. I mean who knew that computationally intense processes on the cloud might be expensive, right?

Who knew that long-haul connections might be intermittent, right?

https://www.protocol.com/enterprise/ai-machine-learning-cloud-data

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 25, 2022 at 5:58 pm

Jeff Moden wrote:I know... I'm weird... I couldn't stop laughing as I read the following article about AI and on-prem. I mean who knew that computationally intense processes on the cloud might be expensive, right?

Who knew that long-haul connections might be intermittent, right?

https://www.protocol.com/enterprise/ai-machine-learning-cloud-data%5B/quote%5D

Of course, AI has a built-in response, "Let me think about it" whilst you pay for the computational resources...

There is a misconception when it comes to the "Cloud", few realise that the name comes from the transparency of the billing or rather the lack thereof.

-

August 25, 2022 at 8:05 pm

I thought "cloud" was a polite way of saying "smoke and mirrors" where your wallet gets smoked and they use mirror to deflect your billing questions.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 26, 2022 at 2:13 pm

Just reiterating a question I posted on the 2019 Administration forum, on SSMS backward compatibility, does anyone have any input?

The issue is that I'm trying to simplify an environment where SSMS 2008R2, 2012 and 2019 are currently used for accessing the corresponding versions of SQL Server.

-

September 4, 2022 at 4:38 am

Eirikur Eiriksson wrote:Just reiterating a question I posted on the 2019 Administration forum, on SSMS backward compatibility, does anyone have any input?

The issue is that I'm trying to simplify an environment where SSMS 2008R2, 2012 and 2019 are currently used for accessing the corresponding versions of SQL Server.

Sorry, Eirikur... I've got nothing for you on this issue. I don't have anything prior to 2016.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 4, 2022 at 4:41 am

I'm having an issue on 2016 and 2017... If you have 2019 or any version prior to 2016, would you run the following code, please, and let me know the version you ran it on and post the results? Thanks for the help, folks.

SELECT A = 2147483647/1000

,B = 2147483648/1000

;--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 4, 2022 at 6:55 am

Jeff Moden wrote:I'm having an issue on 2016 and 2017... If you have 2019 or any version prior to 2016, would you run the following code, please, and let me know the version you ran it on and post the results? Thanks for the help, folks.

SELECT A = 2147483647/1000

,B = 2147483648/1000

;SSMS 18.12.1

SQL 2019 (64-bit)

-- SERVERPROPERTY('ProductVersion') = 15.0.2000.5

-- SERVERPROPERTY('ProductLevel') = RTM

-- COMPAT 100, 110, 120, 130, 140, 150

A B

----------- ---------------

2147483 2147483.648000SSMS 2014

SQL 2014 (64-bit)

-- SERVERPROPERTY('ProductVersion') = 12.0.6164.21

-- SERVERPROPERTY('ProductLevel') = SP3

-- COMPAT 100, 110, 120

A B

----------- ---------------

2147483 2147483.648000 -

September 4, 2022 at 10:48 am

Jeff Moden wrote:I'm having an issue on 2016 and 2017... If you have 2019 or any version prior to 2016, would you run the following code, please, and let me know the version you ran it on and post the results? Thanks for the help, folks.

SELECT A = 2147483647/1000

,B = 2147483648/1000

;IIRC, 2016 and 2017 don't automatically convert literal numerical values greater than (2^31) - 1 to float as the other versions do.

This was one of the static code analysis tests I had to do for 2014 and earlier to 2016.

-

September 4, 2022 at 5:13 pm

DesNorton wrote:Jeff Moden wrote:I'm having an issue on 2016 and 2017... If you have 2019 or any version prior to 2016, would you run the following code, please, and let me know the version you ran it on and post the results? Thanks for the help, folks.

SELECT A = 2147483647/1000

,B = 2147483648/1000

;SSMS 18.12.1

SQL 2019 (64-bit)

-- SERVERPROPERTY('ProductVersion') = 15.0.2000.5

-- SERVERPROPERTY('ProductLevel') = RTM

-- COMPAT 100, 110, 120, 130, 140, 150

A B

----------- ---------------

2147483 2147483.648000SSMS 2014

SQL 2014 (64-bit)

-- SERVERPROPERTY('ProductVersion') = 12.0.6164.21

-- SERVERPROPERTY('ProductLevel') = SP3

-- COMPAT 100, 110, 120

A B

----------- ---------------

2147483 2147483.648000Thank you, good Sir. That's what I've gotten on 2008, 2016, and 2017. I was seriously interested in 2014 and 2019 and you just happen to hit those spots on the mark. I also very much appreciate the compatibility level testing you did.

It's just my opinion but that violates the rules of integer division and I've not been able to find anywhere in the SQL Documentation where it says that such a thing will happen.

https://docs.microsoft.com/en-us/sql/t-sql/data-types/constants-transact-sql

https://docs.microsoft.com/en-us/sql/t-sql/language-elements/divide-transact-sql

As a result, I opened the following "bug" with MS. I've been aware of the "problem" since 2008 (never had to use such large constants before then) but it's caused me issues in the past because it's one of those unexpected "Crud, I forgot about that nuance" moments every time.

https://feedback.azure.com/d365community/idea/153f4b95-e82b-ed11-a81b-6045bd853c94

I suspect, though, that this is one of those things where they'll simply mark it as "operates as designed" and won't even bother updating the documentation to warn people about it. With that, I've added the alternative of properly documenting the phenomenon.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 4, 2022 at 5:29 pm

Eirikur Eiriksson wrote:Jeff Moden wrote:I'm having an issue on 2016 and 2017... If you have 2019 or any version prior to 2016, would you run the following code, please, and let me know the version you ran it on and post the results? Thanks for the help, folks.

SELECT A = 2147483647/1000

,B = 2147483648/1000

;IIRC, 2016 and 2017 don't automatically convert literal numerical values greater than (2^31) - 1 to float as the other versions do.

This was one of the static code analysis tests I had to do for 2014 and earlier to 2016.

Correct... but it didn't convert to FLOAT in other versions either (thank goodness for that!)... It converts numeric literals (constants) that have a value larger than the bounds of INT to the NUMERIC() datatype with a "0" for scale. At least it's NUMERIC() and not FLOAT... that would cause some serious inaccuracies due to the limited scale of FLOAT().

I can see it using NUMERIC() if it exceeds the bounds of BIGINT but it shouldn't be implicit, IMHO. That should be one place where an explicit cast of a numeric constant should actually require an explicit conversion.

In retrospect, though, it may be that there's a user base that relies on the current implicit conversion and have suggested additions to the documentation as a reasonable alternative.

- This reply was modified 2 years, 6 months ago by Jeff Moden. Reason: Added a note about a resonable documentation alternative

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 4, 2022 at 7:50 pm

Jeff Moden wrote:I'm having an issue on 2016 and 2017... If you have 2019 or any version prior to 2016, would you run the following code, please, and let me know the version you ran it on and post the results? Thanks for the help, folks.

SELECT A = 2147483647/1000

,B = 2147483648/1000

;

-

September 4, 2022 at 8:56 pm

That's a beautiful thing, Steve. I didn't know of anyone that had actually downloaded the public eval of 2022 and installed it. Thank you very much?

I was thinking that this would make a fun little QOD and wrote it up. It's still in draft mode and the site software won't show me a preview for some reason. If you want to have a look at it and let me know if it's something that you might want to use or any changes, let me know. If I need to submit for you to see it, let me know that, as well.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 5, 2022 at 1:30 am

Jeff Moden wrote:[

Correct... but it didn't convert to FLOAT in other versions either (thank goodness for that!)... It converts numeric literals (constants) that have a value larger than the bounds of INT to the NUMERIC() datatype with a "0" for scale. At least it's NUMERIC() and not FLOAT... that would cause some serious inaccuracies due to the limited scale of FLOAT().

I can see it using NUMERIC() if it exceeds the bounds of BIGINT but it shouldn't be implicit, IMHO. That should be one place where an explicit cast of a numeric constant should actually require an explicit conversion.

And it'd better be converted to FLOAT straight away.

For the sake of precision.

Check this out:

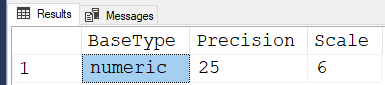

select SQL_VARIANT_PROPERTY(2147483648, 'basetype') basetype,

SQL_VARIANT_PROPERTY(2147483648, 'precision') [precision],

SQL_VARIANT_PROPERTY(2147483648, 'scale') scaleIt's NUMERIC(10,0) - precision is actually lower than for FLOAT, which is

1653 .But now let's try this:

select SQL_VARIANT_PROPERTY(2147483648/1000, 'basetype') basetype,

SQL_VARIANT_PROPERTY(2147483648/1000, 'precision') [precision],

SQL_VARIANT_PROPERTY(2147483648/1000, 'scale') scaleNow it's NUMERIC(16,6)

Can you guess where precision=16 is coming from?

- This reply was modified 2 years, 6 months ago by Sergiy.

_____________

Code for TallyGenerator -

September 5, 2022 at 1:55 am

Sergiy wrote:Jeff Moden wrote:[

Correct... but it didn't convert to FLOAT in other versions either (thank goodness for that!)... It converts numeric literals (constants) that have a value larger than the bounds of INT to the NUMERIC() datatype with a "0" for scale. At least it's NUMERIC() and not FLOAT... that would cause some serious inaccuracies due to the limited scale of FLOAT().

I can see it using NUMERIC() if it exceeds the bounds of BIGINT but it shouldn't be implicit, IMHO. That should be one place where an explicit cast of a numeric constant should actually require an explicit conversion.

And it'd better be converted to FLOAT straight away.

For the sake of precision.

Check this out:

select SQL_VARIANT_PROPERTY(2147483648, 'basetype') basetype,

SQL_VARIANT_PROPERTY(2147483648, 'precision') [precision],

SQL_VARIANT_PROPERTY(2147483648, 'scale') scaleIt's NUMERIC(10,0) - precision is actually lower than for FLOAT, which is

1653 .But now let's try this:

select SQL_VARIANT_PROPERTY(2147483648/1000, 'basetype') basetype,

SQL_VARIANT_PROPERTY(2147483648/1000, 'precision') [precision],

SQL_VARIANT_PROPERTY(2147483648/1000, 'scale') scaleNow it's NUMERIC(16,6)

Can you guess where precision=16 is coming from?

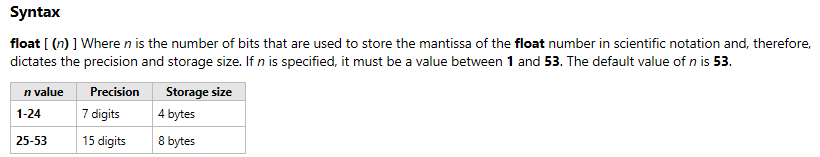

Yes... and it's not FLOAT. EDIT: Careful manipulation of multiple FLOATs behind the scenes could pull it off but the decimal portion would have to go through a decimal decoder. I'd need some serious proof of that, though.

--===== Largest possible number for BIGINT = 9223372036854775807

SELECT BaseType = SQL_VARIANT_PROPERTY(9223372036854775807/1000,'BASETYPE')

,Precision = SQL_VARIANT_PROPERTY(9223372036854775807/1000,'PRECISION')

,Scale = SQL_VARIANT_PROPERTY(9223372036854775807/1000,'SCALE')

;The maximum BIGINT value that I used there has a precision of 19, which exceeds what FLOAT can handle.

Float has a max precision of only 15 digits... not 53. 53 is the max number of bits.

- This reply was modified 2 years, 6 months ago by Jeff Moden. Reason: Added more info about possible multiple floats being used behind the scenes

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 15 posts - 66,016 through 66,030 (of 66,789 total)

You must be logged in to reply to this topic. Login to reply