Alter Huge Table

-

June 5, 2022 at 6:49 pm

Ooh, I accidentally left off one critical pre-step:

(0) Pre-allocate enough log space to handle the INSERTs. How much total log space you'll need depends on whether minimal logging is available or not, i.e., that the db is not in FULL recovery model.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 5, 2022 at 7:13 pm

Jeff Moden wrote:Using a loop to break things up into sections will cause the every section except the first to be FULLY LOGGED, which takes as much as 3 times longer than minimal logging.

No, not from SQL 2016 on (reminder: this is a SQL 2017 forum). And, even before then, you could use trace flag 610 to get minimal logging, at least as far back as 2008 (and IIRC even 2005, although I'm much more fuzzy on that one). Thus, copying the rows in clustering key order not only reduces read I/O, but it also reduces write I/O, by making sure that almost all pages are new pages and not modifications to existing pages.

And I agree with Johan... consider making modifications to take advantage of using COMPRESS, which does a GZIP on the column.

That was my suggestion, not Johan's :-).

And, like I said above, forcing the LOBs our of row the right way has some HUGE performance advantages.

Step (2) in my suggested action plan above.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 6, 2022 at 5:07 am

Apologies on the mistake about COMPRESS.

I know you think that 2016 is magic after the first insert. The documentation says that new pages will be minimally logged. I'll have to dig out the documentation but I'm pretty sure that's not true in the FULL recovery model.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

June 6, 2022 at 1:57 pm

Jeff Moden wrote:Apologies on the mistake about COMPRESS.

I know you think that 2016 is magic after the first insert. The documentation says that new pages will be minimally logged. I'll have to dig out the documentation but I'm pretty sure that's not true in the FULL recovery model.

Not in FULL of course. As you had already stated, FULL is always fully logged. So your comment had to be about non-FULL. I'm not sure why you felt the need to slur me with "magic", but, at any rate, of course it's not "magic", it's how the product is designed to work.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 6, 2022 at 2:02 pm

ScottPletcher wrote:Jeff Moden wrote:Apologies on the mistake about COMPRESS.

I know you think that 2016 is magic after the first insert. The documentation says that new pages will be minimally logged. I'll have to dig out the documentation but I'm pretty sure that's not true in the FULL recovery model.

Not in FULL of course. As you had already stated, FULL is always fully logged. So your comment:

Using a loop to break things up into sections will cause the every section except the first to be FULLY LOGGED, which takes as much as 3 times longer than minimal logging.

had to be about non-FULL (because even the first section (INSERT) would be fully logged under FULL). You keep repeating this claim about SQL switching to full logging, and it's just no longer true in the scenario I described above (only clus index, INSERT sections in sequential order). The only INSERTs that would be fully logged are those that go into an already existing page.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 6, 2022 at 2:13 pm

ScottPletcher wrote:ScottPletcher wrote:Jeff Moden wrote:Apologies on the mistake about COMPRESS.

I know you think that 2016 is magic after the first insert. The documentation says that new pages will be minimally logged. I'll have to dig out the documentation but I'm pretty sure that's not true in the FULL recovery model.

Not in FULL of course. As you had already stated, FULL is always fully logged. So your comment:

Using a loop to break things up into sections will cause the every section except the first to be FULLY LOGGED, which takes as much as 3 times longer than minimal logging.

had to be about non-FULL (because even the first section (INSERT) would be fully logged under FULL).

You keep repeating this claim about SQL switching to full logging, and it's just no longer true in the scenario I described above (only clus index, INSERT sections in sequential order). The only INSERTs that would be fully logged are those that go into an already existing page.

I'm not sure why you felt that a comment about "magic" was unnecessary. Of course I don't think it's "magic", it's simply how the product was designed to work.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 6, 2022 at 2:13 pm

ignore, duplicate post

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 6, 2022 at 5:03 pm

@scott... the comment about "magic" wasn't meant as a slam to you. It was meant as a slam to MS because they claim that you can do minimal logging if NCIs are present in the table. They also have a footnote about things on that subject and how it might not work. To date, I've not seen that form of "magic" ever work before or after 2016.

As for secondary inserts being minimally logged per the MS claims of new pages not being fully logged, I tested that when 2016 first came out and it didn't appear to work as advertised. I believe I still have code for that test and will try to resurrect it. To date, I've not seen anyone else prove it with code in an article. Do you have such a bit of proof code?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

June 7, 2022 at 10:17 am

Hello,

first of all thank you very much for your help !

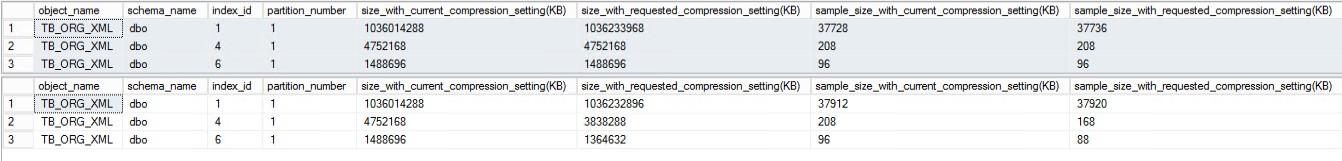

I have checked the expected compression and the data saving is really huge.

Attached are the predicted results first on ROW then on PAGE level

Is the compression really transparent for the application?

Unfortunately I don't know the application and I don't know if it creates problems after turning on the compression.

What did Jeff mean by "If you're not using stored procedures with the front-end"?

But as Jeffrey already recognized by the wrongly written trigger, I don't suspect that this is a well written application.

I am testing importing data with the table only with PK and page compression.

At the moment the Import via DBA Tools runs with 5230,4 rows/sec

Just to summarize again if I understood it correctly:

You would create the basic structure of the table only with the PK index.

Then copy the data and afterwards

Swap the name between the old table and the new table.

Then create the rest of the indexes and the trigger ?

best regads

BAM

-

June 7, 2022 at 12:29 pm

process is as follows

- create new table

- create clustered index (if it is a PK the pk should have a new name associated with the new table)

- insert data onto new table

- create remaining indexes

- add trigger to table

- rename (or drop) old table

- rename new table to old table

- rename PK to match table rename

regarding data_compression - this is completely transparent to the application and requires no code changes

and have you tried the tests I asked you to do? those will give you both an indication of how long it will take to transfer as well as a potential indication of how much log space you are going to need.

- This reply was modified 3 years, 8 months ago by frederico_fonseca.

-

June 8, 2022 at 3:45 pm

Just remember that you'll end up with a rather huge transaction log file on Step 3 and it will take 2-3 times longer if you don't achieve "Minimal Logging".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

June 8, 2022 at 4:49 pm

Jeff Moden wrote:Just remember that you'll end up with a rather huge transaction log file on Step 3 and it will take 2-3 times longer if you don't achieve "Minimal Logging".

Also depends on whether or not you put the db into Simple mode for the duration of this load.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

June 8, 2022 at 5:20 pm

ScottPletcher wrote:Jeff Moden wrote:Just remember that you'll end up with a rather huge transaction log file on Step 3 and it will take 2-3 times longer if you don't achieve "Minimal Logging".

Also depends on whether or not you put the db into Simple mode for the duration of this load.

You do not need to put the DB into the SIMPLE Recovery Mode to attain "Minimal Logging". The BULK LOGGED Recovery Model does fine and it won't break the log file chain like going into the SIMPLE Recovery Model.

I do agree that the SIMPLE Recovery Model would be the best insofar as how much is logged especially after the even but the BULL LOGGED Recovery Model still works a treat.

It DOES have the issue with whether or not you can do a PiT restore so somewhere in the middle of the given log file but it does not prevent PiT restores either before or after any log fie that contains "Minimally Logged" events.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 13 posts - 16 through 28 (of 28 total)

You must be logged in to reply to this topic. Login to reply