A varchar(MAX) STRING_SPLIT function for SQL 2012 and above

-

December 11, 2019 at 4:22 pm

Jonathan AC Roberts wrote:Thom A wrote:having something that has a

varcharpassed to it that returns annvarcharcould be very bad for SARGability, if the column being compared afterwards is avarchartoo (as the Column would be implicitly converted, meaning any indexes are effectively useless).I've just run a test and not converting the results of the dbo_STRING_SPLIT function and joining the results to a varchar still does an index seek on the table. ... It does a seek on both queries but the second query (without the convert varchar(5)) is a bit slower to execute

Hi there.

Regarding @Thom-A's statement about implicit conversions: that is an unfortunately common misunderstanding / over-simplification. The

CONVERT_IMPLICITis not the cause of the performance degradation. It's Microsoft's horrible miscalculation that the pain of switching the default collation for OSs using the US English (i.e. en-US) locale / culture toLatin1_General_CI_AS(and dealing with a customers upgrading and having problems with queries against temp tables doing joins on string columns) would be worse than keeping an increasingly obsolete default collation for new installations (i.e.SQL_Latin1_General_CP1_CI_AS). SQL Server collations (those starting with "SQL_") have different sort orders forVARCHARandNVARCHARdata, and that is why the conversion (implicit or explicit) toNVARCHARcauses problems: the index isn't in the correct physical order, so it needs to be scanned or ignored. Please see my post, "Impact on Indexes When Mixing VARCHAR and NVARCHAR Types", for full details.Regarding @jonathan-ac-roberts 's test: you might want to include a

GObetween the two test queries. It might not make a difference, but I have found that quite often running multiple queries in a single batch will produce different performance results as compared to running them individually / in separate batches. Typically one of them, if executed in the same batch, ends up being affected for the worse than when executed independently.Take care,

Solomon...

- This reply was modified 6 years, 2 months ago by Solomon Rutzky.

- This reply was modified 6 years, 2 months ago by Solomon Rutzky.

SQL# — https://SQLsharp.com/ ( SQLCLR library ofover 340 Functions and Procedures)

Sql Quantum Lift — https://SqlQuantumLift.com/ ( company )

Sql Quantum Leap — https://SqlQuantumLeap.com/ ( blog )

Info sites — Collations • Module Signing • SQLCLR -

December 11, 2019 at 4:36 pm

Solomon Rutzky wrote:Regarding @jonathan-ac-roberts 's test: you might want to include a

GObetween the two test queries. It might not make a difference, but I have found that quite often running multiple queries in a single batch will produce different performance results as compared to running them individually / in separate batches. Typically one of them, if executed in the same batch, ends up being affected for the worse than when executed independently.Excellent point, Solomon! To wit, I've found that even the use of GO between batches is sometimes not enough. Sometimes they need to physically be executed separately.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 11, 2019 at 6:07 pm

Jeff Moden wrote:Solomon Rutzky wrote:Regarding @jonathan-ac-roberts 's test: you might want to include a

GObetween the two test queries. It might not make a difference, but I have found that quite often running multiple queries in a single batch will produce different performance results as compared to running them individually / in separate batches.Excellent point, Solomon! To wit, I've found that even the use of GO between batches is sometimes not enough. Sometimes they need to physically be executed separately.

Hi Jeff. Yes, I have experienced the same, but was just trying to not throw too much into that reply ;-). But yes, nowadays when I do any performance testing of this nature, I nearly always do fully separate executions just to be sure. Thanks for mentioning that as I wasn't sure if I was the only one seeing such things.

Take care,

Solomon...

SQL# — https://SQLsharp.com/ ( SQLCLR library ofover 340 Functions and Procedures)

Sql Quantum Lift — https://SqlQuantumLift.com/ ( company )

Sql Quantum Leap — https://SqlQuantumLeap.com/ ( blog )

Info sites — Collations • Module Signing • SQLCLR -

December 11, 2019 at 6:24 pm

Jeff Moden wrote:Solomon Rutzky wrote:Regarding @jonathan-ac-roberts 's test: you might want to include a

GObetween the two test queries. It might not make a difference, but I have found that quite often running multiple queries in a single batch will produce different performance results as compared to running them individually / in separate batches. Typically one of them, if executed in the same batch, ends up being affected for the worse than when executed independently.Excellent point, Solomon! To wit, I've found that even the use of GO between batches is sometimes not enough. Sometimes they need to physically be executed separately.

I've tried the test with a GO between and it does make a difference to the elapsed and CPU times reported in the "set statistics" messages. It brings the query without cast(varchar) down to be only about 10% slower than the query with the cast. Without the GO, the query without the cast(varchar) is about 3 times slower than the one with cast(varchar). I can't say I understand why though?

As for the IO, there are 10 scan counts reported on #t1 for the query without cast(varchar) compared to zero scans with it. This is true for whether or not the GO is included.

-

December 15, 2019 at 4:46 am

fgrodriguez wrote:The usefulness of this function is not that of rapid execution, but the utility of the result.

I've heard many people speak such words and they're usually the same ones begging for a performance fix some time later. Performance (ie, "Rapid Execution") is second only to correct functionality and it's a very close second. Don't dismiss it so easily.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 4, 2021 at 5:06 pm

I'm having the following problem with the solution. The following call returns 'bc' as the only value, not 'Abc' as I would suppose:

DECLARE @Test table (Field NVARCHAR(255))

INSERT INTO @Test

SELECT value FROM dbo.STRING_SPLIT('Abc', ', ')

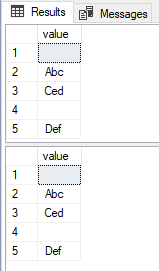

SELECT * FROM @TestIf I try STRING_SPLIT('Abc, Ced', ', '), I get 'bc,' and 'Ced'.

And splitting 'Abc, Ced, Def' with the same delimiter delivers 'bc,', 'Ced,' and 'Def'.

So, it seems that using ', ' (comma and space) as a delimiter makes the first letter to be eaten and it does not remove ',' from the end of the splittet values.

I have also tried STRING_SPLIT('Abc Ced Def', ' '), here I have gotten 'Abc ', 'Ced ', 'Def ' (there are spaces on the end, which I consider to be wrong).

Am I doing something wrong or is there a problem in the function definition? I am using MS SQL Server 2012.

Thank you for your help in advance,

Roman

-

March 4, 2021 at 5:42 pm

rreimche wrote:I'm having the following problem with the solution. The following call returns 'bc' as the only value, not 'Abc' as I would suppose:

DECLARE @Test table (Field NVARCHAR(255))

INSERT INTO @Test

SELECT value FROM dbo.STRING_SPLIT('Abc', ', ')

SELECT * FROM @TestIf I try STRING_SPLIT('Abc, Ced', ', '), I get 'bc,' and 'Ced'. And splitting 'Abc, Ced, Def' with the same delimiter delivers 'bc,', 'Ced,' and 'Def'.

So, it seems that using ', ' (comma and space) as a delimiter makes the first letter to be eaten and it does not remove ',' from the end of the splittet values.

I have also tried STRING_SPLIT('Abc Ced Def', ' '), here I have gotten 'Abc ', 'Ced ', 'Def ' (there are spaces on the end, which I consider to be wrong).

Am I doing something wrong or is there a problem in the function definition? I am using MS SQL Server 2012.

Thank you for your help in advance, Roman

Hi Roman,

Thank you for looking at the code.

I've fixed the problem (code below).

I'll get it republished with the correction.

Jonathan

IF OBJECT_ID('[dbo].[STRING_SPLIT]','IF') IS NULL BEGIN

EXEC ('CREATE FUNCTION [dbo].[STRING_SPLIT] () RETURNS TABLE AS RETURN SELECT 1 X')

END

GO

ALTER FUNCTION [dbo].[STRING_SPLIT]

(

@string nvarchar(MAX),

@separator nvarchar(MAX)

)

RETURNS TABLE WITH SCHEMABINDING

AS RETURN

WITH X(N) AS (SELECT 'Table1' FROM (VALUES (0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0)) T(C)),

Y(N) AS (SELECT 'Table2' FROM X A1, X A2, X A3, X A4, X A5, X A6, X A7, X A8) , -- Up to 16^8 = 4 billion

T(N) AS (SELECT TOP(ISNULL(LEN(@string),0)) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) -1 N FROM Y),

Delim(Pos) AS (SELECT t.N FROM T WHERE SUBSTRING(@string, t.N, LEN(@separator+'x')-1) LIKE @separator OR t.N = 0),

Separated(value) AS (SELECT SUBSTRING(@string, d.Pos + CASE WHEN Pos=0 THEN 1 ELSE LEN(@separator+'x')-1 END , LEAD(d.Pos,1,2147483647) OVER (ORDER BY (SELECT NULL)) - CASE WHEN d.Pos=0 THEN 2-LEN(@separator+'x') ELSE d.Pos END - (LEN(@separator+'x')-1))

FROM Delim d

WHERE @string IS NOT NULL)

SELECT s.value

FROM Separated s

WHERE s.value <> @separator

GO -

March 5, 2021 at 4:07 am

Jeff Moden wrote:I'm not sure who or what marked the post by fgrodriguez as spam but I'm reposting it here so that the de-spaminator doesn't delete it.

I'll also state I'm a bit concerned about the possible performance of the code because it's an mTVF rather than an iTVF. That's part of the reason why I wanted to make sure the marked post didn't disappear. It deserves to be tested.

Recursive CTE?

A bit concerned? About the possible performance?

_____________

Code for TallyGenerator -

March 5, 2021 at 2:55 pm

Great, thank you for the correction. Here is another Problem to consider

INSERT INTO @Test SELECT value FROM dbo.STRING_SPLIT('Abc, Ced, Def, ', ', ')gives "Abc", "Ced" and "Def, " (notice whitespace in the laste value). I think ", " should not be present in the last value.

Another catch:

INSERT INTO @Test SELECT value FROM dbo.STRING_SPLIT(', Abc, Ced, Def', ', ')delivers "", "Abc", Ced", Def". I guess the first empty value should not be there.

Cheers,

Roman

-

March 5, 2021 at 6:10 pm

rreimche wrote:Great, thank you for the correction. Here is another Problem to consider

INSERT INTO @Test SELECT value FROM dbo.STRING_SPLIT('Abc, Ced, Def, ', ', ')gives "Abc", "Ced" and "Def, " (notice whitespace in the laste value). I think ", " should not be present in the last value.

Another catch:

INSERT INTO @Test SELECT value FROM dbo.STRING_SPLIT(', Abc, Ced, Def', ', ')delivers "", "Abc", Ced", Def". I guess the first empty value should not be there.

Cheers, Roman

That's also a feature of the SPLIT_STRING function in SQL Server:

/* This version */

SELECT value FROM dbo.STRING_SPLIT(',Abc,Ced,,Def', ',');

/* SQL Server version */

SELECT value FROM STRING_SPLIT(',Abc,Ced,,Def', ',');

If you want to suppress them you could write a WHERE clause:

SELECT value FROM dbo.STRING_SPLIT(',Abc,Ced,,Def', ',');

WHERE value <> '' -

March 5, 2021 at 6:22 pm

I've put an LTRIM on the output to make it the same as the SQL Server version, also it was searching the string for the delimiter one short of the length of the string so the final delimiter wasn't being found when the string was terminated with a delimiter. I've fixed that in the following code:

IF OBJECT_ID('[dbo].[STRING_SPLIT]','IF') IS NULL BEGIN

EXEC ('CREATE FUNCTION [dbo].[STRING_SPLIT] () RETURNS TABLE AS RETURN SELECT 1 X')

END

GO

ALTER FUNCTION [dbo].[STRING_SPLIT]

(

@string nvarchar(MAX),

@separator nvarchar(MAX)

)

RETURNS TABLE WITH SCHEMABINDING

AS RETURN

WITH X(N) AS (SELECT 'Table1' FROM (VALUES (0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0),(0)) T(C)),

Y(N) AS (SELECT 'Table2' FROM X A1, X A2, X A3, X A4, X A5, X A6, X A7, X A8) , -- Up to 16^8 = 4 billion

T(N) AS (SELECT TOP(ISNULL(LEN(@string),0)+1) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) -1 N FROM Y),

Delim(Pos) AS (SELECT t.N FROM T WHERE SUBSTRING(@string, t.N, LEN(@separator+'x')-1) LIKE @separator OR t.N = 0),

Separated(value,position) AS (SELECT SUBSTRING(@string, d.Pos + CASE WHEN Pos=0 THEN 1 ELSE LEN(@separator+'x')-1 END , LEAD(d.Pos,1,2147483647) OVER (ORDER BY (SELECT NULL)) - CASE WHEN d.Pos=0 THEN 2-LEN(@separator+'x') ELSE d.Pos END - (LEN(@separator+'x')-1)),

d.pos

FROM Delim d

WHERE @string IS NOT NULL)

SELECT LTRIM(s.value) value,

ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) position

FROM Separated s

WHERE s.value <> @separator;

GOI've also added a column called "position" so the position of the column in the string can be identified.

-

March 5, 2021 at 6:23 pm

Now that's a matter of opinion. I, for one, want it to return empty or null strings for any element that exists but isn't filled. One of the things I test with is passing 3 commas and nothing else. If it doesn't return 4 empty string or 4 nulls, it's broken, IMHO.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 5, 2021 at 8:44 pm

My Splitter function takes 3 parameters - data, delimiter and ValueForBlanks - typically empty string or NULL, but the value '[[DELETE]]' then completely removes any blank entries

-

March 5, 2021 at 8:56 pm

Kristen-173977 wrote:My Splitter function takes 3 parameters - data, delimiter and ValueForBlanks - typically empty string or NULL, but the value '[[DELETE]]' then completely removes any blank entries

Or you could just add a WHERE clause to filter them out as it's a table valued function.

-

March 6, 2021 at 7:04 am

Jonathan AC Roberts wrote:Or you could just add a WHERE clause to filter them out as it's a table valued function.

Yes ... but my Splitter returns an Item No, and if I use WHERE afterwards I have gaps in the Item No, so I would then also have to add code to solve that, every time I have that requirement, whereas if I get my Splitter to remove them that function returns contiguous Item Nos.

I still have the option of the Splitter not removing them, so I can have the data "raw" and post-process if that is more appropriate.

Viewing 15 posts - 16 through 30 (of 40 total)

You must be logged in to reply to this topic. Login to reply