Whilst working at a client site, I hit upon one of those issues that you are not sure if that this is something entirely new or a bug or a gap in your knowledge.

The client had a large query that needed optimizing. The query itself looked pretty good, no udfs, UNION ALL were used rather than UNION, most of the predicates were sargable other than one or two minor ones. There were a few extra joins that could be eradicated and having fixed up the query I then started to dive into the plan.

I could see all manor of spills in the hash joins and the sort operations, these are caused when SQL Server has not reserved enough memory and has to write to tempdb. A VERY expensive operation that is generally avoidable. These, however, are a symptom of a bad row estimation somewhere else, and when that bad estimation is combined with other estimation errors, chaos can ensue.

Working my way back down the plan, I found the cause, and the more I thought about it the more i came convinced that the optimizer could be making a much more intelligent choice.

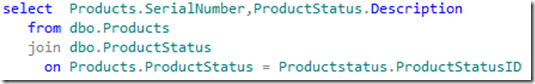

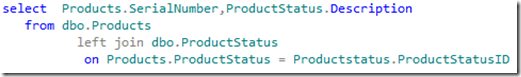

First step is to reproduce and I was able to simplify the query down a single join between two tables, Product and ProductStatus, from a business point of view, quite fundamental, find the status of particular products to show if ‘active’ ,’inactive’ or whatever. The query itself couldn’t be any simpler

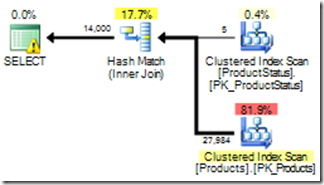

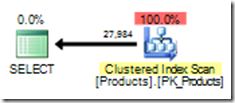

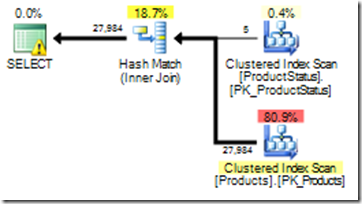

The estimated plan looked like this:

Ignore the “!” warning which is a missing index, but notice that Products has 27,984 rows and the join outputs 14,000.

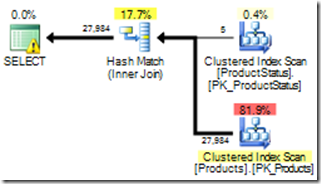

The actual plan shows how bad that estimation of 14,000 is :

So every row in Products has a corresponding row in ProductStatus. This is unsurprising, in fact it is guaranteed, there is a trusted FK relationship between the two columns. There is no way that the actual output of the join can be different from the input.

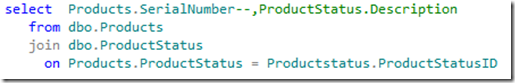

The optimizer is already partly aware of the foreign key meta data, and that can be seen in the simplifiction stage. If we drop the Description column from the query:

the join to ProductStatus is optimized out.

It serves no purpose to the query, there is no data required from the table and the optimizer knows that the FK will guarantee that a matching row will exist so it has been removed.

Surely the same should be applied to the row estimations in the initial example, right ? If you think so, please upvote this connect item.

So what are our options in fixing this error ?

Simply changing the join to a left join will cause the optimizer to think that we could allow the rows not to exist.

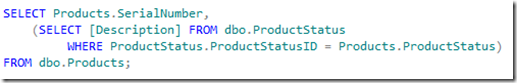

or a subselect would also work

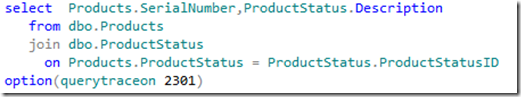

However, this is a client site, Im not able to change each and every query where this join takes place but there is a more global switch that will fix this error, TraceFlag 2301.

This is described as, perhaps loosely, “Enable advanced decision support optimizations”.

We can test this on the original query in isolation by using the “QueryTraceOn” option

and lo and behold our estimated plan now has the ‘correct’ estimation.

Many thanks goes to Paul White (b|t) for his help and keeping me sane through this ![]()