I recently configured a backup job to run on the principal server of a mirrored pair. The idea was to back up the files to a local folder and then have DFSR (Distributed File System Replication) replicate the backups to the same path on the mirror server. All the transaction log backups (.trn) and any log files that the backup job wrote were replicated successfully, but all .bak files in the same folder structure were ignored. I verified this by renaming a .bak file on the principal to .backmeup and watching it appear on the mirror server.

Why ignore .bak files?

I started to consider adding a cmd job step (after the backup step) that renamed the .bak files to .BTFU, but a quick search showed that DFSR replicated folders have a default file filter. It excludes the following files:

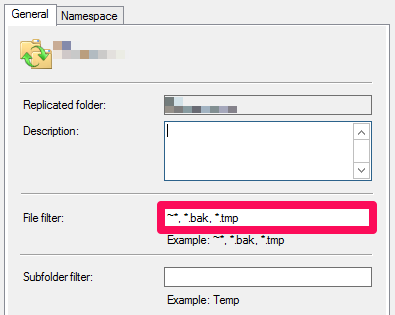

- Files starting with ~ (temporary files created by programs like Word)

- Files with a .tmp extension

- Files with a .bak extension
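
To see how a filter like this behaves, here is a minimal Python sketch that approximates the matching with the standard `fnmatch` module. The pattern list mirrors the default filter described above. The function name `is_filtered` and the sample file names are made up for illustration, and the case-insensitive comparison is an assumption, so DFSR's own matching may differ in edge cases.

```python
from fnmatch import fnmatchcase

# Patterns mirroring the default DFSR file filter described above.
DEFAULT_FILTER = ["~*", "*.bak", "*.tmp"]


def is_filtered(file_name, patterns=DEFAULT_FILTER):
    """Return True if file_name matches any exclusion pattern.

    Assumption: matching ignores case, so both sides are lowercased
    before the wildcard comparison.
    """
    name = file_name.lower()
    return any(fnmatchcase(name, p.lower()) for p in patterns)


if __name__ == "__main__":
    # Hypothetical file names, similar to the ones in this post.
    samples = ["Full_Backup.bak", "Log_Backup.trn",
               "Full_Backup.backmeup", "~$notes.docx"]
    for sample in samples:
        print(sample, "->", "skipped" if is_filtered(sample) else "replicated")
```

This matches what I saw: the .trn file and the renamed .backmeup file pass the filter, while anything ending in .bak is skipped.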

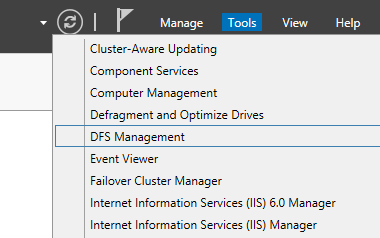

To alter this filter, go to Administrative Tools and then DFS Management.

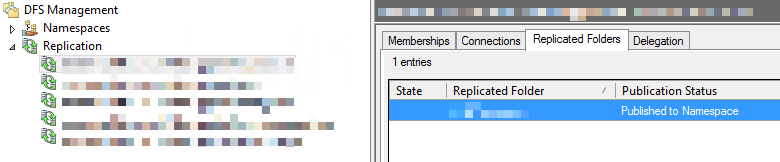

In the pane on the left, click Replication and then click the replication group that contains the replicated folder that is ignoring .bak files. On the Replicated Folders tab, right-click the folder and click Properties.

The existing filter appears in the File filter box of the Properties dialog. Remove the *.bak entry from the comma-delimited list and .bak files will now replicate.
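
The edit itself is a manual step in the dialog, but the effect on the filter string can be sketched in a few lines of Python. This does not touch DFSR at all; it only shows the before and after text. The helper name `remove_pattern` is hypothetical, and the starting string `~*, *.bak, *.tmp` is assumed from the list of default exclusions above.

```python
def remove_pattern(filter_string, pattern):
    """Drop one pattern from a comma-delimited filter string."""
    # Split on commas and trim the whitespace around each entry.
    entries = [e.strip() for e in filter_string.split(",") if e.strip()]
    # Keep every entry except the one being removed (ignoring case).
    kept = [e for e in entries if e.lower() != pattern.lower()]
    return ", ".join(kept)


if __name__ == "__main__":
    # Assumed default filter string, based on the exclusions listed earlier.
    print(remove_pattern("~*, *.bak, *.tmp", "*.bak"))  # prints: ~*, *.tmp
```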

In my case, on a very small setup, the replication was almost instant. In bigger setups it may take a while for the other members to pull the new filter from their local DCs and replicate the files. If you’re on Windows Server 2012 R2, you can use the Update-DfsrConfigurationFromAD cmdlet to pull the new filter from the DC immediately.

Is this a good idea?

Well, to answer the question posed earlier, “Why ignore .bak files?”, I expect .bak is in the default filter for two reasons:

- Backups tend to be large files, which can cause issues with default configurations of DFSR staging folders.

- DFSR uses Remote Differential Compression to replicate only the parts of files that change, saving time and bandwidth. Compressed or encrypted files (both common with .bak files) tend to change almost entirely between versions, which leaves Remote Differential Compression with little to reuse.

Backup replication could also be achieved with a PowerShell job that copies the files. This job could use background jobs or workflows to send many files in parallel, and it could even call Robocopy with the /MT switch for multithreaded copying. It wouldn’t be two-way like DFSR, but you can work on that.
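
To illustrate the parallel, one-way copy idea, here is a rough sketch. Note that it is written in Python rather than PowerShell and uses a thread pool instead of background jobs or Robocopy, so treat it as an outline of the approach rather than the job itself. The function name `copy_backups`, its parameters and the default of four workers are all assumptions made for the example.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def copy_backups(source_dir, target_dir, pattern="*.bak", workers=4):
    """Copy files matching pattern from source_dir to target_dir in parallel.

    Returns the sorted list of file names copied. This is one-way only:
    nothing is deleted from the target and nothing is copied back.
    """
    source = Path(source_dir)
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)

    # Only top-level files are considered; subfolders are not walked.
    files = [p for p in source.glob(pattern) if p.is_file()]

    def copy_one(path):
        # copy2 also preserves timestamps where the platform allows it.
        shutil.copy2(path, target / path.name)
        return path.name

    # Each file is copied on its own worker thread.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sorted(pool.map(copy_one, files))
```

A call such as `copy_backups(r"D:\Backups", r"\\MIRROR\Backups")` would copy the .bak files across, where both paths are placeholders for your own backup folder and mirror share.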

Windows Server 2016 brings us Storage Replica. This could be a better solution if you are using locally attached drives for your backups.

This short post acts as an interlude for my series on SQL Server and Continuous Integration. Part 4 of the series will cover the installation and configuration of ReadyRoll.

The post The Non-Replicated Backup Mystery appeared first on The Database Avenger.