USE MydbGOCREATE TABLE LoginfoHeap(

id INT)

GOCREATE TABLE LoginfoCI(

id INT PRIMARY KEY)

INSERT INTO LoginfoHeap VALUES(1),(2)INSERT INTO LoginfoCI VALUES(2),(4)GODELETE FROM LoginfoHeap DELETE FROM LoginfoCIGOCHECKPOINTGOSELECT [Current LSN], Operation ,Context ,[Transaction ID],[Previous LSN],AllocUnitName,[Previous Page LSN],[Page ID],[XACT ID],SUSER_SNAME(CONVERT(VARBINARY,[Transaction SID])) AS 'Login',[Begin Time],[End Time]FROM sys.fn_dblog (NULL, NULL)

Now we will insert,update and delete records to these tables through two session

---SESSION I

BEGIN TRAN

INSERT INTO LoginfoCI VALUES(2)

--SESSION 2

BEGIN TRAN

INSERT INTO LoginfoHeap VALUES(1),(2)

---SESSION I

INSERT INTO LoginfoCI VALUES(4)

--SESSION 2

UPDATE LoginfoHeap SET id =8 WHERE id=1

---SESSION I

UPDATE LoginfoCI SET id =6 WHERE id=2

--SESSION 2

DELETE FROM LoginfoHeap WHERE id=2

---SESSION I

DELETE FROM LoginfoCI WHERE id=4

SELECT * FROM sys.dm_tran_current_transaction

COMMIT

--SESSION 2

SELECT * FROM sys.dm_tran_current_transaction

COMMIT

[Current LSN],

Operation ,

Context ,

[Transaction ID],

[Previous LSN],

AllocUnitName,

[Previous Page LSN],

[Page ID],[XACT ID],

SUSER_SNAME(CONVERT(VARBINARY,[Transaction SID])) AS 'Login',

[Begin Time],

[End Time]

FROM sys.fn_dblog (NULL, NULL)

WHERE [Transaction ID] IN

(

SELECT [Transaction ID] FROM sys.fn_dblog (NULL, NULL)

WHERE [XACT ID] IN (856960,856981)

)

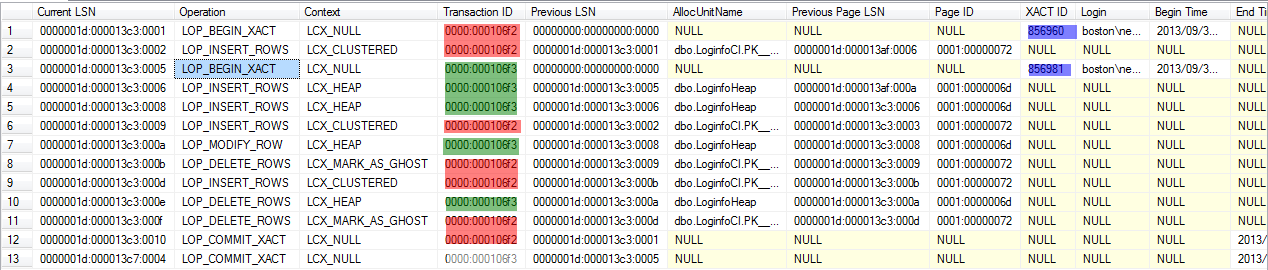

The values 856960 and 856981 are the transaction_id returned from sys.dm_tran_current_transaction.We have filter the output to get only the relevant rows in which we are interested.

In our script, we have opened two transaction and all our transaction log entries are grouped to

one of these transaction_id marked in red and green.Let us analyse what we did and how it is captured in the transaction log.

In the session 1, we have started the transaction and inserted a single record.The first records in the output map to the BEGIN TRAN command. This is the starting point of the transaction and created a new transaction_id.The previous LSN column value is 0 as this is the first log entry in this transaction.In the same log records,it stores the XACT_ID,login and transaction start time.The second record represent the insert into the clustered table.The transaction_id is used to group the entries associated with a transaction. The previouse LSN column, is a pointer to the previous log entry in the same transaction which help SQL server to move backwards in case of rollback.Page id column refer the the page number where this LSN made the change.Previous Page LSN column refer the last log sequence number(LSN) which modify this page.When LSN modify a page, it will also update the corresponding LSN number in the page header (m_lsn field in the header. For more detail refer this post)

In the session 2, we have opened another transaction and inserted two records through single insert statement to the heap table. You can map these operations to row number 3,4, and 5 in the transaction log output. Third row represent the Begin tran command. Even if we inserted two records in single insert statement , SQL server recorded two separate entry in the transaction log.

As a next step, in session 1 we have added 1 record to the clustered index table.We can map this operation to the 6th record in the transaction log output.

In the next statement , we have modified a record in heap table through Session 2. You can map this to the 7th record in the transaction log output.If you look into the previous LSN column , it will be current LSN column value of the last record associated with this transaction.

In the same way, as a next statement we have modified a record in the clustered table through session 1. We can map the 8th and 9th records in the transaction log output to the update operation on the clustered table. You might have noticed that, when we modified a record in the heap table, transaction log recorded operation in a single row. Where as the same operation in a clustered table has two record in the transaction log. One for delete and other one for insert. When you modify the clustered index key, SQL server internally delete the existing record and insert a new record. This is because, the record need to be stored in the new location based on the modified value(based on the order of clustered index column). The easiest way for SQL server to achieve this is , delete the existing record and insert it as new records with modified clustered column value.

In the next two statement, we are deleting one record from heap table and clustered table.This can be mapped to the 10th and 11th records in the output.Finally we have issued the commit statement in both sessions.12th and 13th record in the transaction log output can be mapped to the commit operation.The Previous LSN column refer the Current LSN column of corresponding begin tran statement. It will also capture the transaction end time in the End time column.

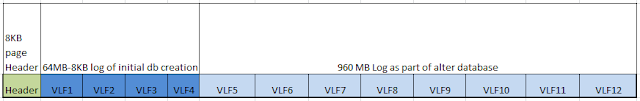

Understanding the VLF(Virtual Log File)

A database can have one or more log file. In general there will be only one log file as there is no performance improvement by having multiple log file. SQL server uses the transaction log in sequential manner.As the data file divided into pages,log files are divided into virtual log file(VLF).The size of the VLFs in a log file may not be in equal size. SQL server decide the size and number of VLF in a log file based on the size of the log file growth as given below.

USE MASTER;

GO

CREATE DATABASE Mydb

ON

( NAME = MyDb_dat, FILENAME = 'D:\MyDb\Mydb.mdf',

SIZE = 10MB, MAXSIZE = 3072MB, FILEGROWTH = 5MB )

LOG ON ( NAME = MyDb_log,FILENAME = 'D:\MyDb\MyDB.ldf',

SIZE = 64MB, MAXSIZE = 2048MB, FILEGROWTH = 5MB ) ;GO

ALTER DATABASE Mydb

MODIFY FILE ( NAME = MyDb_Log,FILENAME = 'D:\MyDb\MyDB.ldf', SIZE = 1024MB)

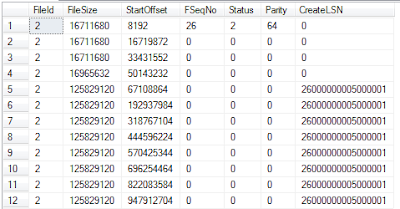

Now Let us see how many VLF got created. To find out the number of VLF in database log file, we can make use of DBCC Loginfo.

DBCC loginfo('mydb')

FileId: This is the file id of the log file and will be same for all 12 records as we have only one log file.If we have multiple log file , we can multiple numbers here

If you liked this post, do like my page on FaceBook