This will probably be a multiple stage discussion. The notes I’m providing today were sparked off of the content located in the fantastic learning resource provided by Brent Ozar Unlimited. Most of the core material based on a training class entitled: Table Variables, Temp Tables, and OPTION RECOMPILE Credit for some of the core discussion in here goes to them! I’ve branched off in discussing the recompilation and statistics issue a little more based on my interest in the topic.

I highly recommend this resource, and taking advantage of the current discount offering by reviewing their fantastic weekly posting of Office Hours.

Temp Table

Pros – Has statistics

Cons – Can allow behavior that requires recompilation

Table Variable

Cons

- Estimate doesn’t account for actual rows. This can lead to incredibly inefficient query plans.

- SQL <=2012 = Estimate of 1 row

- SQL 2014 Estimate 100 rows (article on sqlmag

verifies that it was (backported to sql 2012) with appropriate service pack). small concession that has probably has minimal impact for the cases of large number of variance in rows

Pros

- Their behavior restrictions can ensure plan recompilation is minimized.

- Table variables don’t permit these types of alterations, so they can provide benefits by limiting the behavior that can cause a recompilation. This benefit is lost once the statistics needed in the table outweight the benefit of worrying about recompilation. This is why unless dealing with a small amount of rows (let’s say <=100) that it is typically better to use a temp table for stored procedures. MSDN validates.

Good usage case

- If dealing with a small result set being called by the application continually, then in this case a table variable might be a good fit for ensuring optimized performance, though with the proper coding and diligence to ensure no DDL changes a temp table would perform exactly the same (open to any discussion that contradicts this)

Table variables provide the following benefits for small-scale queries that have query plans that do not change and when recompilation concerns are dominant…

Table variables are not supported in the SQL Server optimizer’s cost-based reasoning model. Therefore, they should not be used when cost-based choices are required to achieve an efficient query plan. Temporary tables are preferred when cost-based choices are required. This typically includes queries with joins, parallelism decisions, and index selection choices…

Queries that modify table variables do not generate parallel query execution plans. Performance can be affected when very large table variables, or table variables in complex queries, are modified. In these situations, consider using temporary tables instead.

Transactions involving table variables last only for the duration of an update on the table variable. Therefore, table variables require less locking and logging resources *(Probably worth a post later on this!)

option(recompile)

Only want to use in specific scenarios. Basically, from various sources, I’ve always heard that these explicit query hints should typically be designed for edge cases or specific scenarios that are tested, documented, and known to scale appropriately. Anytime you introduce hints, you are taking control from the query analyzer and indicating you know best…. This might be the case, but test test test!

Use When

- Not being used often. Brent indicates best to limit this on queries that run more than 1 time in 5 minutes as a guideline. Otherwise, this can tie up CPU resources. This is useful as I had never heard any type of guideline as starting point to gauge this.

- Unpredictable amount of results returned

Pros

- Optimized query plan. For example, a small result set might return an efficient plan with nested loops, but once the result set scales up higher a different join type might be more optimal for returning the larger result set. This can be a balancing act, and another case for breaking up a very large view (20 tables in my case) into smaller pieces that were able to greatly improve the execution plan generation.

- Will run better when results of execution plan may differ greatly, with parameters for example. This again is a balancing act. I have seen the over engineering of a stored procedure that tries to handle so many possible variables that creating an efficient execution plan, much less reading the code can be difficult! I’d personally say as a starting point that maybe the procedure might be considered for refactoring once you start adding in so many parameters that you begin to deal with tremendous variance in the results as a normal occurrence.

Cons

- Lose DM Exec query stats.

- The stats get blown away each time the query is run, meaning your query statistics are blown away each time, so you can track over time. (when Brent mentioned this it helped connect some dots! This could be a critical component if you are wanting to gauge performance issues over time and don’t know why these queries aren’t showing up with full history!)

- Can have tremendous impact on CPU

- every single execution is recompiling the entire execution plan, which requires CPU usage. This can entail a big hit on server performance as instead of benefiting from cached plans in the buffer, SQL server is now having to reevaluate the query execution plan each time. Besides the storage engine, this can be a tremendous cost.

- Set statistics time can provide insight into the milliseconds of CPU time to parse and compile the query

impact of option(recompile) when joins involved

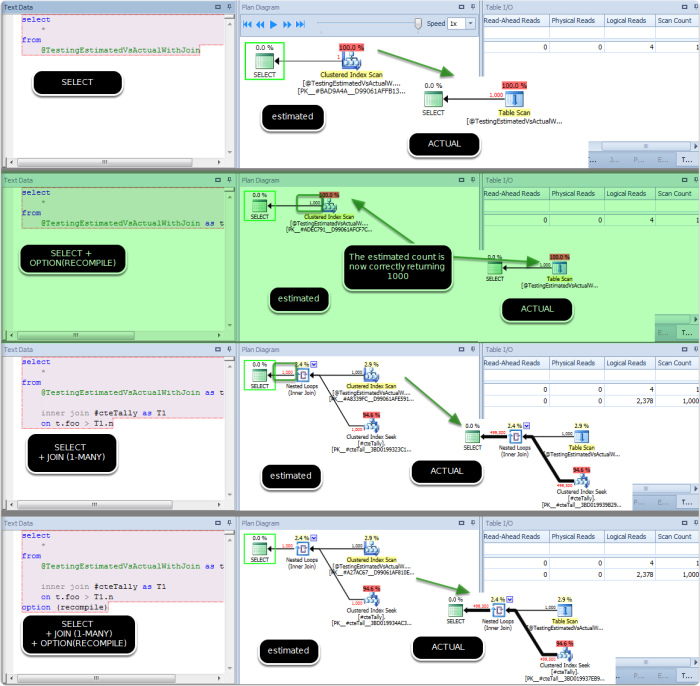

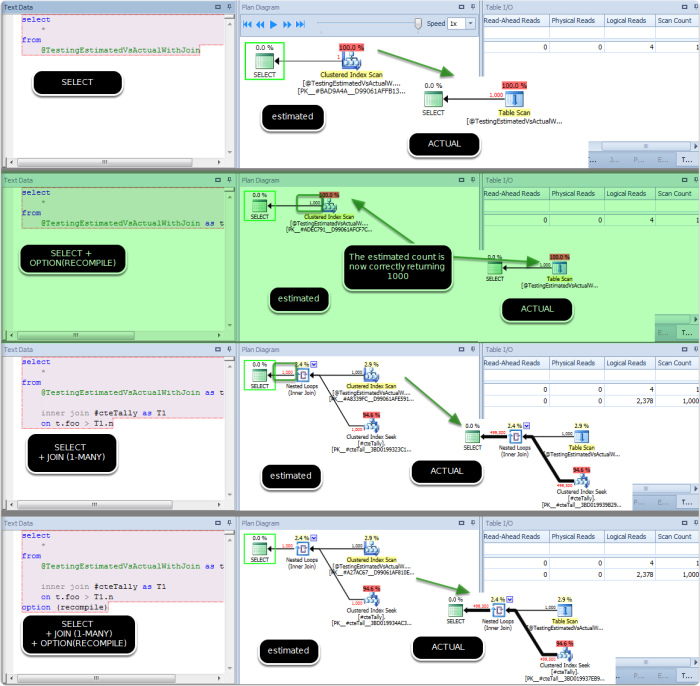

It is important to note that the recompilation of a table variable in a query only partially helps.

If the results are purely being returned in a select, then the correct number of rows will be estimated. However, since we are sql guys… dealing with sets… (death to RBAR)…. the likely usage case many times is going to be that we are returning a table variable that will join to other tables to return….

This entails a big glaring issue with option(recompile) and table variables.

The estimated rows won’t be accounted for the the table join. Instead, the recompile will only provide back the count of the rows in the table variable, without accounting for the possible 1 to many. This is important to remember as option(recompile) added to a table variable with a 1-many won’t fix the correct statistics estimation issue!

The usage pattern that would benefit from option(recompile) on a temp table is the highlighted on, a simple select from the table variable.

closing thoughts

Myth buster

- Temp tables and table variables both are going to hit disk if needed. Note that the common misconception of table variables being in memory and temp tables being on disk is inaccurate. Both can hit disk/tempdb.

- However, temp tables allow some behaviors that can cause plan recompilation (incurring more CPU overhead each time). This could occur with alter table statements that add indexes, or other DDL type statements that alter the structure. Note: additional row changes that change a large number of rows also can cause recompilation on the temp table.

To summarize these ramblings….

- be careful with table variables

- don’t throw option(recompile) on everything as it can impact scalability

- when using temp tables, be careful to design DDL statements at the beginning to avoid unneeded recompilation

- probably just apply the logic Brent recommends…. Use temp tables mostly as consistency is key!

Script for testing

Here is the testing script I was working with. If you find anything wrong or needing clarification, please chime in!