I’ve been working on a project where I use Azure Data Factory to retrieve data from the Azure Log Analytics API. The query language used by Log Analytics is Kusto Query Language (KQL). If you know T-SQL, a lot of the concepts translate to KQL. Here’s an example T-SQL query and what it might look like in KQL.

--T-SQL: SELECT * FROM dbo.AzureDiagnostics WHERE TimeGenerated BETWEEN '2020-12-15' AND '2020-12-16' AND database_name_s = 'mydatabasename'

//KQL:

AzureDiagnostics

| where TimeGenerated between(datetime('2020-12-15') .. datetime('2020-12-16'))

| where database_name_s == 'mydatabasename'

For this project, we have several Azure SQL Databases configured to send logs and metrics to a Log Analytics workspace. You can execute KQL queries against the workspace in the Log Analytics user interface in the Azure Portal, a notebook in Azure Data Studio, or directly through the API. The resulting format of the data downloaded from the API leaves something to be desired (it’s like someone shoved a CSV inside a JSON document), but it’s usable after a bit of parsing based upon column position. Just be sure your KQL query explicitly states the columns and their order (this can be done using the project operator).
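To make the "CSV inside a JSON document" shape concrete, here is a minimal Python sketch of parsing a response by column position. The sample payload is made up for illustration, and the function name `rows_as_dicts` is hypothetical; it assumes the response carries a `tables` array whose first entry holds `columns` and `rows`.

```python
import json

# Made-up sample in the shape the API returns: column metadata in one list,
# row values in positional arrays (the "CSV inside JSON" part).
sample_response = """
{
  "tables": [
    {
      "name": "PrimaryResult",
      "columns": [
        {"name": "TimeGenerated", "type": "datetime"},
        {"name": "Resource", "type": "string"},
        {"name": "Total", "type": "real"}
      ],
      "rows": [
        ["2020-12-15T00:00:00Z", "MYDATABASENAME", 12.5],
        ["2020-12-15T00:05:00Z", "MYDATABASENAME", 14.0]
      ]
    }
  ]
}
"""


def rows_as_dicts(response_text: str) -> list[dict]:
    """Pair each positional row value with its column name."""
    payload = json.loads(response_text)
    table = payload["tables"][0]  # assumes a single result table
    names = [column["name"] for column in table["columns"]]
    return [dict(zip(names, row)) for row in table["rows"]]


records = rows_as_dicts(sample_response)
print(records[0]["Resource"], records[0]["Total"])
```

Because the values are matched purely by position, a query that pins the column list with project keeps this mapping stable from run to run.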

You can use an Azure Data Factory copy activity to retrieve the results of a KQL query and land them in an Azure Storage account. You must first execute a web activity to get a bearer token, which gives you the authorization to execute the query.

I had to create an app registration in Azure Active Directory for the web activity to get the bearer token. The web activity should perform a POST to the following url (with your domain populated and without the quotes): "https://login.microsoftonline.com/[your domain]/oauth2/token"

Make sure you have added the appropriate header of Content-Type: application/x-www-form-urlencoded. The body should contain your service principal information and identify the resource as "resource=https://api.loganalytics.io". For more information about this step, see the API documentation.
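As a rough sketch of what the web activity sends, the Python below assembles the same URL, header, and form-encoded body without making a network call. The function name and the placeholder tenant, client ID, and secret are hypothetical, and the sketch assumes the service principal uses the client credentials grant.

```python
from urllib.parse import urlencode


def build_token_request(tenant: str, client_id: str, client_secret: str):
    """Return the URL, headers, and body for the bearer token POST."""
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/token"
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    # Assumption: client credentials grant for the app registration.
    body = urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            "resource": "https://api.loganalytics.io",
        }
    )
    return url, headers, body


# Placeholder values only; real secrets belong in a vault, not in code.
token_url, token_headers, token_body = build_token_request(
    "contoso.onmicrosoft.com", "my-client-id", "my-client-secret"
)
print(token_url)
print(token_body)
```

Note that `urlencode` percent-encodes the resource URL, which is the form-encoded equivalent of the plain value shown above.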

Data Factory Copy Activity

The source of the copy activity uses the REST connector. The base url is set to "https://api.loganalytics.io/v1/workspaces/[workspace ID]/" (with your workspace ID populated and without the quotes). Authentication is set to Anonymous. Below is my source dataset for the copy activity. Notice that the relative url is set to “query”.

![Connection properties of a dataset in Azure Data Factory. The base url points to https://api.loganalytics.io/v1/workspaces/[workspaceid] with the workspace ID not shown. The relative url contains the string "query".](https://datasavvy.files.wordpress.com/2020/12/image-2.png?w=1024)

The Source properties of the copy activity should reference this REST dataset. The request method should be POST, and the KQL query should be placed in the request body (more on this below).

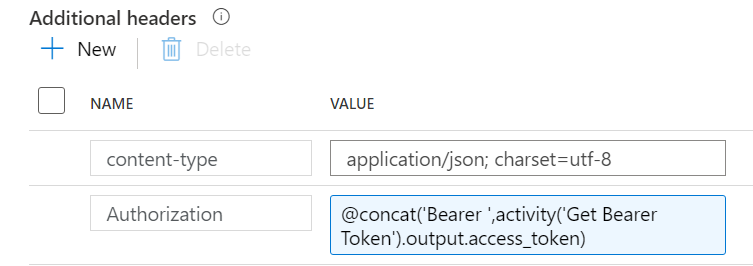

Two additional headers need to be added in the Source properties.

The Authorization header should pass a string formatted as “Bearer [Auth Token]” (with a space between the string “Bearer” and the token). The example above retrieves the token from the web activity that executes before the copy activity in the pipeline. Make sure you are securing your inputs and outputs so your secrets and tokens are not being logged in Data Factory. This option is currently found on the General properties of each activity.
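For reference, this is roughly what the copy activity's request looks like when assembled by hand. It is a minimal Python sketch with a hypothetical function name and placeholder values; the Content-Type of application/json is my assumption for the JSON request body, not something taken from the dataset shown above.

```python
def build_query_call(workspace_id: str, access_token: str) -> tuple[str, dict[str, str]]:
    """Return the query URL and headers for a Log Analytics API call."""
    # Base URL plus the relative URL "query" from the REST dataset.
    url = f"https://api.loganalytics.io/v1/workspaces/{workspace_id}/query"
    headers = {
        # One space between "Bearer" and the token.
        "Authorization": f"Bearer {access_token}",
        # Assumption: the request body is JSON, so it is declared as such.
        "Content-Type": "application/json",
    }
    return url, headers


# Placeholder workspace ID and token for illustration.
query_url, query_headers = build_query_call(
    "00000000-0000-0000-0000-000000000000", "example-token"
)
print(query_url)
print(query_headers["Authorization"])
```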

Embedding a KQL Query in the Copy Activity

You must pass the KQL query to the API as a JSON string. But this string is already inside the JSON created by Data Factory. Data Factory is a bit picky in how you enter the query. Here is an example of how to populate the request body in the copy activity.

{

"query": "AzureDiagnostics | where TimeGenerated between(datetime('2020-12-15') .. datetime('2020-12-16')) | where database_name_s == 'mydatabasename'"

}

Note that the curly braces are on separate lines, but the query must be on one line. So where I had my query spread across 3 lines in the Log Analytics user interface as shown at the beginning of this post, I have to delete the line breaks for the query to work in Data Factory.

The other thing to note is that I am using single quotes to contain string literals. KQL supports either single or double quotes to encode string literals. But using double quotes in your KQL and then putting that inside the double quotes in the request body in ADF leads to errors and frustration (ask me how I know). So make it easy on yourself and use single quotes for any string literals in your KQL query.
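If you keep your queries in a multi-line form while developing them, a small helper can apply both rules before you paste the result into Data Factory. This is a sketch with a hypothetical function name, not part of my pipeline: it joins the lines with single spaces and refuses any query that contains double quotes.

```python
import json


def to_request_body(kql: str) -> str:
    """Collapse a multi-line KQL query into a one-line JSON request body."""
    if '"' in kql:
        # Follows the advice above: single quotes only for string literals.
        raise ValueError("Use single quotes for string literals in the KQL query.")
    # Strip each line and join with one space; text within a line is untouched.
    one_line = " ".join(line.strip() for line in kql.splitlines() if line.strip())
    return json.dumps({"query": one_line})


kql = """
AzureDiagnostics
| where TimeGenerated between(datetime('2020-12-15') .. datetime('2020-12-16'))
| where database_name_s == 'mydatabasename'
"""

body = to_request_body(kql)
print(body)
```

The printed body is the same single-line query shown in the request body example, wrapped in the "query" property.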

In my project, we were looping through multiple databases for customized time frames, so my request body is dynamically populated. Below is a request body similar to what I use for my copy activity that retrieves Azure Metrics such as CPU percent and data storage percent. The values come from a lookup activity. In this case, the SQL stored procedure that is executed by the lookup puts the single quotes around the database name so it is returned as ‘mydatabasename’.

{

"query": "AzureMetrics | where TimeGenerated between (datetime(@{item().TimeStart}) .. datetime(@{item().TimeEnd})) | where Resource == @{item().DatabaseName} | project SourceSystem , TimeGenerated , Resource, ResourceGroup , ResourceProvider , SubscriptionId , MetricName , Total , Count , Maximum , Minimum , TimeGrain , UnitName , Type, ResourceId"

}

With dynamically populated queries like the above, string interpolation is your friend. Paul Andrew’s post on variable string interpolation in a REST API body helped me understand this and get my API request to produce the required results.
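To preview what a query looks like after the values are substituted, you can mimic the interpolation locally. The Python sketch below is only an imitation for testing templates: it is not Data Factory's expression engine, the `render` function is hypothetical, the project list is shortened, and it handles only simple `@{item().Name}` tokens.

```python
import re

# Shortened version of the request body query, with the same three tokens.
TEMPLATE = (
    "AzureMetrics | where TimeGenerated between "
    "(datetime(@{item().TimeStart}) .. datetime(@{item().TimeEnd})) "
    "| where Resource == @{item().DatabaseName} "
    "| project TimeGenerated, Resource, MetricName, Total"
)


def render(template: str, item: dict) -> str:
    """Replace each @{item().Name} token with the matching value from item."""
    return re.sub(
        r"@\{item\(\)\.(\w+)\}",
        lambda match: str(item[match.group(1)]),
        template,
    )


# One row as the lookup activity might return it; the database name
# already carries its single quotes, as described above.
lookup_row = {
    "TimeStart": "2020-12-15",
    "TimeEnd": "2020-12-16",
    "DatabaseName": "'MYDATABASENAME'",
}

rendered = render(TEMPLATE, lookup_row)
print(rendered)
```

Seeing the rendered text makes it easier to spot a missing quote or a stray space before the pipeline runs.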

You can do similar things with Data Factory to query the Application Insights API. In fact, this blog post on the subject helped me figure out how to get the Log Analytics data I needed.

Be Aware of API Limits

There are limits to the frequency and amount of data you can pull from the Log Analytics API. As noted in the API documentation:

- Queries cannot return more than 500,000 rows

- Queries cannot return more than 64,000,000 bytes (~61 MiB total data)

- Queries cannot run longer than 10 minutes (3 minutes by default)

If there is a risk that you may hit the limit on rows or bytes, you need to be aware that the Log Analytics API does not return an error in this case. It will return the results up to the limit and then note the “partial query failure” in the result set. As far as I can tell, there is no option for pagination, so you will need to adjust your query to keep it under the limits. My current process uses a Get Metadata activity after the copy activity to check file sizes for anything close to the limit and then breaks that query into smaller chunks and re-executes it.
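The check-and-split logic can be sketched in a few lines of Python. This is an illustration of the idea rather than my actual pipeline: the 90% threshold, the function names, and the choice to split into equal time windows are all assumptions.

```python
from datetime import datetime

MAX_BYTES = 64_000_000  # byte limit quoted from the API documentation


def near_limit(file_size_bytes: int, threshold: float = 0.9) -> bool:
    """Flag a landed file whose size is close to the byte limit."""
    return file_size_bytes >= MAX_BYTES * threshold


def split_window(start: datetime, end: datetime, parts: int) -> list[tuple[datetime, datetime]]:
    """Split [start, end] into equal consecutive time windows."""
    step = (end - start) / parts
    edges = [start + step * i for i in range(parts)] + [end]
    # Adjacent windows share an edge; because between is inclusive on both
    # ends, a row stamped exactly on an edge could appear in two chunks.
    return list(zip(edges[:-1], edges[1:]))


windows = split_window(datetime(2020, 12, 15), datetime(2020, 12, 16), 4)
for window_start, window_end in windows:
    print(window_start.isoformat(), "..", window_end.isoformat())
```

Each resulting window can then be fed back into the request body as a new TimeStart and TimeEnd pair.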

It’s All in the Details

I had a lot of trial and error as I worked my way through populating the request body in the API call and dealing with API limits. I hope this helps you avoid some of the pitfalls.