By Steve Bolton

…………Longer code and substantial performance limitations were the prices we paid in return for greater sophistication with Cook’s Distance, the topic of the last article in this series of amateur self-tutorials on identifying outliers with SQL Server. The same tradeoff was even more conspicuous in this final installment – until I stumbled across a shortcut to coding Mahalanobis Distance that really pulled my bacon out of the fire. The incredibly cool moniker sounds intimidating, but the concepts and code required to implement it are trivial, as long as we sidestep the usual matrix math that ordinarily makes it prohibitively expensive to run on “Big Data”-sized tables. It took quite a while for me to blunder into a suitable workaround, but it was worthwhile, since Mahalanobis Distance merits a special place in the pantheon of outlier detection methods, by virtue of the fact that it is uniquely suited to certain use cases. Like Cook’s Distance, it can be used to find outliers defined by more than one column, which automatically puts both in a league the others surveyed in this series can’t touch. Whereas their competitors are typically limited to flagging values at the extreme low or high end of a single column, Cook’s D and Mahalanobis Distance can sometimes flag unusual two-column combinations in which neither column is at an extreme. The latter, however, can also be extended to more than two columns. Better yet, it also accounts for distortions introduced by variance into the distances between data points, by renormalizing them on a consistent scale that is in many cases equivalent to ordinary Euclidean Distance. Best of all, efficient approximations can be derived through a shortcut that renders all of the complex matrix math irrelevant; since the goal in outlier detection is mainly to serve as an alarm bell to draw attention to specific data points that might warrant human intervention, we can sacrifice a little accuracy in return for astronomical performance gains.

…………For both the cool name and the even cooler multidimensional outlier detection capabilities, we can thank Prasanta Chandra Mahalanobis (1893-1972), who was born in Calcutta at a time when Gandhi, Mother Teresa, distant tech support call centers, Bangladesh and the other things Westerners associate today with the region were still in the far-flung future. He and his grandfather may have acted as moderating influences in Brahmo Samaj, a 19th Century offshoot of Hinduism that has since apparently died out in Bangladesh; later in life he “served as secretary to Rabindranath Tagore, particularly during the latter’s foreign travels.”[i] Some of that polymath’s brilliance must have rubbed off, because Mahalanobis responded to a dilemma he encountered while trying to compare skull sizes by inventing an entirely new measure of similarity, which can be adapted to finding outliers based on how unalike they are. It has many applications, but for our purposes it is most appropriate for finding multidimensional outliers. If you want to find out how unusual a particular value is for a particular column, any of the detection methods presented earlier in this series may suffice, if all of their particular constraints are taken into account – save for Cook’s Distance, which is a comparison between two columns. Mahalanobis Distance takes the multicolumn approach one step further and represents one of the few means available for finding out whether a particular data point is unusual when compared to several columns at the same time.

The Litmus Test: Comparing Outliers to the Shape of Your Data

Think of it this way: instead of measuring the distance of a single data point or mean to a standard deviation or variance, as we do so often in statistics, we’re measuring several variables against an entire multidimensional matrix of multiple columns, as well as the variances, covariances and averages associated with them. These extra columns allow us to compare our data points against a shape that is more geometrically complex than the single center point defined by a simple average or median. That is why Mahalanobis Distance is intimately related to the field of Principal Components Analysis, i.e. the study of various axes that make up a multidimensional dataset. The metric also has distinctive interrelationships with the regression metric known as leverage[ii], the normal distribution (i.e. the bell curve)[iii], Fisher Information and Beta and Chi-Squared distributions[iv] that are still far above my head, but I was able to explain it to myself crudely in this way: the metric measures how many standard deviations[v] a data point is from a set of center points for all of the columns under consideration, which taken together form an ellipsoid[vi] which is transformed into a circle by the constituent mathematical operations. Don’t let the big word ellipsoid fool you, because it’s actually quite obvious that any normal scatter plot of data points will form a cloud in the shape of an irregular curve around the center of the dataset. It’s also obvious that the center will have a more complex shape than when we use a single variable, since if we have three means or medians taken from three columns we could make a triangle out of them, or a four-sided shape like a rectangle from similar measures applied to four columns, and so on; the only difference is that we have to do some internal transformations to the shape, which need not concern us. 
Suppose, for example, that you wanted to discover if a particular sound differs from the others in a set by its pitch; in this case, you could simply use a typical unidimensional outlier detection method that merely examines the values recorded for the pitch. You could get a more complete picture, however, by taking into account other variables like the length of the song it belongs to, the type of instrument that produced it and so on.
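For reference, the crude description above corresponds to the textbook matrix definition sketched below. The symbols are shorthand introduced here for illustration and do not appear in the code in this series: x holds one row’s values for the columns being compared, μ holds the column means and S is the covariance matrix of those columns.

```latex
% Matrix form: distance of a row x from the center of the data
D_M(\mathbf{x}) = \sqrt{(\mathbf{x} - \boldsymbol{\mu})^{\mathsf{T}} \, \mathbf{S}^{-1} \, (\mathbf{x} - \boldsymbol{\mu})}

% With a single column, S is just the variance, so the distance is the
% number of standard deviations between the value and the mean
D_M(x) = \sqrt{\frac{(x - \mu)^2}{\sigma^2}} = \frac{|x - \mu|}{\sigma}
```

The inverse of S is what rescales the ellipsoid into a circle; when S is the identity matrix (uncorrelated columns with unit variance), the expression reduces to ordinary Euclidean distance from the center.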

…………The price of this more subtle and flexible means of outlier detection would be quite high in terms of both performance and code maintenance, if our implementation consisted of translating the standard matrix math notation into T-SQL. I programmed an entire series of T-SQL matrix procedures to do just that, which seemed to perform reasonably well and with greater accuracy than the method in Figure 1 – until I hit the same SQL Server internals barrier I did with Cook’s Distance in the last article. To put it bluntly, we can’t use recursive calls to table-valued parameters to implement this sort of thing, because we’ll hit the internal limit of 32 locking classes rather quickly, leading to “No more lock classes available from transaction” errors. This long-standing limitation is by design, plus there are apparently no plans to change it and no widely publicized workarounds, so that’s pretty much the end of the line (unless factorization methods and similar matrix math workarounds I’m not familiar with might do the trick).

Bypassing the Matrix Math

I had to put Mahalanobis Distance on the back burner for months until I stumbled across a really simple version expressed in ordinary arithmetic notation (summation operators, division symbols, that sort of thing) rather than matrix operations like transposes and inverses. Unfortunately, I can’t remember where I found this particular formula to give adequate credit, but it allowed me to chop at least two lengthy paragraphs out of this article, which I originally included to explain the inner workings of the gigantic matrix math routines I wrote; otherwise, I might’ve set a SQL Server community record for the lengthiest T-SQL sample code ever crammed into a single blog post. Instead, I present Figure 1, which is short enough to be self-explanatory; the format ought to be familiar to anyone who’s been following this series, since it features similar parameter names and dynamic SQL operations. The good news is that the results derived from the Duchenne muscular dystrophy dataset I’ve been using for practice data throughout this series aren’t substantially different from those derived through the matrix math method. There are indeed discrepancies, but this approximation is good enough to get the job done without any noteworthy performance hit at all.

…………Keep in mind that the results of outlier detection methods are rarely fed into other calculations for further refinement, so perfect accuracy is not mandatory as it might be with hypothesis testing or many other statistical applications. The point of all of the T-SQL sample code in this series is to automate the detection of outliers, whereas their handling requires human intervention; all we’re doing is flagging records for further attention, so that experts with domain knowledge can cast trained eyes upon them, looking for relevant patterns or perhaps evidence of data quality problems. The goal is inspection, not perfection. A few years back I read some articles on how the quality of being “good enough” is affecting software economics of late (although I can’t rustle up the citations for those either) and this hack for Mahalanobis Distance serves as a prime example. It’s not as pretty as a complete T-SQL solution that matches the more common matrix formula exactly, but it serves the purposes of end users just as well – or perhaps even better, considering the short code is more easily maintained and the performance is stellar. This sample code runs in about 20 milliseconds on my desktop computer (which could hardly be confused with a real server), compared to 19 for the Cook’s D procedure in the last tutorial. The cool thing is that it scales much better. My implementation of Cook’s D can’t be run at all on the Higgs Boson Dataset I’ve been using to stress-test my code in this series[vii], because the regression stats would have to be recalculated for each of the 11 million rows, so the work grows with the square of the row count and we would need to store 121 trillion regression rows in TempDB. That’s not happening on anyone’s server, let alone my wheezing Frankenstein of a desktop. My Mahalanobis hack ran in a respectable 3:43 on the same Higgs Boson data.
The lesson I learned from coding Mahalanobis and Cook’s Distances in T-SQL is that arithmetic formulas ought to be preferred to ones defined in matrix notation, whenever possible, even if that entails resorting to approximations of this kind. The difficulty consists of finding them, perhaps hidden in the back of some blog post or journal article in the dark corners of the Internet.

Figure 1: Code for the Mahalanobis Distance Procedure

CREATE PROCEDURE Calculations.OutlierDetectionMahalanobisDistanceSP

@Database1 nvarchar(128), @Schema1 nvarchar(128), @Table1 nvarchar(128), @PrimaryKeyName nvarchar(128), @Column1 AS nvarchar(128), @Column2 AS nvarchar(128)

AS

DECLARE @SchemaAndTable1 nvarchar(400),@SQLString nvarchar(max)

SET @SchemaAndTable1 = @Database1 + '.' + @Schema1 + '.' + @Table1

-- the dynamic SQL first stores the sample variance of the first column in @Var1,
-- then divides the squared difference between the two columns by it and takes the square root

SET @SQLString = 'DECLARE @Var1 decimal(38,32)

SELECT @Var1 = Var(CAST(' + @Column1 + ' AS decimal(38,32)))

FROM ' + @SchemaAndTable1 + '

WHERE ' + @Column1 + ' IS NOT NULL AND ' + @Column2 + ' IS NOT NULL

SELECT ' + @PrimaryKeyName + ' AS PrimaryKey, ' + @Column1 + ' AS Value1, ' + @Column2 + ' AS Value2,

Power(Power(' + @Column1 + ' - ' + @Column2 + ', 2) / (@Var1), 0.5) AS MahalanobisDistance

FROM ' + @SchemaAndTable1 + '

WHERE ' + @Column1 + ' IS NOT NULL AND ' + @Column2 + ' IS NOT NULL

ORDER BY MahalanobisDistance DESC'

--SELECT @SQLString -- uncomment this to debug dynamic SQL errors

EXEC (@SQLString)

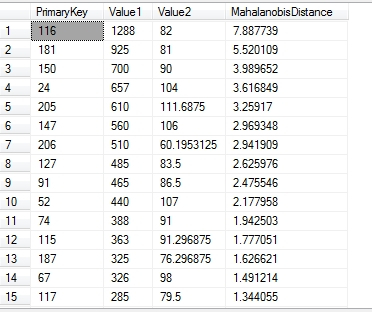

Figure 2: Results for the Mahalanobis Distance Procedure

EXEC Calculations.OutlierDetectionMahalanobisDistanceSP

@Database1 = N'DataMiningProjects',

@Schema1 = N'Health',

@Table1 = N'DuchennesTable',

@PrimaryKeyName = N'ID',

@Column1 = N'CreatineKinase',

@Column2 = N'Hemopexin'
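Written out in ordinary notation, the expression in the MahalanobisDistance column of Figure 1 amounts to the following. The symbols are introduced here for illustration only: x_i and y_i stand for the values of @Column1 and @Column2 in row i, and s_x² is the sample variance of @Column1 that the T-SQL Var function returns over the rows where both columns are non-null.

```latex
d_i = \sqrt{\frac{(x_i - y_i)^2}{s_x^2}} = \frac{|x_i - y_i|}{s_x}
```

In other words, each row is scored by the absolute gap between its two column values, scaled by the standard deviation of the first column. The variance of the second column and the covariance between the two never enter the calculation, which is one plausible place to look when the results drift from the matrix version.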

…………There are still some issues left to be worked out with this approximation of Mahalanobis Distance. I’m not yet sure under which conditions we can expect better accuracy, or conversely greater discrepancies from the matrix version. I know Mahalanobis Distance can also be extended to more than two columns, unlike Cook’s D, but I have yet to engineer a solution. Moreover, I have yet to wrap my head around all of the subtle cases where Mahalanobis is less applicable; for example, it apparently isn’t as appropriate when the relationships are nonlinear.[viii] As I’ve come to realize through reading up on statistical fallacies, these tricky situations can make all of the difference in the world between a mining model that helps end users to make informed decisions and one that can mislead them into disastrous mistakes. Deriving the numbers certainly isn’t easy, but it is even harder to attach them to the wider scaffolding of hard logic in a meaningful way. As many statisticians themselves decry, that is precisely where a lot of science and public discourse go awry. Thankfully, these issues aren’t life-and-death matters in outlier detection, where the goal is to act as an alarm bell to alert decision-makers, rather than as a decision in and of itself; as I’ve pointed out throughout this series ad infinitum, ad nauseam, these detection methods only tell us that a data point is aberrant, but say nothing about why. This is why knee-jerk reactions like simply deleting outliers are not only unwarranted, but can be, and often are, used for deceptive purposes, particularly when money, reputations and sacred cows are on the line. The frequency with which this sort of chicanery still happens is shocking, as I mentioned earlier in the series.
As I’ve learned along the way, perhaps the second-most critical problem dogging outlier detection is the lack of methods capable of dealing with “Big Data”-sized databases, or even the moderately sized tables of a few thousand or a few million rows that we see routinely in SQL Server. Most of them simply choke and a few are even invalid or incalculable. It might be useful to develop new ones more suited to these use cases, or track down and popularize any that might’ve already been published long ago in the math literature.
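One way to probe those discrepancies on small tables is to compute the exact two-column distance without any matrix procedures, since the inverse of a 2×2 covariance matrix has a simple closed form. The sketch below is not the procedure from Figure 1, nor the formula I lost track of; it is only the standard two-column expansion, and the table variable, its columns and the sample values are hypothetical stand-ins.

```sql
-- Hypothetical sample data; the names and values are for illustration only
DECLARE @Sample TABLE (ID int PRIMARY KEY, X float NOT NULL, Y float NOT NULL);

INSERT INTO @Sample (ID, X, Y)
VALUES (1, 2.0, 1.0), (2, 4.0, 3.0), (3, 6.0, 2.0), (4, 8.0, 5.0), (5, 10.0, 4.0);

WITH Stats AS
(
    -- means, sample variances and sample covariance in a single scan;
    -- SQL Server has no built-in covariance aggregate, so it is derived from sums
    SELECT AVG(X) AS MeanX, AVG(Y) AS MeanY,
           VAR(X) AS VarX, VAR(Y) AS VarY,
           (SUM(X * Y) - SUM(X) * SUM(Y) / COUNT(*)) / (COUNT(*) - 1) AS CovXY
    FROM @Sample
)
SELECT S.ID, S.X, S.Y,
       -- squared distance = (dx^2 * VarY - 2 * dx * dy * CovXY + dy^2 * VarX)
       --                    / (VarX * VarY - CovXY^2)
       SQRT((SQUARE(S.X - T.MeanX) * T.VarY
             - 2 * (S.X - T.MeanX) * (S.Y - T.MeanY) * T.CovXY
             + SQUARE(S.Y - T.MeanY) * T.VarX)
            / (T.VarX * T.VarY - SQUARE(T.CovXY))) AS MahalanobisDistance
FROM @Sample AS S
CROSS JOIN Stats AS T
ORDER BY MahalanobisDistance DESC;
```

The denominator is the determinant of the covariance matrix, so the query fails with a divide-by-zero error when the two columns are perfectly correlated or when there are fewer than two rows. The sum-based covariance can also lose precision on very large values, so treat this as a cross-check for modest tables rather than a replacement for Figure 1.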

…………Despite such subtle interpretive risks, Mahalanobis Distance is the only statistic I’m aware of in my minimal experience that can be applied to the case of multidimensional outliers, beyond the two columns Cook’s D is limited to. In this capacity it acts as a dissimilarity measure, but can also be used for the converse purpose as a measure of similarity. Its scale-invariance and status as a “dimensionless quantity,” i.e. a pure number attached to no particular system of unit measurement, apparently have their advantages as well.[ix] It can be used in other capacities in data mining, such as in feature selection in Bayesian analysis.[x] I don’t necessarily understand a good share of the data mining and machine learning literature I’ve read to date, but can tell by the frequency with which it crops up that Mahalanobis Distance has diverse uses beyond mere outlier detection. In a future mistutorial series, I intend to demonstrate just how little I know about several dozen other metrics commonly used in the field of data mining, like Shannon’s Entropy, Bregman’s Divergence, the Akaike Information Criterion, Sørensen’s Similarity Index and the Lyapunov Exponent. I’ll also include a whole segment on probabilistic applications of distance measures, such as the popular Kullback-Leibler Divergence, all of which turned out to be easier to code and explain than Cook’s D and Mahalanobis Distance. It only gets easier from here on in, at least in terms of common distance measures. I have no timetable for finishing the dozens of such metrics I intend to survey (if all goes according to plan, I will be posting data mining code on this blog for many years to come) but by the time I’m finished with the series tentatively titled Information Measurement with SQL Server, it should be easier than ever before to quantify just how much information there is in every table. We’ll also be able to measure such properties as randomness among a column’s values.
Before diving into it, however, I might post a quick wrap-up of this series that includes a makeshift use diagram that classifies all of the outlier detection methods covered in this series, as well as a makeshift method of detecting interstitial outliers that I cooked up to meet some specific use cases, one that allowed me to spot a data quality issue in my own databases. I’ll also take a quick detour into coding goodness-of-fit tests in SQL Server, since these seemed to have quite a bit of overlap with some of the outlier detection methods mentioned earlier in this series. Knowing what probability distribution one is dealing with can sometimes tell us an awful lot about the underlying processes that produced it, so they can be indispensable tools in DIY data mining on SQL Server.

[i] I glanced at the biography at the Wikipedia page “Prasanta Chandra Mahalanobis,” at the web address http://en.wikipedia.org/wiki/Prasanta_Chandra_Mahalanobis

[ii] See the Wikipedia page “Mahalanobis Distance” at http://en.wikipedia.org/wiki/Mahalanobis_distance

[iii] IBID.

[iv] “When an infinite training set is used, the Mahalanobis distance between a pattern measurement vector of dimensionality D and the center of the class it belongs to is distributed as a chi2 with D degrees of freedom. However, the distribution of Mahalanobis distance becomes either Fisher or Beta depending on whether cross validation or resubstitution is used for parameter estimation in finite training sets. The total variation between chi2 and Fisher, as well as between chi2 and Beta, allows us to measure the information loss in high dimensions. The information loss is exploited then to set a lower limit for the correct classification rate achieved by the Bayes classifier that is used in subset feature selection.” Ververidis, D. and Kotropoulos, C., 2009, “Information Loss of the Mahalanobis Distance in High Dimensions: Application to Feature Selection,” pp. 2275-2281 in IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 31, No. 12. See the abstract available at http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=4815271&url=http%3A%2F%2Fieeexplore.ieee.org%2Fiel5%2F34%2F5291213%2F04815271.pdf%3Farnumber%3D4815271

[v] “One interesting feature to note from this figure is that a Mahalanobis distance of 1 unit corresponds to 1 standard deviation along both primary axes of variance.” See the Jenness Enterprises webpage titled “Description” at http://www.jennessent.com/arcview/mahalanobis_description.htm.

[vi] See the post by jjepsuomi titled “Distance of a Test Point from the Center of an Ellipsoid,” published Jun 24, 2013 in the StackExchange Mathematics Forum, as well as the reply by Avitus on the same date. Available online at http://math.stackexchange.com/questions/428064/distance-of-a-test-point-from-the-center-of-an-ellipsoid. Also see jjepsuomi’s post titled “Bottom to Top Explanation of the Mahalanobis Distance,” published June 19, 2013 in the CrossValidated forums. Available online at http://stats.stackexchange.com/questions/62092/bottom-to-top-explanation-of-the-mahalanobis-distance. The folks at CrossValidated gave me some help on Aug. 14, 2014 with these calculations in the thread titled “Order of Matrix Operations in Mahalanobis Calculations,” which can be found at http://stats.stackexchange.com/questions/111871/order-of-matrix-operations-in-mahalanobis-calculations

[vii] I downloaded it from the University of California at Irvine’s Machine Learning Repository a long time ago and converted it into a SQL Server table of about 6 gigabytes.

[viii] See Rosenmai, Peter, 2013, “Using Mahalanobis Distance to Find Outliers,” posted Nov. 25, 2013 at the EurekaStatistics.com web address http://eurekastatistics.com/using-mahalanobis-distance-to-find-outliers

[ix] See the Wikipedia pages “Mahalanobis Distance” and “Scale Invariance” at http://en.wikipedia.org/wiki/Mahalanobis_distance and http://en.wikipedia.org/wiki/Scale_invariance

[x] See Ververidis and Kotropoulos.