By Steve Bolton

…………I originally intended to save Cook’s and Mahalanobis Distances to close out this series not only because the calculations and concepts are more difficult yet worthwhile to grasp, but also in part to serve as a bridge to a future series of tutorials on using information measures in SQL Server, including many other distance measures. The long and short of it is that since I’m learning these topics as I go, I didn’t know what I was getting myself into and ended up finishing almost all of the other distance measures before Cook’s and Mahalanobis. Like the K-Means algorithm I recapped in the last tutorial and had already explained in greater depth in A Rickety Stairway to SQL Server Data Mining, Algorithm 7: Clustering, these two are intimately related to ordinary Euclidean Distance, so how hard could they be? Some other relatively common outlier detection methods are also based on K-Means relatives (like K-Nearest Neighbors) and through them on Euclidean Distance, so I won’t belabor the point by delving into them further here. There are also distance metrics in use today that are based on mind-bending alternative systems like affine, hyperbolic, elliptic and kinematic geometries, in which the ordinary Euclidean laws do not necessarily hold once some of the Euclidean postulates are relaxed; for example, the affine type of non-Euclidean geometry is useful in studying parallel lines, while the hyperbolic version is useful with circles.[1] Some of them are exotic, but others are quite useful in DIY data mining, as we shall see in a whole segment on probabilistic distances (like the Kullback-Leibler Divergence) in that future tutorial series. What tripped me up in the case of Cook’s and Mahalanobis is that the most common versions of both rely on matrix math, which can present some unexpected stumbling blocks in SQL Server. In both cases I had to resort to alternative formulas, after running into performance and accuracy issues using the formulas based on standard notation.
They’re entirely worthwhile to code in T-SQL, because they occupy an important niche in the spectrum of outlier detection methods. All of the methods introduced in this series allow us to automatically flag outliers for further inspection, which can be quite useful for ferreting out data quality issues, finding novel patterns and the like in large databases – where we don’t want to go around deleting or segregating thousands of records without some kind of intelligent examination first. Cook’s and Mahalanobis, however, stand out because they’re among the few standard ways of finding aberrant data points defined by more than one column. This also makes them capable of detecting unusual two-column values in cases where neither column is at the extreme low or high end, although that doesn’t happen often. These outlier detection methods are thus valuable to have on hand, despite the fact that “Cook’s D,” as it is often known, is still prohibitively costly to run on “Big Data”-sized databases, unlike my workaround for Mahalanobis Distance. The “D” may stand for “disappointing,” although it can still be quite useful on small and medium-sized datasets.

…………Cook’s Distance is suitable as the next stepping stone because we can not only draw upon the concept of distances between data points drawn from the K-Means version of the SSDM Clustering algorithm, but also make use of the lessons learned in A Rickety Stairway to SQL Server Data Mining, Algorithm 2: Linear Regression. Like so many other metrics discussed in this series, it made its debut in the American Statistical Association journal Technometrics, in this case in a paper published in 1977 by University of Minnesota statistician R. Dennis Cook, which I was fortunate enough to find a copy of.[2] The underlying equation[3] is not necessarily trivial, but the concepts underpinning it really shouldn’t be too difficult for anyone who can already grasp the ideas underpinning regression and Z-Scores, which have been dealt with in previous posts. I found it helpful to view some of the difference operations performed in Cook’s Distance (and the Mean Square Error (MSE) it depends upon) as a sort of twist on Z-Scores, in which we subtract data points from the data points predicted by a simple regression, rather than data points from the mean as we would in the deviation calculations that Z-Scores depend upon. After deriving each of these differences, we square them and sum them – just as we would in many other outlier detection calculations performed earlier in this tutorial series – then finally multiply by the reciprocal of the count (less the number of fitted parameters, in the regression variant used in Step 4 of Figure 1).[4] The deviation calculation in the dividend of a Z-Score can in fact be seen as a sort of crude distance metric in its own right, in which we are measuring how far each data point is from the center of a dataset, as defined in a mean or median; in the MSE, we are performing a similar distance comparison, except between a predicted value and actual value for a data point. To calculate Cook’s Distance we multiply the MSE by the count of parameters, i.e. the number of fitted regression coefficients, which is fixed at 2 (the slope and intercept) in my code for now. The result forms the divisor in the final calculations, but the dividend is more complex. Instead of comparing a prediction to an actual value, we recalculate a new prediction for each data point in which the regression has been recalculated with that specific data point omitted, then subtract the result from the prediction made for that data point by the full regression model with no points omitted. The dividend is formed by squaring each of those results and summing them, in a process quite similar to the calculation of MSE and Z-Scores. The end result is a measuring stick that we can compare two-column data points against, rather than just one as we have with all of the other outlier detection methods in this series.
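In symbols, the description above corresponds to the textbook form of Cook’s Distance found in the sources cited in the footnotes. The notation below is an assumption chosen for illustration rather than something taken from Figure 1: n is the row count, p the number of fitted parameters, ŷ_j the full-model prediction for row j and ŷ_j(i) the prediction for row j when row i is left out of the regression.

```latex
\begin{aligned}
\mathrm{MSE} &= \frac{1}{n - p} \sum_{j=1}^{n} \left( y_j - \hat{y}_j \right)^2 \\
D_i &= \frac{\sum_{j=1}^{n} \left( \hat{y}_j - \hat{y}_{j(i)} \right)^2}{p \cdot \mathrm{MSE}}
\end{aligned}
```

The divisor is the MSE scaled by the parameter count, and the dividend is the squared shift in predictions caused by omitting row i.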

…………The difficulty in all of this is not the underlying concept, which is sound, but the execution, given that we have to recalculate an entirely new regression model for each data point. The dilemma is analogous to the one we faced in previous articles on the Modified Thompson Tau Test and Chauvenet’s Criterion, where we had to perform many of the computations recursively in order to recalculate the metrics after simulating the deletion of data points. Each of the difference operations we perform below tells us something about how important each record is within the final regression model, rather than how many outliers there might be if that record were eliminated, but it still presents a formidable performance problem. This drawback is magnified by the fact that we can’t use SQL Server’s exciting new windowing functions to solve the recursion issue with sliding windows, as we did in the articles on the Modified Thompson Tau test and Chauvenet’s Criterion. In fact, Step 5 in Figure 1 would be an ideal situation for the EXCLUDE CURRENT ROW clause that Itzik Ben-Gan, the renowned expert on SQL Server windowing functions, wants to see Microsoft add to the T-SQL specification.[5] As I discovered to my horror, you can’t combine existing frame clauses like ROWS BETWEEN UNBOUNDED PRECEDING AND 1 PRECEDING with ROWS BETWEEN 1 FOLLOWING AND UNBOUNDED FOLLOWING in a single window to get the same effect. As a result, I had to perform the recalculations of the regressions in a series of table variables that are much less readable and efficient than an EXCLUDE CURRENT ROW clause might be, albeit more legible than the last remaining alternative, a zillion nested subqueries. I’m not yet fluent enough in T-SQL to say if these table variables cause more of a performance impact than subqueries in contexts like this, but this is one case in which they’re appropriate because readability is at a premium.
It may also be worthwhile to investigate temporary tables as a replacement; so far, this method does seem to be faster than the common table expression (CTE) method I originally tried. I initially programmed an entire series of matrix math functions and stored procedures to derive both Cook’s and Mahalanobis Distances, since both are often defined in terms of matrix math notation, unlike many other distances used for data mining purposes. That method worked well, except that it ran into a brick wall: SQL Server has an internal limitation of 32 locking classes, which often leads to “No more lock classes available from transaction” error messages with recursive table-valued parameters. This is by design and I have yet to see any workarounds posted or any glimmer of hope that Microsoft intends to ameliorate it in future upgrades, which means no matrix math using table-valued parameters for the foreseeable future.
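The self-join pattern that stands in for EXCLUDE CURRENT ROW can be sketched on a toy table variable. This is only a minimal illustration, assuming a hypothetical @Demo table with made-up values; it is not the procedure in Figure 1, just the same idea of joining every row to all of the other rows and aggregating per row.

```sql
-- Hypothetical four-row table; the values are made up for illustration
DECLARE @Demo table (ID int IDENTITY(1,1), Value1 float);

INSERT INTO @Demo (Value1)
VALUES (1), (2), (3), (10);

-- For each row T1, aggregate over every OTHER row T2 (the current row is excluded)
SELECT T1.ID,
       COUNT(*) AS RowsUsed,               -- always the total row count minus 1
       AVG(T2.Value1) AS LeaveOneOutMean,  -- mean with row T1.ID omitted
       STDEV(T2.Value1) AS LeaveOneOutStDev
FROM @Demo AS T1
INNER JOIN @Demo AS T2
    ON T2.ID <> T1.ID
GROUP BY T1.ID
ORDER BY T1.ID;
```

Because each row is paired with all of the others, the join produces n × (n – 1) intermediate rows, which is where the performance cost discussed later comes from.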

…………Yet another issue I ran into was interpreting the notation for Cook’s Distance, which can be arrived at from two different directions: the more popular method seems to be the series of calculations outlined two paragraphs above, but the same results can be had by first calculating an intermediate figure known as Leverage. This can be derived from what is known as a Hat Matrix, which can be easily derived in the course of calculating standard regression figures like MSE, predictions, residuals and the like. Unlike most other regression calculations, which are defined in terms of standard arithmetic operations like division, multiplication, etc., the notation for deriving Leverage is almost always given in terms of matrices, since it’s derived from a Hat Matrix. It took me a lot of digging to find an equivalent expression of Leverage in terms of arithmetic operations rather than matrix math, which I couldn’t use due to the locking issue. It was a bit like trying to climb a mountain, using a map from the other side; I was able to easily code all of the stats in the @RegressionTable in Figure 1, alongside many other common regression figures, but couldn’t tell exactly which of them could be used to derive the Hat Matrix and Leverage from the opposite direction. As usual, the folks at CrossValidated (StackExchange’s statistics and data mining forum) pulled my bacon out of the fire.[6] While forcing myself to learn to code the intermediate building blocks of common mining algorithms in T-SQL, one of the most instructive lessons I’ve learned is that translating notations can be a real stumbling block, one that even professionals encounter. Just consider that a word to the wise, for anyone who tries to acquire the same skills from scratch as I’m attempting to do. Almost all of the steps in Figure 1 revolve around common regression calculations, i.e. intercepts, slopes, covariance and the like, except that fresh regression models are calculated for each row.
The actual Cook’s Distance calculation isn’t performed until Step #6. At that point it was trivial to add a related stat known as DFFITS, which can be converted back and forth from Cook’s D; usually when I’ve seen DFFITS mentioned (in what little literature I’ve read), it’s in conjunction with Cook’s, which is definitely a more popular means of measuring the same quantity.[7] For the dividend, we use the difference between the prediction for each row and the prediction when that row is left out of the model, and for the divisor, we use the standard deviation of the model when that row is omitted, times the square root of the leverage. I also included the StudentizedResidual and the global values for the intercept, slope and the like in the final results, since it was already necessary to calculate them along the way; it is trivial to calculate many other regression-related stats once we’ve derived these table variables, but I’ll omit them for the sake of brevity since they’re not directly germane to Cook’s Distance and DFFITS.
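For reference, the arithmetic (non-matrix) forms of these quantities for a simple one-predictor regression can be written as follows. This is a sketch of the textbook formulas in assumed notation (e_i is the residual y_i – ŷ_i, h_i the leverage and s_(i) the standard error of the model with row i omitted); the variant coded in Figure 1 may differ in its details.

```latex
\begin{aligned}
h_i &= \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2} \\
D_i &= \frac{e_i^2}{p \cdot \mathrm{MSE}} \cdot \frac{h_i}{(1 - h_i)^2} \\
\mathrm{DFFITS}_i &= \frac{\hat{y}_i - \hat{y}_{i(i)}}{s_{(i)} \sqrt{h_i}}
\end{aligned}
```

The second line is the leverage-based route to Cook’s Distance, which has the same shape as the expression in Step #6.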

Figure 1: T-SQL Sample Code for the Cook’s Distance Procedure

CREATE PROCEDURE Calculations.CooksDistanceSP

@Database1 nvarchar(128), @Schema1 nvarchar(128), @Table1 nvarchar(128), @Column1 AS nvarchar(128), @Column2 AS nvarchar(128)

AS

DECLARE @SchemaAndTable1 nvarchar(400),@SQLString1 nvarchar(max),@SQLString2 nvarchar(max)

SET @SchemaAndTable1 = @Database1 + '.' + @Schema1 + '.' + @Table1

SET @SQLString1 = 'DECLARE

@MeanX decimal(38,21),@MeanY decimal(38,21), @StDevX decimal(38,21), @StDevY decimal(38,21), @Count bigint,

@Correlation decimal(38,21),

@Covariance decimal(38,21),

@Slope decimal(38,21),

@Intercept decimal(38,21),

@MeanSquaredError decimal(38,21),

@NumberOfFittedParameters bigint

SET @NumberOfFittedParameters = 2

DECLARE @RegressionTable table

(ID bigint IDENTITY (1,1),

Value1 decimal(38,21),

Value2 decimal(38,21),

LocalSum bigint,

LocalMean1 decimal(38,21),

LocalMean2 decimal(38,21),

LocalStDev1 decimal(38,21),

LocalStDev2 decimal(38,21),

LocalCovariance decimal(38,21),

LocalCorrelation decimal(38,21),

LocalSlope AS LocalCorrelation * (LocalStDev2 / LocalStDev1),

LocalIntercept decimal(38,21),

PredictedValue decimal(38,21),

Leverage decimal(38,21),

AdjustedPredictedValue decimal(38,21),

GlobalPredictionDifference AS Value2 - PredictedValue,

AdjustmentDifference AS PredictedValue - AdjustedPredictedValue

)

INSERT INTO @RegressionTable

(Value1, Value2)

SELECT ' + @Column1 + ', ' + @Column2 + '

FROM ' + @SchemaAndTable1 + '

WHERE ' + @Column1 + ' IS NOT NULL AND ' + @Column2 + ' IS NOT NULL

-- STEP #1 - RETRIEVE THE GLOBAL AGGREGATES NEEDED FOR OTHER CALCULATIONS

SELECT @Count=Count(CAST(Value1 AS Decimal(38,21))),

@MeanX = Avg(CAST(Value1 AS Decimal(38,21))), @MeanY = Avg(CAST(Value2 AS Decimal(38,21))),

@StDevX = StDev(CAST(Value1 AS Decimal(38,21))), @StDevY = StDev(CAST(Value2 AS Decimal(38,21)))

FROM @RegressionTable

-- STEP #2 - CALCULATE THE CORRELATION (BY FIRST GETTING THE COVARIANCE)

SELECT @Covariance = SUM((Value1 - @MeanX) * (Value2 - @MeanY)) / (@Count - 1)

FROM @RegressionTable

-- once weve got the covariance, its trivial to calculate the correlation

SELECT @Correlation = @Covariance / (@StDevX * @StDevY)

-- STEP #3 - CALCULATE THE SLOPE AND INTERCEPT AND MAKE PREDICTIONS

SELECT @Slope = @Correlation * (@StDevY / @StDevX)

SELECT @Intercept = @MeanY - (@Slope * @MeanX)

UPDATE @RegressionTable

SET PredictedValue = (Value1 * @Slope) + @Intercept

-- STEP #4 - CALCULATE THE MEAN SQUARED ERROR

-- subtract the actual values from the PredictedValues and square them; add em together; then multiply the result by the reciprocal of the count (less the number of fitted parameters)

-- as defined at the Wikipedia page "Mean Squared Error" http://en.wikipedia.org/wiki/Mean_squared_error

SELECT @MeanSquaredError = SUM(Power((PredictedValue - Value2), 2)) / CAST(@Count - @NumberOfFittedParameters AS float)

FROM @RegressionTable

-- STEP #5 - NOW CALCULATE A SLIDING WINDOW

-- recalculate alternate regression models for each row, plus the leverage from intermediate steps

-- none of this is terribly complicated; theres just a lot to fi

-- the outer select is needed here because aggregates arent allowed in the main UPDATE statement (silly limitation)

UPDATE T0

SET LocalMean1 = T3.LocalMean1, LocalMean2 = T3.LocalMean2, LocalStDev1 = T3.LocalStDev1, LocalStDev2 = T3.LocalStDev2

FROM @RegressionTable AS T0

INNER JOIN

(SELECT T1.ID AS ID, Avg(T2.Value1) AS LocalMean1, Avg(T2.Value2) AS LocalMean2, StDev(T2.Value1) AS LocalStDev1, StDev(T2.Value2) AS LocalStDev2

FROM @RegressionTable AS T1

INNER JOIN @RegressionTable AS T2

ON T2.ID > T1.ID OR T2.ID < T1.ID

GROUP BY T1.ID) AS T3

ON T0.ID = T3.ID

'

SET @SQLString2 = 'UPDATE T0

SET LocalCovariance = T3.LocalCovariance, LocalCorrelation = T3.LocalCovariance / (LocalStDev1 * LocalStDev2), LocalSum = T3.LocalSum

FROM @RegressionTable AS T0

INNER JOIN (SELECT T1.ID AS ID, SUM((T2.Value1 - T2.LocalMean1) * (T2.Value2 - T2.LocalMean2)) / (@Count - 1) AS LocalCovariance,

SUM(Power(T2.Value1 - T2.LocalMean1, 2)) AS LocalSum

FROM @RegressionTable AS T1

INNER JOIN @RegressionTable AS T2

ON T2.ID > T1.ID OR T2.ID < T1.ID

GROUP BY T1.ID) AS T3

ON T0.ID = T3.ID

UPDATE T0

SET Leverage = T3.Leverage

FROM @RegressionTable AS T0

INNER JOIN (SELECT ID, Value1, 1 / CAST(@Count AS float) + (CASE WHEN Dividend1 = 0 THEN 0 ELSE Divisor1 / Dividend1 END) AS Leverage

FROM (SELECT ID, Value1, Power(Value1 - LocalMean1, 2) AS Divisor1, LocalSum AS Dividend1, Power(Value2 - LocalMean2, 2) AS Divisor2

FROM @RegressionTable) AS T2) AS T3

ON T0.ID = T3.ID

UPDATE @RegressionTable

SET LocalIntercept = LocalMean2 - (LocalSlope * LocalMean1)

UPDATE @RegressionTable

SET AdjustedPredictedValue = (Value1 * LocalSlope) + LocalIntercept

-- #6 RETURN THE RESULTS

SELECT ID, Value1, Value2, StudentizedResidual,Leverage,CooksDistance,DFFITS

FROM (SELECT ID, Value1, Value2, GlobalPredictionDifference / LocalStDev1 AS StudentizedResidual, Leverage,

(Power(GlobalPredictionDifference, 2) / (@NumberOfFittedParameters * @MeanSquaredError)) * (Leverage / Power(1 - Leverage, 2)) AS CooksDistance, AdjustmentDifference / (LocalStDev2 * Power(Leverage, 0.5)) AS DFFITS

FROM @RegressionTable) AS T1

ORDER BY CooksDistance DESC

-- also return the global stats

-- SELECT @MeanSquaredError AS GlobalMeanSquaredError, @Slope AS GlobalSlope, @Intercept AS GlobalIntercept, @Covariance AS GlobalCovariance, @Correlation AS GlobalCorrelation

'

SET @SQLString1 = @SQLString1 + @SQLString2

--SELECT @SQLString1 -- uncomment this to debug dynamic SQL errors

EXEC (@SQLString1)

…………Each of the procedures I’ve posted in previous articles has made use of dynamic SQL similar to that in Figure 1, but in this case there’s simply a lot more of it; it helps to at least have the operations presented sequentially in a series of updates to the @RegressionTable variable rather than bubbling up from the center of a set of nested subqueries. The first three steps in Figure 1 are fairly straightforward: we retrieve the global aggregates we need as usual, then calculate the covariance (a more expensive operation that involves another scan or seek across the table) from them, followed by the slope and intercept in succession.[8] The MSE calculation in Step 4 requires yet another scan or seek across the whole table. Step 5 accounts for most of the performance costs, since we cannot use the aggregates derived in Step 1 for the new regression models we have to build for each data point. It was necessary to break up the dynamic SQL into two chunks and join them with the SET @SQLString1 = @SQLString1 + @SQLString2 statement near the end, which prevents a bug (or “feature”) that apparently limits the size of strings that can be assigned at any one time, even with nvarchar(max).[9] Various thresholds are sometimes baked into the algorithm to flag “influential points” but I decided to allow users to add their own, in part to shorten the code and in part because there’s apparently not a consensus on what those thresholds ought to be.[10]
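The string-size issue can be reproduced in isolation. The sketch below is an assumption about the underlying cause rather than a diagnosis of Figure 1: in SQL Server, concatenating strings that are not typed as nvarchar(max) yields a result capped at 4,000 characters, while concatenating nvarchar(max) variables does not. The variable names are hypothetical.

```sql
-- Hypothetical chunks, each held in its own nvarchar(max) variable
DECLARE @Chunk1 nvarchar(max), @Chunk2 nvarchar(max), @Combined nvarchar(max);

-- Casting the seed to nvarchar(max) keeps REPLICATE from truncating its output
SET @Chunk1 = REPLICATE(CAST(N'a' AS nvarchar(max)), 5000);
SET @Chunk2 = REPLICATE(CAST(N'b' AS nvarchar(max)), 5000);

-- Both operands are nvarchar(max), so nothing is cut off here
SET @Combined = @Chunk1 + @Chunk2;

SELECT LEN(@Combined) AS CombinedLength,                                    -- 10000
       LEN(REPLICATE(N'a', 5000) + REPLICATE(N'b', 5000)) AS NonMaxLength;  -- 4000 (truncated)
```

Assigning each chunk to its own nvarchar(max) variable and concatenating the variables, as Figure 1 does, sidesteps the cap.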

…………Aside from the lengthy computations, the Cook’s Distance procedure follows much the same format as other T-SQL solutions I’ve posted in this series. One of the few differences is that there is an extra Column parameter so that the user can compare two columns in any database for which they have the requisite access, since Cook’s Distance involves a comparison between two columns rather than a test of a single column as in previous tutorials. The @DecimalPrecision parameter is still available so that users can avoid arithmetic overflows by manually setting a precision and scale appropriate to the columns they’ve selected. To simplify things I omitted the usual @OrderByCode for sorting the results and set a default of 2 for @NumberOfFittedParameters. As usual, the procedure resides in a Calculations schema and there is no code to handle validation, SQL injection or spaces in object names. Uncommenting the next-to-last line allows users to debug the dynamic SQL.
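For readers who do need to cope with spaces in object names, one possible hardening step is to wrap each name part in the built-in QUOTENAME function before concatenating it into the dynamic SQL. This is a minimal sketch, not part of the procedure as posted; the sample values (including the table name with a space) are hypothetical, and it does not amount to full validation.

```sql
-- Hypothetical inputs; the table name contains a space on purpose
DECLARE @Database1 nvarchar(128) = N'DataMiningProjects',
        @Schema1 nvarchar(128) = N'Health',
        @Table1 nvarchar(128) = N'Duchennes Table',
        @SchemaAndTable1 nvarchar(400);

-- QUOTENAME adds square brackets and escapes any closing bracket inside the name
SET @SchemaAndTable1 = QUOTENAME(@Database1) + N'.' + QUOTENAME(@Schema1) + N'.' + QUOTENAME(@Table1);

SELECT @SchemaAndTable1 AS QuotedName;  -- [DataMiningProjects].[Health].[Duchennes Table]
```

The same wrapping could be applied to the two column parameters before they are spliced into the SELECT and WHERE clauses.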

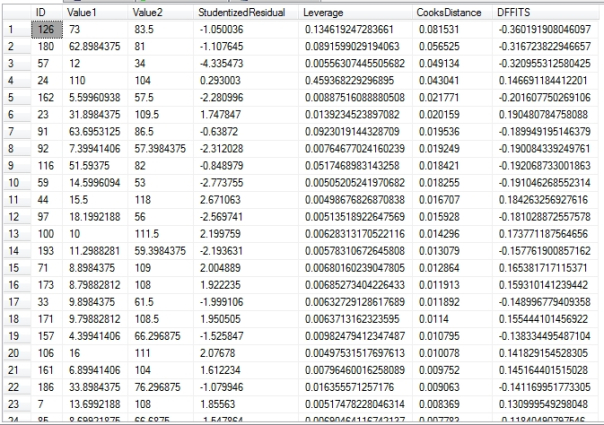

Figure 2: Results for the Cook’s Distance Query

EXEC Calculations.CooksDistanceSP

@Database1 = N'DataMiningProjects',

@Schema1 = N'Health',

@Table1 = N'DuchennesTable',

@Column1 = N'PyruvateKinase',

@Column2 = N'Hemopexin'

…………As I have in many previous articles, I ran the first test query against a 209-row dataset on the Duchennes form of muscular dystrophy, which I downloaded from Vanderbilt University’s Department of Biostatistics. As the results in Figure 2 show, the protein Hemopexin had the greatest influence on the Pyruvate Kinase enzyme at the 126th record. Here the Cook’s Distance was 0.081531, which was about 4 times higher than the value for the sixth-highest Cook’s Distance, with a bigint primary key of 23, so we may safely conclude that this record is an outlier, unless existing domain knowledge suggests that this particular point is supposed to contain such extreme values. Be warned that for a handful of value pairs, my figures differ from those obtained in other mining tools (which, believe it or not, also have discrepancies between each other), but I strongly suspect that this depends on how nulls and divide-by-zeros are dealt with, for which there is no standard method in Cook’s D. These minor discrepancies are not of critical importance, however, since the outlier detection figures are rarely plugged into other calculations, nor is it wise to act on them without further inspection.
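Since the procedure leaves thresholds to the user, a cutoff can be applied to the returned distances afterwards. The sketch below uses 4 / n, one rule of thumb mentioned in the literature alongside a flat cutoff of 1; neither is a consensus value. The @Results table and its five values are made up for illustration and are not drawn from Figure 2.

```sql
-- Hypothetical Cook's Distances for five rows (invented values)
DECLARE @Results table (ID bigint, CooksDistance float);

INSERT INTO @Results (ID, CooksDistance)
VALUES (1, 0.01), (2, 0.02), (3, 0.95), (4, 0.03), (5, 0.05);

-- Rule-of-thumb cutoff of 4 / n, where n is the number of rows
DECLARE @Cutoff float;
SELECT @Cutoff = 4.0 / COUNT(*) FROM @Results;  -- 0.8 for five rows

-- Flag the rows whose distance exceeds the cutoff for manual inspection
SELECT ID, CooksDistance, @Cutoff AS Cutoff
FROM @Results
WHERE CooksDistance > @Cutoff
ORDER BY CooksDistance DESC;
```

With these made-up values only the row with ID 3 is returned; in practice the flagged rows would still need human review before any action is taken.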

…………The procedure executed in 19 milliseconds on the table I imported the Duchennes data into, but don’t let that paltry figure deceive you: on large databases, the cost rises with the square of the row count to the point where it becomes prohibitive. There were only a handful of operators, including two Index Seeks which accounted for practically the entire cost of the query, which means that it may be difficult to gain much performance value from optimizing the execution plans. This brings us to the bad news: the procedure simply won’t run against datasets of the same size as the Higgs Boson dataset I downloaded from the University of California at Irvine’s Machine Learning Repository and have been using to stress-test my sample T-SQL throughout this series. Since we need to recalculate a new regression model for each of the 11 million rows, we’re at least talking about 11 million squared, or 121 trillion rows of regression data in order to derive 11 million separate Cook’s Distances. Strictly speaking that is quadratic growth, which is still polynomial rather than a member of the dreaded EXPTIME and EXPSPACE computational complexity classes, but at this scale the distinction offers little comfort; without an EXCLUDE CURRENT ROW windowing clause or some other efficient method of calculating intermediate regression aggregates in one pass, I know of no other way to reduce this down from a quadratic running time to a linear one. I’m weak in GROUP BY operations, so perhaps another workaround can be derived through those – but if not, we’re up the proverbial creek without a paddle. Even if you can wait the lifetime of the universe or whatever it takes to run the 11,000,000² regression operations, it is unlikely that you’ll have enough spare room in TempDB for 121 trillion rows. The price to be paid for the more sophisticated insights Cook’s Distance provides is that it simply cannot be run against Big Data-sized datasets, at least in its current form.
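The row estimate above follows directly from the self-join in Step 5, which pairs each of the n rows with the other n – 1 rows. As a quick check of the arithmetic, assuming n = 11,000,000:

```latex
n(n - 1) = 11{,}000{,}000 \times 10{,}999{,}999 \approx 1.21 \times 10^{14}
```

That is roughly 121 trillion intermediate rows, in line with the figure quoted in the text.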

…………As we’ve seen so many times in this series, scaling up existing outlier detection methods to Big Data sizes doesn’t merely present performance issues, but logical ones; in the case of Cook’s Distance, omitting a single observation is only going to have an infinitesimal impact on a regression involving 11 million records, no matter how aberrant the data point might be. Since it is derived from linear least squares regression, Cook’s Distance shares some of its limitations, like “the shapes that linear models can assume over long ranges, possibly poor extrapolation properties, and sensitivity to outliers.”[11] We’re trying to harness that sensitivity when performing outlier detection, but the sheer size of the regression lines generated from Big Data may render it too insensitive to justify such intensive computations. When you factor in the performance costs of recalculating a regression model for that many rows, the usefulness of this outlier identification method obviously comes into question. On the other hand, the procedure did seem to identify outliers with greater accuracy when run against other tables I’m very familiar with, which consisted of a few thousand rows apiece. There may be a happy medium at work here, in which Cook’s Distance is genuinely useful for a certain class of moderately sized tables in situations where the extra precision of this particular metric is needed. When deciding whether or not the extra computational cost is worth it for a particular table, keep in mind that the performance costs are magnified in my results because I’m running them on a wimpy eight-core semblance of an AMD workstation that has more in common with Sanford and Son’s truck than a real production environment server.
Furthermore, the main uses in this field for outlier detection of any kind are in exploratory data mining and data quality examinations, which don’t require constant, ongoing combing of the database for outliers; these are issues of long-term importance, not short-term emergencies like a relational query that has to be optimized perfectly because it may have to run every day, or even every second. Tests like this can be left for off-peak hours on a weekly or monthly basis, so as not to interfere with normal operations. Cook’s Distance might also be preferred when searching for a specific type of outlier, i.e. those that could throw off predictive modeling, just as Benford’s Law is often selected when identifying data quality problems is paramount, especially the intentional data quality issue we call fraud. Cook’s Distance might also prove more useful in cases where the relationship between two variables is at the heart of the questions that the tester chooses to ask. Cook’s and DFFITS can also apparently be used to convert back and forth from another common stat I haven’t yet learned to use, the Wald Statistic, which is apparently used for ferreting out the values of unknown parameters.[12] If there’s one thing I’ve learned while writing this series, it’s that there’s a shortage of outlier detection methods appropriate to the size of the datasets that DBAs work with. Thankfully, the workaround I translated into T-SQL for my next column allows us to use Mahalanobis Distance to find outliers across columns, without the cosmic computational performance hit for calculating Cook’s D on large SQL Server databases. As with Cook’s D, there are some minor accuracy issues, but these are merely cosmetic when looking for outliers, where detection can be automated but handling ought to require human intervention.

[1] For a quick run-down, see the Wikipedia page “Non-Euclidean Geometry” at http://en.wikipedia.org/wiki/Non-Euclidean_geometry

[2] Cook, R. Dennis, 1977, “Detection of Influential Observations in Linear Regression,” pp. 15-18 in Technometrics, February 1977. Vol. 19, No. 1. A .pdf version is available at the Universidad de São Paulo’s Instituto de Matematica Estatística web address http://www.ime.usp.br/~abe/lista/pdfWiH1zqnMHo.pdf

[3] I originally retrieved it from the Wikipedia page “Cook’s Distance” at http://en.wikipedia.org/wiki/Cook%27s_distance , but there’s no difference between it and the one in Cook’s paper.

[4] I used the formula defined at the Wikipedia page “Mean Squared Error,” at the web address http://en.wikipedia.org/wiki/Mean_squared_error. The same page states that there are two more competing definitions, but I used the one that the Cook’s Distance page linked to (The Wikipedia page “Residual Sum of Squares” at http://en.wikipedia.org/wiki/Residual_sum_of_squares may also be of interest.):

“In regression analysis, the term mean squared error is sometimes used to refer to the unbiased estimate of error variance: the residual sum of squares divided by the number of degrees of freedom. This definition for a known, computed quantity differs from the above definition for the computed MSE of a predictor in that a different denominator is used. The denominator is the sample size reduced by the number of model parameters estimated from the same data, (n-p) for p regressors or (n-p-1) if an intercept is used.[3] For more details, see errors and residuals in statistics. Note that, although the MSE is not an unbiased estimator of the error variance, it is consistent, given the consistency of the predictor.”

“Also in regression analysis, “mean squared error”, often referred to as mean squared prediction error or “out-of-sample mean squared error”, can refer to the mean value of the squared deviations of the predictions from the true values, over an out-of-sample test space, generated by a model estimated over a particular sample space. This also is a known, computed quantity, and it varies by sample and by out-of-sample test space.”

[5] p. 47, Ben-Gan, Itzik, 2012, Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions . O’Reilly Media, Inc.: Sebastopol, California.

[6] See the CrossValidated thread titled “Is It Possible to Derive Leverage Figures Without a Hat Matrix?”, posted by SQLServerSteve on June 26, 2015 at http://stats.stackexchange.com/questions/158751/is-it-possible-to-derive-leverage-figures-without-a-hat-matrix . Also see the reply by the user Glen_B to the CrossValidated thread titled “Which of these points in this plot has the highest leverage and why?” on July 9, 2014 at http://stats.stackexchange.com/questions/106191/which-of-these-points-in-this-plot-has-the-highest-leverage-and-why/106314#106314

[7] See the formula at the Wikipedia page “DFFITS” at https://en.wikipedia.org/wiki/DFFITS

[8] I retrieved this formula from the most convenient source, the Dummies.com page “How to Calculate a Regression Line” at the web address http://www.dummies.com/how-to/content/how-to-calculate-a-regression-line.html

[9] See the response by the user named kannas at the StackOverflow thread, “Nvarchar(Max) Still Being Truncated,” published Dec. 19, 2011 at the web address http://stackoverflow.com/questions/4833549/nvarcharmax-still-being-truncated

[10] See the Wikipedia page “Cook’s Distance” at http://en.wikipedia.org/wiki/Cook%27s_distance

[11] See National Institute for Standards and Technology, 2014, “4.1.4.1.Linear Least Squares Regression,” published in the online edition of the Engineering Statistics Handbook. Available at http://www.itl.nist.gov/div898/handbook/pmd/section1/pmd141.htm

[12] See the Wikipedia pages “Cook’s Distance,” “DFFITS” and “Wald Test” at http://en.wikipedia.org/wiki/Cook%27s_distance, http://en.wikipedia.org/wiki/DFFITS and http://en.wikipedia.org/wiki/Wald_test respectively.