By Steve Bolton

…………Based on what little experience I’ve gained from writing this series on finding outliers in SQL Server databases, I expected the Modified Thompson Tau test to be a clunker. It marries the math underpinning one of the most ubiquitous means of outlier detection, Z-Scores, with the methods taken from the field of statistical hypothesis testing, which I’ve grouped together in the middle of this segment of the series. I feared, however, that it would turn out to be a shotgun wedding that would combine the worst drawbacks of both, at least when applied to the kind of large datasets that DBAs encounter every day. As discussed in previous articles in more depth, hypothesis testing is often performed on datasets of just a few dozen or a few hundred rows, whereas SQL Server tables normally have thousands, if not millions or billions of rows; the former is meant to be applied in proving specific points of evidence, while our main use cases tend to be ferreting out data quality problems and exploratory data mining. I’m dispensing with this subset of outlier detection methods (which amount to a kind of DIY data mining) in this segment because they’re not designed for our main user scenarios – although they can be tweaked somewhat to better fit our needs, as Dixon’s Q-Test was in last week’s installment. The Modified Thompson Tau test shares many of the same limitations as the other methods in the same class, such as the requirement of prior goodness-of-fit testing to ensure that the data follows a Gaussian or “normal” distribution (i.e. the bell curve). In fact, I expected it to be worse than Dixon’s Q-Test, Grubbs’ Test, the GESD and the Tietjen-Moore Test because it involves a recursive elimination of outliers, one at a time, which requires constant recalculations of the underlying averages and standard deviations used in the formulas. 
Not only could this be a performance headache, but this iterative elimination smacks of the kind of logically and ethically dubious handling of outliers I’ve addressed at length in past articles. Any outlier detection workflow must include investigations of their underlying causes, before matching them to an appropriate response; some are the result of bad data collection, some may indicate a non-Gaussian distribution, others may be beneficial depending on the context, such as finding new customer bases in a marketing survey. The Modified Thompson Tau test apparently is misused routinely to simply jettison unwanted data points without further investigation, judging from the frightening number of sources I found on the Internet where professionals did exactly that. That’s not going to fly in a SQL Server database, where the users and IT directors tend to get miffed when a DBA whimsically decides to delete ten thousand records. We can, however, salvage some usefulness out of the test by simply using it to flag potential outliers, rather than deleting them. The underlying stats can be recomputed as if previously detected outliers were eliminated, without actually making any change to the record.

…………Flagging outliers in this manner solves the problem of inappropriate responses that are often associated with the test, but it takes the magic of SQL Server windowing functions to address the recursive recomputations of aggregates like standard deviation and the mean. The declaration section of the dynamic SQL in Figure 1 is shorter than in any other procedure I’ve written in this series because we can’t simply do a one-time measurement of these basic stats; Modified Thompson Tau is the first test we’ve encountered that uses a sliding window of this kind. The formula I retrieved from Wikipedia[1] really isn’t that hard to calculate, nor are the underlying concepts anything new to this series; all we have to do is compute the absolute deviation (which we’ve already seen in the articles on Z-Scores) and use the Calculations.FindCriticalRegionForTDistributionFunction coded for the Grubbs’ Test article to find the critical region. After that, it’s just a simple matter of making a quick mathematical comparison between the two. The difficulty consists in the recursion, which is computationally costly. Furthermore, it is difficult if not impossible to do these calculations in a recursive CTE, thanks to such fun messages as “TOP operator is not allowed in the recursive part of a recursive common table expression,” “Functions with side effects are not allowed in the recursive part of a recursive common table expression” and “GROUP BY, HAVING, or aggregate functions are not allowed in the recursive part of a recursive common table expression.” Prior to the introduction of new windowing function clauses in SQL Server 2012, it might have been necessary to code this with some messy recursive function calls. The code is much shorter and more legible and the performance is probably much better than any alternatives, thanks to the single ROWS UNBOUNDED PRECEDING clause, which really saved my bacon out of the fire this time. 
Whenever the subject comes up, I never miss an opportunity to plug Itzik Ben-Gan’s classic reference Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions,[2] which invariably turns out to be useful in unexpected binds like this. I’ve made it a point to learn as much as I can about the largely untapped potential of these new T-SQL clauses, but apparently I need to redouble my efforts, judging by how indispensable they were in this particular situation.
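For readers who haven’t used the clause before, here is a minimal sketch of how ROWS UNBOUNDED PRECEDING produces running aggregates, run against a made-up seven-row sample rather than any dataset from this series. The table variable, its column names and its values are purely illustrative assumptions; the NullIf wrapper is the same divide-by-zero guard mentioned in connection with the procedure.

```sql
-- Hypothetical seven-row sample; the table variable, column names and values are illustrative only
DECLARE @Sample TABLE (ID int PRIMARY KEY, Value decimal(9,2) NOT NULL);

INSERT INTO @Sample (ID, Value)
VALUES (1, 10.0), (2, 10.5), (3, 11.0), (4, 11.2), (5, 11.9), (6, 12.4), (7, 48.0);

SELECT ID, Value,
    ROW_NUMBER() OVER (ORDER BY Value ASC) AS RN,
    -- Mean of the current row and every row sorted before it
    AVG(Value) OVER (ORDER BY Value ASC ROWS UNBOUNDED PRECEDING) AS RunningMean,
    -- Sample standard deviation over the same frame; NULL on the first row, where the frame holds one value
    STDEV(Value) OVER (ORDER BY Value ASC ROWS UNBOUNDED PRECEDING) AS RunningStDev,
    -- NULLIF turns a zero standard deviation into NULL, so the division returns NULL instead of raising an error
    ABS(Value - AVG(Value) OVER (ORDER BY Value ASC ROWS UNBOUNDED PRECEDING))
        / NULLIF(STDEV(Value) OVER (ORDER BY Value ASC ROWS UNBOUNDED PRECEDING), 0) AS AbsoluteDeviation
FROM @Sample
ORDER BY RN;
```

Each row’s aggregates cover only the values sorted at or below it, which is how the procedure imitates the effect of removing the larger values one at a time without touching the underlying records.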

Figure 1: Code for the Modified Thompson Tau Procedure

CREATE PROCEDURE [Calculations].[ModifiedThompsonTauTestSP]

@DatabaseName as nvarchar(128) = NULL, @SchemaName as nvarchar(128), @TableName as nvarchar(128), @ColumnName AS nvarchar(128), @PrimaryKeyName as nvarchar(400), @DecimalPrecision AS nvarchar(50), @Alpha decimal(38,35) = 0.05

AS

DECLARE @SchemaAndTableName nvarchar(400), @SQLString nvarchar(max)

SET @DatabaseName = @DatabaseName + '.'

SET @SchemaAndTableName = ISNull(@DatabaseName, '') + @SchemaName + '.' + @TableName --I'll change this value one time, mainly for legibility purposes

--@Alpha is passed to the T-distribution function in place of the hard-coded 0.05, on the assumption that its third argument is the significance level

--Sqrt is used instead of Power(RN, 0.5) because Power returns the data type of its first argument, so a bigint RN would be truncated to a whole number

--NullIf returns NULL when the running standard deviation is zero, so the division yields NULL rather than a divide-by-zero error

SET @SQLString = 'DECLARE @Alpha decimal(5,4)

SET @Alpha = ' + CAST(@Alpha AS nvarchar(50)) + ' SELECT ' + @PrimaryKeyName + ', ' + @ColumnName + ', AbsoluteDeviation, RejectionRegion, ''IsOutlier'' = CASE WHEN AbsoluteDeviation > RejectionRegion THEN 1 ELSE 0 END

FROM (SELECT ' + @PrimaryKeyName + ', ' + @ColumnName + ', RN, AbsoluteDeviation, (RejectionRegionInput * (RN - 1)) / (Sqrt(RN) * Sqrt(RN - 2 + Power(RejectionRegionInput, 2))) AS RejectionRegion

FROM (SELECT ' + @PrimaryKeyName + ', ' + @ColumnName + ', RN, AbsoluteDeviation, DataMiningProjects.Calculations.FindCriticalRegionForTDistributionFunction (RN, 1, @Alpha) AS RejectionRegionInput

FROM

(SELECT ' + @PrimaryKeyName + ', ' + @ColumnName + ', CAST(ROW_NUMBER() OVER (ORDER BY ' + @ColumnName + ' ASC) AS bigint) AS RN,

Abs(' + @ColumnName + ' - Avg(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + '))) OVER (ORDER BY ' + @ColumnName + ' ASC ROWS UNBOUNDED PRECEDING)) / NullIf(StDev(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + '))) OVER (ORDER BY ' + @ColumnName + ' ASC ROWS UNBOUNDED PRECEDING), 0) AS AbsoluteDeviation

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL) AS T1

WHERE AbsoluteDeviation IS NOT NULL) AS T2) AS T3

ORDER BY IsOutlier DESC, AbsoluteDeviation DESC, RejectionRegion, ' + @ColumnName + ', ' + @PrimaryKeyName

--SELECT @SQLString -- uncomment this to debug string errors

EXEC (@SQLString)

…………To avoid divide-by-zero errors when the standard deviation is zero, I borrowed the NullIf trick from a post by Henrik Staun Poulsen at Stack Overflow (which probably saved me a lot of time trying to devise an answer of my own, since I was clueless about NullIf).[3] Most of the rest of the code follows the same format I’ve used in previous procedures, with the usual @DecimalPrecision parameter available to avoid arithmetic overflows, the usual set of parameters and implementation code allowing users to select a column in any database for which they have permissions, etc. The messiest part is the use of nested subqueries to bubble up previous computations to higher levels, which I’m considering recoding as a series of sequential CTEs, if that would be easier for people to follow. As always, I’m using a Calculations schema that users can change, and I haven’t included code to accommodate spaces in object names or to prevent SQL injection. My usual disclaimer is still operative: I’m posting this in order to learn, not because I know what I’m talking about, so test my code before putting it into production. This is an introduction to the topic with suggestions on how to code it, not the last word. I’m not 100 percent sure I’ve got the order of operations correct in the second subquery, or that I’m right to square the rejection region input rather than the @Alpha value; it’s likewise possible I ought to be feeding the count minus one to the degrees of freedom parameter of the T-distribution function, rather than the simple count. A T-SQL expert might also be able to suggest numerous ways of improving the performance, given that the query’s still a resource hog even with the ROWS UNBOUNDED PRECEDING clause.
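For anyone who wants to check the second subquery against the math, the test as it appears to be stated in the Wikipedia article can be written out as follows. The symbols are labels chosen here for illustration: $\delta_i$ corresponds to AbsoluteDeviation, $n$ to RN, $\bar{x}$ and $s$ to the running mean and standard deviation, and $t_{\alpha/2}$ to RejectionRegionInput.

```latex
\delta_i = \frac{\lvert x_i - \bar{x} \rvert}{s},
\qquad
\text{Rejection Region} = \frac{t_{\alpha/2}\,(n - 1)}{\sqrt{n}\,\sqrt{n - 2 + t_{\alpha/2}^{2}}},
\qquad
x_i \text{ is flagged when } \delta_i > \text{Rejection Region}
```

If this reading is right, the quantity being squared is the critical value rather than alpha itself, which agrees with the nesting used in the procedure. The article also appears to take the critical value at $n - 2$ degrees of freedom, so the degrees-of-freedom argument is worth verifying against the source before relying on the results.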

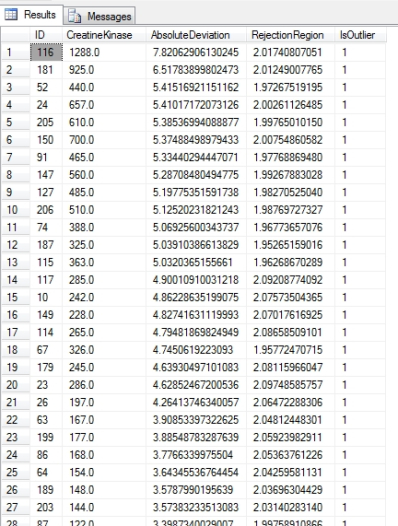

Figure 2: Results for the Modified Thompson Tau Procedure

EXEC [Calculations].[ModifiedThompsonTauTestSP]

@DatabaseName = N'DataMiningProjects',

@SchemaName = N'Physics',

@TableName = N'HiggsBosonTable',

@ColumnName = N'Column1',

@PrimaryKeyName = N'ID',

@DecimalPrecision = N'33,29',

@Alpha = 0.05

…………The results in Figure 2 depict outliers for the Creatine Kinase enzyme, which plays a role in the Duchenne form of muscular dystrophy, the subject of a tiny 209-row dataset I downloaded from Vanderbilt University’s Department of Biostatistics for use as practice data in this series. Whenever performance has been an issue with procedures I’ve posted previously in this series, I’ve stress-tested them against the first float column of the 11-million-row Higgs Boson dataset, which I downloaded from the University of California at Irvine’s Machine Learning Repository and converted to a nearly 6-gigabyte SQL Server table. That test took an hour and fifteen minutes on my poor beat-up six-core development machine, which is probably several orders of magnitude slower than a real database server yet still sluggish enough to make me worry about the performance in a live environment. On the other hand, this kind of complete examination of an entire column really amounts to a crude data mining operation, of the kind that would normally be run occasionally during off-peak hours. It might thus be sufficient to get the job done as it is, although I’m sure a real T-SQL coder could advise on several ways of getting around the single Sort operation that gobbled up 95 percent of the cost of the execution plan (which I won’t bother to post, because there’s nothing more to the story except that Sort). I have the distinct impression, however, that the ROWS UNBOUNDED PRECEDING method is as close to an optimal method of recursively recalculating the aggregates as we’re going to get.
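One experiment that might be worth running against that Sort (untested here, so treat it as a guess rather than a fix) is a nonclustered index keyed on the column being analyzed, so that rows can be read in the order the window functions ask for. The index name below is hypothetical; the schema, table and column names are taken from the sample call above.

```sql
-- Hypothetical index name; Physics.HiggsBosonTable, Column1 and ID come from the sample call
-- INCLUDE (ID) is redundant but harmless if ID is already the clustered key
CREATE NONCLUSTERED INDEX IX_HiggsBosonTable_Column1
ON Physics.HiggsBosonTable (Column1 ASC)
INCLUDE (ID);
```

Whether this removes the Sort depends on which ordering the plan is paying for. The final ORDER BY on the computed IsOutlier and AbsoluteDeviation columns would presumably still need a sort of its own, so the execution plan should be compared before and after.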

…………Either way, I feel like I made a unique contribution for the first time in this series, by adapting to SQL Server use cases a statistical test that is often misused, even when applied to its usual scenarios in hypothesis testing. The results in Figure 2 allow DBAs and data miners to make decisions based on a bird’s eye view of the potential outliers in a dataset, without wantonly deleting them in a knee-jerk manner. Like the other five outlier detection methods I’ve segregated in this part of the tutorial series, it is still based on hypothesis testing methods that limit its usability, like the requirement of a Gaussian distribution and the difficulties of finding or calculating T-distribution lookup tables for degrees of freedom far in excess of 200 rows. Before proceeding with outlier identification methods that are more likely to be profitable to SQL Server DBAs, like Interquartile Range, Peirce’s Criterion, Cook’s Distance, Mahalanobis Distance and various visual means that can be displayed in Reporting Services, I’ll finish out this segment with a discussion of one of the oldest means, Chauvenet’s Criterion. Like the others in this subset, its usefulness is severely curtailed by its dependence on a normal distribution and the small size of the available lookup tables. Furthermore, it is also implemented in an inherently recursive manner, which brings with it the same performance and logical validity issues that the Modified Thompson Tau test does. I’ll attempt to code it in the next installment of this series anyway, for the sake of completeness and the possibility that a SQL Server user out there might find a use for it. It will also give me some further experience in translating stats and math equations into code, while passing on my misadventures in T-SQL as cautionary tales, in the hopes that neither I nor others will repeat my mistakes.

[1] It is mentioned in the Wikipedia webpage “Outlier,” at the web address http://en.wikipedia.org/wiki/Outlier.

[2] Ben-Gan, Itzik, 2012, Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions. O’Reilly Media, Inc.: Sebastopol, California.

[3] Poulsen, Henrik Staun, 2009, reply to the thread “How to Avoid the ‘Divide by Zero’ Error in SQL?” published May 14, 2009 on the StackOverflow.com website. Available online at http://stackoverflow.com/questions/861778/how-to-avoid-the-divide-by-zero-error-in-sql