By Steve Bolton

…………In the last edition of this amateur series of self-tutorials on finding outlying values in SQL Server columns, I mentioned that Grubbs’ Test has a number of limitations that sharply constrain its usefulness to DBAs. The Generalized Extreme Studentized Deviate Test (GESD) suffers from some of the same restrictions – most notably the fact that it is only applicable to datasets that have a Gaussian (a.k.a. “normal”) distribution, better known as the bell curve. Nonetheless, the GESD applies to a wider set of use cases because it can be applied to find more than one outlier, unlike the Grubbs’ Test it is derived from. It is “essentially Grubbs test applied sequentially,” with some tweaks applied to its test statistics and critical values to avoid such problems as false negatives with weak stopping criteria.[1] Grubbs’ Test is sometimes performed recursively by deleting aberrant data points one at a time, which raises the whole morass of ethical issues I’ve harped on in previous articles, like the far too common tendencies among researchers to classify outliers arbitrarily, subjectively change those classifications (i.e. “moving the goalposts”) and fail to investigate the causes of aberrant values and match them to appropriate responses. As mentioned previously, outlier detection methods can be differentiated by their use cases, the numbers and types of their inputs, the numbers and types of their outputs, their mathematical properties and the means of calculation applied in between the input and output stages, if that would affect performance. A failure in any one of these areas can be worse than an incorrect calculation. An outlier workflow also ought to include rigorous goodness-of-fit testing to make sure that only tests appropriate to the underlying distribution are applied, plus proper classification procedures and criteria, guarding against redefinition (i.e. “changing horses in midstream”) and matching the underlying causes of outliers with appropriate responses. 
Not all outliers are the result of faulty data collection and storage, so simply deleting aberrant data points, as recursive application of the Grubbs’ Test implies, is often inappropriate or even unethical. There is little reason to use it when a test like GESD is available to ameliorate some of these associated drawbacks and temptations. The GESD test is still quite limited in comparison to other outlier detection methods we’ll survey here, since it is normally only applied to look for small numbers of aberrant data points, but at least we’re not limited to finding a single one as we are with the Grubbs’ Test. The performance costs for both are quite trivial. Another benefit of the GESD is that we can reuse much of the code from last week’s mistutorial, thereby simplifying the development and troubleshooting processes.

…………We can thank Harvard Biostatistics Prof. Bernard Rosner for this improvement to the Grubbs’ Test, which was published in an issue of the statistical journal Technometrics back in 1983.[2] I was unable to get ahold of this paper, unlike the original publications for Grubbs’ Test, but I was able to find the formulas at the National Institute of Standards and Technology’s Engineering Statistics Handbook, which is one of the most readable online sources of information on statistics for amateurs like myself. I’d wager that most readers will find a pint of Guinness a lot less boring than these underlying equations, so it is worth noting that we can thank a brewer of Irish beer for some of these formulas. The “Studentized” part of the name is derived from the “Student’s T-distribution” it is dependent on, which I always vaguely pictured as being derived from some sort of high school or college math test; the real story is more colorful, in that it was the product of a 1908 Biometrika article by William Sealy Gosset, who chose the pen name “Student” after his employer, the Guinness brewery in Dublin, required him to publish under a pseudonym.[3] Just something more colorful than degrees of freedom and critical values to meditate on the next time you’ve had one too many draughts of Guinness. I’m not sure why the GESD calculations, like those of Grubbs’ Test, are dependent on both the T-distribution and the bell curve, although one of my missions when starting this series was to grasp the underlying mechanics and logic of the formulas, which is something I was deficient in when writing my last series of tutorials, on SQL Server Data Mining (SSDM). I am trying to acquire the skill to translate equations into T-SQL, Multidimensional Expressions (MDX) and Visual Basic (VB) code as quickly as possible, but sometimes still struggle to get the formulas right. 
Some caution is in order here, because the code in the figure below only matched the first four results for Rosner’s 54 sample data points, in the example calculation at the NIST webpage. I had some issues following the order of operations in the NIST’s equations at first, but it is odd that they would only be off by a hundredth of a decimal point for the first four results, then diverge after that. This ought to refocus attention on one of the main caveats associated with any of my tutorial series: always check my code before putting it into production, because I’m writing this in hopes of learning, not because I already know what I’m doing; I’m only publishing it in order to sharpen my thinking further and in the hopes that others might gain something from my misadventures.
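
For reference, the generalized ESD test as summarized in the NIST handbook can be written as below. This is a transcription of the handbook's notation rather than of Rosner's original paper, so treat it as a reading aid. Here n is the number of non-null values, r is the number of tests to perform (the role played by @NumberOfTests), alpha is the significance level, and the mean and standard deviation are recomputed at each step after removing the i - 1 points already singled out.

```latex
R_i = \frac{\max_j \lvert x_j - \bar{x} \rvert}{s}, \qquad i = 1, 2, \ldots, r

\lambda_i = \frac{(n - i)\, t_{p,\, n-i-1}}
                 {\sqrt{\left(n - i - 1 + t_{p,\, n-i-1}^{2}\right)\left(n - i + 1\right)}},
\qquad p = 1 - \frac{\alpha}{2\,(n - i + 1)}
```

The number of outliers is the largest i for which R_i exceeds lambda_i. Two details are worth checking against the procedure in Figure 1. The statistic uses the absolute deviation, so the most extreme remaining point can sit in either tail, whereas a descending sort only walks down from the largest values. The -1 and +1 offsets in lambda_i and p are also easy to transpose when nesting parentheses. Either could plausibly contribute to the divergence from the NIST example, though that is a guess rather than a verified diagnosis.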

Figure 1: Code for the GESD Test Procedure

CREATE PROCEDURE [Calculations].[GESDTestSP]

@DatabaseName as nvarchar(128) = NULL, @SchemaName as nvarchar(128), @TableName as nvarchar(128),@ColumnName AS nvarchar(128), @PrimaryKeyName as nvarchar(400), @Alpha decimal(38,35) = 0.05, @NumberOfTests bigint = 10

AS

-- a NULL @DatabaseName stays NULL here, so the ISNULL below drops the prefix entirely
SET @DatabaseName = @DatabaseName + '.'

DECLARE @SchemaAndTableName nvarchar(400), @SQLString nvarchar(max)

SET @SchemaAndTableName = ISNULL(@DatabaseName, '') + @SchemaName + '.' + @TableName

-- the dynamic SQL nests three subqueries: T1 = test statistic, T2 = P and V, T3 = critical region
SET @SQLString =

'DECLARE @Count bigint

SELECT @Count=Count(' + @ColumnName + ')

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL

SELECT RN, ' + @PrimaryKeyName + ', ' + @ColumnName + ', GESDStatistic, P, V, CriticalRegion, ''IsOutlier'' = CASE WHEN GESDStatistic > CriticalRegion THEN 1 ELSE 0 END

FROM (SELECT RN, ' + @PrimaryKeyName + ', ' + @ColumnName + ', GESDStatistic, P, V, (Calculations.FindCriticalRegionForTDistributionFunction (V, 1, 1-P) * (@Count - RN)) / Power(((@Count - (RN - (1 + (Power(Calculations.FindCriticalRegionForTDistributionFunction (V, 1, 1-P), 2))))) * (@Count - (RN + 1))), 0.5) AS CriticalRegion

FROM (SELECT RN, ' + @PrimaryKeyName + ', ' + @ColumnName + ', GESDStatistic, 1 - (' + CAST(@Alpha AS nvarchar(50)) + ' / (2 * (@Count - (RN + 1)))) AS P, ((@Count - RN) - 1) AS V

FROM (SELECT TOP ' + CAST(@NumberOfTests AS nvarchar(50)) + ' ROW_NUMBER() OVER (PARTITION BY 1 ORDER BY ' + @ColumnName + ' DESC) AS RN, ' + @PrimaryKeyName + ', ' + @ColumnName + ',

(' + @ColumnName + ' - Avg(' + @ColumnName + ') OVER (PARTITION BY 1 ORDER BY ' + @ColumnName + ' DESC ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING)) / StDev(' + @ColumnName + ') OVER (PARTITION BY 1 ORDER BY ' + @ColumnName + ' DESC ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS GESDStatistic

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL

ORDER BY ' + @ColumnName + ' DESC) AS T1) AS T2) AS T3'

--SELECT @SQLString -- uncomment this to debug string errors

EXEC (@SQLString)

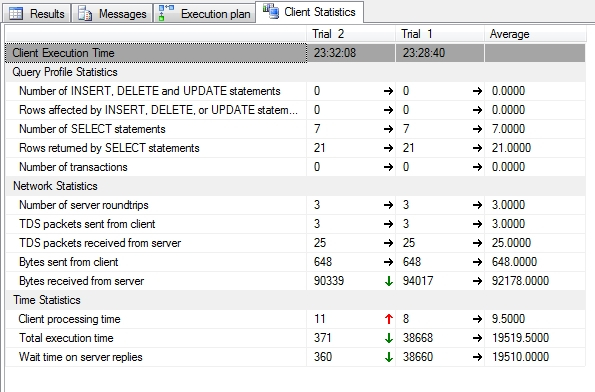

…………Anyone who has read previous articles in this series should be able to cut through all the gobbledygook in Figure 1 by noticing the commonalities between this T-SQL code and that of previous procedures. Once again, the first five parameters allow users to perform the test on any column in any database for which they have permissions and the first three lines of the procedure make some adjustments to the string names of those parameters for legibility purposes. There is no @OrderByCode as in previous procedures and @DecimalPrecision is likewise not needed. Instead, we must supply values for two new parameters, the @NumberOfTests to perform (which I’ve set to an arbitrary default of 10) and the @Alpha value, which is a core concept in hypothesis testing along with confidence levels, critical values, critical regions, statistical significance and the like. I strongly recommend Will G. Hopkins’ website A New View of Statistics for anyone interested in a readable introduction to these topics, which really aren’t as hard to grasp as they seem – as long as someone explains them in plain English. As usual, the procedure is created in a schema called Calculations, which you can change to your liking; uncommenting the next-to-last line allows you to debug the dynamic SQL; and you’ll have to add your own code to accommodate spaces in object names, which I don’t allow, or to handle SQL injection, which I haven’t included to keep my code short and to the point. This procedure is much shorter than last week’s because I’m reusing the code I already published for the Calculations.FindCriticalRegionForTDistributionFunction, which performs the comparisons against the T-distribution to see if a particular value is an outlier. The code is also much shorter because there’s only one version of the GESD, whereas Grubbs’ Test has two-tailed, lower one-tailed and upper one-tailed versions. 
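
On the point about spaces in object names and SQL injection, one common starting place is the built-in QUOTENAME function, which wraps an identifier in square brackets and doubles any closing bracket inside it. The snippet below is only a sketch of that idea and is not part of the procedure in Figure 1; the variable values are hypothetical, including a table name with an embedded space.

```sql
-- Hypothetical stand-ins for the procedure's parameters (not from the original code).
DECLARE @SchemaName nvarchar(128) = N'Health',
        @TableName  nvarchar(128) = N'Duchennes Table',  -- note the embedded space
        @ColumnName nvarchar(128) = N'PyruvateKinase';

-- QUOTENAME brackets each identifier, so the space no longer breaks the dynamic SQL.
DECLARE @SchemaAndTableName nvarchar(400) =
        QUOTENAME(@SchemaName) + N'.' + QUOTENAME(@TableName);

SELECT @SchemaAndTableName    AS SchemaAndTableName,  -- [Health].[Duchennes Table]
       QUOTENAME(@ColumnName) AS ColumnName;          -- [PyruvateKinase]
```

Bracketing the names narrows the injection surface but is not a complete defense on its own; checking the supplied names against the catalog views before building the string would be a sensible companion step.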
As is customary, I retrieve the global statistics only once at the beginning of the dynamic SQL, but in this case all we need is a simple record count. As usual, it takes several subqueries to perform all of the calculations required, which form the bulk of the rest of the dynamic SQL. I’m really quite proud of myself for coding it with the DESC ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING clauses rather than a common table expression (CTE), since one of my goals is to make my T-SQL more efficient by implementing the kind of windowing functions Itzik Ben-Gan discusses in his classic reference Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions.[4] It seemed to be the most efficient solution to the problem, although performance really isn’t much of an issue with either GESD or Grubbs’. Figure 3 shows only a minimal change in the execution time when a non-clustered index was added, while the execution plans weren’t really noteworthy enough to publish.
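
To see what the ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING frame does when paired with a descending sort, here is a small self-contained sketch. The table variable and its five values are made up purely for illustration; the point is that each row's aggregate covers that row plus every smaller value, which mimics setting aside the larger values one at a time.

```sql
-- Hypothetical five-row sample; the last value is an obvious high outlier.
DECLARE @Sample TABLE (ID int PRIMARY KEY, Value float NOT NULL);
INSERT INTO @Sample (ID, Value) VALUES (1, 10), (2, 11), (3, 12), (4, 13), (5, 40);

SELECT ROW_NUMBER() OVER (ORDER BY Value DESC) AS RN, ID, Value,
       -- Mean of this row and every smaller value; larger values fall outside the frame.
       AVG(Value) OVER (ORDER BY Value DESC
                        ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS RemainingMean,
       -- Number of rows still inside the frame at this step.
       COUNT(*)   OVER (ORDER BY Value DESC
                        ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS RemainingCount
FROM @Sample
ORDER BY Value DESC;
```

The first row (Value 40) reports a mean of 17.2 over all five rows, while the second row (Value 13) reports 11.5 over the remaining four, because the 40 has dropped out of the frame. The frame clause requires SQL Server 2012 or later.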

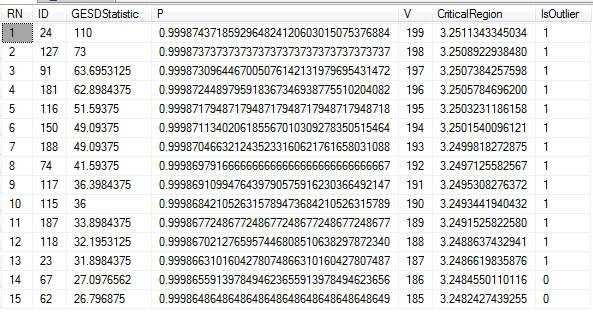

Figure 2: Results for the GESD Test Procedure

EXEC Calculations.GESDTestSP

@DatabaseName = N'DataMiningProjects',

@SchemaName = N'Health',

@TableName = N'DuchennesTable',

@ColumnName = N'PyruvateKinase',

@PrimaryKeyName = N'ID',

@Alpha = 0.05,

@NumberOfTests = 15

Figure 3: Client Statistics for the GESD Test Procedure

…………If performance had been an issue I would have run the procedure against the first float column in the Higgs Boson Dataset made publicly available by the University of California at Irvine’s Machine Learning Repository, which occupies nearly 6 gigabytes of space in the DataMiningProjects database I’ve created to encompass all three practice datasets we’ll be using in this tutorial series. Instead, I ran it against the PyruvateKinase column of a tiny 9-kilobyte dataset on the Duchennes form of muscular dystrophy, published online by Vanderbilt University’s Department of Biostatistics. Since calculations on the same enzyme were also performed in last week’s article, we can easily contrast them. The stark, obvious difference is that we only received one row of output for Grubbs’, whereas Figure 2 flags the first 13 rows as outliers based on the fact that their GESD statistics exceed the values in the T-distribution lookup table for the associated critical region, which is in turn determined by the probability values (P) and the degrees of freedom (V, which in this case is derived from the record count and the test number).
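
To make P and V a little more concrete, the sketch below evaluates the same two expressions used in the middle subquery of Figure 1 for a handful of test numbers. The record count of 209 is an assumption based on the size of the Duchennes table; the actual count of non-null PyruvateKinase values may be lower.

```sql
-- Assumed inputs: 209 non-null rows and the @Alpha passed to the procedure.
DECLARE @Count bigint = 209, @Alpha decimal(38,35) = 0.05;

-- P and V are copied from the expressions in Figure 1; RN is the test number.
SELECT RN,
       1 - (@Alpha / (2 * (@Count - (RN + 1)))) AS P,
       ((@Count - RN) - 1) AS V
FROM (VALUES (1), (2), (3), (15)) AS Tests(RN);
```

With these inputs V starts at 207 for the first test and shrinks by one with each further test, while P stays very close to 1 (roughly 0.99988 for the first test) because @Alpha is spread across the remaining rows.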

…………The EXEC code above specified a common @Alpha value of 0.05 and a test of 15 values, which is why we received 15 results. This is certainly more useful than returning a single output of the kind we get from Grubbs’ Test, but still of limited utility to DBAs who normally work with tables of thousands or even billions of rows. While writing this series, one of the weaknesses I’ve discovered with applying standard outlier detection methods to SQL Server databases is that many of them simply aren’t designed with the Big Data buzzword in mind. Many of them do a fantastic job when used in hypothesis testing, the main use case scenario they were designed for, which is a much narrower and more specific task than the two most common use cases DBAs face, exploratory data mining and checking for data quality problems. Hypothesis-testing studies often involve just a few hundred or even just a few dozen cases, whereas in the DBA scenarios we may be dealing with millions of records. It is almost impossible, however, to find lookup tables for many of the distributions and calculations associated with these hypothesis tests that go beyond a few hundred values. For example, I had to hunt all over the Internet to find a table of T-distribution values that went up as far as 200 values, which is still below the 209 rows of the Duchennes table and minuscule in comparison to the 11 million rows of the Physics.HiggsBosonTable. I’ve also learned that attempting to fill the gap by calculating the missing values for these tables yourself can be quite computationally expensive and surprisingly difficult to code, as the cumulative distribution function (CDF) for the Gaussian bell curve can be. I now suspect that Grubbs and GESD belong to a subset of outlier detection methods that DBAs will rarely find use cases for, along with such related means as the Tietjen-Moore Test, Dixon’s Q-Test, Chauvenet’s Criterion and the Modified Thompson Tau Test. 
I’ll dispense with these in the middle of the series since I’ve already written most of the code for them and might as well not let it go to waste, just in case a reader out there discovers a need to include them in their toolbelt. After this quick stretch we’ll get into methods like Interquartile Range and Peirce’s Criterion, which I expect to be much more useful for our scenarios, although perhaps not as much as topics we’ve already covered like Benford’s Law and Z-Scores. I also have high hopes for Cook’s Distance and Mahalanobis Distance, which I’ll tackle after a recap of SSDM Clustering and an interlude into Visual Outlier Detection with Reporting Services, in which we can spot outliers with the naked eye. For now, I’ll concentrate on quickly getting the other means of outlier detection from the same class as GESD and Grubbs out of the way. Many of these share some of the same severe limitations, such as dependence on a normal distribution. GESD may be the most flexible among them, since it allows you to specify the number of outliers you want to look for, whereas Dixon’s Q-Test and Grubbs limit you to just one. As we shall see next week, the Tietjen-Moore test appears at first glance to be more useful since it also includes a parameter like @NumberOfTests. Its utility is crimped, however, by the subtle difference that it only tells you whether or not the dataset contains that number of outliers. GESD will likely be more useful, in that it can actually flag the specified number of records as aberrant data points.

[1] See National Institute of Standards and Technology, 2014, “1.3.5.17.1. Grubbs’ Test for Outliers,” published in the online edition of the Engineering Statistics Handbook. Available at http://www.itl.nist.gov/div898/handbook/eda/section3/eda35h1.htm

[2] Rosner, Bernard, 1983, “Percentage Points for a Generalized ESD Many-Outlier Procedure,” pp. 165-172 in Technometrics, May, 1983. Vol. 25, No. 2. Original citation found at the NIST webpage “1.4.3. References For Chapter 1: Exploratory Data Analysis,” published in the online edition of the Engineering Statistics Handbook. Available on the Internet at http://www.itl.nist.gov/div898/handbook/eda/section4/eda43.htm#Rosner

[3] See the Wikipedia page on the “Student’s T-distribution,” available at the web address http://en.wikipedia.org/wiki/Student's_t-distribution

[4] Ben-Gan, Itzik, 2012, Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions. O’Reilly Media, Inc.: Sebastopol, California.