Right now one of the hottest topics around the world is the cloud and the successful methods for the migration to the cloud. In the world of Microsoft Data Platform and the relational databases, the Azure SQL Database represents one of the primary targets for everyone. Azure SQL Database is a relational database, offered as a PaaS (Platform as a Service), offering besides almost exact surface area of SQL Server (at times a number of actual features are well in front of the On-Premises offerings, since Microsoft is really delivering Cloud First releases).

When you are using Azure SQL Database, have in mind that what you receive is not just 1 replica, but a full high availability solution with 3 replicas and automated patching that will require no intervention for upgrades from your side.

While it is far from being perfect (and let us be clear – there are NO perfect solution, and there will never be), it is an incredible offering that more and more of OH22 clients are adopting for their relational database needs.

Needless to say, that the most typical way of migration starts with migration to Azure Virtual Machine with SQL Server, after which the migration to Azure SQL Database takes place. For somebody making the first steps in the cloud, this is rather safe way of getting to know the joys and the bad side of the cloud.

I decided to put a list of the migration methods that can be useful for migrating to Azure SQLDatabase. By all means it is not complete and if you have any suggestions to expand it – do not be shy.

The current list of the ways that I am considering is here:

- SQL Server Management Studio (SSMS)

- BACPAC + SSMS/Portal/Powershell

- SQL Azure Migration Wizard (SAMW)

- SQL Server Data Tools (Visual Studio) + BCP/SSIS

- Azure Data Factory

- Transactional Replication

- Linked Server

Before we start

Important Tip: Select a reasonably high Premium Database for getting the best throughput when importing data into the Azure SQLDatabase, I would recommend something around P4 or P6 as the basis, and once your are done, then scale down your database to the level and edition that suits your needs. It will allow you to execute migrations in a very reasonable amount of time.

SQL Server Management Studio (SSMS)

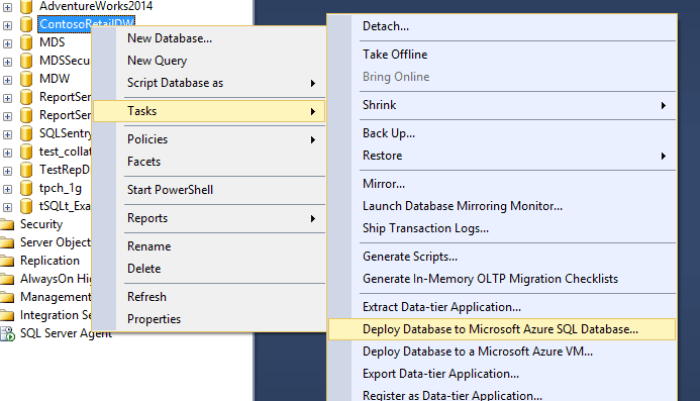

In SSMS, there is an option for migrating a database directly to Deploy Database Directly to Microsoft Azure SQL Database. With a right click on the database in Object Explorer, and selecting Tasks option, you will be able to execute this operation.

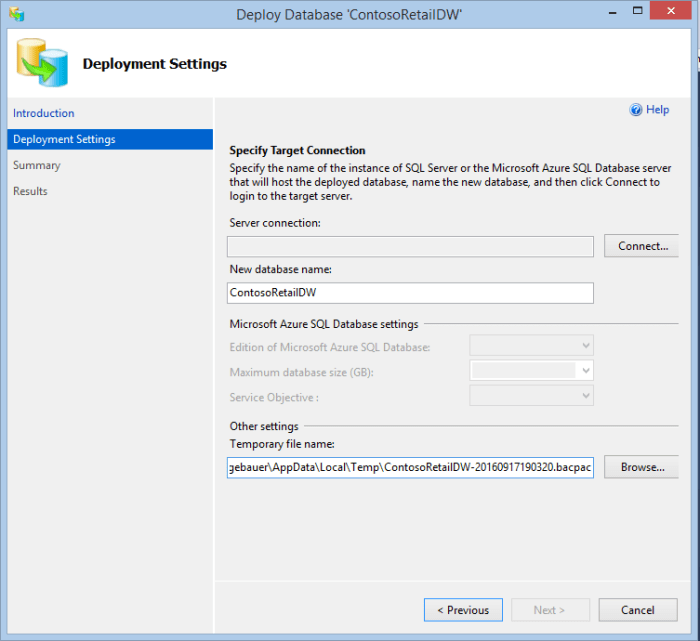

Next step is to establish a connection to the Azure SQL Server, that you will need to configure in the Azure Portal, with the help of Powershell or CLI or whatever works for you:

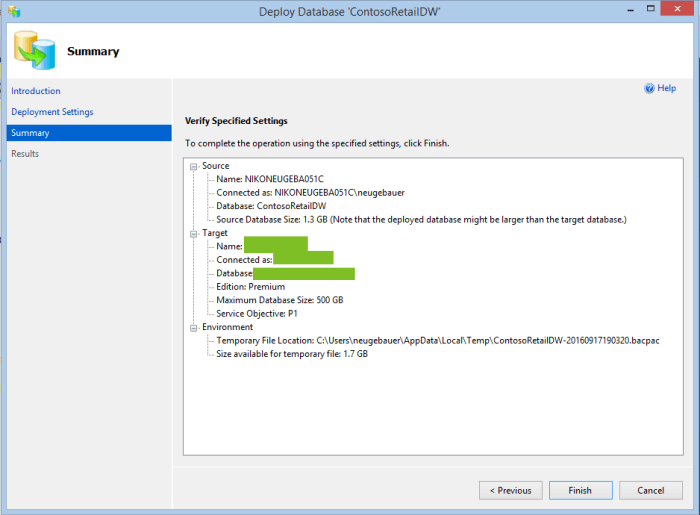

After establishing a connection to the Azure SQL Server (a logical aggregation of the Azure SQL Databases), you will be able to select the name of the new database that you will be deploying to (heck, I wish I would be able to deploy to an existing DB with this way, I swear – but for all this time this functionality has not been implemented unfortunately).

The other thing you can specify is the location of the exported .BACPAC file (A BACPAC is an archive that contains a database’s schema and data, and is very useful for the migrations). If you have a faster drive or LUN, then specify it if you are following this path.

After extracting your database to the specified BACPAC file, you will be able to see the progress for each of the tables that is being migrated to Azure SQLDatabase.

In this scenario, the migration is executed behind the GUI with the help of the SQLPackage.exe, the utility that runs the export / import processes converting databases to BACPAC and importing BACPAC into the databases.

The same SQLPackage.Exe utility will compare the compatibility of the extracted database before importing the data into the new SQLDB. This utility is actually being used by multiple applications, such as Visual Studio.

Well, you can execute migration in the way you want by invoking the SQLPackage.exe and uploading the generated BACPAC directly into a

You can use any of the popular applications for uploading your files into the Azure Storage Blob Container, which you can use & reuse for creating new SQL Databases in Azure.

I personally love Azure Storage Explorer, but there are so many others and of course you can use Powershell to automate this task

I will not try to repeat something that is already well written and explained, and so for this path please follow the article Import a BACPAC file to create an Azure SQL database.

A significant advantage here is that you can use multiple threads while uploading the data, plus you can create multiple databases from the same DACPAC file. The disadvantage is of course that currently you will not be able to use premium storage, thus the speed will be quite limited (in comparison with a P11 or P15) but I believe that this limitation will be removed in the future.

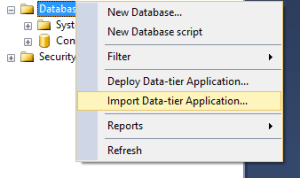

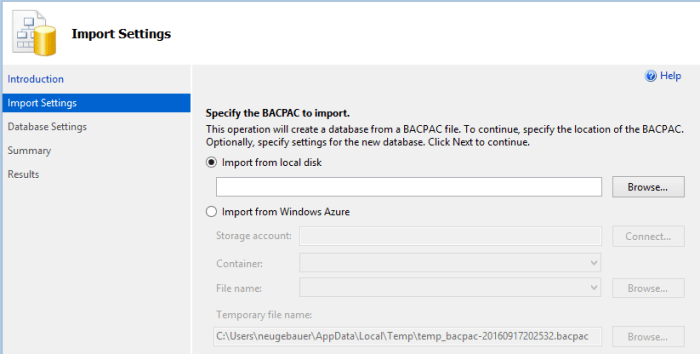

Notice that you can execute this process with the help of the SSMS, by simply connecting to your Azure SQL Server, right-clicking on the Databases and selecting option Import Data-tier Application, as shown on the picture below:

In the next step, you will be able to choose the location of your BACPAC file, if it is to be found on your local drive or if it is already uploaded to Azure Blob Storage:

After selecting Database properties, you will be able to move your DB to an Azure SQL Database.

SQL Azure Migration Wizard (SAMW)

For some projects the SQL Azure Migration Wizard (SAMW) can be a very interesting option, this is an open-source tool from CodePlex that using the BCP tool under the cover will export every single table from your local Database and will push the exported result into Azure SQL Database, while comparing the feature list with the supported one and showing the exact errors that take place.

At the moment of writing of this article, the last update to this tool was over 15 months ago, and the latest release SQL Server 2016 is not supported at all and so I would tend to be very careful before using it.

This tool can be helpful if you are not very technical and your database is not very big (well under 1 GB).

SQL Server Data Tools (Visual Studio) + BCP/SSIS

If you are serious about database development, than you must be using SSDT (SQL Server Database Tools), which is Visual Studio based tool for database development.

SSDT will allow you to compile your project for your target (Azure SQL Database), making sure that every single feature in T-SQL that you are using is compatible with the place you are looking to deploy your application (You can use on-premises versions of SQL Server as the target).

There are so many great options within SSDT, such as schema-compare where you can compare the database schema (and you can do it even between 2 servers that have nothing to do with your project), snapshots and so on & on.

Once you are done with the project adjustments, you can take the generated DACPAC (Data-tier Application Component Packages) from the release folder and deploy it to the Azure SQL Database. You can also deploy directly from the SSDT by using Publish option, or you can use schema-compare option and deploy the differences with a script based on the differences.

For the data transfer you can use SQL Server Integration Services or BCP utility, plus there is always an option for Azure Data Factory (ADF) that is described in the next step.

Azure Data Factory

Azure Data Factory, or commonly known as ADF is a great resource for controlling and executing a number of activities for and within Microsoft Azure.

Because of the good number of optimisation this tool is a great choice if you are migrating rather big databases with big tables to Azure SQL Database. ADF will serialise and compress the data before moving it into the Azure SQLDB, thus a lot of times optimising the times spent on the migration process.

There is a great article Move data by using Copy Activity which I vividly recommend to read before advancing with this method and notice that you will need to install and configure the Data Management Gateway on the Virtual Machine where your SQL Server is located (on premises or in Azure) in order to take advantage of the Azure Data Factory.

The downside of this method (using ADF) is that you all need to configure every single table manually and that it might take an unreasonable amount of time, if the number of your tables is in hundreds or thousands.

Transactional Replication

My favourite way of migrating Databases online to Azure SQL Database is the Transactional Replication. Very easy to setup, exactly as a traditional transactional replication on-premises with specificity that you will need to push data to the subscriber (Azure SQLDB) since there are no processes in Azure SQLDB that can do this work for you. Of course there can be something done in Azure Automation, but to my knowledge there is nothing supported that is implemented so far.

For the initialisation a Snapshot will be generated and it will be seeded into the Azure SQLDB.

Once the data is fully synchronised you can simply change the connection settings from the SQL Server to Azure SQL Database and voila, you are done!

If you are interested in Transactional Replication bases, take a look at this almost 1.5 years old video at one of my favourite shows Data Exposed, where Jean-Yves Devant is explaining them.

Tip: at PASSCamp in Germany last December (2015) I have already shown how to set it up in a hands-on lab, and so I highly recommend this bleeding-edge event.

Linked Server

There is something I have learned on one of my most recent Azure SQL Database projects: using Linked Server from your SQL Server can be one effective tool for data transfer to Azure SQL Database.

If you have a lot (hundreds or thousands) of really small tables (in small MB), then establishing a Linked Server directly to your SQLDatabase, opening multiple connections and transferring the data directly can be very effective. There are no additional resources involved and the true limit in this case is the landing capacity of your database, plus the number of connections that you will open on your SQL Server.

Let us setup a linked server with the following code (notice that you will need to specify your Azure SQL Server and credentials for that):

-- Add a Linked Server to Azure SQL DB

EXEC sp_addlinkedserver

@server = N'AzureSQLDB',

@srvproduct = N'Azure SQL DB',

@provider = N'SQLNCLI',

@datasrc = N'mydb.database.windows.net,1433',

@catalog = 'ContosoRetailDW';

-- Configure Login to Azure SQLDB

EXEC sp_addlinkedsrvlogin

@rmtsrvname = 'AzureSQLDB',

@useself = 'FALSE',

@locallogin = NULL,

@rmtuser = 'myAzureUser',

@rmtpassword = 'MyA#zureP4$$w0dr;)'

-- Enable RPC in & out

EXEC master.dbo.sp_serveroption @server = N'AzureSQLDB', @optname = N'rpc', @optvalue = N'true';

EXEC master.dbo.sp_serveroption @server = N'AzureSQLDB', @optname = N'rpc out', @optvalue = N'true';From now on, you can easily transfer tables 1 by 1, being able even to compare the source & target tables, making sure that you are synchronising the missing data only. For example to make sure that my migration was successful I will run the following query against my favourite table FactOnlineSales:

select Sum(SalesAmount) from AzureSQLDB.ContosoRetailDW.dbo.FactOnlineSales;

Warning: you will not be able to take advantage of the Columnstore Indexes in this scenario, because Columnstore Indexes do not support cursors and this is how SQL Server Native Client is functioning.

For the large tables, the optimisation of the process might be very worth it trying the ADF or SSIS/BCP scenario, where you can take advantage of the multiple threads and effective data compression.

The Missing Stuff

Polybase – even though SQL Server 2016 supports Polybase out of the box and the Azure SQL DataWarehouse makes great usage of it (and all 3 versions have the very same codebase, though the features and implementations are wildly different), right now there is no possibility of using it for migrating to Azure SQLDatabase. I would love to be able to put data into Azure Blob Storage and import it with the help of the simple T-SQL commands.

The final thoughts

I am certainly not expecting this to be the complete list of ways to migrate to Azure SQL Database, but this is the one I am carrying with me with my toolbox and I am eager to expand it with more effective ways.