What will be much easier with Microsoft Fabric?

Microsoft Fabric is built on a foundation of Software as a Service (SaaS), which takes simplicity and integration to a whole new level. SaaS is a software licensing and delivery model in which software is provided over the internet and is hosted by a third-party provider. This means that the software is available to users on demand and can be accessed from anywhere with an internet connection.

What exactly is easier with Fabric?

Creating a lakehouse

To create a lakehouse in Azure Synapse, you need to follow a series of steps. First, create an Azure Synapse Analytics workspace, which serves as the central hub for managing your data and analytics workflows and lets you perform advanced analytics and processing on your data. Next, set up a storage account to store your data in the cloud. To facilitate data processing, create a Spark pool within the workspace. Now you can start ingesting your data into the cloud storage layer, specifically into the “raw” zone of the data lake. At this stage, the data remains untyped, untransformed, and uncleaned.

To create a lakehouse in Microsoft Fabric, follow these steps. If you don’t have a Fabric workspace for Power BI, create one. Then, browse to the Data Engineering homepage within the workspace and locate the Lakehouse card under the New section. Click on it to start the creation process. Enter a name for the lakehouse and, if required, specify a sensitivity label. Finally, click Create. Once the lakehouse is created, Microsoft Fabric automatically generates a SQL endpoint, which enables data access and SQL-based queries. From there, you can use the lakehouse for data engineering and analysis.

Connection to Azure Data Lake Storage

Power BI, outside of the Fabric environment, requires properly configured roles or permissions to communicate with Azure Data Lake Storage. It can connect either through import mode, which is faster because the data is copied into the Power BI dataset, or through DirectQuery, which returns current data but is slower because every query is sent to the source.

In contrast, Fabric uses the OneLake environment, which reduces the complexity of setting permissions and introduces a new feature called Direct Lake. Direct Lake loads Parquet-formatted files directly from a data lake without the need to query a Lakehouse endpoint or import/duplicate data into a Power BI dataset. It serves as a fast path for loading data from the lake directly into the Power BI engine, making it immediately available for analysis.

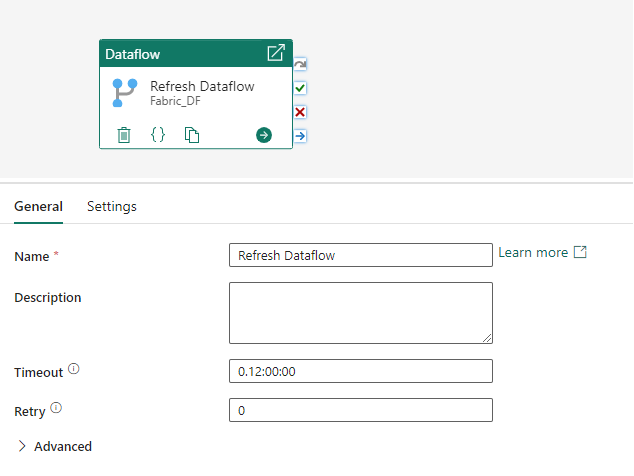
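The trade-off between import mode and direct query mode (DirectQuery) described above can be illustrated with a toy model. This is only a conceptual sketch, not how the Power BI engine is implemented: the classes `Source`, `ImportModel`, and `DirectQueryModel` are hypothetical names invented for this example.

```python
class Source:
    """Stand-in for a data source; counts how often it is queried."""

    def __init__(self, rows):
        self.rows = list(rows)
        self.queries = 0

    def read(self):
        self.queries += 1
        return list(self.rows)


class ImportModel:
    """Import mode: copy the data on refresh, answer from the local copy."""

    def __init__(self, source):
        self.source = source
        self.refresh()

    def refresh(self):
        self.cache = self.source.read()

    def total(self):
        return sum(self.cache)  # no round trip to the source


class DirectQueryModel:
    """Direct query mode: every question goes back to the source."""

    def __init__(self, source):
        self.source = source

    def total(self):
        return sum(self.source.read())


source = Source([10, 20])
imported = ImportModel(source)   # initial load queries the source once
direct = DirectQueryModel(source)

source.rows.append(30)           # new data arrives at the source

stale = imported.total()         # still based on the old copy
live = direct.total()            # sees the new row, costs a source query
imported.refresh()               # the copy catches up after a refresh
fresh = imported.total()

print(stale, live, fresh, source.queries)
```

The imported model answers from its copy and only sees new rows after a refresh, while the direct model always reflects the source at the cost of a query per question. Direct Lake aims to avoid both the copy and the per-query round trip by reading the lake files directly.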

Dataflow orchestration

Dataflows in Power BI provide a convenient way to transform and prepare data for analysis. When working outside of Microsoft Fabric, these dataflows rely on scheduled refreshes. With a Pro license, you can schedule up to 8 refreshes per day, while Premium offers a higher limit of 48 refreshes per day. Refreshes are scheduled in 30-minute slots, so you do not get a guaranteed exact start time, only a 30-minute window during which the refresh should start.
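To make these limits concrete, the following sketch spreads a given number of daily refreshes evenly across the 48 half-hour slots of a day. The helper `refresh_slots` and the even spacing are assumptions made for illustration; in the Power BI service you choose the slots yourself.

```python
from datetime import time

SLOTS_PER_DAY = 48  # 24 hours, two 30-minute slots per hour


def refresh_slots(refreshes_per_day: int) -> list[time]:
    """Spread refreshes evenly over the day's 30-minute slots (hypothetical helper)."""
    if not 1 <= refreshes_per_day <= SLOTS_PER_DAY:
        raise ValueError("refreshes_per_day must be between 1 and 48")
    step = SLOTS_PER_DAY // refreshes_per_day  # slots between two refreshes
    slots = [i * step for i in range(refreshes_per_day)]
    return [time(hour=s // 2, minute=30 * (s % 2)) for s in slots]


pro = refresh_slots(8)       # Pro limit mentioned in the text
premium = refresh_slots(48)  # Premium limit mentioned in the text

print([t.strftime("%H:%M") for t in pro])
print(len(premium), premium[1].strftime("%H:%M"))
```

With 8 refreshes the data can be up to three hours old between runs. With 48 refreshes every half-hour slot is used, which is the most frequent schedule this mechanism allows.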

However, when you use dataflows within Microsoft Fabric, you gain additional capabilities and integration possibilities. By orchestrating dataflows directly from Data Factory pipelines in Fabric, you can incorporate these data transformations into a standard ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) process. This means that a dataflow can run as one step of a pipeline, triggered when the preceding steps finish rather than at a fixed time slot.

Sync Storage with your local PC

If you want to work with and synchronize documents and data with Azure Data Lake Storage from your local computer, you can use the Azure Storage Explorer application. To use Azure Storage Explorer, you need to sign in to your Azure account and connect to your storage account using the account name and key. You can find your account keys in the Azure portal. To upload and download data, use the upload and download buttons within Azure Storage Explorer.

However, if you are working with Microsoft Fabric and OneLake, you can simply install the OneLake File Explorer application. This application seamlessly integrates OneLake with Windows File Explorer, similar to how OneDrive operates. It allows users to copy locally stored data to OneLake, read newly uploaded data with a Fabric Notebook, and even supports file updates. The application automatically syncs all OneLake items that you have access to in Windows File Explorer.

In this context, “sync” refers to pulling up-to-date metadata on files and folders and sending locally made changes to the OneLake service. Syncing does not involve downloading the data itself; instead, placeholders are created. To download the actual data locally, you need to double-click on a file.
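Once a file has been copied to OneLake through File Explorer, a Fabric notebook can address it by its OneLake path. The sketch below builds such a path using the documented `abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<item>.<itemtype>/<path>` pattern. The helper name and the workspace, lakehouse, and file names are made up for this example, and it assumes names without spaces or special characters.

```python
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_file_uri(workspace: str, lakehouse: str, relative_path: str) -> str:
    """Build an abfss URI for a file under a lakehouse's Files folder.

    Hypothetical helper; assumes plain names that need no escaping.
    """
    path = relative_path.strip("/")
    return f"abfss://{workspace}@{ONELAKE_HOST}/{lakehouse}.Lakehouse/Files/{path}"


# Placeholder names, not taken from a real tenant.
uri = onelake_file_uri("SalesWorkspace", "SalesLakehouse", "raw/orders.csv")
print(uri)

# In a Fabric notebook, a path like this could then be read with Spark, e.g.:
# df = spark.read.csv(uri, header=True)
```

The Spark call is left as a comment because the `spark` session only exists inside a notebook environment.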

I have been working with MS SQL Server for several years. I enjoy both scripting and server administration. Joyful Craftsmen allowed me to deepen my knowledge of cloud solutions as well, so I would like to share what I have learned about data migration to Azure. I’m interested in Power BI and Microsoft Fabric as well.

LUKÁŠ KARLOVSKÝ

Data Engineer