Foreword

I was always wondering what the KEEP PLAN hint does. The documentation isn’t very specific (emphasis mine):

Forces the Query Optimizer to relax the estimated recompile threshold for a query.

In this post, I’d like to prove what it really does.

The answer

Like Dwarves of Moria, I delved too greedily and too deep. I found the answer in a Plan Caching and Recompilation in SQL Server 2012 whitepaper before trying it out on my own. Relevant excerpt:

KEEP PLAN

The KEEP PLAN query hint changes the recompilation thresholds for temporary tables, and makes them identical to those for permanent tables. Therefore, if changes to temporary tables are causing many recompilations, this query hint can be used.

But since it doesn’t show the proof, I decided to test it out anyway.

Recompilations and AUTO_UPDATE_STATISTICS

First, we need to cover these concepts for our test to make sense.

According to Extended Events, there are 20 reasons for recompilations.

SELECT dxmv.map_key, dxmv.map_value

FROM sys.dm_xe_map_values AS dxmv

WHERE dxmv.name = 'statement_recompile_cause'

| map_key | map_value |

|---|---|

| 1 | Schema changed |

| 2 | Statistics changed |

| 3 | Deferred compile |

| 4 | Set option change |

| 5 | Temp table changed |

| 6 | Remote rowset changed |

| 7 | For browse permissions changed |

| 8 | Query notification environment changed |

| 9 | PartitionView changed |

| 10 | Cursor options changed |

| 11 | Option (recompile) requested |

| 12 | Parameterized plan flushed |

| 13 | Test plan linearization |

| 14 | Plan affecting database version changed |

| 15 | Query Store plan forcing policy changed |

| 16 | Query Store plan forcing failed |

| 17 | Query Store missing the plan |

| 18 | Interleaved execution required recompilation |

| 19 | Not a recompile |

| 20 | Multi-plan statement required compilation of alternative query plan |

I’m primarily interested in #2 - Statistics changed.

What happens is described nicely in the AUTO_UPDATE_STATISTICS documentation. Again, emphasis mine:

Specifies that Query Optimizer updates statistics when they’re used by a query and when they might be out-of-date. Statistics become out-of-date after insert, update, delete, or merge operations change the data distribution in the table or indexed view. Query Optimizer determines when statistics might be out-of-date by counting the number of data modifications since the last statistics update and comparing the number of modifications to a threshold. The threshold is based on the number of rows in the table or indexed view.

Query Optimizer checks for out-of-date statistics before it compiles a query and runs a cached query plan. Query Optimizer uses the columns, tables, and indexed views in the query predicate to determine which statistics might be out-of-date. Query Optimizer determines this information before it compiles a query. Before running a cached query plan, the Database Engine verifies that the query plan references up-to-date statistics.

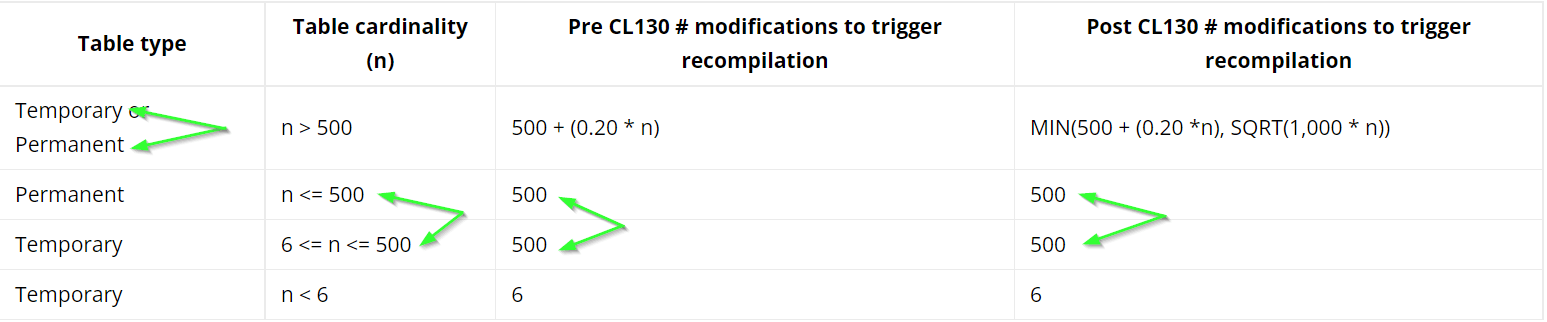

The recompilation thresholds are described here. There has been a change in Compatibility Level (CL) 130, so I put it in the same table side by side for comparison.

| Table type | Table cardinality (n) | Pre CL130 # modifications to trigger recompilation | Post CL130 # modifications to trigger recompilation |

|---|---|---|---|

| Temporary or Permanent | n > 500 | 500 + (0.20 * n) | MIN(500 + (0.20 *n), SQRT(1,000 * n)) |

| Permanent | n <= 500 | 500 | 500 |

| Temporary | 6 <= n <= 500 | 500 | 500 |

| Temporary | n < 6 | 6 | 6 |

Armed with this knowledge, let’s test.

Test scenario

I will do the tests only on the CL150 environment as it includes both the old and new thresholds.

I’ll test the Permanent and Temporary thresholds separately because there are subtle differences.

- I’ll create an environment with 3 different table sizes and 3 separate Stored Procedures that read from them.

- Each table size will test one of the thresholds (500, 20% and SQRT(1,000 * n))

- Then I’ll prepare monitoring to track the modification to stats, cached plans and recompilations.

- The first part of the test includes running the Procedures without the

KEEP PLANhint to verify the thresholds.- Do a fresh update of the Statistics

- Run the Procedure to cache the plan

- Modify the Statistics just below the threshold

- Rerun the Procedure to see a cache hit

- Do one more modification

- Rerun the Procedure to see auto_stats and recompilation events

- Then I’ll rerun the tests with the

KEEP PLANhint and see if the thresholds are affected. - Finally, I’ll repeat the tests on a statement using Temporary tables.

Preparing the environment and monitoring

⚠️ When testing this on my local instance running on Windows, the plan cache was randomly cleared, which affects the testing. Therefore I ran all my tests in a Docker container which didn’t have this problem

The AUTO_UPDATE_STATISTICS must be enabled on the test DB and the tempdb.

CREATE DATABASE KeepPlan

GO

USE KeepPlan

ALTER DATABASE KeepPlan

SET AUTO_UPDATE_STATISTICS ON

/* Helper table to fill and update the tables under test */

CREATE TABLE dbo.Nums

(

n int

, CONSTRAINT PK_Nums PRIMARY KEY (n)

)

CREATE TABLE dbo.PermaSmall

(

n int

, CONSTRAINT PK_PermaSmall PRIMARY KEY (n)

)

GO

CREATE TABLE dbo.PermaMedium

(

n int

, CONSTRAINT PK_PermaMedium PRIMARY KEY (n)

)

GO

CREATE TABLE dbo.PermaLarge

(

n int

, CONSTRAINT PK_PermaLarge PRIMARY KEY (n)

)

;WITH

L0 AS(SELECT 1 AS c UNION ALL SELECT 1),

L1 AS(SELECT 1 AS c FROM L0 CROSS JOIN L0 AS B),

L2 AS(SELECT 1 AS c FROM L1 CROSS JOIN L1 AS B),

L3 AS(SELECT 1 AS c FROM L2 CROSS JOIN L2 AS B),

L4 AS(SELECT 1 AS c FROM L3 CROSS JOIN L3 AS B),

L5 AS(SELECT 1 AS c FROM L4 CROSS JOIN L4 AS B),

Nums AS(SELECT ROW_NUMBER() OVER(ORDER BY (SELECT NULL)) AS n FROM L5)

, tally AS (SELECT TOP (100000) n FROM Nums ORDER BY n)

INSERT INTO dbo.Nums WITH(TABLOCKX) (n)

SELECT

n

FROM tally

INSERT INTO dbo.PermaLarge WITH(TABLOCKX) (n)

SELECT TOP (100000) n FROM dbo.Nums ORDER BY n

INSERT INTO dbo.PermaMedium WITH(TABLOCKX) (n)

SELECT TOP (1000) n FROM dbo.Nums ORDER BY n

INSERT INTO dbo.PermaSmall WITH(TABLOCKX) (n)

SELECT TOP (500) n FROM dbo.Nums ORDER BY n

/* Have a fresh update of the statistics */

UPDATE STATISTICS dbo.PermaSmall WITH FULLSCAN

UPDATE STATISTICS dbo.PermaMedium WITH FULLSCAN

UPDATE STATISTICS dbo.PermaLarge WITH FULLSCAN

GO

CREATE OR ALTER PROCEDURE dbo.ReadSmall

AS

BEGIN

SELECT

*

FROM dbo.PermaSmall AS ps

WHERE ps.n >= (SELECT 1)

--OPTION (KEEP PLAN)

END

GO

CREATE OR ALTER PROCEDURE dbo.ReadMedium

AS

BEGIN

SELECT

*

FROM dbo.PermaMedium AS ps

WHERE ps.n >= (SELECT 1)

--OPTION (KEEP PLAN)

END

GO

CREATE OR ALTER PROCEDURE dbo.ReadLarge

AS

BEGIN

SELECT

*

FROM dbo.PermaLarge AS ps

WHERE ps.n >= (SELECT 1)

--OPTION (KEEP PLAN)

END

The subquery in the WHERE clause is to avoid a Trivial Plan which doesn’t care about the Statistics update.

I’ve also created a procedure per table because the Statistics must be “interesting”. Otherwise, I wouldn’t be able to trigger auto_stats along with the recompilation.

Monitoring statistics

We can periodically check the stats, modification counter and thresholds with this query.

SELECT

obj.name AS tableName,

stat.name AS statName,

CAST(sp.last_updated AS time) Last_update_time,

sp.rows,

sp.steps,

sp.modification_counter AS modCounter

, d.compatibility_level AS CL

, ( 500. + (0.20 * sp.rows)) AS calcFlat

, (SQRT(1000. * sp.rows)) AS calcSqrt

, ca.lesser AS lesserOfTwo

, CASE

WHEN sp.rows <= 500

THEN 500.

ELSE CASE

WHEN d.compatibility_level < 130

THEN 500. + (0.20 * sp.rows)

WHEN d.compatibility_level >= 130

THEN ca.lesser

END

END AS threshold

FROM

sys.objects AS obj

INNER JOIN sys.stats AS stat

ON stat.object_id = obj.object_id

JOIN sys.stats_columns AS sc

ON sc.object_id = stat.object_id

AND sc.stats_id = stat.stats_id

AND sc.stats_column_id = 1

JOIN sys.columns AS c

ON c.object_id = obj.object_id

AND c.column_id = sc.column_id

CROSS APPLY sys.dm_db_stats_properties (stat.object_id, stat.stats_id) AS sp

JOIN sys.databases AS d

ON d.database_id = DB_ID()

CROSS APPLY

(

SELECT MIN(v.threshold) AS lesser

FROM

(

VALUES

( 500. + (0.20 * sp.rows))

, (sqrt(1000. * sp.rows))

) v (threshold)

) ca

WHERE

obj.is_ms_shipped = 0

AND obj.name LIKE 'Perma%'

ORDER BY sp.rows

OPTION (RECOMPILE, KEEPFIXED PLAN)

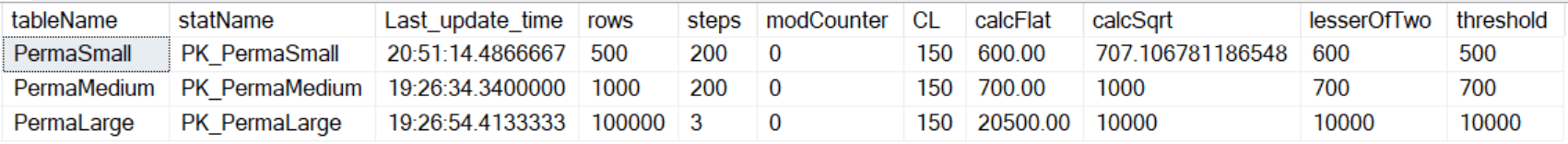

This is my output

Monitoring recompilations

I will use an Extended Events session to track the caching and recompilations. Here’s the definition.

CREATE EVENT SESSION [StatementCompilation] ON SERVER

ADD EVENT sqlserver.auto_stats

(

WHERE

[package0].[not_equal_uint64]([status],'Loading stats without updating')

AND

(

[sqlserver].[like_i_sql_unicode_string]([statistics_list],N'%Perma%')

OR [sqlserver].[like_i_sql_unicode_string]([statistics_list],N'%#Temp%')

)

),

ADD EVENT sqlserver.sp_cache_hit

(

SET

collect_object_name=1

, collect_plan_handle=1

WHERE [sqlserver].[like_i_sql_unicode_string]([object_name],N'Read%')

),

ADD EVENT sqlserver.sp_cache_insert

(

SET

collect_cached_text=1

, collect_database_name=1

, collect_plan_handle=1

ACTION

(

sqlserver.sql_text

, sqlserver.tsql_stack

)

WHERE

[sqlserver].[like_i_sql_unicode_string]([object_name],N'Read%')

),

ADD EVENT sqlserver.sp_statement_starting

(

SET

collect_object_name=1

, collect_statement=1

WHERE

[sqlserver].[like_i_sql_unicode_string]([object_name],N'Read%')

),

ADD EVENT sqlserver.sql_statement_recompile

(

SET

collect_object_name=1

, collect_statement=1

ACTION

(

sqlserver.query_hash_signed

)

WHERE

[sqlserver].[equal_i_sql_unicode_string]([sqlserver].[database_name],N'KeepPlan')

AND

(

[sqlserver].[like_i_sql_unicode_string]([statement],N'%(SELECT 0)%')

OR [sqlserver].[like_i_sql_unicode_string]([object_name],N'Read%')

)

),

ADD EVENT sqlserver.sql_statement_starting

(

SET collect_statement=1

WHERE

[sqlserver].[like_i_sql_unicode_string]([statement],N'%(SELECT 0)%')

OR [sqlserver].[like_i_sql_unicode_string]([statement],N'%FROM dbo.Nums%')

)

ALTER EVENT SESSION StatementCompilation ON SERVER STATE = START

Monitoring the plan cache

I will use this statement to query information about the plan cache and parse some interesting columns out of the XML:

WITH XMLNAMESPACES ('http://schemas.microsoft.com/sqlserver/2004/07/showplan' AS ns)

SELECT

decp.refcounts

, decp.usecounts

, ca1.si.value('@Statistics', 'nvarchar(255)') AS StatisticsName

, ca1.si.value('@ModificationCount', 'bigint') AS ModificationCount

, ca1.si.value('@SamplingPercent', 'bigint') AS SamplingPercent

, ca1.si.value('@LastUpdate', 'datetime2(3)') AS LastUpdate

, decp.plan_handle

, deqp.query_plan

FROM sys.dm_exec_cached_plans AS decp

CROSS APPLY sys.dm_exec_query_plan(decp.plan_handle) AS deqp

CROSS APPLY deqp.query_plan.nodes('//ns:OptimizerStatsUsage') AS ca(cr)

CROSS APPLY ca.cr.nodes('ns:StatisticsInfo') AS ca1(si)

WHERE OBJECT_NAME(deqp.objectid,DB_ID('KeepPlan')) LIKE 'Read%'

OPTION (RECOMPILE)

Testing the thresholds without the KEEP PLAN

Let’s start the XE session StatementCompilation and Watch Live Data.

First, we will clear the plan cache.

DBCC FREEPROCCACHE

Then execute the first procedure. Run it as a single statement within the batch and without the white space.

EXEC dbo.ReadSmall

In the Monitoring statistics, we saw that the threshold for the table PermaSmall was 500.

So we will do 499 modifications using this query:

/* Modify Perma tables */

;WITH nums

AS (SELECT TOP (499) n FROM dbo.Nums ORDER BY n)

UPDATE p

SET p.n = p.n /* fake modification */

FROM dbo.PermaSmall AS p /* Change table PermaSmall, PermaMedium, PermaLarge */

JOIN nums AS n ON p.n = n.n

OPTION (KEEPFIXED PLAN)

GO

I will use this query many times, the only difference being the referenced table and the number in the TOP clause.

The KEEPFIXED PLAN hint is there to prevent unwanted triggering of auto stats update.

- Let’s execute the Procedure again to see that it’s still in the cache.

- Then run the modification again, but now swapping the

TOP (499)forTOP (1) - And finally, rerun the Procedure - this should trigger the auto stats update and recompilation.

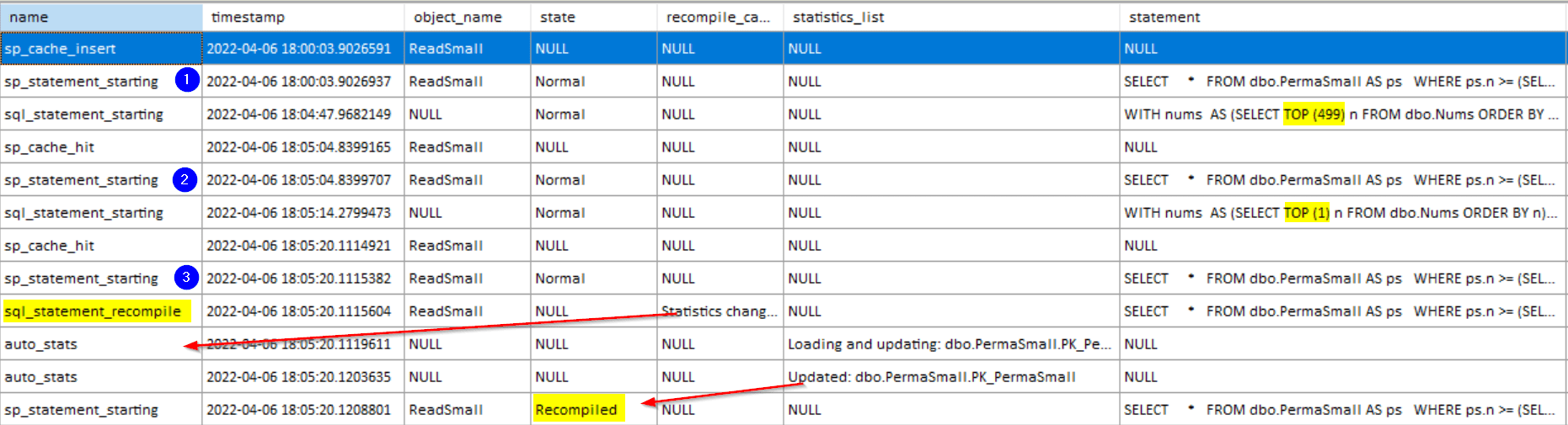

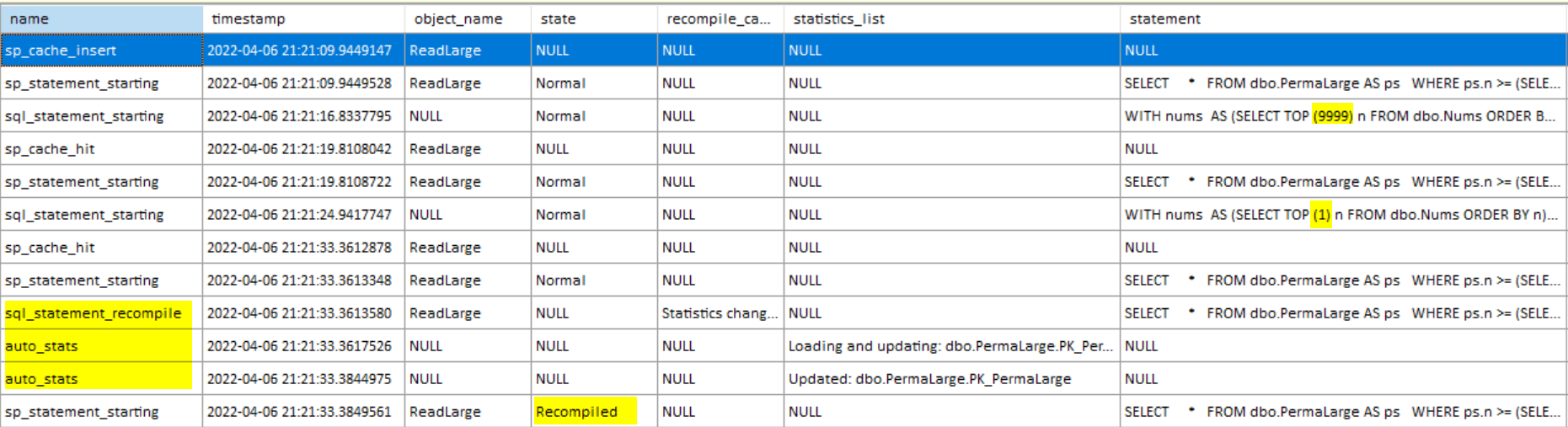

This is my output from the XE session.

- The (1), (2) and (3) are the executions of the procedure.

- After the first execution, I could see the update of 499 rows and that the plan was still in cache.

- After the second execution, I did yet another single modification.

- I can see that the cache had a hit again, but after sp_statement_starting, there is sql_statement_recompile with the cause Statistics changed.

- That triggers the Loading and updating of the PermaSmall table Statistics.

- After that, I see the sp_statement_starting again, but this time with state = Recompiled.

Testing again with a slight variation

I will repeat the test for the PermaMedium and PermaLarge, but first, let’s revisit the whitepaper from earlier.

There is an interesting excerpt about data modification tracking and its effect on the Recompilation Threshold (RT).

During query compilation, the query processor loads zero or more statistics defined on tables referenced in a query. These statistics are known as interesting statistics. For every table referenced in a query, the compiled query plan contains:

- A list of all of the statistics loaded during query compilation. For each such statistic, a snapshot value of a counter that counts the number of table modifications is stored. The counter is called colmodctr. A separate colmodctr exists for each table column (except computed non-persisted columns).

- The threshold crossing test — which is performed to decide whether to recompile a query plan — is defined by the formula:

ABS( colmodctr(current) – colmodctr(snapshot)) ) >= RT

I was not able to verify this in my testing.

Therefore, I will repeat the previous test with a slight variation. I will do, for example, 100 modifications to the statistics before I cache the plan.

If I plug the PermaMedium threshold and the snapshot value into the equation above:

ABS(colmodctr(current) - 100) >= 700

colmodctr(current) >= 800

That means I should be able to trigger a recompile after 800 modifications.

- Let’s clear the cache again with the

DBCC FREEPROCCACHE. - Run the modification code against PermaMedium with a value of 100.

- And then cache the plan for the ReadMedium stored procedure.

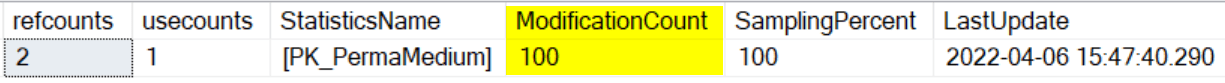

- We can check the plan cache for the snapshot value.

The following steps should be the same as previously:

- Do 599 modifications (We already have 100 modifications, and the threshold should be 700)

- Rerun the Procedure - cache hit

- Do 1 extra modification

- Rerun the Procedure - auto stats update + recompilation.

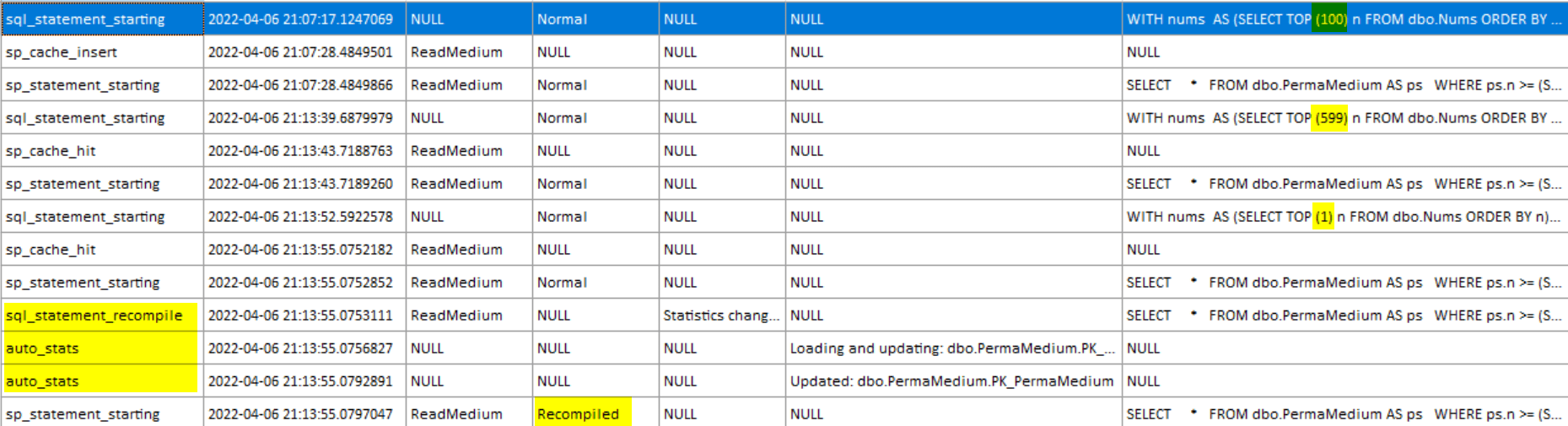

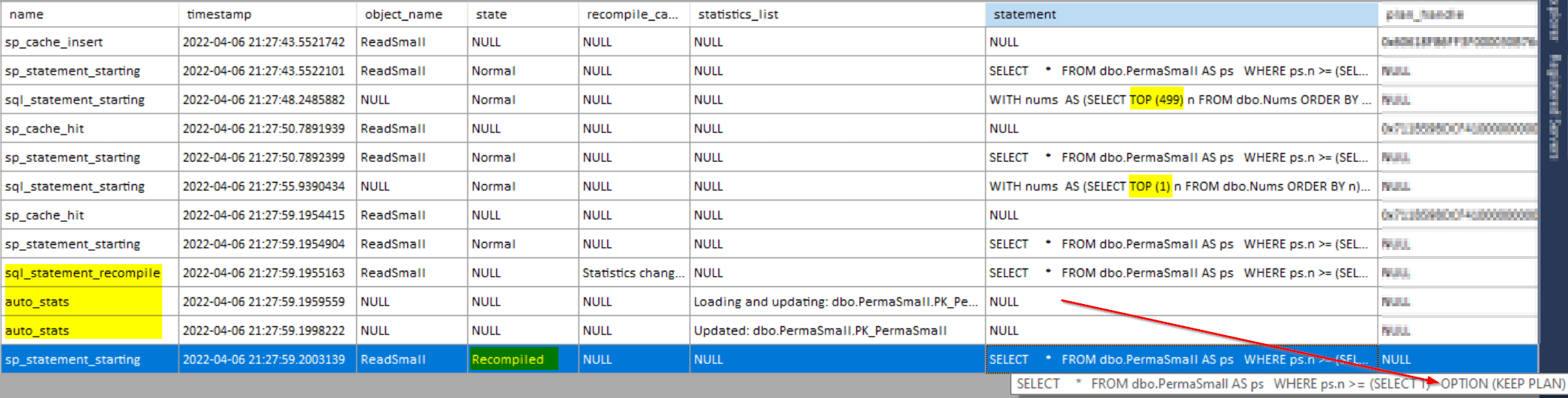

Here are my results:

We can see that I did the first 100 modifications before caching the plan.

But the stats were recompiled when hitting the original threshold of 700.

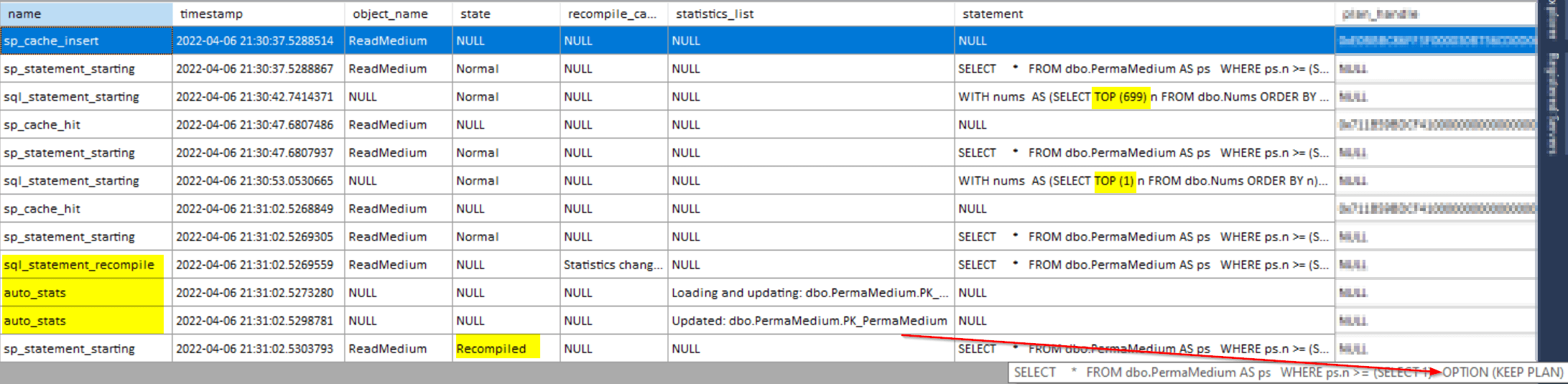

The last remaining test is the PermaLarge table. That’s to showcase the new SQRT threshold (instead of the old 20%).

I will only post the XE result:

Everything went as expected. Once we crossed the threshold of 10,000, auto stats update and recompile were fired.

Add a KEEP PLAN hint

I will change the definition of the three Stored Procedures by uncommenting the KEEP PLAN hint.

Then I’ll rerun the previous tests hoping that the recompilation thresholds have been “relaxed” and find out the new threshold values.

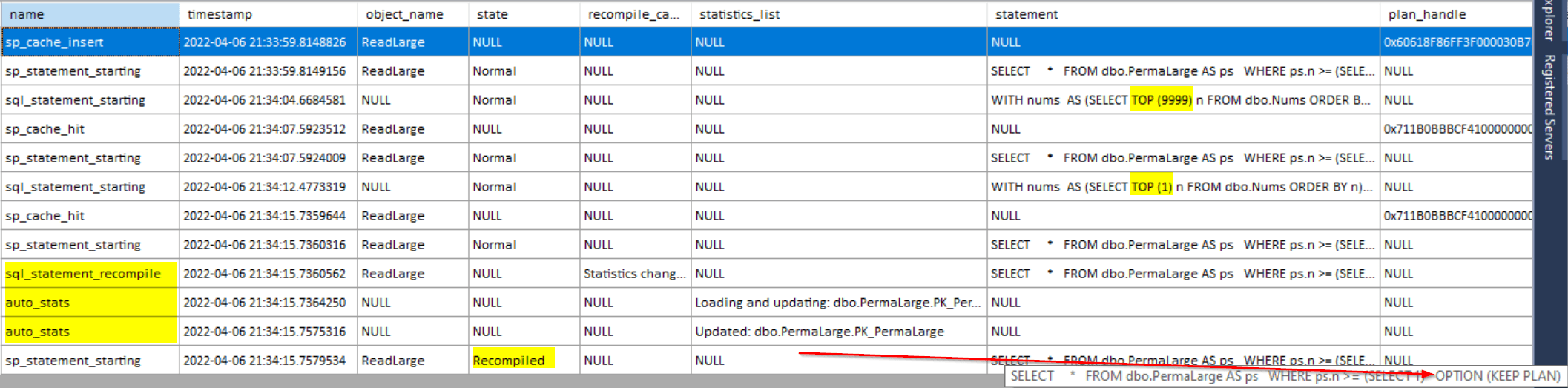

Here are the results

The thresholds are exactly the same as they were without the hint.

We can conclude that the KEEP PLAN does not affect Permanent tables.

But how about Temp tables? If you remember the quote from the whitepaper, it should change the Temporary tables threshold to be the same as permanent tables.

Temp table thresholds

This took me a bit longer to test because there are some subtle differences.

First of all, I won’t be running it in a Stored Procedure because of the additional complexity of the Temp table caching and Deferred compilations.

I create the Temp table in one batch and run the statement in another one.

I do both in the same session because of the Temp table scope.

CREATE TABLE #TempTable

(

n int

)

INSERT INTO #TempTable (n)

SELECT TOP (5) n FROM dbo.Nums ORDER BY n

The statement to be cached:

GO

SELECT * FROM

#TempTable AS tt

WHERE tt.n > (SELECT 0)

--OPTION (KEEP PLAN)

GO

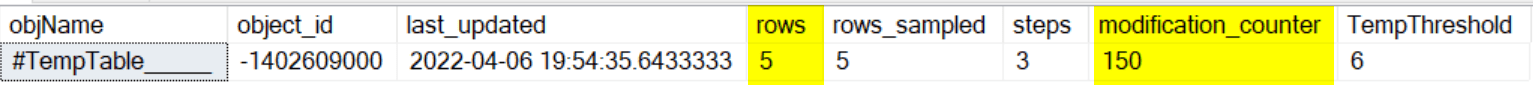

I use this statement to check the #TempTable stats (must be run in the tempdb)

USE tempdb

SELECT

LEFT(obj.name, 15) AS objName,

obj.object_id,

sp.last_updated,

sp.rows,

sp.rows_sampled,

sp.steps,

sp.modification_counter,

CASE WHEN sp.rows < 6 THEN '6'

WHEN sp.rows BETWEEN 6 AND 500 THEN '500'

ELSE 'Same as perma table'

END AS TempThreshold

FROM

sys.objects AS obj

INNER JOIN sys.stats AS stat

ON stat.object_id = obj.object_id

JOIN sys.stats_columns AS sc

ON sc.object_id = stat.object_id

AND sc.stats_id = stat.stats_id

AND sc.stats_column_id = 1

JOIN sys.columns AS c

ON c.object_id = obj.object_id

AND c.column_id = sc.column_id

CROSS APPLY sys.dm_db_stats_properties (stat.object_id, stat.stats_id) AS sp

WHERE

obj.is_ms_shipped = 0

AND obj.type = 'U'

ORDER BY sp.rows

OPTION (RECOMPILE, KEEPFIXED PLAN)

But with a similar update statement, I couldn’t reproduce the threshold recompilation.

I have only 5 rows and need 6 modifications to trigger the recompile (according to Docs).

So I thought maybe I’ll do multiple passes over the same data

This update did not work for me.

/* Modify Temp tables */

;WITH nums

AS (SELECT TOP (5) n FROM dbo.Nums ORDER BY n)

UPDATE t

SET t.n = t.n /* fake modification */

FROM #TempTable AS t

JOIN nums AS n ON t.n = n.n

OPTION (KEEPFIXED PLAN)

GO 30

The GO 30 will repeat the batch 30 times.

The stats showed several modifications.

And even in XE, I couldn’t see auto stats update or recompile

I’ve tried deleting and reinserting the rows - no result as well.

At last, I finally made it work by just inserting new rows.

Let’s restart the test - clear the cache and drop and recreate the Temp table.

This time I will initialize it with 3 rows. It doesn’t matter as long as it’s below 6.

DBCC FREEPROCCACHE

DROP TABLE IF EXISTS #TempTable

CREATE TABLE #TempTable

(

n int

)

INSERT INTO #TempTable (n)

SELECT TOP (3) n FROM dbo.Nums ORDER BY n

GO

- Run the same statement as previously to cache it.

- Then add 5 new rows (1 below the threshold)

- Run the statement to see there is no recompilation

- Add 1 more row

- Run the statement and see auto stats update and recompilation

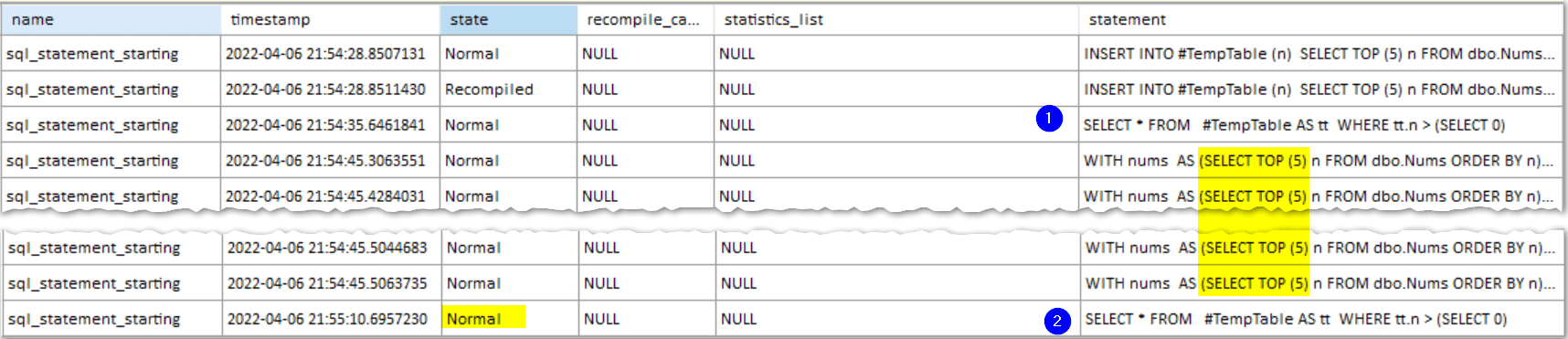

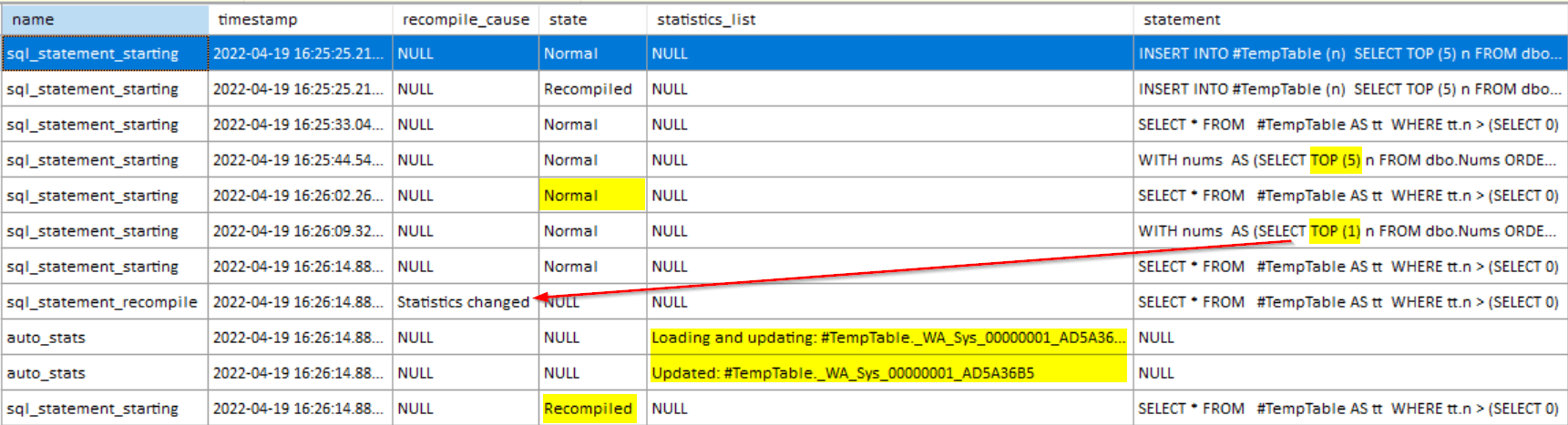

Here is the XE result

Now that we can reproduce the recompilation threshold let’s reset and rerun the test but this time, let’s uncomment the KEEP PLAN hint.

We can see that after the 6 modifications, there was no recompile. I’ve inserted additional 493 rows, bringing it to 499 total modifications.

There was still no recompile.

Adding another row carried it over the new threshold of 500 and finally triggered the auto stats.

Finally, the KEEP PLAN hint actually changed something. Let’s test for the larger Temp tables.

Conclusion

But wait! Do you remember the threshold table from the beginning?

Once the Temp table has more than 5 rows, it has the same threshold as the Permanent table.

That means that the KEEP PLAN hint has no effect again!

KEEP PLAN - I hereby dub thee the worst query hint I know.

Be right back; I have to create a Pull Request and fix the hint’s description in the SQL Docs.

Edit (2022/04/19): Here’s the Pull Request.

Update

(2022/04/19): Paul solved another mystery for me.

Remember when I couldn’t force the Temp table recompilations with modifications only and had to insert new rows? The reason for this is an optimization called Reduced recompilations for workloads using temporary tables across multiple scopes.

Because I’m on Compatibility Level 150, I can turn it off with these trace flags.

DBCC TRACEON (11036, -1); -- reduce recompilations

DBCC TRACEON (11048, -1); -- reduce recompilations 150

For brevity, I’ll only post the test results. The test case is the same as the initial Temp table test.

Here’s the Temp table stats modification counter after 5 updates.

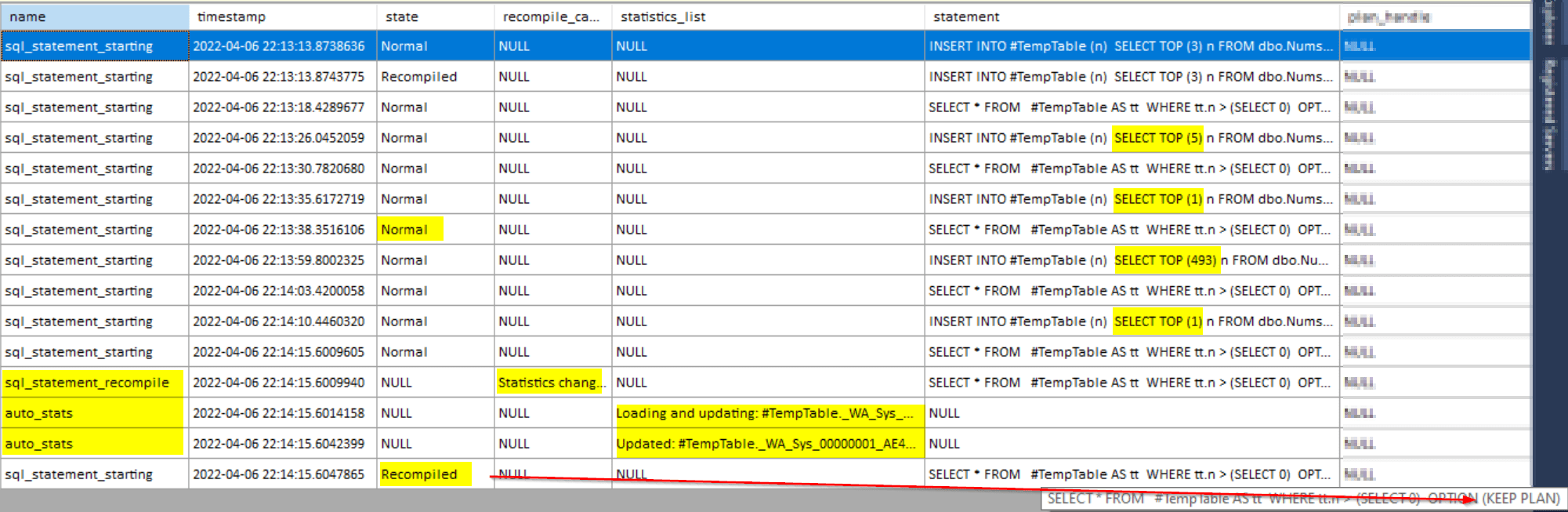

And here’s the full Extended Events output.

We can see that it recompiles after 6 modifications while keeping the cardinality of 5.

Then I clear the proc cache, recreate the Temp table and repeat the test with the KEEP PLAN hint.

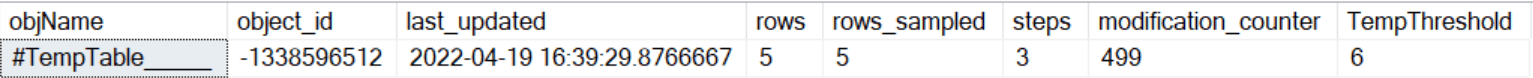

The Temp table stats modification counter after 499 updates.

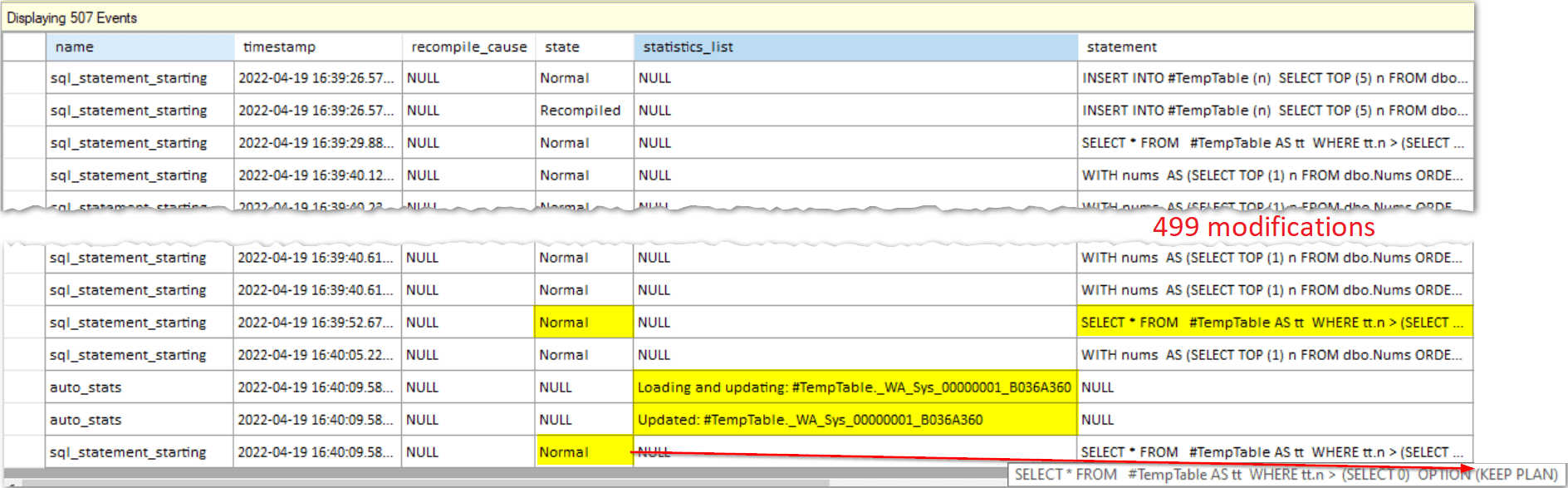

The full Extended Events output.

- Since I have only 5 rows in the Temp table, I’ve repeated a modification to one row 499 times.

- After that, I rerun the query to see the recompile hadn’t been triggered.

- One more modification and rerunning the query triggers the auto_stats

The weird thing is that even after that, the sql_statement_starting state is still Normal and not Recompiled.

Don’t forget to turn off the trace flags.

DBCC TRACEOFF (11036, -1);

DBCC TRACEOFF (11048, -1);

Acknowledgements

Paul White (t), who gave me useful tips when I was stuck. And who also written these fantastic blog posts:

And these articles: