By Steve Bolton

…………Since this series on using SQL Server to implement the whole gamut of information metrics is wide-ranging in nature, it will also be somewhat disorganized by necessity; this is doubly true, given that I’m writing it in order to learn the material faster, not because I’m already intimately familiar with these topics. Nonetheless, I’m trying to provide what structure I can along the way, including segregating the most important entropy measures of information theory in this early segment. I’m slowly ratcheting up the level of complexity within this section by introducing simpler concepts first and using them as stepping stones to more difficult ones, yet this week’s topic differs in the manner of its complexity from that of the previous article. The foray we took into thermodynamic entropies in the last post was difficult due to the depth of the subject matter whenever definitions of qualities like “randomness” and “order” are in play. I put off discussing Conditional and Joint Entropy until this post because their complexity is of a different type; basically, they just involve binary comparisons of entropy rather than the simple unary metrics I introduced in Information Measurement with SQL Server, Part 2.1: The Uses and Abuses of Shannon’s Entropy and Information Measurement with SQL Server, Part 2.2: The Rényi Entropy and Its Kin. They’re among the first topics discussed in texts on information theory and coding theory and shouldn’t be too difficult to fathom, if readers could swallow the last two installments in this series. Coding them is not terribly difficult, although they do present some challenges in terms of performance and interpretation.

…………One of the benefits of reading my amateur self-tutorials is that you get sample T-SQL code and a few paragraphs of commentary, which basically saves the hassle of having to consult gigantic math tomes chock full of arcane equations. Data miners shouldn’t have to give a dissertation on the theorems and lemmas that justify the underlying algorithms, any more than a commuter should be required to give a dissertation on automotive engineering in order to get a license. To that end, I usually remove all of the associated equations to suit my target audience of SQL Server DBAs and data miners, who can probably grasp them much more easily in T-SQL form. I broke that rule in the article on Shannon’s Entropy for a sound reason and this time around, I’ll bend it a little in order to clear up some possible sources of confusion about probability notation. Symbols like P(A|B) denote a conditional probability, which signifies, “Given a value for B, what is the probability of A?” Joint probability is denoted by P(A,B) or P(AB), which can be read as, “What is the probability of two specific values for A and B occurring together?” Both concepts have counterparts in information theory, where we simply replace the P with the H symbol to denote entropy rather than probability. This may come in handy if readers want to try one of several alternative formulas for arriving at the same figures with these entropies, or want to double-check my sample T-SQL in Figure 1 (which is always a wise idea, since I don’t yet have any real expertise in these subjects).
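For readers who do want to check the T-SQL against the textbook notation, the standard definitions can be sketched as follows. The symbols are illustrative assumptions rather than anything fixed by the code: X and Y stand in for the A and B above, p denotes the proportions used as probabilities and b is the logarithm base.

```latex
\begin{align*}
H(X) &= -\sum_{x} p(x) \log_b p(x) \\
H(X,Y) &= -\sum_{x,y} p(x,y) \log_b p(x,y) \\
H(Y \mid X) &= -\sum_{x,y} p(x,y) \log_b p(y \mid x),
  \qquad p(y \mid x) = \frac{p(x,y)}{p(x)}
\end{align*}
```

The first line is the ordinary Shannon's Entropy of a single column, the second applies the same summation to pairs of values and the third weights the uncertainty about Y by how often each pair occurs.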

Unit and Scaling Issues in the Sample T-SQL

…………I chose the methods for calculating both entropies that I believed would mesh well with the code I posted a few articles ago for Shannon’s Entropy. Although Figure 1 is somewhat lengthier than the sample T-SQL in that post, the same basic format is at work. Once again, I include a @LogarithmBase parameter so that users can easily switch between base 2, base 10 and Euler’s Number to derive the three main units used for entropy: bits (i.e. shannons), bans (i.e. hartleys) and nats respectively. The INSERT INTO populates a table variable by selecting from the same Higgs Boson dataset, which is ideal for stress-testing the kind of routines I’ve been posting for the last few tutorial series, since it has 11 million rows of mainly float columns.[1] Shannon’s Entropy is derived in precisely the same way as before by using intermediate table variable columns like SummationInput1, except that it must be done for two of the dataset’s float columns this time around. The tricky part is the use of the GROUP BY CUBE statement, which allows us to simultaneously calculate the individual and joint proportions of both columns without traversing the table repeatedly. The GROUPING_ID conditions derived from it are used to distinguish the aggregates of the two columns and their joint proportions in the CASE statements, which were not necessary in the original sample T-SQL for Shannon’s Entropy. We likewise need to take two additional counts in this routine, since we need to perform calculations on an additional column and its joint distribution with the other; in this particular instance Count1 and Count2 are equal to the count of the whole dataset, i.e. the JointCount, simply because the Higgs Boson dataset has no null values for these columns. This is not going to be the case with many other datasets.
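To see what GROUP BY CUBE and GROUPING_ID return before they are applied to 11 million rows, here is a minimal sketch on a four-row toy table. The names @Toy, A and B are hypothetical and exist only for this illustration.

```sql
-- Hypothetical toy data; @Toy, A and B are illustrative names only
DECLARE @Toy table (A int, B int);
INSERT INTO @Toy (A, B) VALUES (1, 10), (1, 10), (1, 20), (2, 20);

SELECT A, B, COUNT(*) AS ValueCount,
       GROUPING_ID(A) AS GroupingIDA, -- 1 when A has been aggregated away
       GROUPING_ID(B) AS GroupingIDB  -- 1 when B has been aggregated away
FROM @Toy
GROUP BY CUBE (A, B)
ORDER BY GroupingIDA, GroupingIDB, A, B;
```

The rows where both grouping columns equal 0 hold the joint counts for each (A, B) pair. Those with GroupingIDA = 0 and GroupingIDB = 1 hold the counts for A alone, those with GroupingIDA = 1 and GroupingIDB = 0 hold the counts for B alone, and the single row where both equal 1 is the grand total. This is the same pattern the CASE statements in Figure 1 rely on to separate Proportion1, Proportion2 and JointProportion.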

…………It is also good to keep in mind that I’m once again cheating a little by using known proportions as probability values, which could also be derived from alternative sources like sampling, probability distribution formulas and deductive reasoning about the underlying processes, as is possible with simple examples like dice and card games. Most of the texts I’ve read on information theory and coding theory begin with the same dice and card examples, but in most real-world applications, the underlying processes are far too complex to reason them out in advance. As I mentioned in the articles on the Hartley Function and thermodynamic entropies, in some cases it may be necessary to calculate probabilities across all possible values of a particular column, but that can get messy since it involves taking permutations and combinations over large data types. There’s simply no way we’re going to cram all 10^38 + 1 values that are permissible in a decimal(38,0) column into standard combinatorics formulas, given that the highest value we can use for factorials is about 170 before a float overflows. This is an inherent limitation of SQL Server’s data types, which actually do a much better job at this than many of its competitors in the data mining market, like Minitab and WEKA. By using existing proportions, we can eliminate millions of values that are not found in the dataset, but for which we might have to assign nonzero values if we were using a uniform distribution or the like. It is worthwhile to note though that this kind of subtle fudging is more appropriate to the sizes of the tables used in relational databases and cubes than in ordinary scientific analysis, since we have extensive counts that can be leveraged in this manner. Our roles are almost reversed: it is far easier for DBAs and Analysis Services users to leverage actual counts of this kind, whereas it is much easier for scientists to perform calculations involving permutations, factorials and the like.
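The factorial ceiling mentioned above is easy to reproduce. The following is a minimal sketch, with hypothetical variable names, that accumulates 170! in a float, the widest approximate numeric type SQL Server offers.

```sql
-- Hypothetical variable names; accumulates 170! in a float
DECLARE @n int = 1, @Factorial float = 1;

WHILE @n <= 170
BEGIN
    SET @Factorial = @Factorial * @n;
    SET @n = @n + 1;
END

-- 170! is roughly 7.257E+306, just under the float maximum of about 1.79E+308
SELECT @Factorial AS Factorial170;
```

Multiplying once more by 171 would exceed the float maximum, which is why combinatorics formulas over the full range of a decimal(38,0) column are out of reach without resorting to logarithms or approximations.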

…………I also took the easy way out in setting the table variable data types, since Figure 1 is merely intended to convey the underlying concepts rather than to serve as production code. Values1 and 2 in the table variable are set to decimal(33,29) because that’s the precision and scale of the original columns, whereas the proportions are set to decimal(38,37), since they should never exceed 1 and therefore require just 1 digit to the left of the decimal place. The SummationInput columns are set to (38,21) arbitrarily, since I’m familiar with these two columns and was certain that this was enough space to accommodate them, while retaining enough precision to get the point across; I could have used floats, but kept getting annoying floating point rounding errors at lower precisions than I expected. The validation code in the middle of the routine can be uncommented if users want to inspect the table variable’s individual values or make sure that the proportions sum to 1; these checks may reveal a few rounding errors that I haven’t yet been able to track down and weed out, but they’re occurring at trivial precision values and don’t really detract from the lessons. The good news is that once we calculate either the Joint Entropy or Conditional Entropy, it is trivial to derive the other using the Chain Rule[2], which involves some simple subtraction or addition operations. We therefore get two metrics for the price of one. I wagered that it would be less costly to derive the Joint Entropy first, since it can be done alongside the individual Shannon’s Entropy values less awkwardly than the Conditional Entropy can through alternative formulas.
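For reference, the Chain Rule can be written out as below. X and Y are assumed stand-ins for Column1 and Column2, which is a labeling choice made for this sketch rather than anything dictated by the dataset.

```latex
\begin{align*}
H(X,Y) &= H(X) + H(Y \mid X) = H(Y) + H(X \mid Y) \\
H(Y \mid X) &= H(X,Y) - H(X) \\
H(X \mid Y) &= H(X,Y) - H(Y)
\end{align*}
```

The last two lines are the subtractions that produce ConditionalEntropyOfBGivenA and ConditionalEntropyOfAGivenB in the final SELECT of Figure 1.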

Figure 1: T-SQL for Joint and Conditional Entropy

DECLARE @LogarithmBase decimal(38,36)

SET @LogarithmBase = 2 -- 2.7182818284590452353602874713526624977 -- 10

DECLARE @Count1 bigint, @Count2 bigint, @JointCount bigint, @ShannonsEntropy1 float, @ShannonsEntropy2 float, @JointEntropy float

SELECT @Count1=Count(*)

FROM DataMiningProjects.Physics.HiggsBosonTable

WHERE Column1 IS NOT NULL

SELECT @Count2=Count(*)

FROM DataMiningProjects.Physics.HiggsBosonTable

WHERE Column2 IS NOT NULL

SELECT @JointCount=Count(*)

FROM DataMiningProjects.Physics.HiggsBosonTable

WHERE Column1 IS NOT NULL AND Column2 IS NOT NULL

DECLARE @EntropyTable table

(Value1 decimal(33,29),

Value2 decimal(33,29),

ValueCount bigint,

Proportion1 decimal(38,37),

Proportion2 decimal(38,37),

JointProportion decimal(38,21),

SummationInput1 decimal(38,21),

SummationInput2 decimal(38,21),

JointSummationInput decimal(38,21)

)

INSERT INTO @EntropyTable

(Value1, Value2, ValueCount, Proportion1,Proportion2, JointProportion, SummationInput1, SummationInput2, JointSummationInput)

SELECT Value1, Value2,ValueCount, Proportion1,Proportion2, JointProportion,

Proportion1 * Log(Proportion1, @LogarithmBase) AS SummationInput1,

Proportion2 * Log(Proportion2, @LogarithmBase) AS SummationInput2,

JointProportion * Log(JointProportion, @LogarithmBase) AS JointSummationInput

FROM (SELECT Value1, Value2,ValueCount,

CASE WHEN GroupingIDColumn1 = 0 AND GroupingIDColumn2 = 1 THEN ValueCount / CAST(@Count1 AS float) ELSE NULL END AS Proportion1,

CASE WHEN GroupingIDColumn1 = 1 AND GroupingIDColumn2 = 0 THEN ValueCount / CAST(@Count2 AS float) ELSE NULL END AS Proportion2,

CASE WHEN GroupingIDColumn1 = 0 AND GroupingIDColumn2 = 0 THEN ValueCount / CAST(@JointCount AS float) ELSE NULL END AS JointProportion,

GroupingIDColumn1, GroupingIDColumn2

FROM (SELECT Column1 AS Value1, Column2 AS Value2, Count(*) AS ValueCount, GROUPING_ID(Column1) AS GroupingIDColumn1, GROUPING_ID(Column2) AS GroupingIDColumn2

FROM DataMiningProjects.Physics.HiggsBosonTable

WHERE Column1 IS NOT NULL AND Column2 IS NOT NULL

GROUP BY CUBE (Column1, Column2)) AS T1) AS T2

-- for validation

--SELECT * FROM @EntropyTable

--SELECT SUM(Proportion1), SUM(Proportion2), SUM(JointProportion)

--FROM @EntropyTable

SELECT @ShannonsEntropy1 = SUM(SummationInput1) * -1,

@ShannonsEntropy2 = SUM(SummationInput2) * -1,

@JointEntropy = SUM(JointSummationInput) * -1

FROM @EntropyTable

SELECT @ShannonsEntropy1 AS ShannonsEntropyForX, @ShannonsEntropy2 AS ShannonsEntropyForY, @JointEntropy AS JointEntropy,

@ShannonsEntropy1 + @ShannonsEntropy2 AS SumOfIndividualShannonEntropies,

@JointEntropy - @ShannonsEntropy1 AS ConditionalEntropyOfBGivenA,

@JointEntropy - @ShannonsEntropy2 AS ConditionalEntropyOfAGivenB

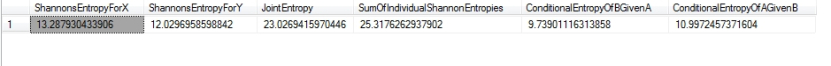

Figure 2: Results from the Higgs Boson Dataset

…………Both Joint and Conditional Entropy must meet a bevy of validity tests, which the results in Figure 2 pass with flying colors. First, the Shannon’s Entropy for the first float column is identical to the results we received in previous articles. Likewise, the Joint Entropy is greater than the individual entropies of both columns, but less than their sum, as indicated by SumOfIndividualShannonEntropies.[3] As expected, the ConditionalEntropyOfBGivenA is below that of the Shannon’s Entropy for the second column and ConditionalEntropyOfAGivenB is below that of the first. One unexpected result was that the JointEntropy is close to the output of the multiset versions of the Hartley function I coded in Information Measurement with SQL Server, Part 1: A Quick Review of the Hartley Function, which returned 23.2534966642115 and 23.3910011060398 respectively. This may merely be a fluke, since Column2 has a very different distribution and the Hartley function only took Column1 into account in that particular article. Moreover, I tested this procedure on other combinations of float columns in the same dataset and received results between 22 and 23 most of the time, even when Column1 wasn’t being tested at all. I’m not yet well-versed enough in these matters to say if this indicates that we could derive additional insights from our data, by comparing each column’s Hartley function against the Joint Entropy.
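The same validity tests can be checked by hand on a dataset small enough to reason about. The following is a condensed sketch rather than the routine in Figure 1: the table variable @Toy, its columns A and B and the CTE names are all hypothetical, and it uses floats and base 2 throughout for brevity.

```sql
-- Hypothetical toy data; @Toy, A and B are illustrative names only
DECLARE @Toy table (A int, B int);
INSERT INTO @Toy (A, B) VALUES (1, 10), (1, 10), (1, 20), (2, 20);

DECLARE @Total float = (SELECT COUNT(*) FROM @Toy);

WITH Counts AS
(
    -- one pass yields the joint proportions, both sets of marginals and a grand total
    SELECT COUNT(*) / @Total AS Proportion,
           GROUPING_ID(A) AS GroupingIDA,
           GROUPING_ID(B) AS GroupingIDB
    FROM @Toy
    GROUP BY CUBE (A, B)
),
Entropies AS
(
    -- each CASE keeps only the rows belonging to one of the three distributions
    SELECT -SUM(CASE WHEN GroupingIDA = 0 AND GroupingIDB = 1
                     THEN Proportion * LOG(Proportion, 2) END) AS EntropyA,
           -SUM(CASE WHEN GroupingIDA = 1 AND GroupingIDB = 0
                     THEN Proportion * LOG(Proportion, 2) END) AS EntropyB,
           -SUM(CASE WHEN GroupingIDA = 0 AND GroupingIDB = 0
                     THEN Proportion * LOG(Proportion, 2) END) AS JointEntropy
    FROM Counts
)
SELECT EntropyA, EntropyB, JointEntropy,
       EntropyA + EntropyB AS SumOfIndividualEntropies,
       JointEntropy - EntropyA AS ConditionalEntropyOfBGivenA,
       JointEntropy - EntropyB AS ConditionalEntropyOfAGivenB
FROM Entropies;
```

Working it out by hand, A takes its two values with proportions 3/4 and 1/4, B with 1/2 and 1/2, and the three observed pairs with 1/2, 1/4 and 1/4. That gives roughly 0.811 bits for A, 1 bit for B and 1.5 bits for the Joint Entropy, which sits above both individual entropies and below their sum of about 1.811. The conditional entropies come out to roughly 0.689 for B given A and 0.5 for A given B, each below the Shannon's Entropy of the column being predicted.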

…………The performance implications are of more immediate concern, of course. These two columns have 9,119,674 distinct combinations between them, which required a few gigabytes of TempDB space for spooling; SQL Server also gobbled up a few more gigs of RAM during these calculations, so it is probably wise to keep ample memory at hand. The good news is that this code ran in just 1:46, which is much better than I expected for multiple calculations on two float columns across 11 million rows. I have to perform these kinds of calculations on a beat-up clunker of a development machine that routinely falls apart like the Blues Brothers’ car, so the results on professional hardware are likely to be several orders of magnitude better. They could also probably benefit from a tune-up in the hands of a qualified T-SQL expert, which I am not. I’ve always had a little trouble with grouping statements, so there may be more efficient ways to write this; I doubt that would involve calculating the counts in the INSERT though, even though this could be done, albeit more awkwardly than taking them in advance. The INSERT statement accounted for 96 percent of the batch and 46 percent of those costs were incurred in a single Sort, so that operator might be a good target for performance tweaks. Another 49 percent was locked up in a single Table Insert operator, which probably can’t be dispensed with through optimization. The execution plan was short and otherwise uneventful though, since seeks and scans on the nonclustered indexes of the two columns did the bulk of the heavy lifting.

Interpretations and Use Cases for Joint and Conditional Entropies

…………So why bother going to the trouble of calculating these metrics at all? There’s no point in expending any server resources without first answering Aristotle’s causa finalis. There are several different categories of use cases for these measures, some of which are really straightforward: whenever any question arises in any data model concerning the “news value” of one column given a value for another, or of a state description with two specific values for those columns, these are usually the measures we’d turn to. Conditional Entropy answers the fundamental question “How much can knowing a value of Y tell us about X?” whereas Joint Entropy measures the same thing as Shannon’s Entropy, except for two or more columns. As I’ve emphasized throughout this series, interpretation is a critical stage in any workflow involving information theory metrics, and in the case of these binary entropies, their subtle implications give rise to a whole additional class of use cases. For example, knowing Y can tell us everything about X if the value of Conditional Entropy is zero, but progressively less as the value rises; in this roundabout way, it becomes a sort of measure of association by proxy, which might be integrated with more familiar measures like correlation, covariance and regression. Conditional Entropy is also sometimes referred to as “equivocation,” especially when it is interpreted as a conditional uncertainty. If we were speaking strictly of the original application of information theory to communication channels, then we could view a change in equivocation as a “decrease of uncertainty as to what message might have been enciphered.”[4] It is also used to “justify the definition for channel capacity.”[5] Wendell R. Garner’s excellent but largely unnoticed work from 1962, Uncertainty and Structure as Psychological Concepts, is chock full of formulas in which Conditional Entropy can be transmuted into various measures of uncertainty interactions[6], which are akin to the interaction effects in analysis of variance (ANOVA). These might be useful in the kind of “uncertainty management” programs I delved into in the Implementing Fuzzy Sets with SQL Server series. Some of these related measures, like conditional and contingent uncertainty, can be used to quantify such qualities as redundancy, the relationships between variables and the amount of information transmitted between them.[7] The math is rather thick but Garner’s arguments are sufficiently rigorous for demonstrating connections between Conditional Entropy and both “irrelevant information”[8] and patterns: as he puts it, “structure is related uncertainty…structure is still the important kind of uncertainty, and unstructured uncertainty is equivocation, or noise, or error, whichever you prefer. It is uncertainty which is unrelated to another uncertainty.”[9] It also has subtle relationships with measures of “irony”[10] and a priori knowledge.[11]

…………The third class of use cases for these entropies involves using them to calculate other information metrics, just as we derived Conditional Entropy from Joint Entropy in this article. In like manner, Joint Entropy can be useful as a stepping stone to Mutual Information, one of the most important measures in information theory. It would thus be wise to have it in our toolbelts, if we want to go beyond what the existing software can do and do some wildcat data mining, using DIY code for metrics and algorithms that haven’t been implemented yet. One of the surprises I’ve encountered while trying to learn these fields in recent years has been the sheer size of the yawning gap between the research and theory that undergirds data mining and the available software, which is in some respects decades behind the theoreticians. Only a fraction of the available measures and techniques are available anywhere in the analytics marketplace. Since this situation is likely to last for a long time to come, it may be helpful to acquire the skills to develop our own DIY solutions, which is where posts like this might prove useful (perhaps as cautionary tales against making the same amateur mistakes). To that end, I’ll also address a couple of up-and-coming metrics known as Shared Information Distance and Lautum Information, both of which are related to the more established topic of Mutual Information. It would be feasible to take this segment of the series on a whole array of tangents, including coding all of the information theory counterparts of probabilistic concepts like multiple outcomes, independent events, mutually exclusive events, non-exclusive, unordered pairs and compound events. All of these have equivalent implementations and ramifications in terms of entropy, but I’ll restrict my scope to actual information metrics in keeping with the title of the series, rather than all of the calculations and principles that can be derived from them. 
In the next article I’ll also dispense with Self Information, which is the entropic counterpart to probabilities for a single record. I’ll complete my wrap-up of this segment of the Information Measurement series with brief discussions of Entropy Rate and Information Rate, which are fairly simple to code.
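As a preview of how Joint Entropy serves as a stepping stone, the standard identities for Mutual Information are sketched below, again assuming X and Y as stand-ins for the two columns.

```latex
\begin{align*}
I(X;Y) &= H(X) + H(Y) - H(X,Y) \\
       &= H(X) - H(X \mid Y) = H(Y) - H(Y \mid X)
\end{align*}
```

In terms of the output of Figure 1, the first line amounts to subtracting JointEntropy from SumOfIndividualShannonEntropies.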

[1] I downloaded this ages ago from the University of California at Irvine’s Machine Learning Repository and converted it to a SQL Server table, which now takes up about 5 gigabytes of space in a sham DataMiningProjects database.

[2] See the Wikipedia pages “Conditional Entropy” and “Entropy (Information Theory)” at http://en.wikipedia.org/wiki/Conditional_entropy and http://en.wikipedia.org/wiki/Entropy_(information_theory) respectively.

[3] See the Wikipedia page “Joint Entropy” at http://en.wikipedia.org/wiki/Joint_entropy

[4] p. 272, Pierce, John Robinson, 1980, An Introduction to Information Theory: Symbols, Signals & Noise. Dover Publications: New York. Also see Pierce, John Robinson, 1961, Symbols, Signals and Noise: The Nature and Process of Communication. Harper: New York.

[5] pp. 49-62, Mansuripur, Masud, 1987, Introduction to Information Theory. Prentice-Hall: Englewood Cliffs, N.J.

[6] p. 106, Garner, Wendell R., 1962, Uncertainty and Structure as Psychological Concepts. Wiley: New York.

[7] IBID., pp. 96, 136.

[8] IBID., p. 316.

[9] IBID., p. 339.

[10] p. 46, Ritchie, L. David., 1991, Information. Sage Publications: Newbury Park, Calif.

[11] p. 19, Brillouin, Léon, 1962, Science and Information Theory. Academic Press: New York.