I didn’t expect anything for free in index maintenance. After all, it takes a lot of CPU and transaction log space to remove fragmentation.

Let’s talk about two things that SQL Server does for us: one statistic is created per index we create, and when we rebuild that index, we get fresh statistics.

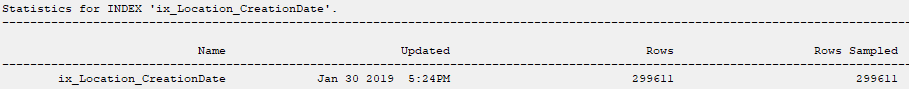

For all the time we spend rebuilding our indexes, the big gain we get is fresh statistics and those statistics are made with full scan.

Let’s get some proof

Using StackOverflow2010, here’s my index.

CREATE NONCLUSTERED INDEX ix_Location_CreationDate ON dbo.Users (Location, CreationDate) INCLUDE (Reputation); GO

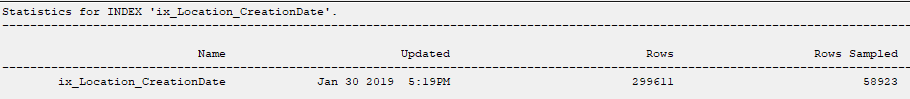

Now, let’s update the statistics with the automatic sample size.

UPDATE STATISTICS Users (ix_Location_CreationDate);

I say that index needs to be rebuilt, at least for the purposes of this demo.

ALTER INDEX ix_Location_CreationDate ON dbo.Users REBUILD;

Now taking a second look at the statistics:

Moral of the post

Statistics are automatically updated with the default sample size when a certain amount of data changes. I won’t talk about what that certain amount is, because it changes in major versions of SQL Server. What that means to us is that data changes can occasionally change statistics, which can mean worse performance.

Then, when the index maintenance jobs run and rebuild the indexes, the statistics get a full sample of the data. This means that your performance might improve from fresh statistics alone.

Stay tuned!