- The data it shows acts as a measuring stick for your performance tuning changes.

- It provides a good way of isolating the query changes you are making from other changes that may be happening on the server.


So what does STATISTICS IO data look like?

To show IO statistics on your query, you first need to execute:

    -- This applies to your current session only
    SET STATISTICS IO ON;
    GO

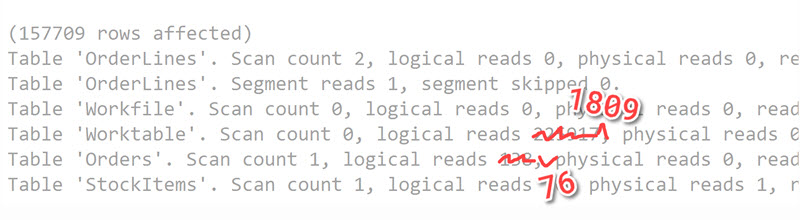

After running a query with the above setting turned on, check SQL Server Management Studio’s Messages tab to see output that looks something like this:

    (157709 rows affected)
    Table 'OrderLines'. Scan count 2, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 159, lob physical reads 0, lob read-ahead reads 0.
    Table 'OrderLines'. Segment reads 1, segment skipped 0.
    Table 'Workfile'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.
    Table 'Worktable'. Scan count 0, logical reads 225917, physical reads 0, read-ahead reads 0, lob logical reads 225702, lob physical reads 0, lob read-ahead reads 0.
    Table 'Orders'. Scan count 1, logical reads 138, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.
    Table 'StockItems'. Scan count 1, logical reads 16, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

The key things that my eyes are drawn to on initial examination of the STATISTICS IO output are the following:

- Logical reads: The number of 8 KB pages SQL Server had to read from the buffer cache (memory) in order to process and return the results of your query. The more pages that need to be read, the slower your query tends to be.

- Worktables/Workfiles: These are temporary objects that SQL Server creates in tempdb in order to process query results. Although not always bad, they might indicate that SQL Server is doing more work than it needs to (perhaps an index could help?)

- Lob logical reads: The number of pages of large object data (e.g. varchar(max)) that SQL Server has to read from the buffer cache. I take the most cursory glance at this; if I’m reading a high number of lob pages, I want to make sure I actually need that data. If not, I may add an index or move the lobs off to a separate table.

There are more properties in the STATISTICS IO output, but if we can significantly decrease the above three indicators then chances are good that we’ll improve our query performance.

So why are these three indicators so useful?

Tracking performance changes

The main reason I like performance tuning with STATISTICS IO is because it makes it easy to create a baseline for my query performance. When I make changes to the query, it’s then easy to see if my changes helped or hurt the query.

The main metric I use for this is logical reads. Logical reads count pages read from the buffer cache (memory), while physical reads count pages that had to be fetched from disk. Because every page gets loaded from disk into the cache before SQL Server is able to use it, the logical read count covers every page the query touched, whether or not it was already in memory.

This makes logical reads great for tracking performance changes because they clearly tell me how many 8 KB pages in total SQL Server needed to read in order to return my data.

If I add an index, does the total number of pages read go up or down? Let me check my logical read counts and see.

What if I add some additional filtering or restructure my query? I can easily tell if my changes hurt performance by seeing if the total number of logical reads went up or down.

Logical reads allow me to easily track the effectiveness of my tuning tactics.
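The baseline-then-compare workflow described above might look something like the following sketch. The table, column, and index names here are hypothetical (they are not taken from the query that produced the output earlier), and the sketch assumes `OrderID` is the clustered primary key of `dbo.Orders`.

```sql
-- Hypothetical schema: dbo.Orders(OrderID, CustomerID, OrderDate),
-- with OrderID assumed to be the clustered primary key.
SET STATISTICS IO ON;
GO

-- 1. Baseline: run the query and note the logical reads in the Messages tab.
SELECT OrderID, CustomerID, OrderDate
FROM dbo.Orders
WHERE CustomerID = 42;
GO

-- 2. Make one tuning change; here, a nonclustered index intended to cover the query.
CREATE NONCLUSTERED INDEX IX_Orders_CustomerID
    ON dbo.Orders (CustomerID)
    INCLUDE (OrderDate);
GO

-- 3. Rerun the identical query and compare its logical reads to the baseline.
SELECT OrderID, CustomerID, OrderDate
FROM dbo.Orders
WHERE CustomerID = 42;
GO

SET STATISTICS IO OFF;
GO
```

If the index helps, the second run should report fewer logical reads for 'Orders' than the first. If the number stays the same or goes up, the change probably isn't worth keeping.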

The same concept applies to my worktable and lob logical read properties. For the former, any time SQL Server has to write data out to disk (tempdb in this case), performance will be slower.

In the latter case, if SQL Server needs to move around large objects that consist of multiple 8 KB pages each, things will be slow. If I can keep track of how many lob logical reads SQL Server is performing, then I can focus on removing that overhead from my query.
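One way to check whether large objects are adding overhead is to compare a query that returns the lob column with one that leaves it out. This is only a sketch against a hypothetical `dbo.Documents` table; whether the lob logical reads actually drop depends on how the data is stored, since small values in a max-type column can be stored in-row by default.

```sql
-- Hypothetical table:
-- dbo.Documents(DocumentID int, Title nvarchar(200), Body nvarchar(max))
SET STATISTICS IO ON;
GO

-- Returns every column, including the nvarchar(max) Body column.
SELECT *
FROM dbo.Documents
WHERE DocumentID BETWEEN 1 AND 1000;
GO

-- Returns only the columns the caller needs, leaving the lob column out.
SELECT DocumentID, Title
FROM dbo.Documents
WHERE DocumentID BETWEEN 1 AND 1000;
GO

SET STATISTICS IO OFF;
GO
```

Comparing the lob logical reads reported for 'Documents' in each run shows how much of the work comes from the large object column alone.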

Isolating other factors that impact performance

For the same reason logical reads make it easy to track query performance after making changes, using logical reads makes it easy to mute other factors that might affect performance.

For example, I used to think that simply watching how long a query took to run was a good way to gauge my performance tuning. If the total number of seconds it took my query to run decreased, my changes helped improve performance!

This is a potentially deceptive way to measure performance, though: what if the server was getting slammed by other queries during my first run? Elapsed run time on its own isn’t a reliable way to measure performance.

Also, server environment hardware isn’t always the same. I might test a query in one environment and then deploy it to another. My testing on an empty dev box might have been great, but as soon as the query runs in production along with all other queries, it might not perform as well.
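To see the difference between the two measurements, both can be turned on for the same session. The sketch below uses a hypothetical `dbo.Orders` table with an `OrderDate` column and runs the same query twice.

```sql
-- Hypothetical table and column names; adjust to your own schema.
-- Turn on both IO and timing output for the current session.
SET STATISTICS IO, TIME ON;
GO

-- First run.
SELECT COUNT(*)
FROM dbo.Orders
WHERE OrderDate >= '20160101';
GO

-- Second run of the identical query.
SELECT COUNT(*)
FROM dbo.Orders
WHERE OrderDate >= '20160101';
GO

SET STATISTICS IO, TIME OFF;
GO
```

The elapsed time reported in the Messages tab can differ between the two runs depending on what else the server is doing, while the logical reads should generally stay the same as long as the data and the execution plan don't change.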

More than one way to analyze a query

STATISTICS IO is a great place to start your performance tuning process.

It doesn’t mean that you have to stop there though. While being able to track the effects of your tuning changes and isolate other environment variables is important, ultimately you will have to use other means to actually improve performance.

So be sure to look at execution plans, dig into index and table statistics, rewrite the order of your table joins to see if it makes a difference, etc… Just remember, performance always comes back to how much data SQL Server needs to process – reduce that and your queries will surely perform better.
