For tables with more than 500 rows - 20% of tables row count + 500 changes

For tables with less than 500 rows - 500 changes

However, in modern day times, the above formula may not be effective. 10 million row tables is pretty much a norm and not typically considered huge. The threshold of changes for 10 million row table would be 2,000,500 changes, which is a huge number of changes required to trigger auto stat update. This effectively implies, on larger tables one would be running without updated statistics almost all the time as the threshold is too high as the tables get bigger. To address this problem, SQL Server 2016 has a behavior called dynamic statistic threshold, which is enabled by default.

As the table gets bigger, the threshold for statistic update reduces. For tables greater than 25,000 rows dynamic statistics is applicable. For example consider the following table with 1 million rows

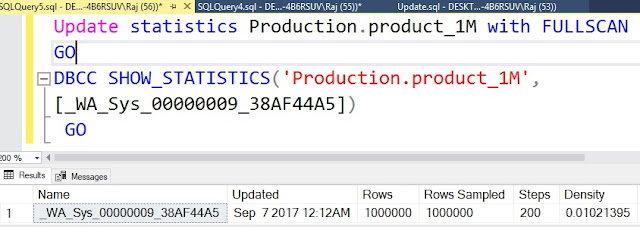

Let’s update statistic before starting the task

Make note the updated stat time (12:12 AM)

Now let’s run the following script to make 100,000 changes (10%)

SET ROWCOUNT 100000

GO

/* SET Rowcount so that we can update just 100K rows */

Update Production.product_1M

SET StandardCost = StandardCost + 5

WHERE Productid % 5 = 0

GO

Please note on older versions of SQL Server, by default, 10% changes wouldn't suffice to trigger auto stat update.

Now let’s check if statistics are updated. A select is query has to be fired on the table to trigger auto statistic update.

Picture referred from the following blog: https://blogs.msdn.microsoft.com/saponsqlserver/2011/09/07/changes-to-automatic-update-statistics-in-sql-server-traceflag-2371/