Most data warehouses have at least a couple of Type 2 Slowly Changing Dimensions. We use them to keep history so we can see what an entity looked like at the time an event occurred. From an ETL standpoint, I think Type 2 SCDs are the most commonly over-complicated and under-optimized design pattern I encounter.

There is a Slowly Changing Dimension Transformation built into SSIS, but most people recommend against using it as it isn’t very efficient. I think many of the people that do use it do so simply because they feel it’s easier than digging in and understanding the operations that need to be done in order to roll your own Type 2 SCD processing.The most common mistake I see in SCD 2 packages, whether using the built-in transformation or creating your own data flow, is that people use OLEDB commands to perform updates one row at a time rather than writing updates to a staging table and performing a set-based update on all rows. If your dimension is small, the performance from row by row updates may be acceptable, but the overhead associated with using a staging table and performing set-based update will probably be negligible. So why not keep a consistent pattern for all type 2 dimensions and require no changes if the dimension grows?

I am here to encourage you: don’t be scared of slowly changing dimensions. Once you learn the design pattern, you will find they aren’t that difficult to understand. And once you have the Biml, creating them is fairly painless.

My Design Pattern

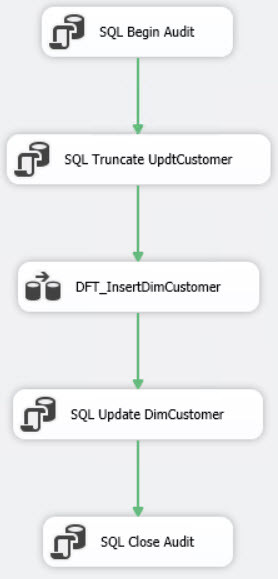

BimlScript allows me to create a reusable design pattern for SSIS that I can employ for each Type 2 dimension that I create. Despite the need to keep history, my Type 2 SCD doesn’t look that much different from my Type 1 SCD. In fact the control flow is exactly the same.

I start with an audit step to log the beginning of package execution. Then I truncate the staging table used to contain my updates. Next I have the Data Flow Task to insert new rows into the dimension table and updates into my update table. Then I run an Execute SQL Task to perform the updates. Finally I log the end of package execution.

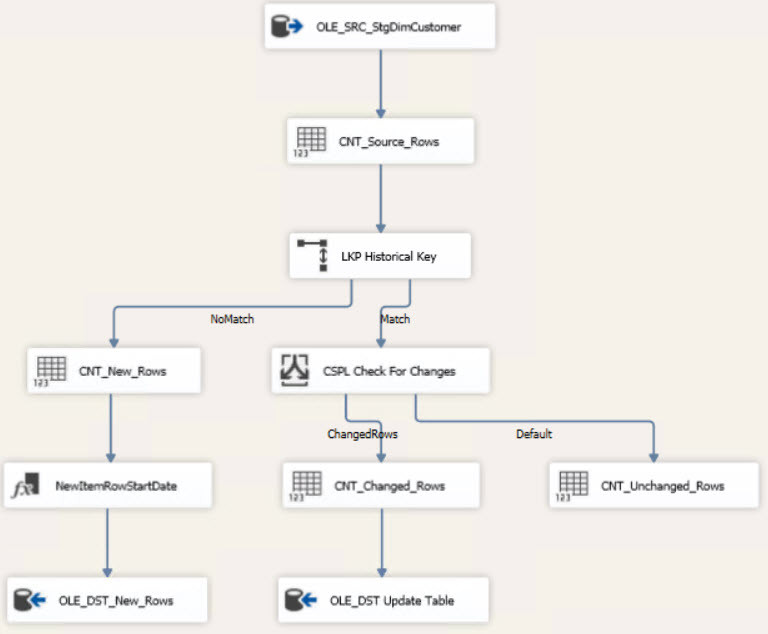

The Data Flow Task looks a like a Type 1 SCD until the end, where I have a Derived Column Transformation before I insert new rows into my dimension table.

As with my Type 1 pattern, the combination and transformation of the data (the business logic) is performed in a view in SQL Server, and the mechanics of capturing the history is performed in SSIS.

The steps in this data flow task are:

- Retrieve data from my source view.

- Count the rows for package logging purposes.

- Perform a lookup to see if the entity already exists in the dimension table (more info on that below).

- If the entity doesn’t exist at all in the dimension table, it goes into the left path where I count the number of rows, add a derived column that sets the row start date to “01/01/1900 00:00:00”, and then insert the row into the dimension table.

- If the entity does exist in the table, I check it for changes.

- If there are changes to the entity, I count the number of rows and then insert the row into an update table.

- Entities with no changes are simply counted for audit purposes.

The Details

Source View with Hashkeys

I’m using product data from the AdventureWorks database for an example. I create a view based upon my staging table that holds the data from the source system. In addition to providing the data values from the source data, my view does the following:

- Adds in the unknown row

- Adds the hashkeys for change detection

- Sets the row start date

CREATE VIEW [dbo].[StgProduct2]

AS

with Productbase as (

SELECT [productid],

[name],

[productnumber],

[makeflag],

[finishedgoodsflag],

,

[safetystocklevel],

[reorderpoint],

[standardcost],

[listprice],

,

[sizeunitmeasurecode],

[weightunitmeasurecode],

[weight],

[daystomanufacture],

[productline],

[class],

[style],

[sellstartdate],

[sellenddate],

[discontinueddate],

1 as RowIsCurrent,

0 as isDeleted

FROM [Staging].[Product]

UNION

Select -1 as productid, 'unknown' as name, null as productnumber, null as makeflag,

null as finishedgoodsflag, null as color, null as safetystocklevel,

null as reorderpoint, null as standardcost, null as listprice, null as size,

null as sizeunitmeasurecode, null as weightunitmeasurecode, null as weight,

null as daystomanufacture, null as productline, null as class, null as style,

null as sellstartdate, null as sellenddate, null as discontinueddate,

1 as RowIsCurrent, 0 as isDeleted

) ,

productdata as

(

Select [productid], [name], [productnumber], [makeflag], [finishedgoodsflag], ,

[safetystocklevel], [reorderpoint], [standardcost], [listprice], ,

[sizeunitmeasurecode], [weightunitmeasurecode], [weight], [daystomanufacture],

[productline], [class], [style], [sellstartdate], [sellenddate], [discontinueddate],

rowiscurrent, isdeleted

, CONVERT(VARBINARY(20), HASHBYTES('MD5', CONCAT([ProductID], ' '))) AS HistoricalHashKey

, CONVERT(VARBINARY(20), HASHBYTES('MD5', CONCAT([name], [productnumber], [makeflag],

[finishedgoodsflag], , [safetystocklevel], [reorderpoint], [standardcost], [listprice],

, [sizeunitmeasurecode], [weightunitmeasurecode], [weight], [daystomanufacture],

[productline], [class], [style], [sellstartdate], [sellenddate], [discontinueddate],

rowiscurrent, isDeleted ))) AS ChangeHashKey

from Productbase sb

)

Select [productid], [name], [productnumber], [makeflag], [finishedgoodsflag], ,

[safetystocklevel], [reorderpoint], [standardcost], [listprice], , [sizeunitmeasurecode],

[weightunitmeasurecode], [weight], [daystomanufacture], [productline], [class], [style],

[sellstartdate], [sellenddate], [discontinueddate], rowiscurrent, isdeleted

,HistoricalHashKey, ChangeHashKey

, CONVERT(VARCHAR(34), HistoricalHashKey, 1) AS HistoricalHashKeyASCII

, CONVERT(VARCHAR(34), ChangeHashKey, 1) AS ChangeHashKeyASCII

, dateadd(MINUTE, -1, current_timestamp) as rowenddate, CURRENT_TIMESTAMP as rowstartdate

from productdata ds

The Change Detection Lookup

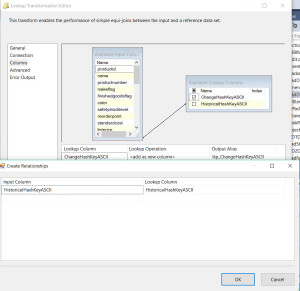

The lookup in my DFT compares the HistoricalHashKeyASCII column from the source view with the varchar version of the HistoricalHashKey from the dimension table and adds the lookup column lkp_ChangeHashKeyASCII to the data set.

Rows that do not match are new rows, as in that product has never been in the dimension table before. Rows that do match have a row in the dimension table and will then be evaluated to see if there are any changes in the values for that product.

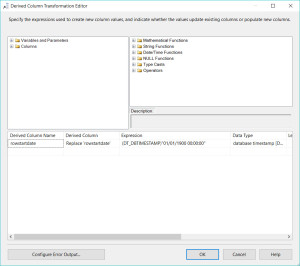

Derived Column for New Rows

The no match output of the lookup are new rows for products that are not in the dimension table. Since this is the first row in the table for that product, we want this row to be effective from the beginning of time until the end of time. The beginning of time in the data warehouse is 01/01/1900. Since I often load the data multiple times per day, I use a date/time field rather than a date. If you only need the precision of a day, you can cut your row end date/time back to just a date. In my pattern, the current row has a null row end date, but you could easily add a derived column to set the end date to 12/31/9999 if you prefer.

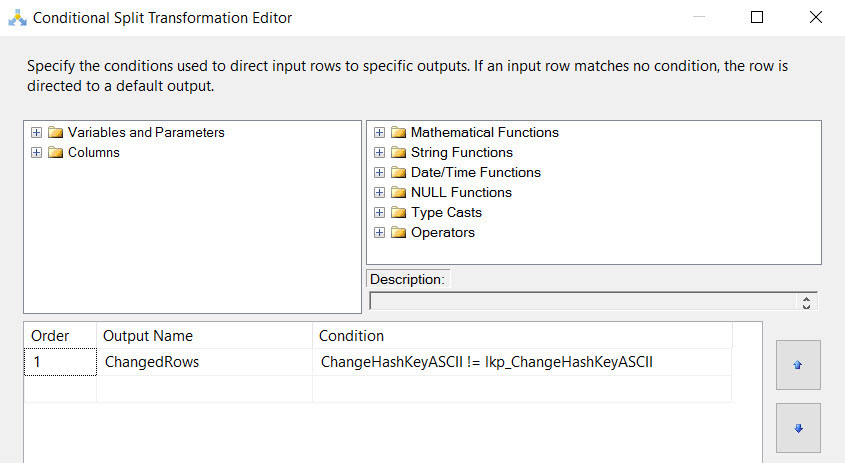

Conditional Split to Check Existing Products For Changes

For existing products, we only want to update the products for which some attribute has changed. We check for changes using a conditional split. When we performed the lookup against the HistoricalHashKeyASCII value, we added the lookup column lkp_ChangeHashKeyASCII from the dimension table.

The match output from the lookup flows into the conditional split. Then we check to see if there is a difference between the change hash from the source view and the change hash in the dimension table that we have added to our data set.

If the change hash values are equal the row hasn’t changed and we can pass it on to get the count of unchanged rows. If the change hash values are not equal, we know some column will have a value to update and we can pass it on to the update staging table.

Update Commands

After the data flow task completes, there is an Execute SQL Task to update the changed rows. There are two commands in the task:

- Set the row end date and row is current flag on all existing rows in the dimension for which there is an update.

- Insert the new rows for the changed products.

UPDATE A

SET RowisCurrent = 0,

A.rowenddate = UA.rowenddate,

A.updatedttm = CURRENT_TIMESTAMP

FROM [Updt].UpdtProduct2 AS UA

JOIN dbo.Product2 AS A

ON UA.historicalhashkey = A.historicalhashkey

WHERE A.rowiscurrent = 1;

INSERT INTO dbo.Product2

([productid]

,[name]

,[productnumber]

,[makeflag]

,[finishedgoodsflag]

,

,[safetystocklevel]

,[reorderpoint]

,[standardcost]

,[listprice]

,

,[sizeunitmeasurecode]

,[weightunitmeasurecode]

,[weight]

,[daystomanufacture]

,[productline]

,[class]

,[style]

,[sellstartdate]

,[sellenddate]

,[discontinueddate]

,[rowiscurrent]

,[isdeleted]

,[HistoricalHashKey]

,[ChangeHashKey]

,[rowenddate]

,[rowstartdate])

SELECT [productid]

,[name]

,[productnumber]

,[makeflag]

,[finishedgoodsflag]

,

,[safetystocklevel]

,[reorderpoint]

,[standardcost]

,[listprice]

,

,[sizeunitmeasurecode]

,[weightunitmeasurecode]

,[weight]

,[daystomanufacture]

,[productline]

,[class]

,[style]

,[sellstartdate]

,[sellenddate]

,[discontinueddate]

,[rowiscurrent]

,[isdeleted]

,[HistoricalHashKey]

,[ChangeHashKey]

,null

,[rowstartdate]

FROM updt.updtProduct2;

The BimlScript

I have a Dim2.Biml file that just contains the design pattern with code blocks that allow me to pass parameters to it for the particular dimension I’m making. I also have a Biml file that contains the project level connection managers. Then I have a Biml file that obtains the package specific parameters and passes them to the Dim2.Biml file.

ProjectConnections.biml

<#@ template language="C#" tier="1" #>

<Connections>

<OleDbConnection Name="AWBIML" ConnectionString ="Data Source=localhost\SQL2014;

Initial Catalog=AWBIML;Integrated Security=SSPI;Provider=SQLNCLI11.1;" CreateInProject="true"/>

<OleDbConnection Name="Audit" ConnectionString ="Data Source=localhost\SQL2014;

Initial Catalog=AWBIML;Integrated Security=SSPI;Provider=SQLNCLI11.1;" CreateInProject="true"/>

</Connections>

The ProjectConnections file doesn’t begin with Biml tags because it gets included in the middle of the CreateDim1.biml file. AWBIML is a local database I created to contain my data mart.

Dim2.biml

This is the file that contains my design pattern.

<#@ template language="C#" tier="2" #>

<#@ property name="PackageName" type="String" #>

<#@ property name="SourceConnection" type="String" #>

<#@ property name="DestinationConnection" type="String" #>

<#@ property name="DestinationSchemaName" type="String" #>

<#@ property name="DestinationTableName" type="String" #>

<#@ property name="DestinationTable" type="String" #>

<#@ property name="DataFlowName" type="String" #>

<#@ property name="DataFlowSourceName" type="String" #>

<#@ property name="DataFlowQuery" type="String" #>

<#@ property name="QueryOutputPathName" type="String" #>

<#@ property name="DestinationName" type="String" #>

<#@ property name="UpdateSchemaName" type="String" #>

<#@ property name="UpdateTableName" type="String" #>

<#@ property name="UpdateConnection" type="String" #>

<#@ property name="UpdateSQLStatement" type="String" #>

<Package Name="<#=PackageName#>" Language="None">

<Parameters>

<Parameter DataType="String" Name="ParentPackageID">00000000-0000-0000-0000-000000000000</Parameter>

</Parameters>

<Variables>

<Variable EvaluateAsExpression="true" DataType="String" IncludeInDebugDump="Exclude" Name="QualifiedTableSchema">"[" + @[User::SchemaName] + "].[" + @[User::TableName] + "]"</Variable>

<Variable DataType="String" IncludeInDebugDump="Exclude" Name="QueryAuditStart">EXECUTE [Audit].[PackageControlStart] @PackageName = ?, @PackageId = ?, @ParentPackageId = ?, @ExecutionId = ?, @StartTime = ?;</Variable>

<Variable DataType="String" IncludeInDebugDump="Exclude" Name="QueryAuditUpdate">EXECUTE [Audit].[PackageControlStop] @PackageId = ?, @ExecutionId = ?, @InsertRowQuantity = ?, @UpdateRowQuantity = ?, @UnchangedRowQuantity=?;</Variable>

<Variable DataType="Int32" IncludeInDebugDump="Include" Name="RowCountChanged">0</Variable>

<Variable DataType="Int32" IncludeInDebugDump="Include" Name="RowCountNew">0</Variable>

<Variable DataType="Int32" IncludeInDebugDump="Include" Name="RowCountSource">0</Variable>

<Variable DataType="Int32" IncludeInDebugDump="Include" Name="RowCountUnchanged">0</Variable>

<Variable DataType="String" IncludeInDebugDump="Exclude" Name="SchemaName"><#=DestinationSchemaName#></Variable>

<Variable DataType="String" IncludeInDebugDump="Exclude" Name="TableName"><#=DestinationTableName#></Variable>

</Variables>

<Tasks>

<Dataflow Name="DFT_Insert<#=DestinationTableName#>">

<Transformations>

<RowCount Name="CNT_Changed_Rows" VariableName="User.RowCountChanged">

<InputPath OutputPathName="CSPL Check For Changes.ChangedRows" />

</RowCount>

<ConditionalSplit Name="CSPL Check For Changes">

<InputPath OutputPathName="LKP Historical Key.Match" />

<OutputPaths>

<OutputPath Name="ChangedRows">

<Expression>ChangeHashKeyASCII != lkp_ChangeHashKeyASCII</Expression>

</OutputPath>

</OutputPaths>

</ConditionalSplit>

<RowCount Name="CNT_New_Rows" VariableName="User.RowCountNew">

<InputPath OutputPathName="LKP Historical Key.NoMatch" />

</RowCount>

<Lookup Name="LKP Historical Key" NoMatchBehavior="RedirectRowsToNoMatchOutput" OleDbConnectionName="<#=DestinationConnection#>">

<DirectInput>SELECT

CONVERT(VARCHAR(34), ChangeHashKey, 1) AS ChangeHashKeyASCII

, CONVERT(VARCHAR(34), HistoricalHashKey, 1) AS HistoricalHashKeyASCII

FROM

<#=DestinationSchemaName#>.<#=DestinationTableName#>

where RowIsCurrent = 1

</DirectInput>

<Parameters>

<Parameter SourceColumn="HistoricalHashKeyASCII" />

</Parameters>

<ParameterizedQuery>select * from (SELECT

CONVERT(VARCHAR(34), ChangeHashKey, 1) AS ChangeHashKeyASCII

, CONVERT(VARCHAR(34), HistoricalHashKey, 1) AS HistoricalHashKeyASCII

FROM

<#=DestinationSchemaName#>.<#=DestinationTableName#>) [refTable]

where [refTable].[HistoricalHashKeyASCII] = ?</ParameterizedQuery>

<InputPath OutputPathName="CNT_Source_Rows.Output" />

<Inputs>

<Column SourceColumn="HistoricalHashKeyASCII" TargetColumn="HistoricalHashKeyASCII" />

</Inputs>

<Outputs>

<Column SourceColumn="ChangeHashKeyASCII" TargetColumn="lkp_ChangeHashKeyASCII" />

</Outputs>

</Lookup>

<DerivedColumns Name="NewItemRowStartDate">

<InputPath OutputPathName="CNT_New_Rows.Output" />

<Columns>

<Column ReplaceExisting="true" Name="RowStartDate" DataType="DateTime">

(DT_DBTIMESTAMP)"01/01/1900 00:00:00"

</Column>

</Columns>

</DerivedColumns>

<OleDbDestination Name="OLE_DST_New_Rows" ConnectionName="<#=DestinationConnection#>">

<InputPath OutputPathName="NewItemRowStartDate.Output" />

<ExternalTableOutput Table="<#=DestinationSchemaName#>.<#=DestinationTableName#>" />

<Columns>

<Column SourceColumn="RowEndDate" IsUsed="false"/>

</Columns>

</OleDbDestination>

<RowCount Name="CNT_Source_Rows" VariableName="User.RowCountSource">

<InputPath OutputPathName="<#=DataFlowSourceName#>.Output" />

</RowCount>

<OleDbSource Name="<#=DataFlowSourceName#>" ConnectionName="<#=SourceConnection#>">

<DirectInput><#=DataFlowQuery#></DirectInput>

</OleDbSource>

<RowCount Name="CNT_Unchanged_Rows" VariableName="User.RowCountUnchanged">

<InputPath OutputPathName="CSPL Check For Changes.Default" />

</RowCount>

<OleDbDestination Name="OLE_DST Update Table" ConnectionName="<#=DestinationConnection#>">

<InputPath OutputPathName="CNT_Changed_Rows.Output" />

<ExternalTableOutput Table="[<#=UpdateSchemaName#>].[<#=UpdateTableName#>]" />

</OleDbDestination>

</Transformations>

<PrecedenceConstraints>

<Inputs>

<Input OutputPathName="SQL Truncate <#=UpdateTableName#>.Output" />

</Inputs>

</PrecedenceConstraints>

</Dataflow>

<ExecuteSQL Name="SQL Begin Audit" ConnectionName="Audit">

<VariableInput VariableName="User.QueryAuditStart" />

<Parameters>

<Parameter Name="0" VariableName="System.PackageName" DataType="String" Length="-1" />

<Parameter Name="1" VariableName="System.PackageID" DataType="Guid" Length="-1" />

<Parameter Name="2" VariableName="ParentPackageID" DataType="Guid" Length="-1" />

<Parameter Name="3" VariableName="System.ServerExecutionID" DataType="Int64" Length="-1" />

<Parameter Name="4" VariableName="System.StartTime" DataType="Date" Length="-1" />

</Parameters>

</ExecuteSQL>

<ExecuteSQL Name="SQL Close Audit" ConnectionName="Audit">

<VariableInput VariableName="User.QueryAuditUpdate" />

<Parameters>

<Parameter Name="0" VariableName="System.PackageID" DataType="Guid" Length="-1" />

<Parameter Name="1" VariableName="System.ServerExecutionID" DataType="Int64" Length="-1" />

<Parameter Name="2" VariableName="User.RowCountNew" DataType="Int32" Length="-1" />

<Parameter Name="3" VariableName="User.RowCountChanged" DataType="Int32" Length="-1" />

<Parameter Name="4" VariableName="User.RowCountUnchanged" DataType="Int32" Length="-1" />

</Parameters>

<PrecedenceConstraints>

<Inputs>

<Input OutputPathName="SQL Update <#=DestinationTableName#>.Output" />

</Inputs>

</PrecedenceConstraints>

</ExecuteSQL>

<ExecuteSQL Name="SQL Truncate <#=UpdateTableName#>" ConnectionName="<#=UpdateConnection#>">

<DirectInput>Truncate Table [<#=UpdateSchemaName#>].[<#=UpdateTableName#>]</DirectInput>

<PrecedenceConstraints>

<Inputs>

<Input OutputPathName="SQL Begin Audit.Output" />

</Inputs>

</PrecedenceConstraints>

</ExecuteSQL>

<ExecuteSQL Name="SQL Update <#=DestinationTableName#>" ConnectionName="<#=DestinationConnection#>">

<DirectInput><#=UpdateSQLStatement#></DirectInput>

<PrecedenceConstraints>

<Inputs>

<Input OutputPathName="DFT_Insert<#=DestinationTableName#>.Output" />

</Inputs>

</PrecedenceConstraints>

</ExecuteSQL>

</Tasks>

</Package>

CreateDim2Packages.Biml

This file simply feeds values into the code nuggets in the Dim2.biml file. In practice, I store these values in a table and change this file to pull the values from a table so when I generate SSIS packages from this file, all my type 2 dimension packages are generated rather than just one.

<#@ template language="C#" hostspecific="true" #>

<#@ import namespace="System.Data" #>

<#@ import namespace="System.Data.SqlClient" #>

<#@ import namespace="System.IO" #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<!--

<#

string PackageName = "LoadDimProduct2";

string SourceConnection = "AWBIML";

string DestinationConnection = "AWBIML";

string DestinationSchemaName = "dbo";

string DestinationTableName = "Product2";

string DestinationTable = "dbo.Product2";

string DataFlowName = "DFT_Product2";

string DataFlowSourceName = "OLE_SRC_StgProduct";

string QueryOutputPathName = "OLE_SRC_StgProduct.Output";

string DestinationName = "OLE_DST_Product2";

string UpdateSchemaName = "Updt";

string UpdateTableName = "UpdtProduct2";

string UpdateConnection = "AWBIML";

string DataFlowQuery = @"SELECT [productid]

,[name]

,[productnumber]

,[makeflag]

,[finishedgoodsflag]

,

,[safetystocklevel]

,[reorderpoint]

,[standardcost]

,[listprice]

,

,[sizeunitmeasurecode]

,[weightunitmeasurecode]

,[weight]

,[daystomanufacture]

,[productline]

,[class]

,[style]

,[sellstartdate]

,[sellenddate]

,[discontinueddate]

,[rowiscurrent]

,[isdeleted]

,[HistoricalHashKey]

,[ChangeHashKey]

,[HistoricalHashKeyASCII]

,[ChangeHashKeyASCII]

,[rowenddate]

,[rowstartdate]

FROM [dbo].[StgProduct2];" ;

string UpdateSQLStatement = @"UPDATE A

SET RowisCurrent = 0,

A.rowenddate = UA.rowenddate,

A.updatedttm = CURRENT_TIMESTAMP

FROM [Updt].UpdtProduct2 AS UA

JOIN dbo.Product2 AS A

ON UA.historicalhashkey = A.historicalhashkey

WHERE A.rowiscurrent = 1;

INSERT INTO dbo.Product2

([productid]

,[name]

,[productnumber]

,[makeflag]

,[finishedgoodsflag]

,

,[safetystocklevel]

,[reorderpoint]

,[standardcost]

,[listprice]

,

,[sizeunitmeasurecode]

,[weightunitmeasurecode]

,[weight]

,[daystomanufacture]

,[productline]

,[class]

,[style]

,[sellstartdate]

,[sellenddate]

,[discontinueddate]

,[rowiscurrent]

,[isdeleted]

,[HistoricalHashKey]

,[ChangeHashKey]

,[rowenddate]

,[rowstartdate])

SELECT [productid]

,[name]

,[productnumber]

,[makeflag]

,[finishedgoodsflag]

,

,[safetystocklevel]

,[reorderpoint]

,[standardcost]

,[listprice]

,

,[sizeunitmeasurecode]

,[weightunitmeasurecode]

,[weight]

,[daystomanufacture]

,[productline]

,[class]

,[style]

,[sellstartdate]

,[sellenddate]

,[discontinueddate]

,[rowiscurrent]

,[isdeleted]

,[HistoricalHashKey]

,[ChangeHashKey]

,null

,[rowstartdate]

FROM updt.updtProduct2;" ;

#>

-->

<#@ include file="ProjectConnection.biml" #>

<Packages>

<#=CallBimlScript("Dim2.biml", PackageName, SourceConnection, DestinationConnection, DestinationSchemaName, DestinationTableName, DestinationTable, DataFlowName, DataFlowSourceName, DataFlowQuery, QueryOutputPathName, DestinationName, UpdateSchemaName, UpdateTableName, UpdateConnection, UpdateSQLStatement)#>

</Packages>

</Biml>

Get the Biml

You can download the Biml and SQL files necessary to use this pattern here. This zip file contains:

- 3 Biml files (ProjectConnections, Dim2, CreateDim2Packages)

- 1 Word doc with a brief explanation of the files and design pattern

- 8 SQL files to make the schemas, tables, views, and stored procedures for the product dimension.

This pattern is for a pure Type 2 SCD. I’ll post again soon with a pattern that handles hybrid Type 2 and Type 6 SCDs.

For more info on Biml, see my other Biml posts or check out BimlScript.com.