(2021-May-17) It took some time to come up with a final title for this blog post, as I tried to capture its several major ideas: an Azure Data Factory (ADF) pipeline, emails with attachments from an Azure Storage account, and turning this pipeline into a template that can be used many times.

The official Microsoft documentation on using templates in ADF development (https://docs.microsoft.com/en-us/azure/data-factory/solution-templates-introduction) states: "Templates are useful when you're new to Data Factory and want to get started quickly. These templates reduce the development time for building data integration projects thereby improving developer productivity."

I also blogged about using templates in Azure Data Factory two years ago:

In this post, I will share my recent process of creating a generic pipeline in ADF (aka a “template”), and at the end I will add some of my thoughts about using pipeline templates in ADF.

User story from the recent project work

I had several different file generation processes involving various incoming and outgoing files. Each process's data transformation also generated its own set of log files. For each process, I had a location (A), a folder in an Azure storage account, for data files and a location (B) for log files.

I wanted to create a generic ADF pipeline that would take locations A and B as parameters and perform the following set of tasks:

- Scan File Locations (A & B) and collect filenames of all the existing files there

- Prepare a message request (Email message details, List of files) and submit it to a Logic App

- Execute the Logic App to send an email with the requested files attached.

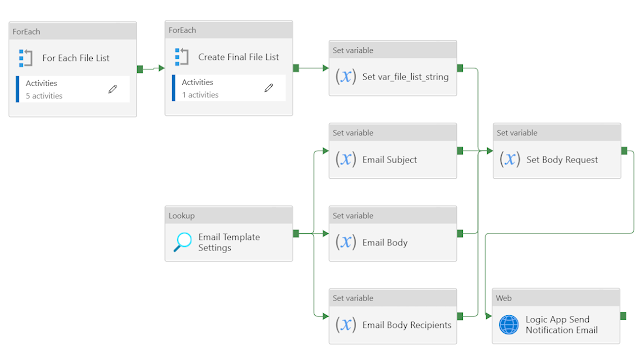

The final version of the generic ADF pipeline looks like this:

To use this generic pipeline in another process, I simply need to execute it and pass a JSON array parameter value listing the Azure storage account folders to scan.
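The post doesn't show the schema of that JSON array, so here is a minimal sketch of what such a parameter value might look like, assuming one object per folder with hypothetical `container` and `folder` properties. The helper only validates and serializes the list; it is an illustration, not part of the original pipeline.

```python
import json
from typing import Dict, List

# Hypothetical shape of the pipeline's JSON array parameter: one object per
# Azure storage account folder to scan. The "container" and "folder"
# property names are assumptions, not taken from the original pipeline.
Location = Dict[str, str]


def build_locations_parameter(locations: List[Location]) -> str:
    """Validate the folder list and serialize it as a JSON array string."""
    for loc in locations:
        missing = {"container", "folder"} - set(loc)
        if missing:
            raise ValueError(f"location {loc} is missing {sorted(missing)}")
    return json.dumps(locations)


# Example: location (A) for data files and location (B) for log files
# of one hypothetical process.
file_locations = [
    {"container": "process01", "folder": "output/data"},
    {"container": "process01", "folder": "output/logs"},
]
parameter_value = build_locations_parameter(file_locations)
print(parameter_value)
```

Adding a third folder for another process is just one more object in the array; the pipeline itself doesn't change.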

(1) Scan File Locations (A & B) and collect filenames of all the existing files

This step is a simple sequence of consecutive activities:

1) Return a list of files from a requested file location

2) Filter the output of activity (1) to keep only files, in case folders also exist there

Steps 4) and 5) are needed to preserve the JSON array output from step (3) and to allow further concatenation of this array in case the initial request contains multiple Azure storage locations. This is done purely because of the current Azure Data Factory limitation that doesn't allow a variable to reference itself.
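To make the data flow explicit, the scan-and-accumulate logic can be sketched in plain Python. This is not ADF code, and it rests on assumptions: that step 1) uses a Get Metadata activity whose `childItems` output is a list of objects with `name` and `type` (`File` or `Folder`), that the filter condition is equivalent to `@equals(item().type, 'File')`, and that each file name is prefixed with its folder path (a hypothetical choice so the attachments can be located later).

```python
from typing import Dict, List, Tuple

# One entry of the Get Metadata activity's "childItems" output:
# {"name": "<item name>", "type": "File" or "Folder"}
ChildItem = Dict[str, str]


def filter_files(folder: str, child_items: List[ChildItem]) -> List[str]:
    """Keep only files, like a Filter activity on item().type == 'File'."""
    return [f"{folder}/{item['name']}" for item in child_items if item["type"] == "File"]


def collect_file_paths(scans: List[Tuple[str, List[ChildItem]]]) -> List[str]:
    """Accumulate file paths across all scanned folders.

    ADF's Set Variable activity can't reference the variable it is setting,
    so the combined array is first built in a temporary variable and then
    copied back. The two assignments in the loop mirror that pattern.
    """
    all_files: List[str] = []
    for folder, child_items in scans:
        temp_files = all_files + filter_files(folder, child_items)  # temporary variable
        all_files = temp_files  # copy back into the main variable
    return all_files


# Hypothetical scan results for a data folder (A) and a log folder (B).
scans = [
    ("process01/output/data", [
        {"name": "sales.csv", "type": "File"},
        {"name": "archive", "type": "Folder"},
    ]),
    ("process01/output/logs", [
        {"name": "run.log", "type": "File"},
    ]),
]
print(collect_file_paths(scans))
# ['process01/output/data/sales.csv', 'process01/output/logs/run.log']
```

In Python the temporary variable is unnecessary, but keeping it shows why the pipeline needs two extra steps per location.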

(2) Prepare a message request (Email message details, List of files) and submit it to a Logic App

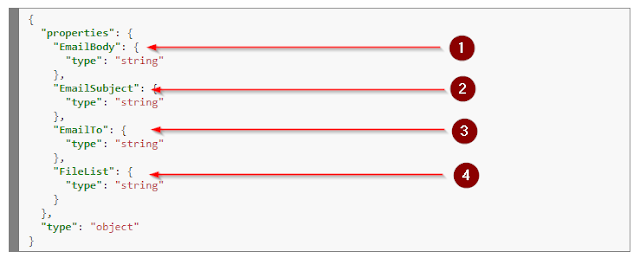

My email-messaging Logic App HTTP request expected four parameters: Email Body, Email Subject, Email To, and File List.

The first three parameters are retrieved from a config file in Azure storage; you could also use other external data repositories for this information.
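In ADF, reading such a file could be done with a Lookup activity. The sketch below only illustrates the lookup itself in Python, under a hypothetical config layout: one entry per process, with assumed key names `emailTo`, `emailSubject`, and `emailBody`. The addresses use the reserved `example.com` domain.

```python
import json
from typing import Dict

# Hypothetical layout of the config file stored in Azure storage: one entry
# per process, holding the three email parameters. Key names are assumptions.
CONFIG_TEXT = """
{
  "process01": {
    "emailTo": "team@example.com",
    "emailSubject": "Process01 output files",
    "emailBody": "Please find the data and log files attached."
  }
}
"""

REQUIRED_KEYS = ("emailTo", "emailSubject", "emailBody")


def load_email_settings(config_text: str, process_name: str) -> Dict[str, str]:
    """Return the Email To, Subject and Body settings for one process."""
    config = json.loads(config_text)
    settings = config[process_name]  # raises KeyError if the process isn't configured
    missing = [key for key in REQUIRED_KEYS if key not in settings]
    if missing:
        raise ValueError(f"{process_name} config is missing {missing}")
    return {key: settings[key] for key in REQUIRED_KEYS}


print(load_email_settings(CONFIG_TEXT, "process01")["emailTo"])  # team@example.com
```

Keeping these values outside the pipeline means a new process only needs a new config entry, not a pipeline change.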

The rest of the steps to prepare a payload for my Logic App are simple:

1) Convert the JSON file list into text form (still a JSON array, but serialized as a string so it can be passed in an HTTP request).

2) Combine the output of step 1) with the Email Body, Email Subject, and Email To elements into one variable for validation and future reference.
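These two steps can be sketched in Python as follows. The payload key names and the Logic App URL are hypothetical placeholders. In ADF, the conversion in step 1) could presumably be done with the `string()` expression function, and the request submitted with a Web activity; the sketch only builds the request and doesn't send it.

```python
import json
import urllib.request
from typing import Dict, List

# Placeholder for the Logic App's HTTP trigger URL (hypothetical).
LOGIC_APP_URL = "https://example.com/logic-app-http-trigger"


def build_payload(settings: Dict[str, str], file_list: List[str]) -> Dict[str, str]:
    """Combine the email settings with the file list serialized as text."""
    return {
        "emailBody": settings["emailBody"],
        "emailSubject": settings["emailSubject"],
        "emailTo": settings["emailTo"],
        # Step 1): the JSON array becomes a string, e.g. '["a.csv", "b.log"]'
        "fileList": json.dumps(file_list),
    }


def build_request(payload: Dict[str, str]) -> urllib.request.Request:
    """Prepare (but don't send) the POST request to the Logic App."""
    return urllib.request.Request(
        LOGIC_APP_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


settings = {
    "emailTo": "team@example.com",
    "emailSubject": "Process01 output files",
    "emailBody": "Please find the data and log files attached.",
}
payload = build_payload(settings, ["output/data/sales.csv", "output/logs/run.log"])
request = build_request(payload)
print(payload["fileList"])  # ["output/data/sales.csv", "output/logs/run.log"]
# urllib.request.urlopen(request) would actually submit it.
```

Because `fileList` is a string inside the JSON body, the receiving Logic App would need to parse it back into an array before iterating over the attachments.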

Further unit and integration testing showed that this generic pipeline could process both a single Azure storage folder with one file and multiple Azure storage locations with tens of files.

Conclusion

In the end, I didn't turn this ADF pipeline into a template, because it was easier for me to create it once and then execute it by referencing it from another pipeline and passing all the necessary parameter values.

What I like about pipeline templates is how they show the ways things can be developed in the Azure Data Factory environment. What I don't like is their explicit requirement to provide all the necessary linked services and datasets before you can even get a copy of the pipeline from the template gallery. I can't just look at a template-based pipeline until I've supplied all its inputs and outputs.

If you don't find my musings boring yet, here is the source code of this generic pipeline.

The decision to turn it into a template is all yours :-)