It may seem obvious, but I’ve heard more than one person suggest to me that statistics on a clustered index just don’t matter. That if the clustered index can satisfy a given query, it’s going to get selected. That just didn’t make any sense to me, but I haven’t seen anyone set up a test that shows how it might work one way or the other. Here you go.

First, I’m going to create a table and load it up with data. I’m intentionally using strings because I don’t want to confuse the ease of management of integers within indexes. I also went for one column that would have a very attractive set of statistics and one that would have a very ugly set. Also, because we’re only dealing with two columns at any given juncture, either a clustered or a non-clustered index would be a covering index. Finally, I didn’t mark the clustered index as unique because I wanted the non-selective clustered index and the highly selective clustered index to both have to deal with that extra bit of processing. Here’s how I set up the table and the data:

CREATE TABLE dbo.IndexTest ( SelectiveString VARCHAR(50), NonSelectiveString VARCHAR(2)) WITH Nums AS (SELECT TOP (100000) ROW_NUMBER() OVER (ORDER BY (SELECT 1 )) AS n FROM master.sys.all_columns AS ac CROSS JOIN master.sys.all_columns AS ac2 ) INSERT INTO dbo.IndexTest (SelectiveString, NonSelectiveString ) SELECT n, CASE WHEN n % 3 = 0 THEN 'ab' WHEN n % 5 = 0 THEN 'ac' WHEN n % 7 = 0 THEN 'bd' ELSE 'aa' END FROM Nums;

From there I created the first two indexes:

CREATE CLUSTERED INDEX ClusteredSelective ON dbo.IndexTest (SelectiveString); CREATE NONCLUSTERED INDEX NonClusteredNonSelective ON dbo.IndexTest (NonSelectiveString);

Then I ran each of these queries, both of which are actually going after fairly selective bits of data, although largely relatively speaking in terms of the second query:

SELECT * FROM dbo.IndexTest AS it WHERE SelectiveString = '2323'; SELECT * FROM dbo.IndexTest AS it WHERE NonSelectiveString = 'aa';

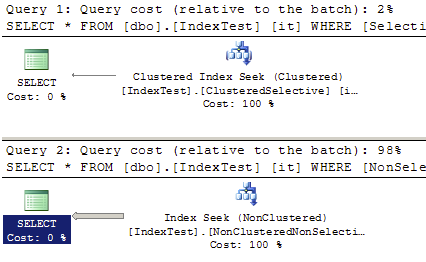

This resulted in the following two execution plans:

As you can see, the clustered index was used in the first query. It makes sense because we’re querying against the clustered key and it’s a very highly selective key. The second query, despite being against a fairly non-selective key, 48,000 rows out of 100,000, used the non-clustered index. If I drop the non-clustered index and use just the cluster for the second query, the number of reads goes from 110 to 299 despite the fact that the same data is being returned. Clearly there’s a huge advantage to how data is ordered. Also, clearly, the fact that the statistics suggest that the cluster can’t immediately satisfy the query makes the optimizer choose other options. But, what happens if we change the indexes like this:

CREATE NONCLUSTERED INDEX NonClusteredSelective ON dbo.IndexTest (SelectiveString); CREATE CLUSTERED INDEX ClusteredNonSelective ON dbo.IndexTest (NonSelectiveString);

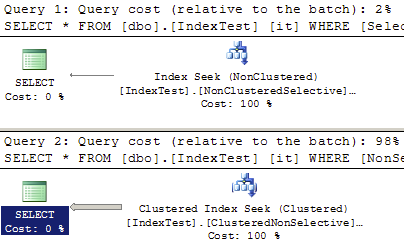

Then, when I rerun my queries, I get these execution plans:

At least to my mind, it’s pretty clear. The statistics for the cluster clearly help the optimizer decide if that index is useful. Yeah, if I drop the nonclustered indexes and then run the queries the clustered index is always used, but that’s not because the cluster is selective or not, it’s because the cluster is the table.

I’m not sure where this concept that the statistics of a clustered do not matter, but, from these tests, it seems that they do.

And remember, in just a couple of weeks I’ll be doing 7 hours of query performance tuning instruction at the PASS Summit. You can sign up, as far as I know, right up to the day of the event. The name of the session is Query Performance Tuning: Start to Finish. I cover gathering metrics, understanding the optimizer, reading execution plans, and tuning queries. It’s a beginner’s level to intermediate course. It should be a lot of fun. Go here to register. I hope to see you there.