Intro

In the last blog I showed how important statistics are for CCI bulk load even on small tables. This time I would like to show the surprising behavior of dynamic SQL, based on DB setting.

Issue

Again, I’m not discovering America here but the impact on CCI load is huge as we again end up with Trickle Insert instead of nice parallel bulk insert.

As I mentioned in the last blog due to memory and tem db limitations we load data in batches. In each batch we build dynamic SQL query which we then execute via SP_EXECUTESQL. We recognized some of the batches are slow. So, we monitored the query and we have learned we have instead generated where condition with value parametrized where condition.

So instead of seeing this:

WHERE DateQuarterNumber = 201801

We see in this in the query:

WHERE DateQuarterNumber = @1

Which of course resulted in the query optimized for totally wrong parameter value with low row number estimate as a result. The plans in following pictures are almost identical. There is nothing wrong with them except insert phase – For first plan – it is a single threaded trickle insert.

We have same impact as in previous blog – optimizer expect low number of rows to insert and switches from parallel bulk load to trickle Insert.

If you get query execution plan like this for multimillion table, you will end up with:

- Single threaded, fully logged insert operation. CLOSED segments instead of COMPRESSED segments. Tuple mover will then later compress those segments.

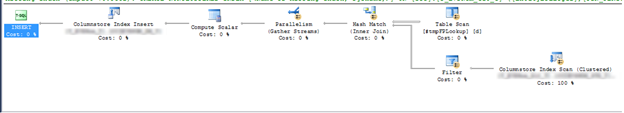

First plan (wrong):

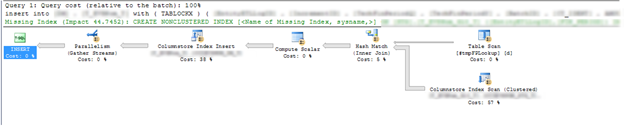

Second Plan (good):

Solution

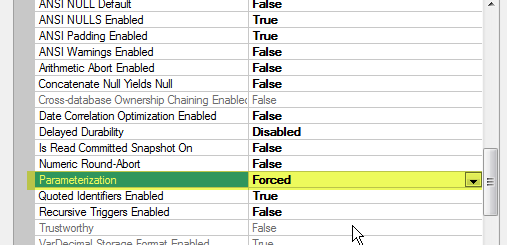

Behavior was driven by following DB setting which we could not influence at that time.

For us the best solution was to use OPTION (RECOMPILE) in each dynamic statement.

Compiling query time (when comparing it with manipulation with large volume of the data) takes negligible percentage of whole duration of query execution. Therefore, we can afford to pay this price for having an optimal plan.