(2022-Jan-10) My short answer to this question is Yes and No. Yes, you can use a Windows self-hosted Azure DevOps agent to deploy a Python function to a Linux-based Azure Function App; and No, you can’t use a Windows self-hosted Azure DevOps agent to build the Python code, since the build has to collect and compile all the dependent Python libraries on a Linux OS platform.

Disclaimer: The purpose of writing this blog post is not to teach anybody, but rather to share my painful journey to automate data project components. This journey is mostly based on my ignorance in many IT areas as well as my lack of DevOps experience, rightful lack of experience since I’m just a data engineer :- )

My story: there is a Python solution that will be used to extract data from an external data provider, and I want to automate its deployment to a Function App in Azure using the already existing Azure DevOps agents hosted on Windows Azure VMs.

Traditional approach

A conventional way to work on this would require following all the instructions from the Microsoft documentation resource that describes how to build continuous delivery of a Function App in Azure – https://docs.microsoft.com/en-us/azure/azure-functions/functions-how-to-azure-devops?tabs=dotnet-core%2Cyaml%2Cpython

There, a simple pipeline to build your Python code looks like this:

pool:
  vmImage: ubuntu-latest
steps:
- task: UsePythonVersion@0
  displayName: "Setting python version to 3.7 as required by functions"
  inputs:
    versionSpec: '3.7'
    architecture: 'x64'
- bash: |
    if [ -f extensions.csproj ]
    then
        dotnet build extensions.csproj --output ./bin
    fi
    pip install --target="./.python_packages/lib/site-packages" -r ./requirements.txt
- task: ArchiveFiles@2
  displayName: "Archive files"
  inputs:
    rootFolderOrFile: "$(System.DefaultWorkingDirectory)"
    includeRootFolder: false
    archiveFile: "$(System.DefaultWorkingDirectory)/build$(Build.BuildId).zip"
- task: PublishBuildArtifacts@1
  inputs:
    PathtoPublish: '$(System.DefaultWorkingDirectory)/build$(Build.BuildId).zip'
    artifactName: 'drop'

And this is how you can deploy this build to a Function App:

trigger:
- main

variables:
  # Azure service connection established during pipeline creation
  azureSubscription: <Name of your Azure subscription>
  appName: <Name of the function app>
  # Agent VM image name
  vmImageName: 'ubuntu-latest'

- task: AzureFunctionApp@1 # Add this at the end of your file
  inputs:
    azureSubscription: <Azure service connection>
    appType: functionAppLinux # default is functionApp
    appName: $(appName)
    package: $(System.ArtifactsDirectory)/**/*.zip
    #Uncomment the next lines to deploy to a deployment slot
    #Note that deployment slots is not supported for Linux Dynamic SKU
    #deployToSlotOrASE: true
    #resourceGroupName: '<Resource Group Name>'
    #slotName: '<Slot name>'

Challenges with the traditional approach

(A) Python installation

Hosting your own DevOps agents comes with a responsibility to install/update all the software that will be used during the work of your build/deployment pipelines. Python is no exception in this case. After locating the latest installation file you have to make sure that you:

- Install it to the following folder on your DevOps machine: $AGENT_TOOLSDIRECTORY\Python\3.9.9\x64; Python version may vary.

- Install Python for All Users.

- Manually create an empty x64.complete file. This will indicate that Python “downloading” step is complete during your DevOps operations.
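The last step can be scripted. Below is a minimal sketch, assuming the tool cache layout is `<tools dir>/Python/<version>/<arch>/` for the installed files and that the marker file sits next to the `<arch>` folder; the function name is hypothetical and the layout should be checked against your own agent.

```python
from pathlib import Path


def mark_python_tool_complete(tools_dir: str, version: str, arch: str = "x64") -> Path:
    """Create the empty <arch>.complete marker for an installed Python.

    Assumed layout (not verified against every agent version):
      <tools_dir>/Python/<version>/<arch>/          installed files
      <tools_dir>/Python/<version>/<arch>.complete  marker file
    """
    version_dir = Path(tools_dir) / "Python" / version
    install_dir = version_dir / arch
    if not install_dir.is_dir():
        # Refuse to mark a version that has not been installed yet.
        raise FileNotFoundError(f"Python is not installed in {install_dir}")
    marker = version_dir / f"{arch}.complete"
    marker.touch(exist_ok=True)  # empty file; only its presence matters
    return marker
```

On the agent VM this could be called as `mark_python_tool_complete(os.environ["AGENT_TOOLSDIRECTORY"], "3.9.9")`, with the version matching whatever you installed.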

(B) Python bash commands

The Bash command from the Microsoft documentation needs to be converted to a regular command that can be executed on your Windows DevOps agent. So in my case I changed the bash commands to Command-Line tasks: https://docs.microsoft.com/en-us/azure/devops/pipelines/tasks/utility/command-line?view=azure-devops&tabs=yaml

From this

- bash: |
    if [ -f extensions.csproj ]
    then
        dotnet build extensions.csproj --output ./bin
    fi
    pip install --target="./.python_packages/lib/site-packages" -r ./requirements.txt

To this

- task: CmdLine@2
  displayName: "Updating Python pip"
  inputs:
    script: 'python.exe -m pip install --upgrade pip'
    workingDirectory: $(System.DefaultWorkingDirectory)/fapp
- task: CmdLine@2
  displayName: "Installing Python libraries"
  inputs:
    script: 'python.exe -m pip install --target="./.python_packages/lib/site-packages" -r requirements.txt'
    workingDirectory: $(System.DefaultWorkingDirectory)/fapp

(C) Python libraries and firewall settings

Changing Bash commands to CmdLine@2 tasks was only a part of the work to enable the installation of Python libraries. My self-hosted DevOps agent VM was behind a corporate firewall. So, make sure to allow the following hostnames in your firewall, so that your agent can access the metadata and binaries of the Python libraries:

- pypi.python.org

- pypi.org

- pythonhosted.org

- files.pythonhosted.org

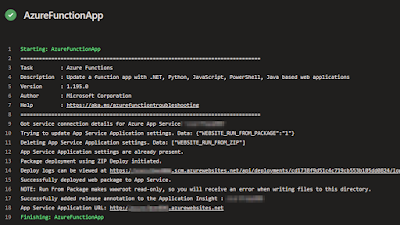
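To confirm from the agent VM that the firewall change took effect, something like the following could be run. It is only a sketch: it opens a plain TCP connection on port 443 (my assumption for what pip needs), so it will not detect a proxy that accepts the connection and then blocks the HTTPS traffic. The `connect` parameter is a hypothetical hook that makes the function testable without a network.

```python
import socket
from typing import Callable, Dict, Iterable

# Hostnames from the list above.
PYPI_HOSTS = (
    "pypi.python.org",
    "pypi.org",
    "pythonhosted.org",
    "files.pythonhosted.org",
)


def check_hosts(
    hosts: Iterable[str] = PYPI_HOSTS,
    port: int = 443,
    timeout: float = 5.0,
    connect: Callable = socket.create_connection,
) -> Dict[str, bool]:
    """Return {host: True/False} depending on whether a TCP connection opens."""
    results = {}
    for host in hosts:
        try:
            conn = connect((host, port), timeout=timeout)
            conn.close()
            results[host] = True
        except OSError:
            # Covers DNS failures, refused connections and timeouts.
            results[host] = False
    return results
```

Calling `check_hosts()` with no arguments tries all four hostnames and returns a dictionary you can print in a pipeline step.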

(D) Deployment worked well, however deployed Azure Function had some errors

My task (AzureFunctionApp@1) to deploy Python solution worked well and I was able to see the list of Azure Functions in the portal. However, the actual code in Azure showed a very generic error message: “Exception: ModuleNotFoundError: No module named 'module_name'”.

Microsoft anticipated that this type of error would appear frequently and created a separate documentation article to troubleshoot this Python error in Azure Functions (https://docs.microsoft.com/en-us/azure/azure-functions/recover-python-functions?tabs=vscode), which lists these possible causes:

- The package can't be found

- The package isn't resolved with proper Linux wheel

- The package is incompatible with the Python interpreter version

- The package conflicts with other packages

- The package only supports Windows or macOS platforms

After validating the actual deployed files, I stopped at the “package isn't resolved with proper Linux wheel” issue: one of the dependent Python library wheel files had the “cp39-cp39-win_amd64” tag value when the “cp39-cp39-linux_x86_64” value was expected, since Python functions run only on Linux in Azure. The reason I had “win_amd64” in my deployed package was that all the dependent Python libraries were collected and compiled on the Windows self-hosted Azure DevOps agent. So, I needed to find a way to make the package look like Linux before running the deployment task.
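This kind of check on the packaged files can be automated. Here is a minimal sketch, assuming the packages were installed with `pip install --target`, so that each one leaves a `*.dist-info` folder containing a `WHEEL` metadata file with `Tag:` lines. The function names are hypothetical, and the rule "platform is `any` or contains `linux`" is a simplification of mine.

```python
from pathlib import Path
from typing import Dict, List


def platform_of(tag: str) -> str:
    """Return the platform part of a tag such as 'cp39-cp39-win_amd64'."""
    return tag.strip().split("-", 2)[2]


def find_non_linux_wheels(site_packages: str) -> Dict[str, List[str]]:
    """Map each *.dist-info folder to the tags that would not load on Linux."""
    problems: Dict[str, List[str]] = {}
    for wheel_file in sorted(Path(site_packages).glob("*.dist-info/WHEEL")):
        for line in wheel_file.read_text(encoding="utf-8").splitlines():
            if not line.startswith("Tag:"):
                continue
            tag = line.split(":", 1)[1].strip()
            platform = platform_of(tag)
            # 'any' means pure Python; anything else must be a Linux platform.
            if platform != "any" and "linux" not in platform:
                problems.setdefault(wheel_file.parent.name, []).append(tag)
    return problems
```

Run against `./.python_packages/lib/site-packages` before the archive step, an empty result would mean no Windows-only wheels slipped into the package.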

My initial idea was to create a script and replace all the wheel files’ tags with the “cp39-cp39-linux_x86_64” value, which was a bad idea. Then I thought to use the “pip install --target” parameter to specify that I want to collect and compile all the dependent Python libraries for the Linux platform on my Windows self-hosted VM with the Azure DevOps agent. This one failed too, since I received even more error messages while the pip install command was running.

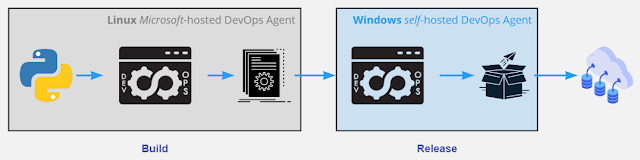

So, I was stuck with a choice: either provision a new Azure DevOps agent on a Linux VM and run both my build and deployment tasks for the Python Function App there, or come up with a less complicated solution where I could still use my existing Windows self-hosted agents. This is how my story continued…

Non-traditional approach, to me

- I didn’t want to provision another Azure Linux VM to host DevOps agent just for the Python Function Code deployment (cost, software configuration and firewall settings).

- Also, I wanted to use the existing Windows self-hosted Azure DevOps agent that is already used for other project artifacts deployment.

So I moved only the build to a Microsoft-hosted Linux agent and kept the deployment on the Windows self-hosted agent. The build pipeline:

trigger:
  branches:
    include:
    - main
  paths:
    include:
    - fapp/*
    exclude:
    - fapp/README.md

variables:
  # Agent VM image name
  vmImageName: ubuntu-latest

stages:
- stage: Build
  displayName: Build stage
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: CmdLine@2
      displayName: "Updating Python pip"
      inputs:
        script: 'pip install --upgrade pip'
        workingDirectory: $(System.DefaultWorkingDirectory)/fapp
    - task: CmdLine@2
      displayName: "Installing Python libraries"
      inputs:
        script: 'pip install --target="./.python_packages/lib/site-packages" -r requirements.txt'
        workingDirectory: $(System.DefaultWorkingDirectory)/fapp
    - task: ArchiveFiles@2
      displayName: "Archive files"
      inputs:
        rootFolderOrFile: "$(System.DefaultWorkingDirectory)/fapp"
        includeRootFolder: false
        archiveFile: "$(System.DefaultWorkingDirectory)/build$(Build.BuildId).zip"
    - task: PublishBuildArtifacts@1
      inputs:
        PathtoPublish: '$(System.DefaultWorkingDirectory)/build$(Build.BuildId).zip'
        artifactName: Functions

Challenges with the non-conventional approach

(A) Python version is important

I didn't include the UsePythonVersion@0 task in my initial YAML code, and that proved to be wrong, since I got the old error message again: “Exception: ModuleNotFoundError: No module named 'module_name'”. After further investigation, the Python libraries had the Linux tag value in their wheel files; however, the Python version was slightly off. They were deployed as Python 3.8 builds, whereas my target Azure Function App was set to Python 3.9.

After adding this YAML task, I got past the "No module named" error message:

- task: UsePythonVersion@0
  displayName: "Setting python version to 3.9 as required by functions"
  inputs:
    versionSpec: '3.9'
    architecture: 'x64'
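The mismatch is visible in the first part of a wheel tag: `cp38` means CPython 3.8 and `cp39` means CPython 3.9. A small sketch of that comparison follows; the function name is hypothetical, and it deliberately ignores `abi3` wheels and non-CPython interpreters.

```python
from typing import Tuple


def tag_supports_python(tag: str, target: Tuple[int, int]) -> bool:
    """Check the interpreter part of a wheel tag against a target version.

    Only two simple cases are handled: 'cpXY' must equal the target exactly,
    and 'pyX' / 'pyXY' is accepted when the major version matches.
    """
    python_tag = tag.split("-", 1)[0]
    major, minor = target
    if python_tag.startswith("cp"):
        return python_tag == f"cp{major}{minor}"
    if python_tag.startswith("py"):
        return python_tag[2:3] == str(major)
    return False


# A 3.8 build does not match a Function App set to Python 3.9.
print(tag_supports_python("cp38-cp38-manylinux2014_x86_64", (3, 9)))  # False
print(tag_supports_python("cp39-cp39-manylinux2014_x86_64", (3, 9)))  # True
```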

(B) Import Error: /lib/x86_64-linux-gnu/libm.so.6: version `GLIBC_2.29' not found

The next attempt to build and deploy my code was successful; however, the execution of my Python Function App failed with another error message: "ImportError: /lib/x86_64-linux-gnu/libm.so.6: version `GLIBC_2.29' not found".

Thankfully, I wasn't the only one who had experienced the same problem in the past (https://issueexplorer.com/issue/Azure/azure-functions-python-worker/818), and I was able to resolve this issue by replacing "vmImageName: ubuntu-latest" with "vmImageName: 'ubuntu-18.04'" in my YAML code, and this helped me a lot!

variables:
  # Agent VM image name
  vmImageName: 'ubuntu-18.04'
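The error means that a library compiled on the build agent was linked against a newer glibc than the one available where the function runs. A build step could guard against that with a check along these lines. This is only a sketch: the function names are hypothetical, and the glibc version of the Azure Functions runtime is something you would have to look up yourself and pass in.

```python
import platform
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '2.31' into (2, 31) so that versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def build_glibc_is_safe(runtime_glibc: str, build_glibc: Optional[str] = None) -> bool:
    """Return True when the build machine's glibc is not newer than the runtime's.

    build_glibc defaults to what platform.libc_ver() reports for the running
    interpreter; on non-glibc systems that is an empty string and the check
    returns False.
    """
    if build_glibc is None:
        build_glibc = platform.libc_ver()[1]
    if not build_glibc:
        return False
    return parse_version(build_glibc) <= parse_version(runtime_glibc)
```

For example, `build_glibc_is_safe("2.27", "2.31")` returns `False`, which is the situation where a binary built against 2.31 may ask for symbols the runtime does not have.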

(C) Build and Release pipelines are separated

Having to maintain and update the two DevOps pipelines (build and release) in two separate places is a very minor issue; the rest is easy and very familiar.

Conclusion

As a conclusion to this blog post, I was able to make Linux and Windows even friendlier in my mind by creating this CI/CD pipeline for my Python Function App. Yes, I can deploy the Python Function App to Azure using Windows self-hosted agents, but only if the build is made on a Linux agent.

Photo by olia danilevich from Pexels