As part of a project I’m currently involved with, we’re migrating a database to SQL Server 2008 and performing some internal updates to the schema and data. Once the database portion of the upgrade is complete, we’d like to update all database statistics before continuing.

As part of a project I’m currently involved with, we’re migrating a database to SQL Server 2008 and performing some internal updates to the schema and data. Once the database portion of the upgrade is complete, we’d like to update all database statistics before continuing.

On a large database, updating all statistics with a full scan can take a looooong time. It takes that much longer when you’re waiting for it to finish. To help expedite the process, I started looking for a way to divide the work into parallel threads. The quick and dirty solution would be to use SQL to generate an update statistics statement for each of my tables, manually split that out into separate scripts and run each script in a separate session. Bo-ring.

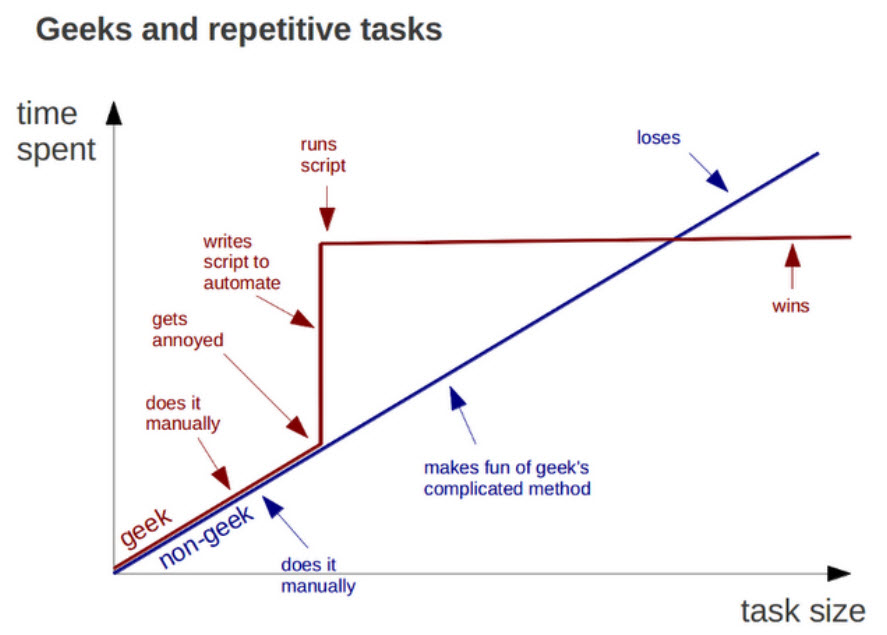

That approach is fine if you only ever want to do this once and you enjoy spending your time manually performing such mundane tasks. Me on the other hand, I’d prefer to spend several hours designing, scripting, and refining an automated method. Even if I only ever get to use it once, it’s still time well spent in my book.

Ideally, I wanted

- the ability to run this against any database on any instance,

- minimal footprint; I don’t want to have to create jobs or objects, etc on a target server for this to work,

- the number of threads should be dynamic; a server with a lot of horsepower will be able to run more concurrent threads than some dinky dev server.

So I started thinking about tools I could use. As I’ve made clear many times in the past, I’m not a developer. I’m sure if I were, I’d have a number of weapons in my arsenal. I, however, am a lowly DBA, so I need to keep things simple. Some ideas I batted around:

- Powershell

- Powershell

- …Powershell!

I’m pretty sure you can see where I’m going with this.

My general approach was still to use SQL to script out the update stats commands, write the commands out to n separate .sql files (n being the number of threads) and then execute all of the scripts concurrently. Again, keeping it simple.

Distributing the workload

The first task was to generate the update stats statements and divide them into groups. I used the ROW_NUMBER() command with a modulus operator to generate group numbers.

declare @p_threads smallint

SET @p_threads = 4

select 'UPDATE STATISTICS '+quotename(s.name, '[')+'.'+quotename(o.name, '[')+' WITH FULLSCAN;' as sqlstmt , ((ROW_NUMBER() OVER (order by s.name, o.name )) % @p_threads) as threadnum

FROM

sys.objects o

join sys.schemas s on o.schema_id = s.schema_id and o.type in ('U', 'V')

group by s.name, o.name

order by threadnum, o.name I really could have stopped there. That was a perfectly good solution. There was just one teensy detail that bothered me. Ideally, the workload should be evenly distributed among all of the threads. I shouldn't have one thread that finished in a minute and while the rest chugged along for another 2 hours. This query did nothing to achieve any sort of balance.

So I started trying different methods. (Warning: I spent way too much time on this part. Feel free to skim.) I tried using the reserved field in sysindexes to spread out the load by size. Then I decided that that wasn't perfect because there might be a big table with only a couple stats on it, but a somewhat smaller table with a whole bunch of stats will, overall, take longer to process.

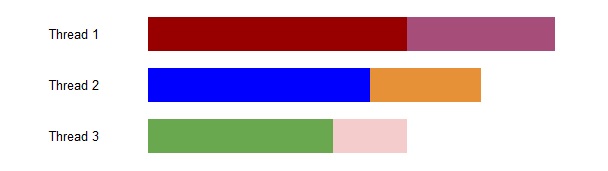

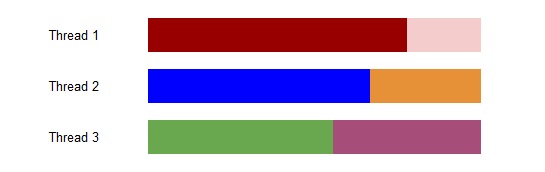

Taking this into consideration, I then switched to using row counts multiplied by the number of stats on the table. I sorted them in descending order based on this new "size" measurement, and striped them across the groups. 0,1,2,3,0,1,2,3, etc. But then I got to thinking that the first few threads will always have the most work to do, because I was distributing work like this, picture each block as a table:

What I really should be doing is striping back and forth, like so:

Yeah, like I said, I spent a lot of time on this. But here's what I ended up with, incorporated into Powershell:

##### Generate update stats statements #####

$connection = new-object system.data.sqlclient.sqlconnection( `

"Data Source=$p_instance;Initial Catalog=$p_database;User Id=$p_userid; Password=$p_passwd;");

$connection.Open()

$cmd = $connection.CreateCommand()

$query = ";with statsCTE as (

select 'UPDATE STATISTICS '+quotename(s.name, '[')+'.'+quotename(o.name,'[')+' WITH FULLSCAN;' as sqlstmt

, (ROW_NUMBER() OVER (order by (max(i.rowcnt)*count(i.id)) desc)-1) % $p_threads as asc_threadnum

, ($p_threads - 1) - ((ROW_NUMBER() OVER (order by (max(i.rowcnt)*count(i.id)) desc) -1 ) % $p_threads) as desc_threadnum

, ((ROW_NUMBER() OVER (order by (max(i.rowcnt)*count(i.id)) desc)-1) / $p_threads) %2 as odd_even

from sysindexes i

join sys.objects o on o.object_id = i.id and o.type in ('U', 'V')

join sys.schemas s on s.schema_id = o.schema_id

group by s.name, o.name

)

select s.sqlstmt

,case(s.odd_even)

when 0 then s.asc_threadnum

when 1 then s.desc_threadnum

end as threadnum

from statsCTE s

order by threadnum, s.sqlstmt"

$cmd.CommandText = $query

$reader = $cmd.ExecuteReader() Creating the sql files

Over-engineer much? Now that that was finally settled, I could move on to writing those statements to their respective files. Just in case I wanted to run this job against multiple databases, I used the instance and database name in each of the sql filenames, along with the thread number, i.e. MyInstance_MyDatabase_updstats_1.sql. For each record I read in, I check to see if the script file it belongs to exists. If it does, I append the current command. If not, I create it and writing the current command.

##### Write commands to script files #####

$jobname = "$instance"+"_"+"$p_database"+"_updstats"

$outfile = "$pwd\$jobname"+".log"

$ofile = New-Item -type file $outfile -force

add-content $outfile "$(get-date) : Building SQL Scripts"

while($reader.Read()) {

$sqlstmt = $reader['sqlstmt']

$threadnum = $reader['threadnum']

$statsfile = $jobname+"_"+$threadnum+".sql"

if (Test-Path $pwd\$statsfile)

{

add-content $pwd\$statsfile $sqlstmt

}

else

{

$file = New-Item -type file $pwd\$statsfile

add-content $file "SET NOCOUNT ON;"

add-content $file $sqlstmt

}

} Executing the threads

Now I just needed a mechanism to actually execute all of these scripts. I didn’t know exactly how to do it in Powershell, but I was pretty darn sure it could be done. I commenced to Googling. What I was looking for was something akin to using “nohup some_command &” in UNIX. I found that in the Start-Job command. Start-Job starts a background Powershell job on the local server. I can use it to kick off another process (like a block of update stats commands) and immediately continue with my next task (another block of update stats commands). Perfect.

##### Now run the scripts #####

$files = Get-ChildItem $pwd -filter "$jobname*.sql"

foreach( $file in $files)

{

$file = "$pwd\$file"

Start-Job -filepath "$pwd\update-stats.ps1" -ArgumentList @($p_instance, $p_database, $p_userid, $p_passwd, $file, $outfile) -name $jobname

} Here’s where my lack of Powershell expertise comes in. No matter what I tried, I could not get it all to work from that single Start-Job command. The best I could do was have Start-Job execute another Powershell script that, in turn, runs the sql script. Not a big deal, it allowed me to add in some logging statements. That update-stats.ps1 script:

# update-stats.ps1

param(

[string]$p_instance=$null,

[string]$p_database=$null,

[string]$p_userid=$null,

[string]$p_passwd=$null,

[string]$p_file=$null,

[string]$p_output=$null

)

add-content $p_output "$(get-date) : $p_file starting."

sqlcmd -S $p_instance -U $p_userid -P $p_passwd -d $p_database -i $p_file #-o $outfile

add-content $p_output "$(get-date) : $p_file complete. Removing SQL file. "

remove-item $p_file -force Putting it all together

Ok. I was pretty sure I had everything I needed. My final product:

# do-UpdateStats.ps1

# usage: ./do-UpdateStats.ps1 <Instance name> <Database name> <Login> <Password> <Threads>

param(

[string]$p_instance=$null,

[string]$p_database=$null,

[string]$p_userid=$null,

[string]$p_passwd=$null,

[int]$p_threads=$null

)

[System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.SMO') | out-null

[System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.ConnectionInfo') | out-null

##### Separate out the instance name if not the default instance #####

$inst = $p_instance.Split("\")

if ($inst.Length -eq 1)

{

$instance = $inst[0]

}

else

{

$instance = $inst[1]

}

##### Generate update stats statements #####

$connection = new-object system.data.sqlclient.sqlconnection( `

"Data Source=$p_instance;Initial Catalog=$p_database;User Id=$p_userid; Password=$p_passwd;");

$connection.Open()

$cmd = $connection.CreateCommand()

$query = ";with statsCTE as (

select 'UPDATE STATISTICS '+quotename(s.name, '[')+'.'+quotename(o.name,'[')+' WITH FULLSCAN;' as sqlstmt

, (ROW_NUMBER() OVER (order by (max(i.rowcnt)*count(i.id)) desc)-1) % $p_threads as asc_threadnum

, ($p_threads - 1) - ((ROW_NUMBER() OVER (order by (max(i.rowcnt)*count(i.id)) desc) -1 ) % $p_threads) as desc_threadnum

, ((ROW_NUMBER() OVER (order by (max(i.rowcnt)*count(i.id)) desc)-1) / $p_threads) %2 as odd_even

from sysindexes i

join sys.objects o on o.object_id = i.id and o.type in ('U', 'V')

join sys.schemas s on s.schema_id = o.schema_id

group by s.name, o.name

)

select s.sqlstmt

,case(s.odd_even)

when 0 then s.asc_threadnum

when 1 then s.desc_threadnum

end as threadnum

from statsCTE s

order by threadnum, s.sqlstmt"

$cmd.CommandText = $query

$reader = $cmd.ExecuteReader()

##### Write commands to script files #####

$jobname = "$instance"+"_"+"$p_database"+"_updstats"

$outfile = "$pwd\$jobname"+".log"

$ofile = New-Item -type file $outfile -force

add-content $outfile "$(get-date) : Building SQL Scripts"

while($reader.Read()) {

$sqlstmt = $reader['sqlstmt']

$threadnum = $reader['threadnum']

$statsfile = $jobname+"_"+$threadnum+".sql"

if (Test-Path $pwd\$statsfile)

{

add-content $pwd\$statsfile $sqlstmt

}

else

{

$file = New-Item -type file $pwd\$statsfile

add-content $file "SET NOCOUNT ON;"

add-content $file $sqlstmt

}

}

##### Now run the scripts #####

$files = Get-ChildItem $pwd -filter "$jobname*.sql"

foreach( $file in $files)

{

$file = "$pwd\$file"

Start-Job -filepath "$pwd\update-stats.ps1" -ArgumentList @($p_instance, $p_database, $p_userid, $p_passwd, $file, $outfile) -name $jobname

} Minor snag

I tested running it against my project database. Using 8 threads I was able to take a several hour job and complete it in under 90 minutes. It ran like a champ. Except for one thing: I couldn’t schedule it. My threads would start and immediately die. You see, Start-Job isn’t quite like “nohup some_command &”. With nohup, that some_command will continue to run even if your session ends. With Start-Job, your background jobs end when the calling session ends. So my scheduled job would fire off all the background jobs and then quit, thus terminating the background jobs.

The answer: Wait-Job. I’d given each of those jobs a name that started with MyServer_MyDatabase_updstats. Wait-Job would wait for each of those jobs to finish before ending the calling script.

Wait-Job -name $jobname

Geekdom realized

After all that, I believe I have lived up to the Geek standard.