As I mentioned in my column in June, one of the many lessons I learned at the first-ever SQL Saturday event hosted by the Rochester chapter of the Professional Association for SQL Server (PASS) was that data mining is perhaps the least understood feature of Microsoft’s industry-leading database server software. It’s also one of the most useful tools in the SQL Server suite, however, so it’s about time this underutilized feature got some more press. It is so little known and so little documented that my series of six blog posts beginning with An Informal Compendium of SSAS Errors, Part 1: Introduction may be the only centralized source of information on the planet for some of the errors we encounter in SQL Server Analysis Services (SSAS), particularly in its data mining language, known as Data Mining Extensions (DMX). Attending the SQL Saturday meeting merely reinforced my impression that there is a crying need for publicity for the feature, even if it has to come from an amateur like myself. As I’ve mentioned before, I have not yet worked in the field as a DBA, despite having a Microsoft Certified Database Administrator (MCDBA) certification in SQL Server 2000 and all three MCITP certifications in SQL Server 2008 R2. I would like to specialize in data mining, but that doesn’t mean I’m a guru by any means; there are certainly DBAs working in the field who have more experience with this topic than I do. As a disclaimer to this entire series of tutorials, I encourage you to listen to them instead – if they can be found – if anything I say conflicts with their advice. I wouldn’t dare write a series of self-tutorials like this if we weren’t dealing with such a paucity of information on the data mining tools, despite the fact that they are so incredibly useful.
As a further illustration, at the SQL Saturday I encountered a lot of interest in SSAS, but found myself having to explain to people who worked with it on a daily basis just what the data mining tools did and the kind of problems they could solve. That kind of disjunction requires a response of some kind, even if it comes from an amateur. Hopefully, I will kill two birds with one stone by familiarizing myself more with SQL Server data mining as I write this series, by forcing myself to think about it more clearly. If I labeled a “Stairway” series of tutorials, as other blogs on more well-known SQL Server topics are titled, then it would have to come with the explicit disclaimer that I will be climbing this stairway myself as I write the series. I may trip as I climb the rungs, but even my inevitable missteps will provide valuable lessons to those readers who walk along with me.

Many tutorials on a wide variety of computing topics, particularly those provided by large corporations, tend to be cluttered with a lot of restatements of the obvious. Because data mining is a brain-bending topic to begin with, I’m going to skip a lot of the basics you’d expect to find in an article like this. I assume the readers are working programmers or DBAs, many of whom have a lot more experience than I do, so I expect that everyone can do without the usual screenshots, code, etc. on mundane, self-evident tasks, like creating an SSAS project in Business Intelligence Development Studio (BIDS, for SQL Server 2005, 2008 and 2008 R2) or SQL Server Data Tools (SSDT, for the latest version of SQL Server). Virtually all of the screenshots I will post will come from SSDT.

At this point you will obviously also need to select a data source, which isn’t as straightforward as it may seem. I used to assume that cubes would be preferred to directly accessing relational sources, since MDX and DMX both operate on mainly static data within SSAS, but I recently discovered that this isn’t necessarily the case. Jamie MacLennan, one of the key members of Microsoft’s Data Mining team until recently, advised in this post at the company’s Data Mining Forum to use cubes only when dealing with data that Online Analytical Processing (OLAP) is good at handling. For example, cubes might be a wise choice when you want to mine additive measures, but not for data without aggregates. Of course, I imagine it is wise to exercise common sense and not try to load a couple of million rows from an OLTP server during peak usage time simply to run some data mining analysis that could wait till later, or be loaded into a cube incrementally by SQL Server Integration Services (SSIS). I have yet to hear of this being tested, but it might also be helpful to use some of the exciting new windowing functions in SQL Server 2012 to perform some of the analytics on the relational side, before importing the data to a DSV for mining. As Itzik Ben-Gan demonstrates in his excellent book Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions, when smartly used these functions can be highly efficient at performing certain operations which once voraciously sucked server resources (like one of these nasties that devoured hapless black-and-white swimmers in Attack of the Giant Leeches). We needn’t be that terrified of calculating a moving average on a relational system anymore.
Given that the Time Series algorithm makes use of moving averages and other algorithms also depend internally on other calculations that can now be performed more efficiently on the relational side than they used to be, the door is open to creative combinations of both windowing functions and data mining through SSAS. In cases like these, it might be more appropriate to use a relational source in your DSV than it once was.
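To make the moving average idea concrete, here is a minimal Python sketch of the calculation itself. It is only an illustration of the arithmetic, not how SSAS or the Time Series algorithm computes anything internally; the function name and the sample numbers are made up for the example. On the relational side, the rough equivalent would be an AVG aggregate with an OVER clause framed as ROWS BETWEEN 2 PRECEDING AND CURRENT ROW.

```python
from collections import deque


def moving_average(values, window):
    """Trailing moving average over the current row and the (window - 1) rows before it."""
    recent = deque(maxlen=window)  # old values fall off automatically
    averages = []
    for value in values:
        recent.append(value)
        # Early rows average over fewer values, as a window frame would
        averages.append(sum(recent) / len(recent))
    return averages


# Hypothetical sample data: five consecutive readings of some measure
daily_reads = [10, 20, 30, 40, 50]
print(moving_average(daily_reads, 3))  # [10.0, 15.0, 20.0, 30.0, 40.0]
```

Smoothing a measure like this before it reaches the DSV is one way the relational side could take over part of the preparation work.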

Dodging Disaster with DSVs, Diagrams and Data Types

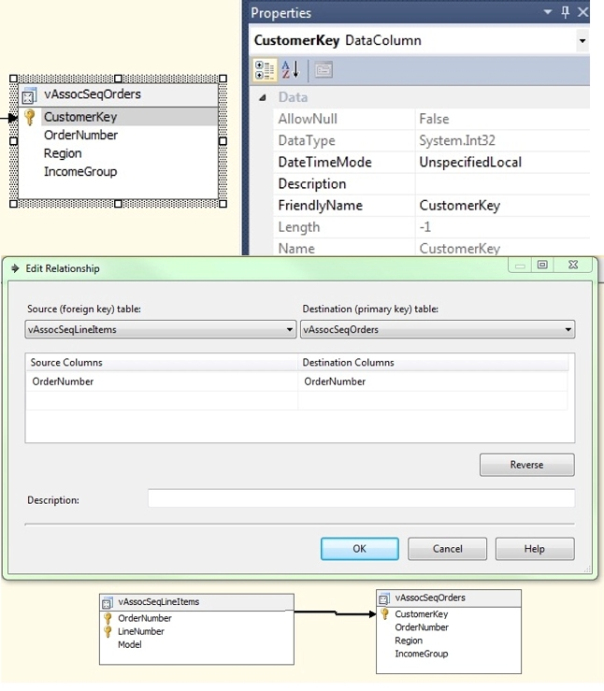

After setting the usual Project Properties and deciding on either a relational database or cube as your source, you’d normally create a Data Source with some basic connection information, then a Data Source View (DSV) that selects specific data from it, all of which is pretty straightforward. There are some potential pitfalls to watch out for at this stage though, especially the fact that relationships in the DSV diagrams are depicted in a different direction than in Visual Basic. If you come from the VB world like I do, you’d expect the primary key to be on the left side in the second diagram in Figure 1, which can lead to a lot of confusion if you don’t pick up on this difference right away.

Figure 1: Data Source Views

It is important to watch out for data type mismatches, which can lead to a lot of frustrating errors that can be difficult to track down. Your data will likely be in one format in the data source, which may include T-SQL data types if you’re trying to mine a relational database, but will be converted to .Net-style data types in the DSV, sometimes with unexpected results. By checking the data types in the SSDT Properties window as below, we can see that the CustomerKey column imported from the AdventureWorks 2012 Data Warehousing database is assigned a System.Int32 type. After crossing that hurdle, you also have to deal with DMX data types, which are assigned when you build a mining structure from a DSV. These include Text, Long, Boolean, Double and Date, which is a much shorter list of types than those supported by the DSVs, or by T-SQL and MDX. I’ve seen numerous problems crop up in these conversions, particularly when Bit and Boolean columns are involved, so keep on the lookout and be prepared to alter, cast or convert the data types at any of these three levels. Trust me, changing the data type later in the process is asking for trouble with arcane error messages that cost a lot of time to track down, so prepare ahead of time.

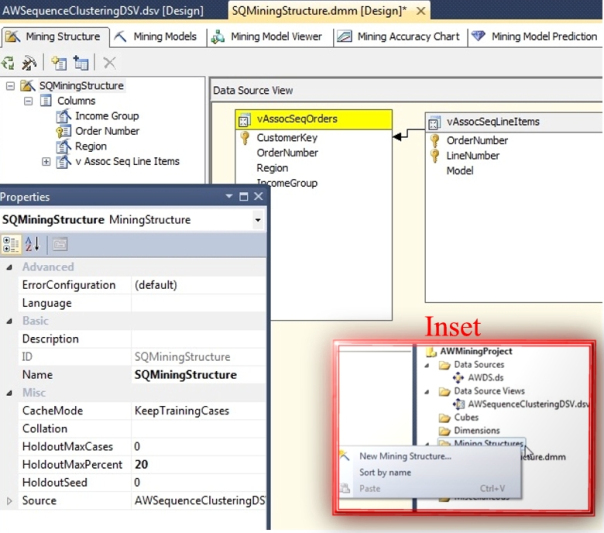

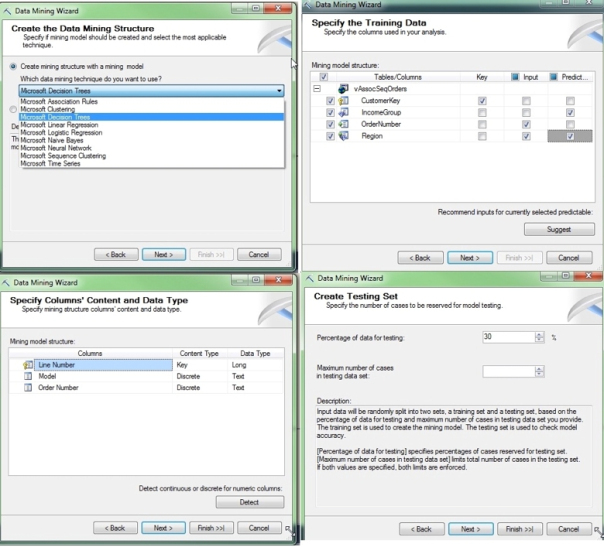

Data mining is performed on mining models, which are in turn built upon mining structures which organize data imported from a cube or relational source into a DSV. Each has its own tab at the top of Figure 2; I’ll go over the Mining Model Viewer tab in my next post and then tackle the Mining Accuracy Chart and Mining Model Prediction tabs towards the end of the series, once we have a stronger grounding in the fundamentals of data mining. Only one of the nine algorithms Microsoft supplies out of the box can be assigned to a particular model, but a structure can contain multiple models that use different algorithms, or different parameters if the same algorithm is used again. It is possible to create a new model and assign an algorithm after a mining structure is created, by simply adding a new model, but whenever possible I prefer to use the Data Mining Wizard to help cut down on Content and data type conflicts. To bring up the Wizard, right-click the folder labeled Mining Structures in the BIDS or SSDT Solution Explorer as depicted above in the Inset on Figure 2, then fill in the pages titled Select the Definition method and Create the Data Mining Structure, which are fairly self-explanatory.

Figure 2: Data Mining Structure Tabs and Properties (Inset – Calling Up the Data Mining Wizard)

After you’ve selected a DSV and identified any Table content types to include (which is a required step when using Sequence Clustering), the most important steps are to set the Content and data types appropriately in the Wizard pages labeled Specify the Training Data and Specify Columns’ Content and Data Type. On the next-to-last page, Create Testing Set, you choose either a percentage or a static number of rows from the DSV to hold out for testing the models; the remaining rows are used to train the algorithm. The amount of data and the way it is split can affect the data mining results, but I haven’t experimented with it much. It is apparently common to leave it at the default value of 30 percent; I have yet to see an example of a user in the Data Mining Forum or elsewhere setting either the maximum cases or percentage to very different values, with the most common runner-up being 20 percent. Once the mining structure is created, these can be set to new values with the HoldoutMaxCases and HoldoutMaxPercent properties, which are visible only when you right-click the structure in the Mining Structure tab, not when clicking it in the Solution Explorer. From there, you can also set related properties like CacheMode and HoldoutSeed that I haven’t played with much. It is also possible to bring up several aforementioned properties for individual columns by right-clicking them in the same tab, under the mining structure name, but take care when altering the Type or Content. As a word of caution, many of the errors I’ve seen crop up in SQL Server Data Mining (I’ll invent the acronym SSDM for brevity’s sake) revolve around either inappropriate Content types or data type mismatches, so it is a good idea to understand your data fairly well before creating a new mining project in the Wizard.
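The holdout settings can be pictured with a short Python sketch. This is an illustration under stated assumptions rather than a description of what SSAS does internally: the function and its parameters are hypothetical stand-ins for HoldoutMaxPercent, HoldoutMaxCases and HoldoutSeed, and the rule that the smaller limit wins when both are set is my reading of the documentation, so verify it before relying on it.

```python
import random


def split_holdout(cases, max_percent=30, max_cases=0, seed=0):
    """Split cases into (training, testing) lists.

    max_percent and max_cases loosely mimic HoldoutMaxPercent and
    HoldoutMaxCases; 0 means "no limit from this setting". When both are
    set, the smaller test set wins (an assumption, not verified behavior).
    """
    limits = []
    if max_percent:
        limits.append(len(cases) * max_percent // 100)
    if max_cases:
        limits.append(max_cases)
    test_size = min(limits) if limits else 0

    rng = random.Random(seed)  # a fixed seed makes the partition repeatable
    shuffled = list(cases)
    rng.shuffle(shuffled)
    return shuffled[test_size:], shuffled[:test_size]


rows = list(range(1000))  # hypothetical case IDs
training, testing = split_holdout(rows)
print(len(training), len(testing))  # 700 300
```

Running the split twice with the same seed returns the same partition, which is the point of pinning a seed when you want to compare models trained on identical data.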

Figure 3: Four Data Mining Wizard Windows

The Input and Predictable checkboxes on the Specify Training Data page are fairly self-explanatory. The data in any column selected as an Input will be used to determine the values in the dataset output by the algorithm, i.e. the Predictable columns, which can also be Inputs; this is really no more complex than feeding a set of parameters to a function and returning a set of values, albeit on the scale of whole datasets. Although you select an algorithm in the Wizard before reaching this step and before setting the Content and data types, it is crucial to set all of these correctly, because they constrain the types of algorithms you can use. This requires some planning ahead, long before you reach the point of creating a project or running the Data Mining Wizard, to avoid potential mismatches between Content types, data types and algorithms that can cost a developer a lot of time. Without prior planning, in fact, we won’t even know what algorithms to apply in order to get back meaningful, useful information.

Discontent with Content

The first step in any data mining project must be to understand the data involved, even before we decide what questions we want to ask about it. Because SSDM operates on either an SSAS or regular OLTP database, it would of course be helpful to apply standard data modeling techniques when building the data sources, with everything from basic foreign key relationships and primary keys to the kinds of deep insights that experts like Joe Celko specialize in. This isn’t a tutorial in Data Modeling 101, so I won’t get into a discussion of prerequisites like modeling skills that many DBAs already have a much deeper understanding of than I do. DBAs new to SSDM will, however, have to look at data modeling from an unfamiliar angle. In addition to all of the usual work that goes into building a cube or relational model, such as identifying appropriate data types, data miners must be able to categorize their columns by meaning, particularly when it implies some type of order to the data in the column.

When building a mining structure from a DSV, you must set a property called Content for each column, which can be set to one of the following choices: Key, Key Sequence, Key Time, Table, Discrete, Discretized, Continuous, Cyclical and Ordered. It is also possible to mark a column as Classified in conjunction with the Classified column property, to identify one column as the statistical PROBABILITY, VARIANCE, STDEV, PROBABILITY_VARIANCE, PROBABILITY_STDEV or SUPPORT of another column, but this only applies to custom data mining algorithms, so I won’t venture into this advanced topic until the end of the tutorial series. A column designated with the Key type is simple to understand, because it is basically equivalent to a primary key in a relational table; Key Sequence is likewise simply the key when Sequence Clustering is chosen as the algorithm for data analysis, while Key Time is the key when using the Time Series algorithm. A Table type is basically equivalent to a dependent table joined by a foreign key, but I will not discuss this in detail until my tutorial on Sequence Clustering, since it is most relevant in that algorithm. I will also postpone until then an explanation of the Cyclical and Ordered types, which are simply treated as Discrete by all nine algorithms except Sequence Clustering. To put it briefly, Cyclical indicates a cyclical ordered set, while Ordered identifies an order but without implying a rank. The classic textbook example of Cyclical data is a week, which repeats itself every seven days. An example of Ordered data familiar to programmers might be the iterations in a Visual Studio Team Foundation Server project, in which tasks in Iteration 1 come prior to those in Iteration 10, but without the latter necessarily being ten times more important, or coming a fixed multiple of time after the former. There is no fixed magnitude in such a rank, but there is in other types of data, such as classes of weight in boxing.
Again, these fine distinctions are only important when using the Sequence Clustering algorithm, which is a complex topic I will discuss much later in the series.

The most common Content distinctions you will need to make before creating a mining structure are between the Discrete, Discretized and Continuous types. The first of these is most useful for designating categories which aren’t ordered, with the classic example most often given in the data mining literature being a column identifying gender. As BOL puts it, a Discrete column “cannot imply ordering, even if the values are numeric,” nor can it contain fractional values. A region code for each state or province would be a Discrete content type, but not a number implying a rank or order to different geographic data types, such as the number 1 for a village, 2 for a city, 3 for a county, 4 for a state and 5 for a nation. As long as the latter type of column does not contain fractions, it can still be designated as Discrete, but the analysis performed on it won’t be ordered in any way; a village would be treated with the same significance as a state, which might lead to poor interpretations of the results the data mining algorithms return. It would be better to designate such a column as Continuous, which is one of only two choices possible when data contains fractional values, as is the case when SQL Server data types like decimal, numeric or float are involved. Association Rules, however, does not allow Continuous types as inputs or predictable attributes, which presents a dilemma that can be solved only by either excluding that column or designating it as Discretized. Choosing the latter allows you to operate on continuous data which might be crucial to interpreting any mining results, but at the cost of any order or rank that might be implied by the data type. SSAS breaks up the data in any column labeled as Discretized into buckets, in a process which is known as discretization, but the buckets have no relationship to each other. For example, suppose that you have a column of the SQL Server decimal data type that includes the values 1, 1.1, 1.5, 3 and 3.3.
The first two values might be included in the same bucket, the middle value in a second bucket and the last two in a third, but the value 3.3 won’t be treated as a multiple of 1.1, despite the fact that the original data type implies it. Instead, 3.3 and 1.1 will be treated as separate states, such as the codes 1 and 2 for male and female in an ISO standard gender column. Unfortunately, this is the only alternative when dealing with algorithms which don’t allow Continuous columns. Unless you set the DiscretizationBucketCount property for a Discretized column, SSAS will automatically default to five buckets for a relational source, or the square root of the number of distinct values for a cube source.

Users can also select between two separate DiscretizationMethod property values for finer control of the discretization process, or allow SQL Server to choose for them by selecting Automatic. When set to Clusters, SSAS samples 1,000 values in the column and runs several trials of the Expectation Maximization (EM) clustering method (which is also used internally by several algorithms), but this sophisticated approach to bucketing requires more processing resources and only works on numeric data. The drawback of EqualAreas is that it creates buckets of roughly equal size, which doesn’t work well if the distribution curves of the data are lopsided. Thus far, after some experimentation (inspired by Ben-Gan’s book) I’ve sometimes gotten better results by creating my own buckets manually, through such means as the NTILE windowing function in T-SQL, which behaves similarly to EqualAreas. It may also be helpful to do your own sampling on columns you plan to designate as Continuous in the mining structure, to discover whether the distribution curve is lopsided, forms a bell curve, or is simply a straight line with no difference in likelihood between the values; if so, you provide a hint to your data mining algorithm by setting the Distribution property for that column to Log Normal, Normal or Uniform, respectively. Prior to importing your data it is also wise to think about how you will set the ModelingFlags for a particular column, to select a null handling strategy. In a mining structure, you can set the NOT_NULL flag, which will halt all processing if a single null is encountered. After you build one or more mining models on a structure, you can set MODEL_EXISTENCE_ONLY to indicate that the column is either Missing or Existing, if the presence of the data is more important than its actual value. 
For the Linear Regression and Decision Trees algorithms you can also set a REGRESSOR flag, which I’ll address when those particular algorithms come up for discussion in future columns.
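For readers who want to see what manual, equal-count bucketing looks like, here is a rough Python sketch that imitates the behavior of NTILE as I understand it: rows are ranked, then dealt into groups of near-equal size, with any leftover rows going to the earliest groups. The function name is my own, and this is neither the EqualAreas implementation nor anything SSAS runs internally.

```python
def ntile(values, buckets):
    """Assign each value a 1-based bucket number, NTILE-style.

    Values are ranked in ascending order and dealt into `buckets` groups of
    near-equal size; when the count does not divide evenly, the earlier
    groups get one extra row each.
    """
    ordered = sorted(values)
    base, extra = divmod(len(ordered), buckets)
    assignments = []
    start = 0
    for bucket in range(1, buckets + 1):
        size = base + (1 if bucket <= extra else 0)
        assignments.extend((value, bucket) for value in ordered[start:start + size])
        start += size
    return assignments


# The sample decimal column discussed earlier, split into three buckets
print(ntile([1, 1.1, 1.5, 3, 3.3], 3))
# [(1, 1), (1.1, 1), (1.5, 2), (3, 2), (3.3, 3)]
```

Notice that 1.5 and 3 land in the same bucket even though there is a wide gap between them. Equal-count bucketing pays no attention to where the natural breaks in the data fall, which is the weakness with lopsided distributions mentioned above.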

Choosing an Algorithm, i.e. Asking the Right Questions

The nine algorithms included in SSDM are so useful that it is sometimes possible to extract valuable information out of the results without really understanding their functions. Nevertheless, it might obviously be more productive to have at least a crude understanding of your data and the questions you want to ask of it before choosing an algorithm, especially since the data mining method you choose constrains which column Content types you can use. Each algorithm requires a key column, but otherwise provides the user with great leeway in choosing the columns to use as inputs and/or outputs, as long as the Content type is allowed for that algorithm. Simply adding all of the columns in your DSV as both inputs and outputs indiscriminately may not only have a negative impact on performance, but clutter the results with data that is either meaningless or even misleading. For example, there may be an obvious reason to add a column titled DayOfWeek and populated with values like Monday, Tuesday, Wednesday etc. as an input to a Time Series algorithm, since you may want to predict what the value of some measure will be on a future day of the week. The value of that measure would be identified as a Predictable attribute and perhaps also as an input, if you believe there is a chance that it may affect the value of some other measure. But there will rarely be a logical reason to use DayOfWeek as a Predictable attribute in such a scenario, because we already know when the days of the week occur. Think of the inputs and predictable outputs as plugs in an old-fashioned telephone switchboard; you, as the operator, must make intelligent choices about which columns to plug in as inputs, predictable outputs, or both, otherwise you’re just going to a lot of trouble to get back a lot of undecipherable noise. In addition, parameters are available for fine-grained control of the behavior of each algorithm. 
That is a more advanced topic that I will discuss in future articles on each individual algorithm, because there are some differences in the parameters they take.

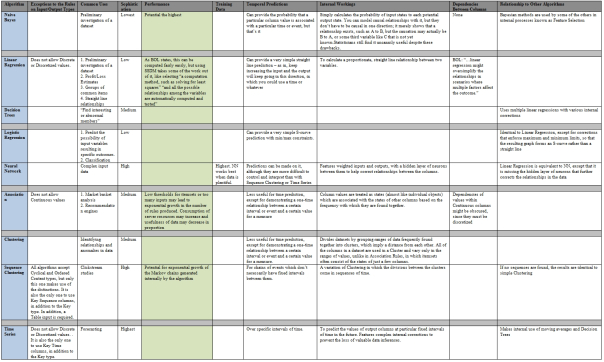

Each algorithm can spit out a mass of data, complete with fancy diagrams you can view in BIDS or SSDT, but it may be meaningless if you don’t pick the right tool for the job. Some of the distinctions between them are quite fine, while others are stark and obvious; I’m still learning the ropes, but have devised the crude chart below that may be of use in differentiating usage scenarios for each. View Figure 4 maximized to see a comparison of considerations when choosing a data mining method. As I touched on earlier, one of the first distinctions we have to make is between the Content types that we can use as inputs and outputs, since some of the algorithms simply won’t accept certain types of data. All of the algorithms accept the Key, Table, Cyclical and Ordered Content types, but as depicted below, there are a few differences between them, such as the handling of Cyclical and Ordered, the acceptance of Continuous, Discrete or Discretized content, or requirements for Key Sequence or Key Time columns. The Cyclical, Ordered, Table and the three Key types cannot be used as Predictable columns, but all of the other Content types can be designated as outputs if the algorithm in question accepts them as inputs. The most important differences are that Linear Regression and Time Series do not accept Discrete or Discretized values, Association Rules does not accept Continuous columns and Sequence Clustering requires a Table input. Sequence Clustering is also the only algorithm that performs special processing of Cyclical and Ordered data.

Figure 4: Choosing an Algorithm (open the picture for a maximized view)

The Neural Network algorithm works best on complex datasets, but that also means that it requires a larger amount of data than other data mining methods to reach its maximum utility. Both Naïve Bayes and Linear Regression are useful for preliminary investigations of data, while the latter is also often applied to simple profit-loss margins for particular products. Logistic Regression is similar to Linear Regression, except that it is more useful for predicting specific states as outcomes rather than a range of possible values. Sequence Clustering is often used for clickstream studies, Time Series for forecasting, Association Rules for E-commerce recommendation engines and market basket analysis and Clustering for identifying relationships and abnormal values for members of a dataset. Decision Trees is also often used to “find interesting or abnormal members,” as BOL puts it. These are just a few of the most common applications for these algorithms, which have some quite subtle overlaps in functionality.

Another way to differentiate between them is by the kind of temporal predictions they make. Neural Networks can make useful temporal predictions, but they are more difficult to set up and interpret than the two leading time-based algorithms. Sequence Clustering works best for chains of events that don’t necessarily have fixed intervals of time between them; for example, a cardiologist might find precursive signs of an irregular heartbeat that only occasionally strikes a patient at random times of the day. Time Series is best used for making predictions for values of measures in specific future intervals of time, based on inputs of specific intervals of time. It is the most sophisticated of the data mining methods in SSDM, followed closely by Sequence Clustering and the Neural Network method, with Clustering, Association Rules and Decision Trees being of medium complexity and Logistic Regression, Linear Regression and Naïve Bayes having the least sophistication. I’ll delve into the internal workings of each algorithm when the time for its particular blog post comes, but it is important to mention that Time Series contains detailed logic to correct a number of erroneous conclusions that can arise when extrapolating existing time data. It is possible to use the other algorithms to predict future events, if we use time values as inputs, but they contain no such corrective logic and thus make gargantuan assumptions that current relationships between columns will always remain the same. For example, Linear Regression can be thought of as a simple straight line: increase the value of an input and the output will increase or decrease in the same proportion that it always has. Every human being makes predictions like this, by half-consciously using the same methodology: the darker the sky gets, the more it rains, for example. 
If we use time as an input, we can make similar statements, such as, “last year I earned $100 on an investment, this year that changed to $200, so next year I will make $300.” Of course, one weakness of Linear Regression is its simplistic interpretations, which may not take into account other inputs, such as a dew point or speed of cloud movement, which may prevent it from raining as much as it did the last time the sky got particularly dark. Similarly, it may be naïve to make time-based assumptions; the investor in this example may actually lose money, if they don’t take other variables into account. It is important to keep in mind with all of these algorithms that they don’t work magic; no matter how brilliantly they are designed, they can’t violate the Ex Nihilo principle by creating something out of nothing. If we supply them with bad data, the principle of “Garbage In, Garbage Out” applies, but if we don’t supply enough data, or data of the right kind, we simply won’t get anything useful back at all. Time Series features some brilliant internal corrections to minimize the loss of valuable inferences from the data it is given, but it can’t magically predict the future out of thin air.
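The investment example boils down to fitting a straight line and extending it. The following Python sketch does just that with ordinary least squares; it is a bare-bones illustration of the idea, not the Linear Regression algorithm as SSDM implements it, and the function name and year numbering are my own.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


years = [1, 2]         # last year, this year
earnings = [100, 200]  # dollars earned on the investment
slope, intercept = fit_line(years, earnings)

# Extend the line one more year: the naive forecast from the example
print(slope * 3 + intercept)  # 300.0
```

Nothing in the fit knows about market conditions or anything else outside the two data points, which is exactly why the $300 forecast deserves suspicion.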

It is also helpful to think of each algorithm as building on the others, which is why I divided the chart above into several segments. Naïve Bayes is not explicitly used as a building block for any of the others, but Bayesian methods are sometimes used by other algorithms in an internal process known as Feature Selection, which I will explain in greater detail in later articles. Logistic Regression can be viewed as a variation on Linear Regression, with constraints that cap the results at certain minimum and maximum outcomes, thereby forming S-curve graphs rather than straight lines. It differs from the Neural Network (NN) method only in the fact that it is missing the internal layer of weighted neurons that NN uses to further correct relationships deduced from the data. Decision Trees also builds upon Linear Regression, but by adding corrections to multiple simultaneous regressions. Sequence Clustering builds upon Clustering, while Time Series uses moving averages, regression and Decision Trees internally. We can also differentiate the nine algorithms from each other by the way they handle dependencies within the data. Naïve Bayes assumes all of the inputs are independent from each other, but has been proven so surprisingly useful that statisticians have commented on its uncanny advantages in various academic journals. Linear Regression may oversimplify important dependencies between columns, while Association Rules may obscure dependencies between values within a column by discretizing them and treating them as independent states. Suppose, for example, that we are dealing with a dataset in which movies are rated on a scale from 1 to 5. Association Rules may discretize these values into five buckets – or worse still, some number of buckets other than five – and then create rules associating certain values with other columns, without taking into account the fact that the numbers 1 through 5 are part of a continuum of ordered values with fixed intervals.
The number 1 thus has no dependent relationship to the number 3; they are both treated as states, like the name of a business or the GUID of a database object might be. Naïve Bayes and Linear Regression present much less of a performance problem than other algorithms do, but an explosive growth in the discovery of the rules relating items together can occur in Association Rules, if too many inputs are chosen or the thresholds for making rules are set too low. BOL also warns about the possibility of an exponential growth in the Markov models used internally by Sequence Clustering, but thus far I have only had my development machine paralyzed by the Association Rules problem. To make matters worse, the excess rules generated when this problem crops up may be entirely useless and simply clutter the results, at the cost of a significant performance hit to boot. Nonetheless, Association Rules can provide additional valuable insights into your data, depending on what questions you want to ask of it. It is often used in conjunction with Clustering models, which seem similar, in that the former algorithm groups things into itemsets and the latter into clusters. There are some subtle differences that count for a lot though. Clustering takes all of the input columns into account when forming a set, while Association Rules only evaluates a few of them; for example, a single cluster might include specific values or ranges for all ten columns in a dataset, while an itemset may group together individual states for just three of the ten, depending on how you’ve set up your inputs, outputs and parameters. A cluster is akin to an object with properties, whereas each part of an itemset is a state that is treated like an object, which is associated by a probability level with some other state of another column that is also treated as a discrete object.
Association Rules establishes similarities between states based on their frequency, while Clustering divides dataset values into common groups; the latter implies a distance between clusters, but no distance is implied between itemsets.
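The way a low threshold inflates the number of itemsets can be seen even in a toy example. The Python sketch below counts how often each combination of items appears across a handful of made-up shopping baskets and keeps only those meeting a minimum support. It is a brute-force illustration of the concept, not the algorithm SSAS uses, and the function, parameter names and data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def frequent_itemsets(baskets, min_support, max_size=3):
    """Count every itemset of up to max_size items; keep those seen often enough."""
    counts = Counter()
    for basket in baskets:
        items = sorted(set(basket))  # ignore duplicates, fix the item order
        for size in range(1, max_size + 1):
            for itemset in combinations(items, size):
                counts[itemset] += 1
    return {itemset: n for itemset, n in counts.items() if n >= min_support}


# Hypothetical market-basket data
baskets = [
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "eggs"],
]

for threshold in (3, 2, 1):
    print(threshold, len(frequent_itemsets(baskets, threshold)))
# 3 2
# 2 5
# 1 9
```

With only four baskets, dropping the threshold from 3 to 1 more than quadruples the number of itemsets, and several of the extras appear just once. Scale that up to many columns with many states each and it is easy to see how a development machine can grind to a halt.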

At present, I plan to address Association Rules in Part 6 of this series of blog posts and Clustering in Part 7. I’m essentially going in order of sophistication, using the simpler algorithms and concepts as building blocks to explain the more complex ones that make use of them later on. Part 1 will begin with Naïve Bayes, with Linear Regression, Logistic Regression, Decision Trees and Neural Network following that. Part 8 will be divided into two posts, one dealing with Sequence Clustering and another explaining the uses of nested tables, which that algorithm makes extensive use of. The last data mining method, Time Series, may be divided into two parts if that’s what it takes to explain the two separate algorithms that make it up, ArtXP and ARIMA. After that I’ll hopefully do a post or two on retrieving values through DMX statements like prediction joins and prediction functions, but for the sake of simplicity I’m not going to delve into that until the much simpler task of retrieving the results in the BIDS and SSDT Content Viewers has been taken care of. The use of prediction joins and functions is fairly common, but there is no sense in further complicating a topic that is already complex before we need them. After that I’ll tackle other useful aspects of SSDM, including Cross Validation and Lift Charts, Data Mining Dimensions, Predictive Model Markup Language (PMML), Querying Metadata through Schema Rowsets and other means, the Mining Model Prediction Tab, OLAP mining models and drillthrough. Keep in mind that the list of features that are unavailable in SQL Server 2012 Standard Edition is quite long, so if your organization really wants to get into some serious data mining, it may pay to go the whole hog and get either Enterprise Edition or Business Intelligence Edition. 
The new windowing functions in SQL Server 2012 may make it easier than ever before to do some data mining on the cheap on the relational side, but the data mining tools in SSAS are still far more sophisticated and valuable. It may be possible now, for example, to recreate some Time Series functionality purely through T-SQL, but it would entail so much research, developer time, testing and risk that it probably wouldn’t be economical to do so. Unless you need to build your own variation on one of these algorithms, it will probably always pay in the long run to simply buy the Enterprise or BI Edition off the shelf rather than recreating its functionality on your own.

Before we can get into a discussion of higher functionality of that kind, we have to take care of the basic task of getting some data to play with. I want to kill two birds with one stone by familiarizing myself, as a rookie, with areas of SQL Server that I need to learn more about, while simultaneously using data that other DBAs can relate to. I understand SQL Server I/O just well enough to roughly decipher the results of its I/O dynamic management views (DMVs), but little enough that I need to learn more about the topic. There are many more memory counters and DMVs to choose from in the T-SQL arsenal, but I would be out of my depth in deciphering them; that area of SQL Server is still beyond my ken. Therefore, I’m going to poll some performance counters, sys.dm_exec_query_stats, sys.dm_io_virtual_file_stats, sys.dm_os_wait_stats and possibly the System Log every minute for a week or two and then use the results as inputs to the various data mining algorithms. I’ll try to explain as succinctly as possible why I used certain columns in the datasets as inputs, predictable outputs or both, and how to set column properties such as the Content types appropriately. I’ll also mess with the algorithm parameters and see what happens. I’m not sure what I’ll find, but I expect that there will be spikes and troughs in I/O depending on other events in the operating system, as well as on what I happen to be doing with my new copy of SQL Server 2012 Developer Edition at the time. I also expect to learn a lot I didn’t know about SSDM, because my brain still has too many null spots on that topic; hopefully my internal dataset on mining will be a little less sparse at the end of the process.
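One practical wrinkle with this kind of polling is that the counters in sys.dm_io_virtual_file_stats are cumulative since the instance last started, so per-minute activity has to be derived by subtracting one poll from the next. The following is a minimal Python sketch of that step, not the collection process actually used for this series. The function name, the choice of three counter columns and the sample numbers are all hypothetical, and the query string is shown for context only and is never executed.

```python
# Query one might run once a minute (context only; not executed in this sketch).
FILE_STATS_QUERY = """
SELECT database_id, file_id, num_of_reads, num_of_writes, io_stall
FROM sys.dm_io_virtual_file_stats(NULL, NULL);
"""

KEY_COLS = ("database_id", "file_id")
COUNTER_COLS = ("num_of_reads", "num_of_writes", "io_stall")


def interval_deltas(snapshots):
    """Turn cumulative counter snapshots into per-interval activity rows.

    snapshots: list of (polled_at, rows) tuples in polling order, where each
    row is a dict holding the KEY_COLS and COUNTER_COLS values.
    """
    results = []
    for (_, prev_rows), (polled_at, curr_rows) in zip(snapshots, snapshots[1:]):
        prev_by_key = {tuple(r[k] for k in KEY_COLS): r for r in prev_rows}
        for row in curr_rows:
            key = tuple(row[k] for k in KEY_COLS)
            prev = prev_by_key.get(key)
            if prev is None:
                continue  # file first seen in this poll, so there is no baseline yet
            deltas = {c: row[c] - prev[c] for c in COUNTER_COLS}
            if any(d < 0 for d in deltas.values()):
                continue  # counters went backwards, e.g. after a restart
            results.append({"polled_at": polled_at, **dict(zip(KEY_COLS, key)), **deltas})
    return results


# Hypothetical sample: three polls of one file, with a counter reset before the third.
snapshots = [
    (0, [{"database_id": 1, "file_id": 1, "num_of_reads": 100, "num_of_writes": 50, "io_stall": 1000}]),
    (1, [{"database_id": 1, "file_id": 1, "num_of_reads": 130, "num_of_writes": 55, "io_stall": 1200}]),
    (2, [{"database_id": 1, "file_id": 1, "num_of_reads": 10, "num_of_writes": 2, "io_stall": 30}]),
]

deltas = interval_deltas(snapshots)
for row in deltas:
    print(row)
```

Rows shaped like these deltas, one per file per minute, are the sort of thing that could then be fed to a mining structure as inputs. Skipping the interval in which the counters reset is one possible design choice; flagging it instead would be equally defensible.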
Because I have not been paid to do this yet, I expect a flood of corrections from people who actually know what they’re talking about; as I said before, this series of tutorials would be presumptuous if there weren’t so little else out there beyond Microsoft’s own material. At times my explanations will be incomplete or even wrong, but even poor information is sometimes better than none, especially when such a useful tool is languishing for lack of publicity. This stairway is not only rickety but long, because this topic is a tall order. Before diving into the algorithms and their results, it would be wise not only to explain the dataset we’re going to use (such as the columns of the DMVs and the System Log), but also to give a simple introduction to the statistics we get back and what they mean. Each algorithm returns different results, but there are some commonalities in the datasets they return and in the graphs used to depict them in BIDS and SSDT. In A Rickety Stairway to SQL Server Data Mining, Part 0.1: Data In, Data Out I will try to provide a foundation in the data we’re going to input and the outputs we can expect to find, so that we have something solid to fall back on in case the stairway of understanding gives way, so to speak. I’m going to try to explain the topic of statistics in a couple of paragraphs, without using equations, which will be a trick commensurate with one by evil magician David Blaine. We won’t get into the meat and potatoes of the series until A Rickety Stairway to SQL Server Data Mining, Part 1: The Naïve Bayes Algorithm, but come back after the Thanksgiving holiday for that statistical gimmick and see if I can pull it off. Stay tuned – statistics doesn’t have to be drab and boring, not when we can end blog posts with a good cliffhanger like this.