OK, so that is quite a contradictory title, but unfortunately it is true. There is a common misconception that the query with the highest percentage relative to batch is the worst performing. Simply put, it is a lie, or more accurately we dont understand what these figures mean.

Consider the two below simple queries:

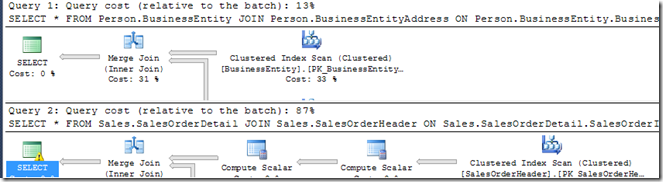

SELECT * FROM Person.BusinessEntity JOIN Person.BusinessEntityAddress ON Person.BusinessEntity.BusinessEntityID = Person.BusinessEntityAddress.BusinessEntityID go SELECT * FROM Sales.SalesOrderDetail JOIN Sales.SalesOrderHeader ON Sales.SalesOrderDetail.SalesOrderID = Sales.SalesOrderHeader.SalesOrderID

After executing these and looking at the plans, I see this :

So, a 13% / 87% split , but 13% / 87% of WHAT ? CPU ? Duration ? Reads ? Writes ? or some magical weighted algorithm ?

In a Profiler trace of the two we can find the metrics we are interested in.

CPU and duration are well out but what about reads (210 and 1935)? To save you doing the maths, though you are more than welcome to, that’s a 90.2% / 9.8% split. Close, but no cigar.

Lets try a different tact. Looking at the execution plan the “Estimated Subtree cost” of query 1 is 0.29449 and query 2 its 1.96596. Again to save you the maths that works out to 13.03% and 86.97%, round those and thats the figures we are after. But, what is the worrying word there ? “Estimated”.

So these are not “actual” execution costs, but what’s the problem in comparing the estimated costs to derive a meaning of “Most Costly”. Well, in the case of simple queries such as the above , probably not a lot. In more complicated queries , a fair bit.

By modifying the second query to also show the total number of lines on each order

SELECT *,COUNT(*) OVER (PARTITION BY Sales.SalesOrderDetail.SalesOrderID) FROM Sales.SalesOrderDetail JOIN Sales.SalesOrderHeader ON Sales.SalesOrderDetail.SalesOrderID = Sales.SalesOrderHeader.SalesOrderID

The split in percentages is now 6% / 94% and the profiler metrics are :

Even more of a discrepancy.

Estimates can be out with actuals for a whole host of reasons, scalar UDF’s are a particular bug bear of mine and in-fact the cost of a udf call is entirely hidden inside the execution plan. It always estimates to 0 (well, a very small number).

Take for instance the following udf

Create Function dbo.udfSumSalesForCustomer(@CustomerId integer) returns money as begin Declare @Sum money Select @Sum= SUM(SalesOrderHeader.TotalDue) from Sales.SalesOrderHeader where CustomerID = @CustomerId return @Sum end

If we have two statements , one that fires the udf and another that doesn't:

Select CustomerID from Sales.Customer order by CustomerID go Select CustomerID,dbo.udfSumSalesForCustomer(Customer.CustomerID) from Sales.Customer order by CustomerID

The costs relative to batch is a 50/50 split, but the has to be an actual cost of firing the udf. Indeed profiler shows us :

No where even remotely near 50/50!!!!

Moving forward to window framing functionality in SQL Server 2012 the optimizer sees ROWS and RANGE ( see here for their functional differences) as the same ‘cost’ too

SELECT SalesOrderDetailID,SalesOrderId, SUM(LineTotal) OVER(PARTITION BY salesorderid ORDER BY Salesorderdetailid RANGE unbounded preceding) from Sales.SalesOrderdetail go SELECT SalesOrderDetailID,SalesOrderId, SUM(LineTotal) OVER(PARTITION BY salesorderid ORDER BY Salesorderdetailid Rows unbounded preceding) from Sales.SalesOrderdetail

By now it wont be a great display to show you the Profiler trace reads a *tiny* bit different.

So moral of the story, Percentage relative to batch can give a rough ‘finger in the air’ measurement, but dont rely on it as fact.