Introduction

The problem statement is to execute a Data Factory Pipeline automatically every time an email is received with a predefined subject and an attachment file. The attachment file needs to be used in the Azure Data Factory Pipeline execution.

Here are the Azure Resources used:

Steps

I will explain the step-by-step process to design a Data Factory pipeline to be executed automatically with the attachment text file received through email. The Logic App workflow will be used to retrieve the mail attachment and save it in Azure Storage. An event based trigger will be created to execute the pipeline every time the file is available in Azure Storage.

Step 1

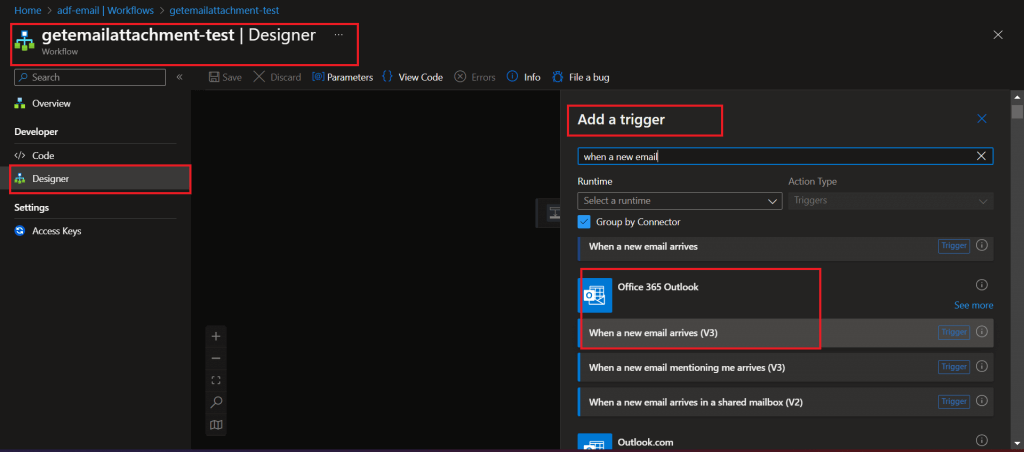

Create a Logic App workflow, named getemailattachment-test. Go to the Designer menu item and add a trigger, named When a new email arrives, deom the (V3) from Office 365 Outlook category.

Step 2

A few advanced parameters are selected and values are set in the trigger. Emails matching the parameter values will only be selected for processing in the Logic App workflow. You can see the settings in the image below.

Step 3

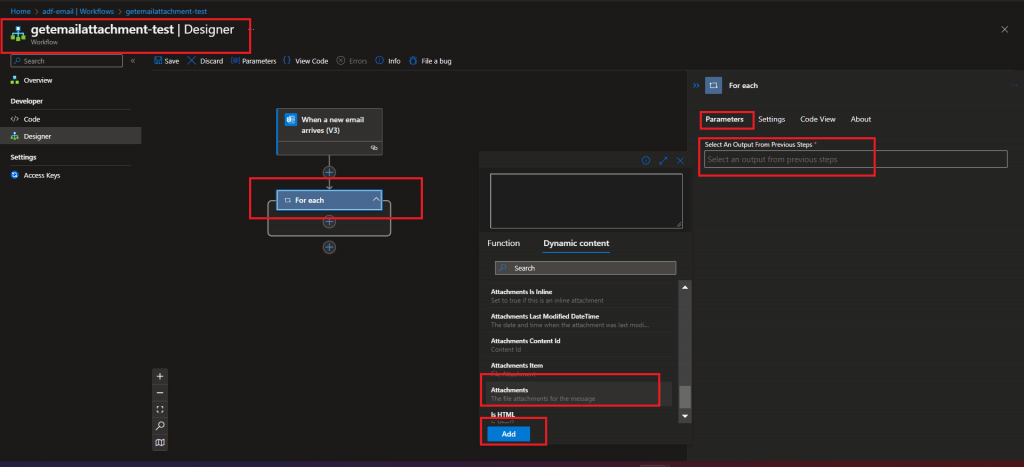

Add a "For each" action after the trigger in the workflow. Select the parameter value as attachments from the trigger output.

Parameter Value

triggerBody()?['attachments']

Step 4

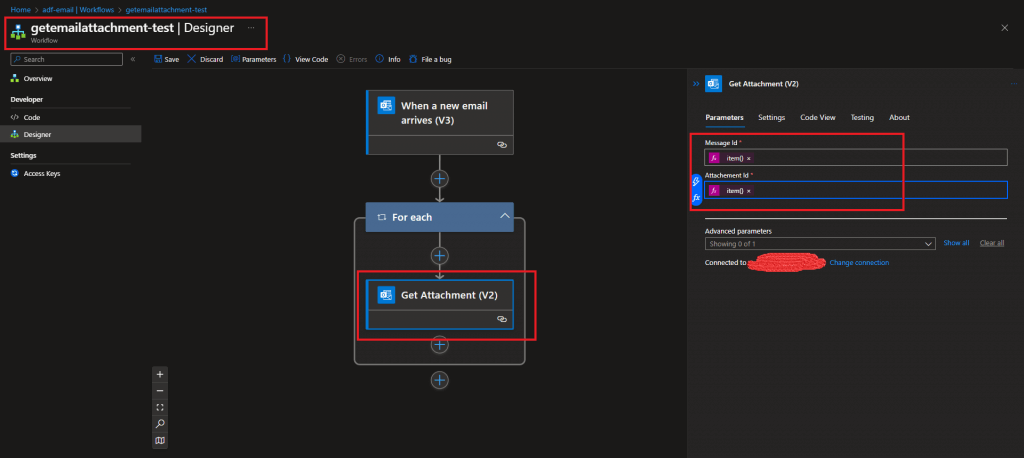

Add a Get Attachment (V2) activity inside the For Each block. Select the parameter values from the trigger output.

Message Id:

triggerBody()?['id']

Attachment Id:

item()?['id']

Step 5

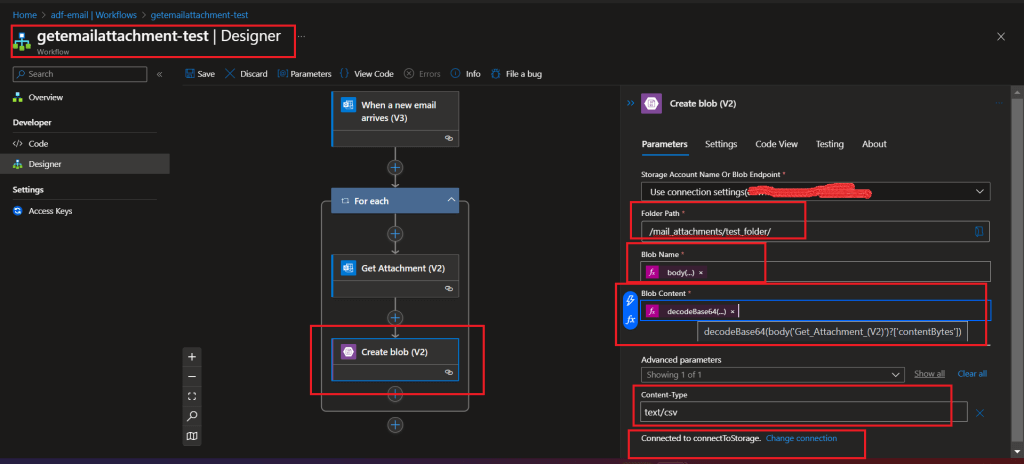

Add an activity, named Create blob (V2), after Get Attachment (V2) inside the For each block.

The Storage Account connection needs to be set first. The folder path is provided where the email attachment will be saved. Instead of a hardcoded folder path, dynamic content also can be provided. The Blob name and Blob Content parameter values are selected from the Get Attachment (V2) activity output.

Blob name

body('Get_Attachment_(V2)')?['name']

Blob Content

decodeBase64(body('Get_Attachment_(V2)')?['contentBytes'])The content type parameter value is set as text/csv to avoid other types of attachments to be copied, if any.

Save the workflow and enable it.

Step 6

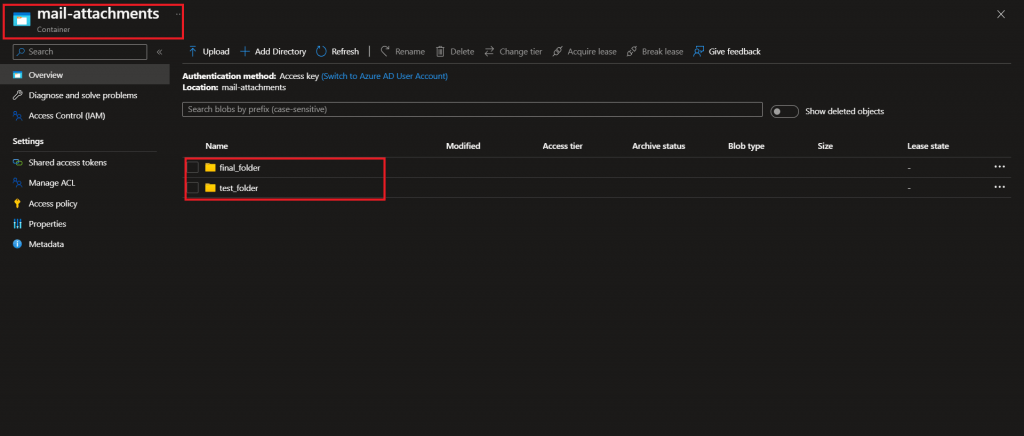

Create a container, named mail-attachments, under the ADLS Gen 2 Storage Account. Also, create two folders, named test_folder and final_folder, inside the container. The Logic App workflow execution should copy the CSV email attachment in the test_folder.

Both the folders are empty.

Step 7

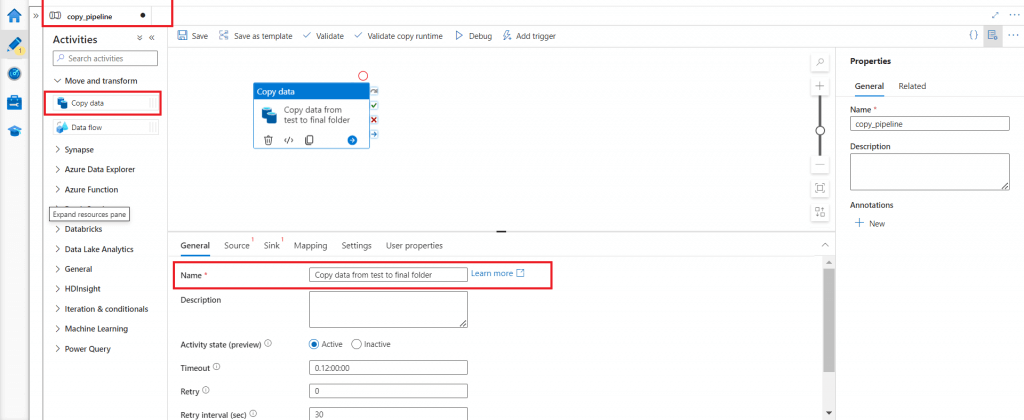

Create a new Azure Data Factory Pipeline, named copy_pipeline. A Copy data activity is added. This activity should copy the trigger_test.csv file from test_folder to final_folder as created in the last step.

Step 8

Create a new Storage events trigger, named trigger_copyfiles, for the copy_pipeline. Whenever the trigger_test.csv file is created in the test_folder of the mail-attachments container, this trigger should start and execute copy_pipeline.

Start the trigger if not already done.

Step 9

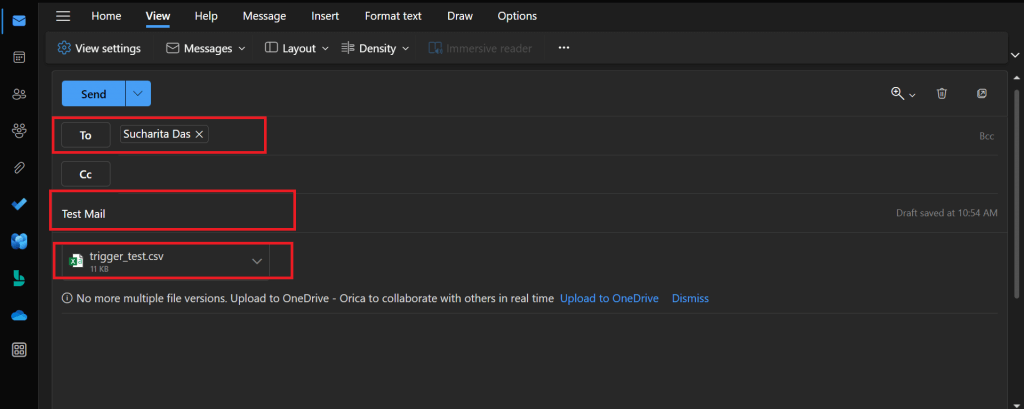

Send a mail to the Outlook Office 365 email id as configured in the Logic App. The Subject Line should match with the Subject filter used in the Logic App Workflow trigger.

The attachment named trigger_test.csv is added with the email.

Step 10

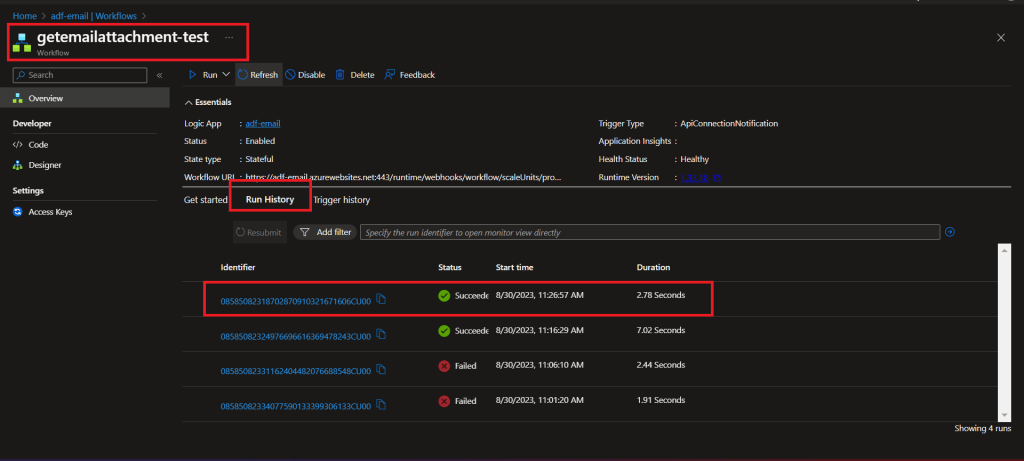

Once the email reaches the mailbox, the workflow is triggered. The run details of the workflow can be viewed from the Overview menu item.

Step 11

Once the workflow run is completed, trigger_test.csv file is avthe ailable in the test_folder of mail-attachments container.

Step 12

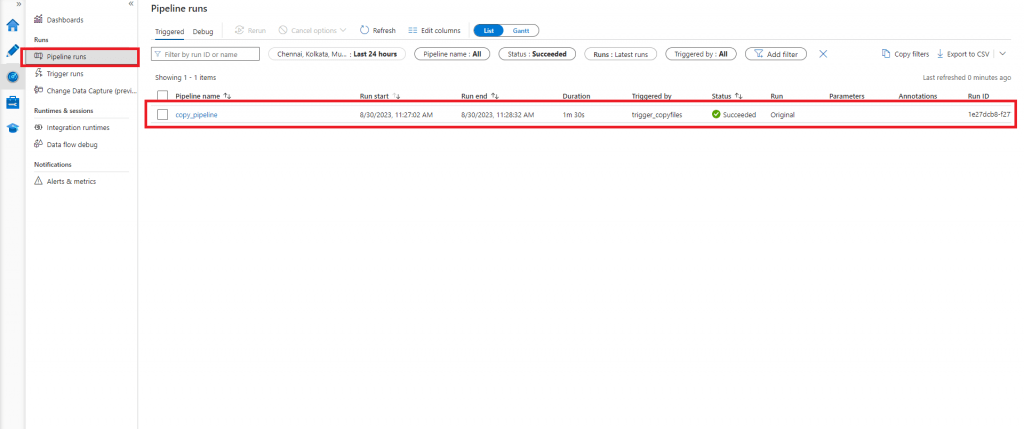

Go to the Monitor tab of Azure Data Factory Studio. The trigger, named trigger_copyfiles, should have started and succeeded.

Step 13

In the Pipeline runs menu item, the copy_pipeline should have succeeded as well.

Step 14

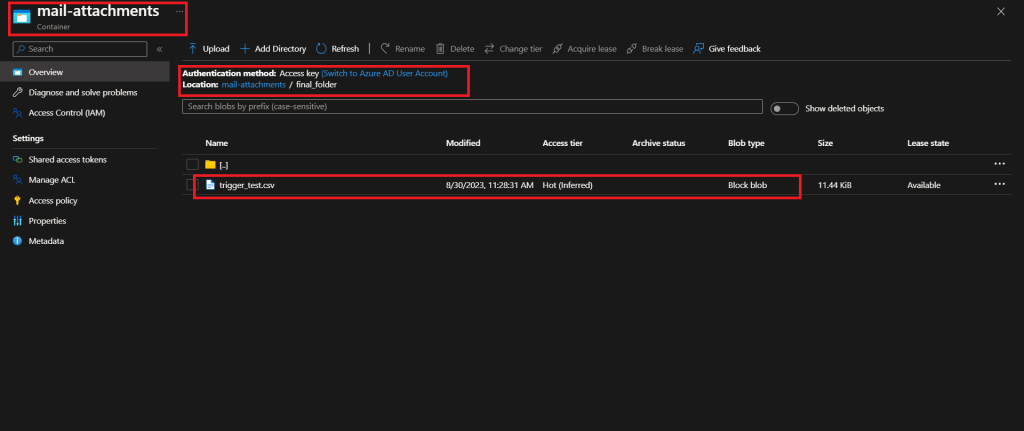

The file, named trigger_test.csv, is available in the final_folder after the successful completion of the Data Factory trigger and pipeline.

Conclusion

The Logic App workflow, Azure Data Factory Pipeline, Data Factory Trigger, and Storage Account together can make the entire process automated when the input data is sent in an email and need to be processed through Data Factory Pipeline. The email Inbox, workflow trigger run history, and Data Factory pipeline runs need to be monitored for end-to-end successful processing. If attachment file with the same name is sent to the Mailbox periodically, the Logic App workflow can be modified to first copy the existing attachment file in the source location of Storage Account to another folder and then copy the latest attachment file to continue the automated end-to-end file processing at a regular interval.