Introduction

Let’s face it – one of the most embarrassing things that can go wrong for a DBA is for the SQL Server to shut itself down because it runs out of drive space. This is past the time when we should have gone to the network team and told them we needed more space. This is the time when production is down, everyone is asking when the instance will be back up and the boss wants an update every 3 minutes until the database is back up. In short, this is a situation we all want to avoid. The simple task of monitoring drive space is one that, in my opinion, should be automated. If we do it right, we can even make some projections and tell the network team when we’re going to run out of space more than a day ahead of time.

This is the type of activity that will be limited to DBA use. I put these types of database objects in a DBA database that I keep on every server. I wrote this to work on SQL Server 2008 Standard Edition.

The act of monitoring drive space involves two activities: capturing drive space data to report on it and including some predictive reporting for when drives will run out of space.

Space: Capturing the Data

There are a great many ways to capture drive space data. This is the approach that I use. It’s done completely in T-SQL and doesn’t have any dependencies other than the command shell. This process runs daily as a database job and stores data about each drive in a table.

Table Creation

The first step is to build a couple of tables. I need a table to capture drive space data, and that table is pretty simple.

IF OBJECT_ID('dbo.DriveSpace', 'u') IS NOT NULL DROP TABLE dbo.DriveSpace;

CREATE TABLE dbo.DriveSpace (

ID Integer not null IDENTITY (1, 1),

CONSTRAINT DriveSpace_PK primary key clustered (ID),

Drive Char(1) not null,

Total Bigint not null,

Free Bigint not null,

EntryDate Datetime not null default GETDATE());For later reporting, I find it useful to have a table of drives on the server on which I want to report. I use this table because not all servers are configured with the same drive letters. They would be identical in an ideal world, but I live in the real world where not everything is perfect. This table is also a simple one.

IF OBJECT_ID('svr.ServerDrives', 'u') IS NOT NULL DROP TABLE svr.ServerDrives;

CREATE TABLE svr.ServerDrives (

ID Integer not null IDENTITY (1, 1),

CONSTRAINT ServerDrives_PK primary key clustered (ID),

ServerName Varchar(128) not null,

DriveLetter Char(1) not null,

Description Varchar(32) not null,

EntryDate Datetime not null DEFAULT GETDATE());Note that I have a ServerName column. I have this table populated with the drives on all my SQL Servers. They’re all the same so I can query one table and see all the drives on all the servers. This is just for convenience. The drives rarely change and any time I need to add anything to the table, I add the rows to the tables on all servers so they’re all the same. Updates happen so rarely that I haven’t found the need to automate synchronization of the tables.

All I really need for now are rows for the drives on this server, so I’ll populate it while I’m here. You’ll likely need to adjust the drive letters for the drives on your server.

SELECT @@SERVERNAME, d.Letter, d.Description, GETDATE()

FROM (VALUES('C', 'System'),

('D', 'Data'),

('L', 'Log'),

('R', 'Backup')

) d (Letter, Description)

ORDER BY d.Letter;Collecting Drive Space Data

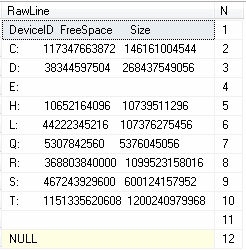

The next step is to create a procedure to collect the drive space data. I’m going to take advantage of a DOS command called WMIC. It’s one of those commands that does a bunch of different things, but the entire command is beyond the scope of this article. I’ll use the wmic logicaldisk get command to return the following output.

C:\>wmic logicaldisk get deviceid,freespace,size DeviceID FreeSpace Size C: 117406580736 146161004544 D: 38366912512 268437549056 E: H: 10652164096 10739511296 L: 44222345216 107376275456 Q: 5307842560 5376045056 R: 369259921408 1099523158016 S: 467243929600 600124157952 T: 1151335620608 1200240979968 C:\>

The first step in creating the procedure is to create the procedure and create a temp table to receive the output of the DOS command. I’m including N as an IDENTITY column here and the reason for it will be clear soon.

IF OBJECT_ID('dbo.TrackDriveSpace', 'p') IS NOT NULL DROP PROCEDURE dbo.TrackDriveSpace;

go

CREATE PROCEDURE dbo.TrackDriveSpace

AS

BEGIN

DECLARE @dtmNow Datetime = GETDATE();

-----------------------------------------------------------------------------

--initialization

-----------------------------------------------------------------------------

SET NOCOUNT ON;

--create a temp table to capture our incoming rows from the dos command

IF OBJECT_ID('tempdb.dbo.#incoming', 'u') IS NOT NULL

DROP TABLE #incoming; CREATE TABLE #incoming ( RawLine Varchar(255), N integer not null identity(1, 1));

Next, I’ll shell out to the DOS command and capture the output into the temp table.

-----------------------------------------------------------------------------

--shell out to dos and call wmic to get the disk space data needed

-----------------------------------------------------------------------------

INSERT INTO #incoming(rawline)

EXECUTE xp_cmdshell 'wmic logicaldisk get deviceid,freespace,size';The #incoming temp table now looks like this.

The temp table contains everything I need in one column. The structure might not be the perfect columnar format yet, but it isn’t impossible. Parsing out the columns consists of 3 CTEs and an INSERT statement.

- The first CTE (cteHeader) queries the header row.

See the N column? That’s the IDENTITY column I used to assign a row number when the DOS command populated the temp table. That’s the key to getting the header row.

- The second CTE (cteStart) looks at the header row and gets the character positions that start each “column” within the single column.

- The third CTE (cteDriveinfo) uses the column positions to extract the values in the “columns” and eliminate the unwanted rows. There’s a near-empty row for the empty DVD-ROM, an empty row at the end of the command and the normal NULL row when executing any DOS command directly into a table. Yes, there is a non-SARGable predicate here, but it’s necessary to eliminate the empty row. Also, I am going to scan the whole table whether it’s there or not because I need all the data. There aren’t any indexes that could have used anyway.

- The INSERT statement converts the strings to numbers and stores the data in the permanent table.

-----------------------------------------------------------------------------

--extract the data and write the rows to the permanent table

-----------------------------------------------------------------------------

WITH cteHeader AS (

--read our header row

SELECT RawLine

FROM #incoming

WHERE N = 1

),

cteStart AS (

--determine the starting positions of each column to extract

SELECT DriveStart = 1,

FreeStart = CHARINDEX('FreeSpace', h.RawLine),

TotalStart = CHARINDEX('Size', h.RawLine)

FROM cteHeader h

),

cteDriveInfo AS (

--isolate each "column" of data, allowing for the return at the end of the last column

SELECT Drive = SUBSTRING(i.RawLine, p.DriveStart, 1),

FreeBytes = LTRIM(RTRIM(SUBSTRING(i.RawLine, p.FreeStart, p.TotalStart - p.FreeStart))),

TotalBytes = RTRIM(LTRIM(RTRIM(REPLACE(SUBSTRING(i.Rawline, p.TotalStart, 99), CHAR(13), ''))))

FROM #incoming i

CROSS APPLY cteStart p

WHERE i.N > 1

AND RawLine IS NOT NULL

AND NOT SUBSTRING(RawLine, 3, 99) = REPLICATE(SUBSTRING(RawLine, 3, 99), ' ')

)

INSERT INTO dbo.DriveSpace(Drive, Total, Free, EntryDate)

SELECT LOWER(Drive), CONVERT(Bigint, TotalBytes), CONVERT(Bigint, FreeBytes), @dtmNow

FROM cteDriveInfo

WHERE NOT TotalBytes = ''

ORDER BY Drive;

The only thing left to do is to clean up and terminate the procedure.

----------------------------------------------------------------------------- --termination ----------------------------------------------------------------------------- DROP TABLE #incoming; END; go

I could just iterate through the drives in the svr.ServerDrives table I created earlier, but that would require a loop. Not only would it be the less efficient approach, but it wouldn’t let me query the space history for other drives.

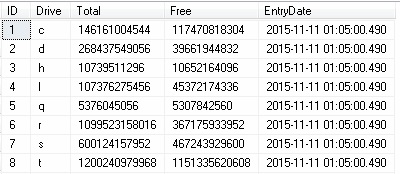

When the procedure is executed, it populates one row into the table for each drive. Here’s an example of what one day’s worth of data looks like.

Over time, this builds up a history of drive space data for all the drives. I have the procedure scheduled to run as a step in a daily job.

Querying the Data

Now that I’m building up history, what can I do with it? I’ll start by getting two different types of recent space data.

Recent Space Data

To get the most recent picture of each monitored drive, I can query it like this.

WITH cteDrives AS (

SELECT Drive, Total, Free, EntryDate, ROW_NUMBER() OVER(PARTITION BY Drive ORDER BY EntryDate DESC) rn

FROM dbo.DriveSpace

),

cteRecent AS (

SELECT Drive, Total, Free

FROM cteDrives

WHERE rn = 1

)

SELECT sd.DriveLetter, sd.Description, CONVERT(Numeric(18, 1), r.Total * 1.0 / POWER(1024, 3)) TotalGB,

CONVERT(Numeric(18, 1), r.Free * 1.0 / POWER(1024, 3)) FreeGB,

CONVERT(Numeric(18, 1), 100 - (r.Free * 100.0 / r.Total)) FreePercent

FROM cteRecent r

INNER JOIN svr.ServerDrives sd ON r.Drive = sd.DriveLetter

WHERE sd.ServerName = @@SERVERNAME

ORDER BY sd.DriveLetter;To get the most recent full run, I have to eliminate the svr.ServerDrives table from the query to include the data from all the drives.

WITH cteRecent AS (

SELECT TOP 1 EntryDate

FROM dbo.DriveSpace

ORDER BY EntryDate DESC

)

SELECT ds.Drive, CONVERT(Numeric(18, 1), ds.Total * 1.0 / POWER(1024, 3)) TotalGB,

CONVERT(Numeric(18, 1), ds.Free * 1.0 / POWER(1024, 3)) FreeGB,

CONVERT(Numeric(18, 1), 100 - (ds.Free * 100.0 / ds.Total)) FreePercent

FROM dbo.DriveSpace ds

CROSS APPLY cteRecent r

WHERE ds.EntryDate = r.EntryDate

ORDER BY ds.Drive;Time: How Much Do I Have Left?

The ultimate question to answer is how much time I have left before my drives run out of space. Running out of space is precisely what I want to avoid. This is where the fun part begins and I really get to use the data I’ve collected.

I first need to make some decisions about how I calculate the time I have left.

- I’ll assume that the average rate of growth remains constant for each drive over time. In other words, the growth over the past month represents the growth I can expect over the next month.

- Any increase in free space from one day to the next presents an interesting wrinkle.

- Should I count the time when the SAN administrator allocated another 200 GB? I don’t have a record of when SAN volume expansions were done.

- What about when I archived the 3 databases that were no longer required? I have a record of when I archived each database, but I didn’t track data and log space at the time of archival.

- Because I may or may not want to include space gains in the calculations, I’ll make it an optional parameter to define at run-time. I want the ability to eliminate it to get the actual data growth over time.

- I’ll use the average daily growth over a number of days to determine how many days remain before running out of space. Activity can vary on the server and I might want to see how sampling 90 days compares against a year’s worth of history, so I’ll make the number of days a run-time parameter.

- Since I have different drives for data, log and backup files, I’ll need to calculate a separate average daily growth for each type of drive.

Given the average daily rate of growth and the amount of free space, I can calculate how many days I have left until I’m out of space. Given the number of days, I’ll calculate the “date of death” for each drive.

I’ll start by defining my procedure and creating a couple of temporary tables. The @Days parameter will specify how many days of history I want to consider. The @IncludeNegativeGrowth parameter is to specify if I want to include negative growth (an increase in available space) or not. Because this could represent a SAN volume extension, I’ll default to excluding days where we gain space.

ALTER PROCEDURE svr.RecentDriveGrowth(@Days Integer,

@IncludeNegativeGrowth Bit = 0)

AS

BEGIN

--initialization

SET NOCOUNT ON;

SET ANSI_WARNINGS OFF;

--create a temp table to hold the daily free space

IF OBJECT_ID('tempdb.dbo.#DriveInfo', 'u') IS NOT NULL DROP TABLE #DriveInfo;

CREATE TABLE #DriveInfo (

ID Integer,

EntryDate Date,

CurrentData Numeric(18, 3),

CurrentLog Numeric(18, 3),

CurrentBackup Numeric(18, 3),

NextData Numeric(18, 3),

NextLog Numeric(18, 3),

NextBackup Numeric(18, 3),

DataGrowth Numeric(18, 3),

LogGrowth Numeric(18, 3),

BackupGrowth Numeric(18, 3));

--create a temp table to hold the dates each drive will fill up and die

IF OBJECT_ID('tempdb.dbo.#DatesOfDeath', 'u') IS NOT NULL DROP TABLE #DatesOfDeath;

CREATE TABLE #DatesOfDeath (

Drive Varchar(32),

DailyGrowth Numeric(18, 6),

DaysUntilEmpty Numeric(18, 1),

DateEmpty Date);

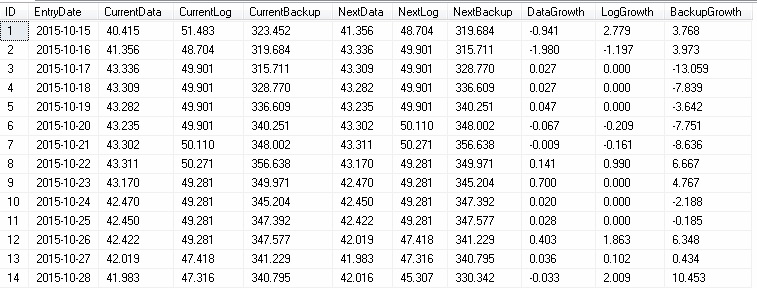

The #DriveInfo table is my working table. This is where I’ll build up a table of dates and space available, then fill in the next day’s space available, which will give me the growth per day. The #DatesOfDeath table is the one where I’ll store the report I build up for each drive.

The next step is to populate the #DriveInfo table. This query does a lot of work, which is divided into 3 CTEs and 1 INSERT statement.

- The first CTE (cteDrives) queries a list of drives to check on this server. This is the same table created and populated back at the beginning of this article. I’m using this table because I want to limit the analysis to the data, log and backup drives. I’m not going to look at the system, quorum and other drives at this point. I could adapt it later to include them, but they don’t have a lot of variability on my SQL Servers.

- The second CTE (cteDriveInfo) builds a list of drive space data. It joins in the history from the dbo.DriveSpace table, filtered to the recent @Days of history. It uses a crosstab query to convert the row-based data into columns for the free space on the data, log and backup drives. Even through the space data is captured in bytes, everything is converted to GB to make it easier to read.

- The last CTE (cteDailyGrowth) queries cteDriveInfo and joins it to itself offset by 1 row. This returns a result set with the free space on the current day and the next day. This set-based approach is lightning-fast and gives me the data I need from the next row without using LEAD, which is available in SQL 2012 and later.

- The INSERT statement populates the date, current and next day’s free space and the growth into my working table.

--build the base data set for the drives on this server

WITH cteDrives AS (

--start with the list of drives to check for this server

SELECT DriveLetter, Description

FROM svr.ServerDrives

WHERE ServerName = @@SERVERNAME

),

cteDriveInfo AS (

--query a list of dates going back @Days days and use a crosstab to pivot the rows into columns for each date.

--this gives a table with date and free space on the data, log and backup drives, along with an ascending

--integer for each row.

SELECT ROW_NUMBER() OVER(ORDER BY ds.EntryDate) RowNum, ds.EntryDate,

DataFree = CONVERT(Numeric(18, 3), MAX(CASE WHEN d.Description = 'Data' THEN ds.Free * 1.0 / POWER(1024, 3) END)),

LogFree = CONVERT(Numeric(18, 3), MAX(CASE WHEN d.Description = 'Logs' THEN ds.Free * 1.0 / POWER(1024, 3) END)),

BackupFree = CONVERT(Numeric(18, 3), MAX(CASE WHEN d.Description = 'Backup' THEN ds.Free * 1.0 / POWER(1024, 3) END))

FROM cteDrives d

INNER JOIN dbo.DriveSpace ds ON ds.Drive = d.DriveLetter

WHERE EntryDate >= DATEADD(day, 0, DATEDIFF(day, 0, GETDATE()) - @Days)

GROUP BY ds.EntryDate

),

cteDailyGrowth AS (

--using the row number, join the drive free table to itself, offset by one day. this gets the free

-- space for each drive on that day and also on the next day.

SELECT di1.RowNum, di1.EntryDate, di1.DataFree CurrentData, di1.LogFree CurrentLog, di1.BackupFree CurrentBackup,

di2.DataFree NextData, di2.LogFree NextLog, di2.BackupFree NextBackup

FROM cteDriveInfo di1

INNER JOIN cteDriveInfo di2 ON di2.RowNum = di1.RowNum + 1

)

--populate the temp table with the drive, date and free space for each drive on the date and the following date.

--the growth of each drive is the current minus the next.

INSERT INTO #DriveInfo(ID, EntryDate, CurrentData, CurrentLog, CurrentBackup,

NextData, NextLog, NextBackup, DataGrowth, LogGrowth, BackupGrowth)

SELECT RowNum, CAST(EntryDate AS Date), CurrentData, CurrentLog, CurrentBackup, NextData, NextLog, NextBackup,

DataGrowth = CurrentData - NextData,

LogGrowth = CurrentLog - NextLog,

BackupGrowth = CurrentBackup - NextBackup

FROM cteDailyGrowth

ORDER BY EntryDate;When this step is done, our #DriveInfo table will look like this.

I now have each drive’s free space data for each day and the next day, along with each drive’s growth for the day.

The next step is to use the average growth per day to determine how many days I have left until the drive runs out of space. The free space, rate of growth and time left is going to vary by drive, so I’ll start by calculating the numbers for the Data drive first. There are 3 steps involved in this calculation, divided into 2 CTEs and 1 INSERT statement.

- The first CTE (cteDataGrowth) calculates the average daily growth for the data drive. It includes negative growth (reclaimed space or SAN volume extensions) according to the @IncludeNegativeGrowth parameter I passed to the procedure. This result is a single number that is the average daily growth.

- The second CTE (cteRecentFree) returns the most recent free space on the data drive and the date on which the row was created.

- The INSERT statement writes a single row to my report table - #DatesOfDeath. The number of days until empty is the current free space divided the average daily growth. Given the number of days, adding it to the most recent date is simple.

WITH cteDataGrowth AS (

--calculate the average growth per day, including negative growth only when specified in @IncludeNegativeGrowth

SELECT AVG(DataGrowth) Daily

FROM #DriveInfo

WHERE ((@IncludeNegativeGrowth = 0 AND DataGrowth >= 0)

OR @IncludeNegativeGrowth = 1)

),

cteRecentFree AS (

--read the most recent drive space info

SELECT TOP 1 CurrentData, EntryDate

FROM #DriveInfo

ORDER BY ID DESC

)

--record the number of days and date of death for the drive

INSERT INTO #DatesOfDeath(Drive, DailyGrowth, DaysUntilEmpty, DateEmpty)

SELECT 'Data', CONVERT(Numeric(18, 6), dg.Daily),

DaysUntilDataEmpty = CONVERT(Numeric(18, 1), rf.CurrentData / dg.Daily),

DateDataEmpty = DATEADD(day, CONVERT(Integer, rf.CurrentData / dg.Daily), rf.EntryDate)

FROM cteRecentFree rf

CROSS APPLY cteDataGrowth dg;The next step is to do the same thing for the Log and Backup columns. The code is the same as for the Data columns, except it uses the appropriate columns to build the same data for the Log and Backup drives, which is then populated in the #DatesOfDeath table. I won’t list all the code here, but it is included in the script attached to this article.

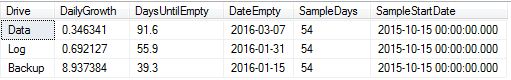

The last step is to select the data for the reports. The first report is the data in the #DatesOfDeath table. It contains 1 row for each drive and returns the drive, average daily growth, number of days and the date when the drive will be empty. I’ll also include the start date of the same and the number of days used in the sample.

--select the report of dates of death

SELECT Drive, DailyGrowth, DaysUntilEmpty,

DateEmpty = CASE WHEN DaysUntilEmpty < 0 THEN NULL ELSE DateEmpty END,

@Days SampleDays, DATEADD(day, 0, DATEDIFF(day, 0, GETDATE()) - @Days) SampleStartDate

FROM #DatesOfDeath

ORDER BY CASE Drive WHEN 'Data' THEN 1

WHEN 'Log' THEN 2

WHEN 'Backup' THEN 3

ELSE 4

END;The report to show the details comes from the #DriveInfo table. I include it in my normal output because I’m interested in seeing the data that went into the report. I can look for outliers and research if anything unusual happened on that day or if it was a normal process.

--select the drive space history used in the report

SELECT ID Day, EntryDate, CurrentData, CurrentLog, NextData, NextLog, DataGrowth, LogGrowth

FROM #DriveInfo

ORDER BY ID;The only thing left to do is to drop the temporary tables.

--termination DROP TABLE #DatesOfDeath; DROP TABLE #DriveInfo;

Now the report can be run using a simple T-SQL statement like this at any time.

execute dba.svr.RecentDriveGrowth 54, 0;

The report is also very simple, but the projection it gives is priceless.

The time left will vary, depending on how much history you consider. If, for example, you do a one-time load that's really intense, you'll have a lot of log space consumed on a particular day, which can skew the average. In my environment, I find it best to look at the last 30 and 90 days and use my best judgement from there.

Conclusion

This article has shown a simple way to avoid one of the most embarrassing situations a DBA could encounter – SQL Server shutting itself down because a drive ran out of space. Regular monitoring of the most recent free space collected will help to avoid the situation from ever occurring.

Aside from simple monitoring, the procedure to look at the average daily growth per drive enables the DBA to see when the drives will need more space and take action before it becomes an emergency. Think about it – giving the network team a 30-day lead time that you’re going to need more SAN space is a lot better than telling them you need more space in the next 30 minutes or production will be down. That’s the real power of the relatively simple process of capturing drive space data every day.

Expansion and Adaptability

Using the techniques presented here, you can capture nearly anything from the system you want to monitor. The WMIC command alone is very powerful and has a great many first-level commands.

Personally, I don’t like to have SQL Server monitoring consolidated on a single server, but you could do so if you wanted to. The WMIC command can accept a delimited list of servers, so you could process and store it all on a single SQL Server.

If you want an email with the drive space data, you could use the techniques in this article to collect the data. You can then create a nicely-formatted HTML email and send it to yourself.

Like so many things, where you go from here can be answered in only one way: It depends.