Introduction

Whether you want a rock solid Disaster Recovery (DR) technology or are trying to migrate to a new server with minimal downtime, Log Shipping has a lot to offer. One of the primary strengths of the DR solution used in a previous article is the ability to dynamically read the environment and customize the failover process just for you. The solution knows which databases are Log Shipped, when to run and disable applicable jobs, what log backup files need to be applied, etc. When utilizing Microsoft's Log Shipping setup, this type of "intelligence" can be helpful for a couple of reasons. First, the awareness makes failing over a large number of databases at one time much easier. Second, applying a high number of transaction log backups is a part of the process and is handled for you. This means that whether you have multiple databases, multiple transaction log backups, or both, this solution has you covered.

Consider this solution if you want to:

- Perform a DR test and demonstrate you are prepared for a true disaster.

- Fail over to the Secondary as part of a true DR situation.

- Bring up the Secondary for service because the Primary is down and won't come back up.

- Migrate to a new server with minimal downtime.

Design Considerations

In order to work towards a flexible and dynamic solution, certain design considerations were taken into account with this solution. The first was to support only Microsoft's Log Shipping setup and not custom solutions. Microsoft's implementation is tried and true and one can't begin to guess at how someone else might implement such functionality.

The second consideration was to make the DR failover process more standard and repeatable for Log Shipping. This stability should be available regardless of the number of databases involved and how many transaction log backups need to be applied.

The third item was to develop a process that was low-tech and required only a T-SQL script and SQL Server Management Studio to execute. This helps give us the confidence that when the need arises we'll be able to successfully fail over.

Getting Started

Let's quickly look at some of the configuration needed before running the master script, Streamline_LS_Failovers.sql. If you plan on failing over from the Primary, there needs to be a Linked Server on the Secondary that points back to the Primary. This allows for the reading of important information, such as where database log backups are being placed, etc.

Open the master script in SQL Management Studio as a sysadmin that has access to the location of the database backup files. Configure your query window by selecting Query -> Results To Grid up in the menu bar to help format the output properly. Then connect to the Secondary server by selecting Query -> Connection -> Connect. Please review the script contents for a full list of prerequisites before proceeding.

Next, a couple of setup options need to be configured in order to create the customized failover scripts. The first option gives you control over whether you want to fail over from the Primary server or not.

SET @FailoverFromPrimary = '-' -- Valid entries are 'Y' or 'N'. Please replace the dash with one of these values

If you choose to set the variable to 'Y', a final transaction log backup will be performed on each Log Shipped database as part of the failover process. If not, no tail log backup will be attempted and the Secondary will simply apply all applicable transaction log backups before coming online. For the purpose of this article, we'll assume 'Y' is specified and the Primary is available.

The second option allows for you to choose where you want the failover scripts to be created.

SET @ScriptsLocation = '-' -- Update dash to desired network location for generated scripts

This should be a safe and accessible place on your network. Make sure the account that SQL Server is running as has write access to the place you've designated.

Generating the SQL Scripts

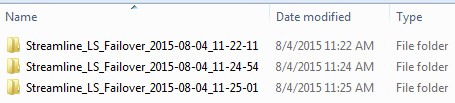

Running the master script does not actually try to fail over, but rather generates the scripts needed to perform the failover when ready. For every time you run the master script, a new folder with the latest scripts is created in the location you designated. After running the master script a few times, the network location I specified contains the following folders:

Scripts 01.sql through 10.sql are contained in each folder above and are to be run in order. The first five scripts handle failing over to the Secondary and the second five handle failing back to the Primary. Providing a step-by-step process to follow is definitely welcome when you find yourself in a stressful DR situation. Feel free to run the master script against your Secondary at any point. While the recommendation is to generate a new set of scripts just prior to failing over, they can still be created at any time and reviewed for understanding.

When running the master script, the surrounding environment is investigated and a series of SQL commands are then constructed. Investigating the environment takes on a couple of different forms, the first of which is to make good use of the Log Shipping tables in MSDB. These tables contain valuable information and are the "brains" behind the operation. Important information, such as which server is the Primary in the Log Shipping setup and when the last transaction log backup was applied to each database, is available from the system tables.

Another means of investigation is to review the list of files in the appropriate log backup directories. If database DB1 is backing up to '\\Server\Share\DB1' then we need to find all log backups in that directory. We only look for files that contain the database name and the '.trn' extension somewhere in the name. By then comparing what we know from the system tables with what we actually found in the backup directories, we can learn a lot about the environment.

Once the investigation is complete, we explicitly create tables in the tempdb database and use them as a type of holding area. After running the master script the following tables exist:

These tables are populated with information used to perform the failover and failback. This includes each database's path for transaction log backups, the building blocks for the SQL scripts that will eventually be generated, and helpful configuration information. For example, the table "LS_SysJobNames" contains a listing of all jobs belonging to this Log Shipping setup. The list is later used to execute, disable, and eventually re-enable the appropriate jobs on each server. The tables above also provide a type of audit trail to examine, should issues be encountered when running the master script.

Reviewing the SQL Scripts

Now that we've generated the necessary scripts for failing over, let's briefly touch on each one and the primary action(s).

01.sql - To Be Run Against Primary Server

Executes the log backup jobs on the Primary to get the latest transactions and waits until they have completed. Once they're finished they are then disabled to prevent them from running automatically.

02.sql - To Be Run Against Secondary Server

Removes any restore delay that may have been configured for your primary databases and notes them for later. Some companies have their Secondary databases running a number of hours behind the Primary. This allows them to potentially recover from a data integrity issue if caught in time. After removing the delay, this script runs the Copy jobs and Restore jobs to get the latest transaction log backups applied.

03.sql - To Be Run Against Secondary Server

Determines the location of transaction log backups from the Primary. Using that, 03.sql remotely performs a final log backup of the Primary databases. All users are forcefully disconnected during this step and databases are left in the NORECOVERY state. The Copy jobs are executed once again to bring the final log backups across to the Secondary.

04.sql - To Be Run Against Secondary Server

Examines the last restored log file to determine the path to the rest of the log backups for each database. Searching through the folders generates a list of all files that contain '.trn' somewhere within their name. After comparing this to what log backups were captured in the system tables 04.sql generates the applicable Restore Log statements. These are executed, in order, to bring the Secondary databases in line with the Primary databases. Databases are still in NORECOVERY mode.

05.sql - To Be Run Against Secondary Server

Performs a Restore Log With RECOVERY command against each database and makes them available for all users.

NOTE: At this point in the process, all the databases on the Secondary are in sync with the ones on the Primary and have been made available for use. After configuring traffic to now go against the Secondary (via an update to a DNS entry, etc.), here are a couple of possibilities for next steps:

- If this is a true DR situation, or a database migration to a new server, proper database backups (full, differential, log) will need to be set up immediately. These will protect important data and ensure log files don't fill up due to a lack of log backups.

- If this is a DR test, perform whatever testing is necessary to be confident in your overall DR strategy. This may include having users enter data and run commonly used reports.

Assuming this was a DR test and we're ready to fail back, let's walk through each script and the primary action(s).

06.sql - To Be Run Against Secondary Server

Performs a log backup against each database to capture any transactions that took place after the failover. All users are forcefully disconnected during this step and databases are left in the NORECOVERY state. Databases are now ready for Log Shipping to engage again.

07.sql - To Be Run Against Secondary Server

Remotely restores the earlier log backups to each database on the Primary. Also recovers the databases, but leaves them in Single_User mode.

08.sql - To Be Run Against Primary Server

Sets all databases back to Multi_User mode so that they are accessible to all users.

09.sql - To Be Run Against Primary Server

Enables the Log Backup jobs so that Log Shipping backups will begin running normally again on the Primary.

10.sql - To Be Run Against Secondary Server

Enables the Log Restore jobs so that Log Shipping restores will begin running normally again on the Secondary. This script also sets any restore delays back to what they were before the failover process.

Executing the SQL Scripts

In SQL Management Studio, choose File -> Open -> File (or CTRL+O)

In the "Open File" dialog box, find the location you specified for the scripts 01.sql through 10.sql exist. Before selecting all the files and opening them, click the "Name" column header to reorder them to be 10.sql through 01.sql. You should see something similar to this:

Click the "Open" button and the scripts will open in SQL Management Studio. The benefit of reordering them first is that after they've been opened 01.sql has the focus and 02.sql through 10.sql are at the top running from left to right.

Now that we have the scripts open, let's begin the failover process. Click on the 01.sql tab at the top to make sure you're on the right script. Then, carefully verify you're connected to the correct server. In every script there is a section that looks something like this:

----------------------------------------------------------------------------------------- --YourServerName: EXECUTE this script against YourServerName -----------------------------------------------------------------------------------------

Right-click on the script, choose "Connection" and then "Change Connection." Ensure the proper "Server Name" is selected and then click "Connect." Perform this step at the beginning of every script to avoid having issues. Each script has short-circuit logic that compares your connection to the one listed in the script. If they don't match, the connection is terminated and you'll need to reopen the script in order to try again.

Once 01.sql has successfully completed, simply click on the 02.sql tab in SQL Management Studio, verify you're connected to the server specified, and click "Execute". Repeat this for 02.sql through 05.sql, using the drop-down arrow to the right of the tabs to select scripts that aren't shown on the screen. Follow any instructions included in each script and ensure they complete successfully before moving to the next one. After 05.sql you will be running on the Secondary and the following message is returned: "DATABASES ARE NOW AVAILABLE ON YourServerName". At this point, you're ready to begin using the server and should follow the NOTE mentioned earlier in this article. If the Secondary server is going to be treated as the new Production server from here on out, proper backups need be configured as soon as possible, etc.

If this was a DR test, or the Primary came back up and you want to fail back, click on 06.sql in SQL Management Studio. Verify you're connected to the server the script specifies and click "Execute" to begin failing back. Repeat this for 07.sql through 10.sql to fail back to the Primary and re-engage Log Shipping. Once this is complete you should check the history of the LS_Alert_YourServerName job on both the Primary and the Secondary to see if there are any errors.

Taking Action

Be sure to create any additional documentation around the use of this solution, if needed. Also, it's highly recommended that you regularly perform other steps necessary for supporting a successful failover:

- Script out your logins (either automate this via a scheduled job or execute manually as part of each DR test, depending on how frequently your logins change).

- Script out any applicable jobs and copy them to the Secondary server where they should be disabled unless actually needed as part of a true failover.

- Determine whether you have any SSIS packages that may need to be copied across as part of every DR test you perform.

The steps above help ensure your key systems are not just back up and running again, but that for your users it's business as usual. Now, consider testing out the solution for yourself. You'll get valuable experience and gain the confidence that your critical applications are well protected.

If you'd like to download the solution, visit the CodePlex site at: http://streamlinelogshippingfailovers.codeplex.com