An Introduction

What to do when your hands are tied?

When they discovered VMware, my employers became overly excited. For every new

application, they created a new Windows Server environment with (despite my

recommendations) it's own SQL Server. And so SQL Servers were popping up like

wild mushrooms in a field of cows with the runs (cows have 4 stomachs). Every

couple of weeks, another 2 or 3 SQL Servers would appear in the network list,

and I would have no idea where they came from.

Additionally, SQL Servers are a free for all where I currently work, with network

administrators and 2nd line support going on and fiddling with the Servers as

and when they please. There is no change control. Passwords can change without

me knowing, Servers are shut down at times during the night, out of hours, without

a thought as to what it might affect. SQL Servers are installed by non-DBAs

and no consideration is taken towards parameters outside the wizards default

setup.

This is not

a made up scenario, this is where I work. Now, I ask you from DBA to DBA, how

do you manage 47 business critical servers in an environment like this? Forty

seven SQL logs are 37 too many to check on a daily basis. As well as 47

x ? jobs (439 currently). Not only do failed jobs need to be checked, but their

run times too. If a network fairy shut down a server during the night,

SQL Server will not flag the job up as having failed. And then how do you manage

disk space for this many servers when space is added and removed without

any notice (due to another new toy, the SAN). And there are your test restores

and log space and index rebuilds.... It's a lot to do in an uncontrolled environment

for this many critical servers for one DBA. I can honestly say that during my

first few months, I was losing sleep. I don't mean to go on about my own work

experiences, but I'm going somewhere with this.

Despite the

severity of the situation and even though little has changed, things are now

very different, as through centralizing, I am one step ahead of everyone else

in the department. With these scripts I am on top of every aspect of monitoring

and regularly find myself informing colleagues in the department of errors and

warnings in their areas well before they are even aware of them.

What are these articles about?

These articles are about centralizing the monitoring of all your SQL Servers

(and Oracle ones too, if you have them) and packaging the results in an ASP.Net

web application. I had read many good articles on the topic of centralizing

and used some of the methods, but the majority of these returned results in

the query window or used SQL Mail to deliver the results to your inbox. With

a heap of different areas to check daily, this felt a little fiddley and messy.

I wanted a cleaner way of monitoring all the servers. I wanted reports. Daily

reports in a slick page, accessible from anywhere. And so I fired up Visual

Studio, and over many months developed a suite of web applications that gave

me a birds eye view of all the SQL Servers in the organization.

Through a series of articles,

I'll demonstrate how I did this and will cover everything from the SQL Server

side to the writing of the web application itself in ASP.Net/VB.Net. You don't

need to know anything about .Net, as the format provided will be a step-by-step

guide describing how to setup the Visual Studio environment, how to write the

server side .Net code to display the results and to how to deliver the web pages

across the network so that they are accessible from anywhere. To make this suitable

for as many people as possible, I will assume you know nothing, so forgive me

if I iterate over something that may seem obvious or something you already know.

As there is so much to cover, I'll actually do very little explaining on how

some things are the way they are in .Net, or the technology behind them, but

feel free to Google these as, needless to say, there are very good web sites

that do this. The aim here however is to get you up and running, so that you

can systematically build your own .Net solutions in your own time, that will

in-turn aid your day to day DBA duties. Whilst there are various ways of actually

extracting data from databases in .Net, we will mostly use T-SQL to do this,

purely so that you can combine your existing DBA SQL skills with web design

abd development. If there is an ounce of creativity in you, you’ll find

yourself reveling in doing so. It's great when it finally all comes together.

So enough faffing.

How does it work?

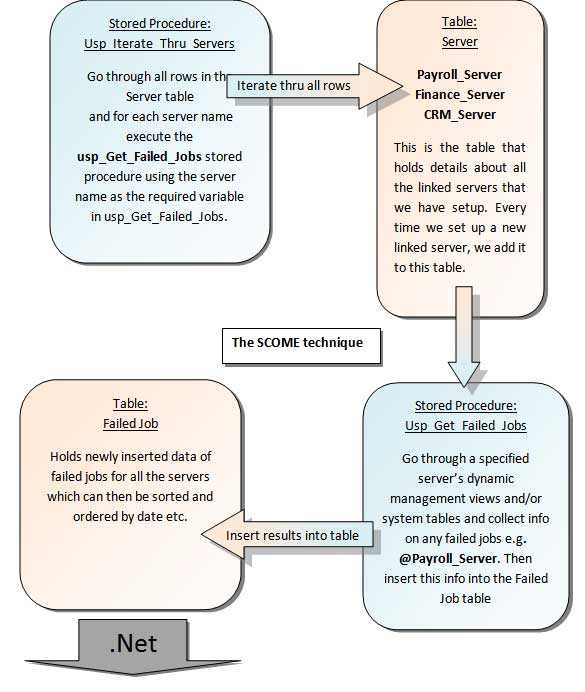

Here’s a run down on how the system works (I'll provide scripts

and step-by-step instructions in articles to come). Let's say that we want to

monitor for failed jobs on two SQL Servers called Payroll_Server and CRM_Server.

1. First

thing's first, you ask the boss for your own SQL Server "SQL_Admin"

to run all your monitoring and auditing scripts from. You want your own server

because later you'll be performing test restores on to it.

2. You chase

the request for your server.

3. Six months later you've

got your server. You now create your own database "DBA_Admin" that

will hold all tables that will store the data regarding the SQL Servers that

are being monitored.

4. You create two linked

server connections from SQL_Admin to Payroll_Server and CRM_Server.

5. You create a table

in DBA_Admin called "Linked_Server_Details" that holds details about

all servers to be monitored (i.e. all linked servers), in this instance Payroll_Server

and CRM_Server.

6. You create a table

in DBA_Admin called "Failed_Job". This table will hold data regarding

all failed jobs on all linked servers being monitored.

7. Now we setup the mechanism

that will collect the data from all the linked servers. It's what I call the

SCOME technique (Servers Coming Outa My Ears). We create a stored procedure

called usp_GetFailedJob. This stored procedure accepts an input parameter;

the name of any linked server i.e. usp_GetFailedJob 'Payroll_Server'. The

stored procedure then fetches data from Payroll_Server's Dynamic Management

Views and/or system tables and puts it into the Failed_Job.

8. But we want SQL_Admin

to run this procedure on all our linked servers so that the table Failed_Job

holds data about failed jobs on all servers being monitored. We have data

about Payroll_Server's failed jobs but not CRM_Server's failed jobs. So we

create another stored procedure call usp_iterate_thru_servers. This proc goes

through all servers in the Linked_Server_Details table one row at a time,

collects the name of the server being monitored and sticks it in front of

usp_GetFailedJob. Usp_GetFailedJob then does it's bit. We end up with a table

of data of all failed jobs for all linked servers.

9. We create a job on

SQL_Admin called Failed_Jobs and schedule this to run however often we want

it to. We give this job a new category called "Monitoring_Jobs"

(this is because we'll later write a web report that will tell us if any of

our monitoring jobs themselves have failed!).

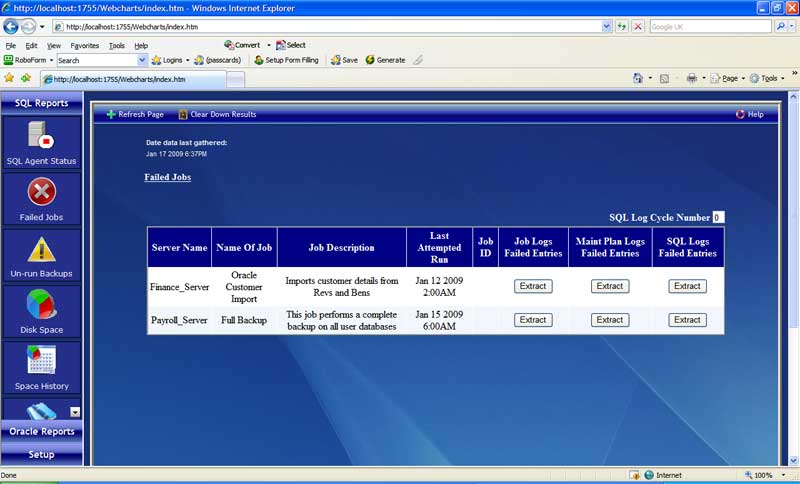

10. Now the fun part,

we fire up Visual Studio and create a colorful web application that collects

data from the table and displays it for us. Additionally, from this one page

we can provide links to other pages that display the errors logs to see why

it failed (saves us firing up SSMS), have a graphical representation of the

number of failed jobs in the last month, the date the data was last collected

etc.

11. We setup Internet

Information Services to display the application as a web site so that we can

access it from anywhere and then we publish the site.

12. We can now check anytime

during the day if any of the hundreds of jobs across all our servers have

failed.

That's it. The job is not

resource heavy and completes within seconds. Now the beauty of the SCOME technique,

is that this system can be applied to any type of DBA monitoring i.e. Disk Space,

Unrun backups, Log size etc. Just replace the job's specific DMVs with those

that hold data on backups or disk space or Log space. And replace the Failed_Job

table with a table such as Disk_Space. You can have a whole suite of reports.

In fact, if you can think of any that could be added to the suite, please share

your idea with us in the article forum. I am genuinely open to suggestions on

this and will be happy to write new articles based on these suggestions (as

well as use them at work!).

Here's a figure representing

the SCOME technique.

We use ASP.Net to read the results from the table and display them in a web page...

Now substitute

the failed jobs system tables and dynamic management views for any other,

and you can collect info about anything SQL Server, on all your linked servers.

In the next

article we'll setup the SQL Server side of things; the tables, linked servers,

security settings and any necessary MSDTC settings that needs changing.

See you then,

Drew